Elasticsearch

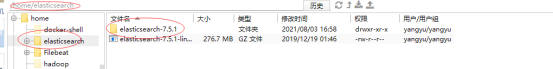

1. Download: wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.5.1-linux-x86_64.tar.gz

2. Create a regular account. Elasticsearch should run under a non-root account (it refuses to start as root).

2.1 Steps

[root@master ~]# useradd yangyu

[root@master ~]# passwd yangyu

Changing password for user yangyu.

3. Extract:

tar -zxvf elasticsearch-7.5.1-linux-x86_64.tar.gz

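4. Change the owner and group of the directory (the archive extracts to elasticsearch-7.5.1; the commands below assume it has been moved to /home/elasticsearch)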

[root@master ~]# chown -R yangyu:yangyu /home/elasticsearch

5. Run

$ cd /home/elasticsearch

Start Elasticsearch. The -d option runs it in the background as a daemon.

$ bin/elasticsearch -d

6. Problems that may occur when starting Elasticsearch with the regular account

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

[3]: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured

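Fix for [1]:

# Switch to the root user to make this change

vim /etc/security/limits.conf

# Append the following lines at the end of the file

*** hard nofile 65536

*** soft nofile 65536

Here *** stands for the user that starts Elasticsearch (yangyu in this walkthrough). Log in again as that user so the new limits take effect.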

Fix for [2]:

Add the following line at the end of /etc/sysctl.conf:

vm.max_map_count=262144

Run /sbin/sysctl -p to apply it immediately.
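Both limits can be checked before restarting Elasticsearch. The following is a minimal sketch and not part of the original setup: the helper names `meets_minimum` and `report` are made up for illustration, and it assumes a Linux host where `/proc/sys/vm/max_map_count` is readable. Run it as the user that starts Elasticsearch, because `ulimit -n` reports the limit of the current session.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; they are not part of Elasticsearch.

# Succeeds when $1 (current value) is at least $2 (required minimum).
# "unlimited" always passes; an empty or non-numeric value fails.
meets_minimum() {
  [ "$1" = "unlimited" ] && return 0
  [ "$1" -ge "$2" ] 2>/dev/null
}

# Prints one verdict line: $1 = setting name, $2 = current, $3 = required.
report() {
  if meets_minimum "$2" "$3"; then
    echo "OK: $1 is $2 (need at least $3)"
  else
    echo "TOO LOW: $1 is $2 (need at least $3)"
  fi
}

# Thresholds are the ones quoted in bootstrap errors [1] and [2].
report "max file descriptors" "$(ulimit -n)" 65535
report "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)" 262144
```

If either line reports TOO LOW after the changes, the usual causes are a session that was opened before limits.conf was edited, or sysctl -p not having been run.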

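Fix for [3]:

Edit elasticsearch.yml and find the discovery section. Change

cluster.initial_master_nodes: ["node-1","node-2"]

to

cluster.initial_master_nodes: ["node-1"]

The name listed here must match this node's node.name, so make sure node.name: node-1 is set (uncommented) in the same file.

7. Switch to the regular user with su and start Elasticsearch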

$ bin/elasticsearch -d

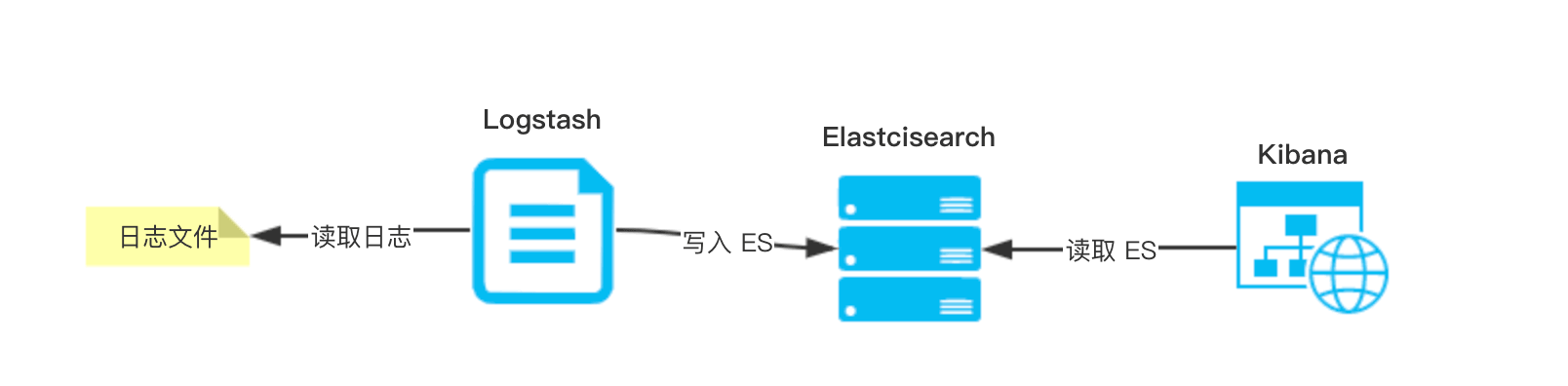
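Because -d returns immediately, it helps to confirm that the node actually came up. The sketch below polls the HTTP port until it answers. It assumes the default address http://localhost:9200 (the same address used in the Kibana configuration later in this document) and that curl is installed; `wait_for_es` is a hypothetical helper name.

```shell
#!/bin/sh
# wait_for_es is a hypothetical helper; it only needs curl.
# $1 = base URL, $2 = number of attempts, $3 = seconds between attempts.
wait_for_es() {
  url="${1:-http://localhost:9200}"
  tries="${2:-30}"
  pause="${3:-2}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # --fail turns HTTP error responses into a non-zero exit code.
    if curl --silent --fail --max-time 5 "$url" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep "$pause"
  done
  return 1
}
```

A possible call is `wait_for_es http://localhost:9200 30 2 && curl http://localhost:9200`, which prints the node's JSON banner once it responds. If the function returns non-zero, the files under the logs directory usually show which bootstrap check failed.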

--------------------------------------------------------------------------------------------------------------------------------

Logstash

unzip logstash-7.5.1.zip

cd logstash-7.5.1

- Start under the root account

Use the sample configuration file

Run nohup bin/logstash -f config/logstash-sample.conf & to start the Logstash service in the background.
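For orientation, the sample pipeline shipped with Logstash 7.x listens for Beats connections on port 5044 and forwards events to Elasticsearch. The sketch below writes an equivalent minimal pipeline to a file of your choosing. It is reconstructed from memory rather than copied from this installation, so compare it with your own config/logstash-sample.conf; the function name `write_pipeline` and the target path are illustrative.

```shell
#!/bin/sh
# write_pipeline is a hypothetical helper: $1 = path of the file to create.
# The pipeline body is an assumption modelled on the 7.x sample config.
write_pipeline() {
  cat > "$1" <<'EOF'
input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
  }
}
EOF
}
```

After `write_pipeline /tmp/beats-to-es.conf`, running `bin/logstash -f /tmp/beats-to-es.conf --config.test_and_exit` checks the syntax without starting the service. With an index setting like this one, events sent by Filebeat 7.5.1 would land in daily indices named filebeat-7.5.1-<date>.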

--------------------------------------------------------------------------------------------------------------------------------

Beats

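Beats is a family of data shippers covering many kinds of data. It includes:

Filebeat: lightweight shipper for logs.

Metricbeat: lightweight shipper for metrics.

Packetbeat: lightweight shipper for network data.

Winlogbeat: lightweight shipper for Windows event logs.

Auditbeat: lightweight shipper for audit data.

Heartbeat: lightweight shipper for uptime monitoring.

Functionbeat: serverless shipper for cloud data.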

Log collection demo

- Download

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.5.1-linux-x86_64.tar.gz

- Extract

$ tar -zxvf filebeat-7.5.1-linux-x86_64.tar.gz

$ cd filebeat-7.5.1-linux-x86_64

- Configure the log path to collect

Edit filebeat.yml to define the inputs and the output. The output used here is Logstash on port 5044.

#=========================== Filebeat inputs

filebeat.inputs:

- type: log

  # Change to true to enable this input configuration.

  enabled: true

  # Paths that should be crawled and fetched. Glob based paths.

  paths:

    # - /var/log/*.log

    #- c:\programdata\elasticsearch\logs\*

    - /home/logs/spring.log # the Spring Boot application log we want to read

#-------------------------- Elasticsearch output ----------------------------

#output.elasticsearch:

  # Array of hosts to connect to.

  # hosts: ["localhost:9200"]

#----------------------------- Logstash output --------------------------------

output.logstash:

  # The Logstash hosts

  hosts: ["localhost:5044"]
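The notes do not show how Filebeat is started once filebeat.yml is edited. A minimal sketch follows. It assumes the current directory is the unpacked filebeat-7.5.1-linux-x86_64 folder and that Logstash is already listening on localhost:5044; `check_filebeat`, `start_filebeat`, and the filebeat.out file name are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers; run them from inside filebeat-7.5.1-linux-x86_64.

# Validates filebeat.yml, then tries to connect to the configured output.
check_filebeat() {
  ./filebeat test config -c filebeat.yml && ./filebeat test output -c filebeat.yml
}

# Starts Filebeat in the background. -e makes Filebeat log to stderr,
# which is redirected to filebeat.out here (an assumed file name).
start_filebeat() {
  nohup ./filebeat -e -c filebeat.yml > filebeat.out 2>&1 &
}
```

A typical sequence would be `check_filebeat && start_filebeat`. If the output test fails, Logstash is probably not running yet or is not reachable on port 5044.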

Test case: spring.log

2021-08-04 11:08:21.388 INFO 11220 --- [ main] org.apache.catalina.core.StandardEngine : Starting Servlet engine: [Apache Tomcat/9.0.46]

2021-08-04 11:08:21.663 INFO 11220 --- [ main] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring embedded WebApplicationContext

2021-08-04 11:08:21.663 INFO 11220 --- [ main] w.s.c.ServletWebServerApplicationContext : Root WebApplicationContext: initialization completed in 1656 ms

2021-08-04 11:08:22.034 INFO 11220 --- [ main] org.apache.spark.SparkContext : Running Spark version 2.2.0

2021-08-04 11:08:22.353 INFO 11220 --- [ main] org.apache.spark.SparkContext : Submitted application: sparkTest

2021-08-04 11:08:22.370 INFO 11220 --- [ main] org.apache.spark.SecurityManager : Changing view acls to: GR-35

2021-08-04 11:08:22.370 INFO 11220 --- [ main] org.apache.spark.SecurityManager : Changing modify acls to: GR-35

2021-08-04 11:08:22.371 INFO 11220 --- [ main] org.apache.spark.SecurityManager : Changing view acls groups to:

2021-08-04 11:08:22.371 INFO 11220 --- [ main] org.apache.spark.SecurityManager : Changing modify acls groups to:

2021-08-04 11:08:22.372 INFO 11220 --- [ main] org.apache.spark.SecurityManager : SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(GR-35); groups with view permissions: Set(); users with modify permissions: Set(GR-35); groups with modify permissions: Set()

2021-08-04 11:08:23.569 INFO 11220 --- [ main] org.apache.spark.util.Utils : Successfully started service 'sparkDriver' on port 50792.

2021-08-04 11:08:23.584 INFO 11220 --- [ main] org.apache.spark.SparkEnv : Registering MapOutputTracker

2021-08-04 11:08:23.599 INFO 11220 --- [ main] org.apache.spark.SparkEnv : Registering BlockManagerMaster

2021-08-04 11:08:23.602 INFO 11220 --- [ main] o.a.s.s.BlockManagerMasterEndpoint : Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2021-08-04 11:08:23.602 INFO 11220 --- [ main] o.a.s.s.BlockManagerMasterEndpoint : BlockManagerMasterEndpoint up

2021-08-04 11:08:23.608 INFO 11220 --- [ main] o.apache.spark.storage.DiskBlockManager : Created local directory at C:\Users\GR-35\AppData\Local\Temp\blockmgr-f7ff5fd7-21ad-46b2-9a95-880d9c92c4dc

2021-08-04 11:08:23.634 INFO 11220 --- [ main] o.a.spark.storage.memory.MemoryStore : MemoryStore started with capacity 891.0 MB

2021-08-04 11:08:23.671 INFO 11220 --- [ main] org.apache.spark.SparkEnv : Registering OutputCommitCoordinator

2021-08-04 11:08:23.723 INFO 11220 --- [ main] org.spark_project.jetty.util.log : Logging initialized @5971ms

2021-08-04 11:08:23.771 INFO 11220 --- [ main] org.spark_project.jetty.server.Server : jetty-9.3.z-SNAPSHOT

2021-08-04 11:08:23.781 INFO 11220 --- [ main] org.spark_project.jetty.server.Server : Started @6029ms

2021-08-04 11:08:23.798 INFO 11220 --- [ main] o.s.jetty.server.AbstractConnector : Started ServerConnector@33634f04{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2021-08-04 11:08:23.798 INFO 11220 --- [ main] org.apache.spark.util.Utils : Successfully started service 'SparkUI' on port 4040.

2021-08-04 11:08:23.810 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@737d100a{/jobs,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.811 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@3c98781a{/jobs/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.811 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@4601203a{/jobs/job,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.812 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@80bfa9d{/jobs/job/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.812 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@4b039c6d{/stages,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.812 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@507d64aa{/stages/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.812 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@60b34931{/stages/stage,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.813 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@1e0a864d{/stages/stage/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.813 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@3e67f5f2{/stages/pool,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.814 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@4527f70a{/stages/pool/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.814 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@7132a9dc{/storage,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.814 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@2da66a44{/storage/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.815 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@61bfc9bf{/storage/rdd,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.816 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@329bad59{/storage/rdd/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.818 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@178f268a{/environment,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.819 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@5c723f2d{/environment/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.820 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@2d7a9786{/executors,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.820 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@366d8b97{/executors/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.820 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@7654f833{/executors/threadDump,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.821 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@d7109be{/executors/threadDump/json,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.827 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@f4a3a8d{/static,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.828 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@1f1ff879{/,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.829 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@24f870ee{/api,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.829 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@1f03fba0{/jobs/job/kill,null,AVAILABLE,@Spark}

2021-08-04 11:08:23.830 INFO 11220 --- [ main] o.s.jetty.server.handler.ContextHandler : Started o.s.j.s.ServletContextHandler@3bbf6abe{/stages/stage/kill,null,AVAILABLE,@Spark}--------------------------------------------------------------------------------------------------------------------------------?

?Kibana?

1. Download

curl -O https://artifacts.elastic.co/downloads/kibana/kibana-7.5.1-linux-x86_64.tar.gz

2. Extract

tar -zxvf kibana-7.5.1-linux-x86_64.tar.gz

cd kibana-7.5.1-linux-x86_64

3. Configure (in config/kibana.yml)

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.hosts: ["http://localhost:9200"]

kibana.index: ".kibana"

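4. Start Kibana as the same user as Elasticsearch

Change the ownership of the files:

chown -R yangyu:yangyu /home/Kibana

Run nohup bin/kibana & to start the Kibana service in the background.

Check the nohup.out log to see whether startup succeeded.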

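5. Before using Kibana, start Elasticsearch, Logstash, and Filebeat,

and make sure log entries have been written to the configured path /home/logs/spring.log.

Filebeat picks up the log entries automatically, Logstash transforms them, and they are stored in Elasticsearch. Only then can they be displayed in Kibana.

The first step in Kibana is to search for filebeat-7.5.1-*, which only returns a match once step 5 has been completed.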
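Whether the index exists can also be checked from the command line before opening Kibana. This sketch uses the Elasticsearch _cat/indices API and assumes the node is reachable at http://localhost:9200 and that curl is installed; `list_indices` is a hypothetical helper name.

```shell
#!/bin/sh
# list_indices is a hypothetical helper around the _cat/indices API.
# $1 = Elasticsearch base URL, $2 = index name pattern.
list_indices() {
  curl --silent --show-error --fail --max-time 5 \
    "${1:-http://localhost:9200}/_cat/indices/${2:-filebeat-*}?v"
}
```

Calling `list_indices http://localhost:9200 'filebeat-*'` prints one row per matching index. If the output contains only the header row, no events have reached Elasticsearch yet, so check that Filebeat and Logstash are running and that /home/logs/spring.log has content.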

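This completes the installation of Elasticsearch, Logstash, and Filebeat and the walkthrough of the data flow. The next chapter covers generating logs with the Spring Boot Logback component and collecting them with Filebeat.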