
1. Flume Quick Start

1. What is Flume

Flume is a highly available, highly reliable, distributed system for collecting, aggregating, and moving large volumes of log data.

![image-20210802154303040](https://img-blog.csdnimg.cn/8dcfdb95135b4d1d9b9218f39bd12c4f.png)

Flume has three core components: the source reads data from the data source; the channel stores data temporarily (the source puts what it reads into the channel); the sink reads data from the channel and finally writes it out to the configured destination.

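2. Advanced Flume usage scenarios

Note that multiple Flume agents can be chained together: the sink of the upstream agent simply writes its data to the source of the next agent.

3. The three core components of Flume

- Source: the data source

- Channel: a pipe that stores data temporarily

- Sink: the destination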

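3.1 Source

Flume has built-in support for many kinds of data sources: file-based, directory-based, TCP/UDP port-based, HTTP, Kafka, and more. If none of these fits, custom sources are also supported. Here are a few commonly used ones:

- Exec Source: monitors a file and picks up newly appended content in real time, similar to tail -f on Linux.

  Note the difference between tail -F and tail -f:

  - tail -F is equivalent to --follow=name --retry. It tracks the file by name and keeps retrying, so if the file is deleted or renamed and a new file with the same name is created, tracking continues.

  - tail -f is equivalent to --follow=descriptor. It tracks the file descriptor, so tracking stops once the file is renamed or deleted.

  In practice, log data is usually written through log4j, which uses a fixed file name and renames the file on a daily schedule. Suppose log4j writes to access.log: at midnight it renames the file by appending yesterday's date to access, then creates a new access.log for the current day's logs. To keep monitoring new data in access.log across these rotations, use tail -F.

- NetCat TCP/UDP Source: collects data from a given TCP or UDP port, reading every line that arrives on the port

- **Spooling Directory Source:** collects new files that appear in a directory

- **Kafka Source:** collects data from a Kafka message queue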
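As a rough illustration of the last two kinds of source, the fragment below sketches a NetCat UDP source and a Kafka source feeding one channel. The component names (r1, r2, c1), port 6666, the broker address bigdata01:9092, the topic access_log, and the consumer group flume are assumptions made up for this sketch. The property names are written as I recall them from the Flume 1.9 User Guide and should be checked against it before use.

```properties
# Hypothetical agent a1 with two sources feeding one memory channel
a1.sources = r1 r2
a1.channels = c1
a1.channels.c1.type = memory

# NetCat UDP source: each line received on the port becomes one event
a1.sources.r1.type = netcatudp
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 6666
a1.sources.r1.channels = c1

# Kafka source: consumes messages from the listed topic(s)
# (broker address, topic, and group id are placeholders)
a1.sources.r2.type = org.apache.flume.source.kafka.KafkaSource
a1.sources.r2.kafka.bootstrap.servers = bigdata01:9092
a1.sources.r2.kafka.topics = access_log
a1.sources.r2.kafka.consumer.group.id = flume
a1.sources.r2.channels = c1
```

A sink still has to be attached to c1 before this fragment forms a complete agent.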

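3.2 Channel

A Channel receives the data emitted by the Source; think of it as a pipe that stores data temporarily. There are many channel types: memory, file, memory plus file, JDBC, and so on.

Let's look at each in turn:

- Memory Channel: stores data in memory. The advantage is speed, because no disk I/O is involved. There are two drawbacks: (1) data may be lost, because if the Flume agent dies, whatever is in the channel is gone; (2) memory is limited, so the channel can run out of space.

- File Channel: stores data in files. The advantage is that data is not lost. The drawback is that it is slower than memory, though not as slow as one might expect, so it is also a commonly used channel.

- Spillable Memory Channel: stores data in both memory and files. Data goes into memory first and is flushed to files once the in-memory data reaches a threshold. Advantage: it solves the problem of running out of memory. Drawback: there is still a risk of data loss.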
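To make the memory-plus-file trade-off concrete, here is a minimal sketch of a Spillable Memory Channel. The capacities and the directories under data/spill are illustrative assumptions, not values from this article, and the property names follow the Flume User Guide as best I recall. If I remember correctly, the guide also labels this channel experimental, so verify that before relying on it.

```properties
# Hypothetical channel c1 on agent a1; sizes and paths are placeholders
a1.channels = c1
a1.channels.c1.type = SPILLABLEMEMORY
# Maximum number of events kept in the in-memory queue
a1.channels.c1.memoryCapacity = 10000
# Maximum number of events allowed to overflow to disk
a1.channels.c1.overflowCapacity = 1000000
# The disk overflow needs the same kind of directories as a file channel
a1.channels.c1.checkpointDir = /data/soft/apache-flume-1.9.0-bin/data/spill/checkpoint
a1.channels.c1.dataDirs = /data/soft/apache-flume-1.9.0-bin/data/spill/data
```

Events still held in the in-memory queue are lost if the agent dies, which is the remaining risk mentioned above.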

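3.3 Sink

A Sink reads data from the Channel and stores it in the configured destination.

Sinks come in many forms: printing to the console, HDFS, Kafka, and so on.

Note: data in the Channel is deleted only after it has reached the destination. If the Sink fails to write to the destination, the write is retried automatically and no data is lost; this is guaranteed by a transaction.

Commonly used sinks:

- Logger Sink: handles the data as log output, which can be printed to the console or written to a file; mainly used for testing

- HDFS Sink: transfers data to HDFS; this is a common choice, mainly for offline (batch) computation

- Kafka Sink: sends data to a Kafka message queue; this is also common, mainly for real-time computation, where data is not written to disk but transmitted in real time and processed directly by a real-time computing framework.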
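For the real-time path, a Kafka Sink can be sketched as below. The broker address bigdata01:9092, the topic access_log, and the channel name c1 are placeholders invented for this example. The property names are the ones I recall from the Flume 1.9 User Guide and should be confirmed there.

```properties
# Hypothetical sink k1 on agent a1, reading from channel c1
a1.sinks = k1
a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
# Kafka brokers to connect to (placeholder address)
a1.sinks.k1.kafka.bootstrap.servers = bigdata01:9092
# Topic that events are published to (placeholder name)
a1.sinks.k1.kafka.topic = access_log
# Number of events sent to Kafka in one batch
a1.sinks.k1.flumeBatchSize = 100
# Broker acknowledgements required before a write counts as successful
a1.sinks.k1.kafka.producer.acks = 1
a1.sinks.k1.channel = c1
```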

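4. Installing and deploying Flume

Download page: http://flume.apache.org/download.html

- Extract the archive

[root@bigdata04 soft]# tar -zxvf apache-flume-1.9.0-bin.tar.gz

- Set up Flume's environment configuration file: in Flume's conf directory, rename flume-env.sh.template by dropping the .template suffix

[root@bigdata04 conf]# mv flume-env.sh.template flume-env.sh

- No process needs to be started at this point; Flume is started only after a collection task has been configured.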

2. Getting started with Flume

1. Official documentation

2. An example agent configuration

```properties
# example.conf: A single-node Flume configuration
# a1 is the name of the agent
# Name the three components of this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = logger

# Describe the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

3. Detailed configuration of the three components

4. Starting the agent

[root@bigdata01 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/example.conf -Dflume.root.logger=INFO,console

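agent: start a Flume agent

--name: the name of the agent

--conf: the directory that holds Flume's configuration files

--conf-file: the configuration file for this agent (the file containing the source, channel, and sink settings)

-D: passes extra parameters at startup; here it sets Flume's log level and log output destination

- Install telnet and connect to port 44444 to send data to Flume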

[root@bigdata01 ~]# yum install -y telnet

[root@bigdata01 ~]# telnet localhost 44444

- If several agents are running, the following commands show them in detail

[root@bigdata01 ~]# jps -m

[root@bigdata01 ~]# jps -ml

[root@bigdata01 ~]# ps -ef|grep flume

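5. Case study: collecting file contents and uploading them to HDFS

Requirement: collect the contents of the files already present in a directory and store them in HDFS

Analysis: the source must be directory-based; a file channel is recommended because it avoids data loss; the sink is the HDFS sink

- Configure the agent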

```properties
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source: Spooling Directory Source
a1.sources.r1.type = spooldir
# Directory to monitor
a1.sources.r1.spoolDir = /data/log/studentDir

# Use a channel which buffers events on disk: File Channel
a1.channels.c1.type = file
# Directory for checkpoints
a1.channels.c1.checkpointDir = /data/soft/apache-flume-1.9.0-bin/data/student
# Directory for the data files
a1.channels.c1.dataDirs = /data/soft/apache-flume-1.9.0-bin/data/studentDir/data

# Describe the sink: HDFS Sink
a1.sinks.k1.type = hdfs
# Target directory on HDFS
a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/flume/studentDir
# File prefix, prepended to the names of the files created on HDFS
a1.sinks.k1.hdfs.filePrefix = stu-
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.hdfs.writeFormat = Text
# Roll to a new file every 3600 seconds
a1.sinks.k1.hdfs.rollInterval = 3600
# Roll to a new file once the current one reaches 134217728 bytes (128 MB)
a1.sinks.k1.hdfs.rollSize = 134217728
# Roll to a new file after this many events; 0 disables rolling by event count
a1.sinks.k1.hdfs.rollCount = 0

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- Start the agent

[root@bigdata01 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/file-to-hdfs.conf -Dflume.root.logger=INFO,console

- The HDFS sink adds a .tmp suffix to the file it is currently writing

```
[root@bigdata01 studentDir]# hdfs dfs -ls /flume/studentDir
Found 2 items
-rw-r--r-- 1 root supergroup 41 2021-08-03 17:22 /flume/studentDir/stu-.1627982533329.tmp
```

- Files that Flume has already processed get a .COMPLETED suffix, marking them as done

```
[root@bigdata01 conf]# cd /data/log/studentDir/
[root@bigdata01 studentDir]# ls
1.COMPLETED class1.dat.COMPLETED
```

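6. Case study: collecting website logs and uploading them to HDFS

- Aggregate the log data produced in real time on machines A and B onto machine C

- Have machine C upload all the data to a designated directory in HDFS

- Note: the HDFS directories are generated by day, one directory per day

- On bigdata02 and bigdata03, the source is the file-based Exec Source, because newly appended data must be read in real time. The channel is a memory channel (fast, and some data loss is acceptable here). Because the data on bigdata02 and bigdata03 has to reach bigdata04 quickly, it is sent directly over the network, and the recommended sink is the Avro sink. Avro is a data serialization system, and data serialized with it is more efficient to transmit. There is also a matching Avro source, and an Avro sink can send data directly to an Avro source, so the two connect seamlessly.

- That settles the source on bigdata04: an Avro source. The channel is again a memory channel, and the sink is the HDFS sink, because the data is written to HDFS.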

- Configure the bigdata02 node

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /data/log/access.log

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sink; hostname must be the IP of the bigdata04 node
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = 192.168.32.100
a1.sinks.k1.port = 45454

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- Configure the bigdata03 node

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /data/log/access.log

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sink; hostname must be the IP of the bigdata04 node
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = 192.168.32.100
a1.sinks.k1.port = 45454

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- Configure the bigdata04 node

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = avro
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 45454

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sink
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/access/%Y%m%d
a1.sinks.k1.hdfs.filePrefix = access
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.rollInterval = 3600
a1.sinks.k1.hdfs.rollSize = 134217728
a1.sinks.k1.hdfs.rollCount = 0
a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- Start the agent on the bigdata04 node first

[root@bigdata01 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/avro-to-hdfs.conf -Dflume.root.logger=INFO,console

- Start the bigdata02 and bigdata03 nodes and simulate log generation

Generate the simulated data

```
[root@bigdata02 ~]# mkdir -p /data/log
[root@bigdata02 ~]# cd /data/log/
[root@bigdata02 log]# vi generateAccessLog.sh
#!/bin/bash
# Append data to the file in an endless loop
while [ "1" = "1" ]
do
    # Get the current timestamp
    curr_time=`date +%s`
    # Get the current hostname
    name=`hostname`
    echo ${name}_${curr_time} >> /data/log/access.log
    # Pause for 1 second
    sleep 1
done
```

```
[root@bigdata03 ~]# mkdir /data/log
[root@bigdata03 ~]# cd /data/log/
[root@bigdata03 log]# vi generateAccessLog.sh
#!/bin/bash
# Append data to the file in an endless loop
while [ "1" = "1" ]
do
    # Get the current timestamp
    curr_time=`date +%s`
    # Get the current hostname
    name=`hostname`
    echo ${name}_${curr_time} >> /data/log/access.log
    # Pause for 1 second
    sleep 1
done
```

Start the agent, then run the script

```
[root@bigdata02 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/file-to-avro-101.conf -Dflume.root.logger=INFO,console
........
[root@bigdata02 log]# sh -x generateAccessLog.sh
```

```
[root@bigdata03 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/file-to-avro-102.conf -Dflume.root.logger=INFO,console
........
[root@bigdata03 log]# sh -x generateAccessLog.sh
```

Result

```
[root@bigdata01 apache-flume-1.9.0-bin]# hdfs dfs -cat /access/20210803/access.1627990401737.tmp
....
bigdata03_1627961636
bigdata03_1627961637
bigdata03_1627961638
bigdata02_1627961639
bigdata02_1627961640
bigdata02_1627961641
bigdata02_1627961642
bigdata02_1627961643
bigdata02_1627961644
bigdata03_1627961639
bigdata03_1627961640
bigdata03_1627961641
bigdata03_1627961642
bigdata03_1627961643
```

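3. Flume advanced components in depth

3.1 Event

An Event is the basic unit of data transfer in Flume and also the basic unit of a transaction. For a text file, one line is usually one Event. An Event consists of a header and a body.

- The body is the raw content of the collected line

- The header is a Map that can hold attributes for later use. Key-value pairs can be added to each event's header at the Source and then used in the Channel and Sink.

3.2 Advanced components

- **Source Interceptors:** a Source can be given one or more interceptors, which process the collected data one after another in the configured order.

- **Channel Selectors:** the policy a Source uses when it feeds several Channels. If a source is followed by multiple channels, the Channel Selector decides whether every channel receives each event or whether events are routed to different channels by rule.

- **Sink Processors:** the policy for how Sinks take data. A channel can be followed by multiple sinks, and the Sink Processor decides which sink gets the data in the channel.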

3.2.1 Source Interceptors

Flume ships with many built-in Source Interceptors. Common types: Timestamp Interceptor, Host Interceptor, Search and Replace Interceptor, Static Interceptor, Regex Extractor Interceptor, and others.

- **Timestamp Interceptor:** adds a timestamp entry to the event header

- Host Interceptor: adds a host attribute to the event header; its value is the hostname or IP of the current machine

- **Search and Replace Interceptor:** searches the event body according to the given rule and replaces the matches. This interceptor changes the body, that is, it modifies the originally collected data

- **Static Interceptor:** adds a fixed key and value to the event header

- **Regex Extractor Interceptor:** extracts data from the event body according to the given rule, builds a key and value from it, and adds them to the header

Summary

- Timestamp Interceptor, Host Interceptor, Static Interceptor, and Regex Extractor Interceptor add key-value data to the event header for the channel and sink to use later; they do not affect the originally collected data.

- Search and Replace Interceptor modifies the original data in the event body according to its rule and does not affect the header. Use it with particular care, because it changes the original data.
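The header-only interceptors summarized above can be chained on one source, and the headers they add can then be referenced by a sink. The fragment below is a sketch under assumptions: the static key datacenter, its value dc1, and the /logs path are invented for illustration, and the property names follow the Flume User Guide as I recall it.

```properties
# Hypothetical source r1 and sink k1 on agent a1
# Interceptors run in the order listed
a1.sources.r1.interceptors = i1 i2 i3

# i1: adds a "timestamp" header (needed by %Y%m%d in the sink path below)
a1.sources.r1.interceptors.i1.type = timestamp

# i2: adds a "host" header; useIP = false stores the hostname instead of the IP
a1.sources.r1.interceptors.i2.type = host
a1.sources.r1.interceptors.i2.useIP = false

# i3: adds a fixed key/value pair to every event (placeholder values)
a1.sources.r1.interceptors.i3.type = static
a1.sources.r1.interceptors.i3.key = datacenter
a1.sources.r1.interceptors.i3.value = dc1

# The HDFS sink can use header values in its path via %{headerName}
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/logs/%{datacenter}/%{host}/%Y%m%d
```

None of these three interceptors touches the event body, so the data written to HDFS is still the original line.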

3.3 Storing collected data in directories by day and by type

- Scenario: the log file contains video records, user records, and gift records, all in JSON format

video_info

```
{"id":"14943445328940974601","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974602","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974603","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974604","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974605","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974606","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974607","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974608","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974609","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
{"id":"14943445328940974610","uid":"840717325115457536","lat":"53.530598","lnt":"-2.5620373","hots":0,"title":"0","status":"1","topicId":"0","end_time":"1494344570","watch_num":0,"share_num":"1","replay_url":null,"replay_num":0,"start_time":"1494344544","timestamp":1494344571,"type":"video_info"}
```

userinfo

```
{"uid":"861848974414839801","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839802","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839803","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839804","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839805","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839806","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839807","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839808","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839809","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
{"uid":"861848974414839810","nickname":"mick","usign":"","sex":1,"birthday":"","face":"","big_face":"","email":"abc@qq.com","mobile":"","reg_type":"102","last_login_time":"1494344580","reg_time":"1494344580","last_update_time":"1494344580","status":"5","is_verified":"0","verified_info":"","is_seller":"0","level":1,"exp":0,"anchor_level":0,"anchor_exp":0,"os":"android","timestamp":1494344580,"type":"userinfo"}
```

gift_record

```
{"send_id":"834688818270961664","good_id":"223","video_id":"14943443045138661356","gold":"10","timestamp":1494344574,"type":"gift_record"}
{"send_id":"829622867955417088","good_id":"72","video_id":"14943429572096925829","gold":"4","timestamp":1494344574,"type":"gift_record"}
{"send_id":"827187230564286464","good_id":"193","video_id":"14943394752706070833","gold":"6","timestamp":1494344574,"type":"gift_record"}
{"send_id":"829622867955417088","good_id":"80","video_id":"14943429572096925829","gold":"6","timestamp":1494344574,"type":"gift_record"}
{"send_id":"799051982152663040","good_id":"72","video_id":"14943435528719800690","gold":"4","timestamp":1494344574,"type":"gift_record"}
{"send_id":"848799149716930560","good_id":"72","video_id":"14943435528719800690","gold":"4","timestamp":1494344574,"type":"gift_record"}
{"send_id":"775251729037262848","good_id":"777","video_id":"14943390379833490630","gold":"5","timestamp":1494344574,"type":"gift_record"}
{"send_id":"835670464000425984","good_id":"238","video_id":"14943428496217015696","gold":"2","timestamp":1494344574,"type":"gift_record"}
{"send_id":"834688818270961664","good_id":"223","video_id":"14943443045138661356","gold":"10","timestamp":1494344574,"type":"gift_record"}
{"send_id":"834688818270961664","good_id":"223","video_id":"14943443045138661356","gold":"10","timestamp":1494344574,"type":"gift_record"}
```

- Analysis: the source is the file-based Exec Source and the channel is the file-based File Channel, because we want the data to be complete and accurate; the sink is the HDFS sink. The final flow is: Exec Source -> Search and Replace Interceptor -> Regex Extractor Interceptor -> File Channel -> HDFS Sink

Roughly how the data files are laid out in HDFS:

```
hdfs://192.168.182.100:9000/moreType/20200101/videoInfo
hdfs://192.168.182.100:9000/moreType/20200101/userInfo
hdfs://192.168.182.100:9000/moreType/20200101/giftRecord
```

3.3.1 Configuring the agent on bigdata04

file-to-hdfs-moreType.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /data/log/moreType.log

# Configure the interceptors [multiple interceptors run in the order listed]
a1.sources.r1.interceptors = i1 i2 i3 i4
a1.sources.r1.interceptors.i1.type = search_replace
a1.sources.r1.interceptors.i1.searchPattern = "type":"video_info"
a1.sources.r1.interceptors.i1.replaceString = "type":"videoInfo"
a1.sources.r1.interceptors.i2.type = search_replace
# The sample data above uses "userinfo" (no underscore), so match that
a1.sources.r1.interceptors.i2.searchPattern = "type":"userinfo"
a1.sources.r1.interceptors.i2.replaceString = "type":"userInfo"
a1.sources.r1.interceptors.i3.type = search_replace
a1.sources.r1.interceptors.i3.searchPattern = "type":"gift_record"
a1.sources.r1.interceptors.i3.replaceString = "type":"giftRecord"
a1.sources.r1.interceptors.i4.type = regex_extractor
a1.sources.r1.interceptors.i4.regex = "type":"(\\w+)"
a1.sources.r1.interceptors.i4.serializers = s1
a1.sources.r1.interceptors.i4.serializers.s1.name = logType

# Configure the channel
a1.channels.c1.type = file
a1.channels.c1.checkpointDir = /data/soft/apache-flume-1.9.0-bin/data/moreType
a1.channels.c1.dataDirs = /data/soft/apache-flume-1.9.0-bin/data/moreType/data

# Configure the sink
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.32.50:9000/moreType/%Y%m%d/%{logType}
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.rollInterval = 3600
a1.sinks.k1.hdfs.rollSize = 134217728
a1.sinks.k1.hdfs.rollCount = 0
a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Add a file prefix and suffix
a1.sinks.k1.hdfs.filePrefix = data
a1.sinks.k1.hdfs.fileSuffix = .log

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- Start the agent

[root@bigdata01 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/file-to-hdfs-moreType.conf -Dflume.root.logger=INFO,console

- Check HDFS

```
[root@bigdata01 apache-flume-1.9.0-bin]# hdfs dfs -ls -R /moreType/20210804
-rw-r--r-- 1 root supergroup 3 2021-08-03 16:44 /moreType/20210804/data.1628009072051.log.tmp
drwxr-xr-x - root supergroup 0 2021-08-03 16:40 /moreType/20210804/giftRecord
-rw-r--r-- 1 root supergroup 1369 2021-08-03 16:40 /moreType/20210804/giftRecord/data.1628008621595.log
drwxr-xr-x - root supergroup 0 2021-08-03 16:42 /moreType/20210804/userInfo
-rw-r--r-- 1 root supergroup 4130 2021-08-03 16:42 /moreType/20210804/userInfo/data.1628008920106.log.tmp
drwxr-xr-x - root supergroup 0 2021-08-03 16:44 /moreType/20210804/videoInfo
-rw-r--r-- 1 root supergroup 2970 2021-08-03 16:44 /moreType/20210804/videoInfo/data.1628009072104.log.tmp
```

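3.4 Channel Selector in practice

There are two types of Channel Selector: Replicating Channel Selector and Multiplexing Channel Selector. Replicating Channel Selector is the default; it sends every Event collected by the Source to all Channels.

I. Replicating Channel Selector

Take an example configuration in which the source feeds three channels, c1, c2, and c3, with c3 listed in selector.optional. The source's data is sent to all three channels; c1 and c2 are guaranteed to receive all of it, but c3 is not. The selector.optional parameter is itself optional and can simply be left out.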
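The replicating setup described above can be sketched as follows. This mirrors the example in the Flume User Guide as I remember it; the agent name a1 and source name r1 are the same illustrative names used elsewhere in this article.

```properties
# Source r1 replicates every event to c1, c2, and c3
a1.sources = r1
a1.channels = c1 c2 c3
a1.sources.r1.selector.type = replicating
a1.sources.r1.channels = c1 c2 c3
# c3 is optional: a failed write to c3 is ignored,
# while a failed write to c1 or c2 fails the whole transaction
a1.sources.r1.selector.optional = c3
```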

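II. Multiplexing Channel Selector

Take an example configuration with four channels: c1, c2, c3, and c4. Which channel an event is sent to depends on the value of the state attribute in the event header; the header to inspect is set through selector.header.

- If state is CZ, the event goes to c1

- If state is US, the event goes to c2 and c3

- For any other value, the event goes to c4

These rules are set through selector.mapping and selector.default, which makes it possible to distribute data to different channels by rule.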
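The routing rules just listed correspond to a configuration along these lines. It is reproduced from the Flume User Guide example as best I recall, again with a1 and r1 as illustrative names.

```properties
a1.sources = r1
a1.channels = c1 c2 c3 c4
a1.sources.r1.selector.type = multiplexing
# Route on the value of the "state" header
a1.sources.r1.selector.header = state
# state=CZ goes to c1
a1.sources.r1.selector.mapping.CZ = c1
# state=US goes to both c2 and c3
a1.sources.r1.selector.mapping.US = c2 c3
# Any other value goes to c4
a1.sources.r1.selector.default = c4
```

The state header has to be set before the selector runs, for instance by an interceptor on the source.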

3.4.1 Case 1: multiple channels with the Replicating Channel Selector

[root@bigdata04 conf]# vi tcp-to-replicatingchannel.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1 c2
a1.sinks = k1 k2

# Configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 44444

# Configure the channel selector [replicating is the default, so this can be omitted]
a1.sources.r1.selector.type = replicating

# Configure the channels
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory
a1.channels.c2.capacity = 1000
a1.channels.c2.transactionCapacity = 100

# Configure the sinks
a1.sinks.k1.type = logger
a1.sinks.k2.type = hdfs
a1.sinks.k2.hdfs.path = hdfs://192.168.32.50:9000/replicating
a1.sinks.k2.hdfs.fileType = DataStream
a1.sinks.k2.hdfs.writeFormat = Text
a1.sinks.k2.hdfs.rollInterval = 3600
a1.sinks.k2.hdfs.rollSize = 134217728
a1.sinks.k2.hdfs.rollCount = 0
a1.sinks.k2.hdfs.useLocalTimeStamp = true
a1.sinks.k2.hdfs.filePrefix = data
a1.sinks.k2.hdfs.fileSuffix = .log

# Wire the components together
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

- Start the agent

[root@bigdata01 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/tcp-to-replicatingchannel.conf -Dflume.root.logger=INFO,console

3.4.2 Case 2: multiple channels with the Multiplexing Channel Selector

[root@bigdata04 conf]# vi tcp-to-multiplexingchannel.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1 c2
a1.sinks = k1 k2

# Configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 44444

# Configure the source interceptor
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = regex_extractor
a1.sources.r1.interceptors.i1.regex = "city":"(\\w+)"
a1.sources.r1.interceptors.i1.serializers = s1
a1.sources.r1.interceptors.i1.serializers.s1.name = city

# Configure the channel selector
a1.sources.r1.selector.type = multiplexing
a1.sources.r1.selector.header = city
a1.sources.r1.selector.mapping.bj = c1
a1.sources.r1.selector.default = c2

# Configure the channels
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory
a1.channels.c2.capacity = 1000
a1.channels.c2.transactionCapacity = 100

# Configure the sinks
a1.sinks.k1.type = logger
a1.sinks.k2.type = hdfs
a1.sinks.k2.hdfs.path = hdfs://192.168.32.50:9000/multiplexing
a1.sinks.k2.hdfs.fileType = DataStream
a1.sinks.k2.hdfs.writeFormat = Text
a1.sinks.k2.hdfs.rollInterval = 3600
a1.sinks.k2.hdfs.rollSize = 134217728
a1.sinks.k2.hdfs.rollCount = 0
a1.sinks.k2.hdfs.useLocalTimeStamp = true
a1.sinks.k2.hdfs.filePrefix = data
a1.sinks.k2.hdfs.fileSuffix = .log

# Wire the components together
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

- Start the agent

[root@bigdata01 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/tcp-to-multiplexingchannel.conf -Dflume.root.logger=INFO,console

```
[root@bigdata01 data]# telnet localhost 44444
Trying ::1...
Connected to localhost.
Escape character is '^]'.
{"name":"jack","age":19,"city":"bj"}
OK
{"name":"tom","age":26,"city":"sh"}
OK
```

- Result

```
2021-08-04 01:53:33,974 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:95)] Event: { headers:{city=bj} body: 7B 22 6E 61 6D 65 22 3A 22 6A 61 63 6B 22 2C 22 {"name":"jack"," }
2021-08-04 01:53:43,768 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.HDFSDataStream.configure(HDFSDataStream.java:57)] Serializer = TEXT, UseRawLocalFileSystem = false
2021-08-04 01:53:43,915 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:246)] Creating hdfs://192.168.32.50:9000/multiplexing/data.1628013223769.log.tmp
```

```
[root@bigdata01 apache-flume-1.9.0-bin]# hdfs dfs -cat /multiplexing/data.1628013223769.log.tmp
{"name":"tom","age":26,"city":"sh"}
```

3.5 Sink Processors

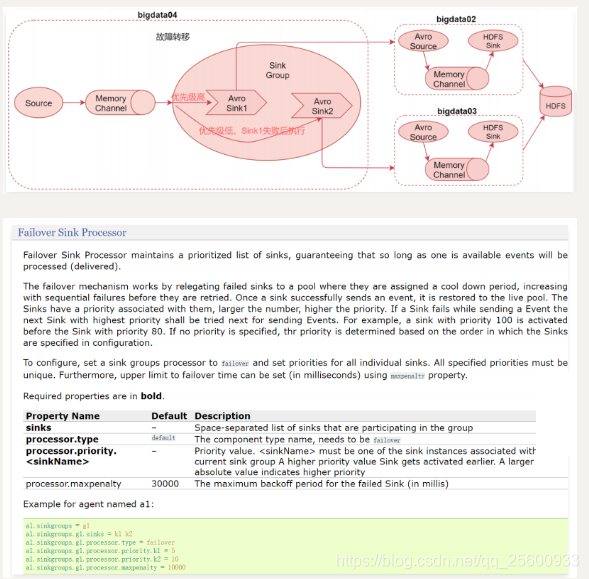

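Next, Sink Processors. There are three types: Default Sink Processor, Load balancing Sink Processor, and Failover Sink Processor.

- Default Sink Processor: the default. No sink group needs to be configured; one channel is followed by one sink.

- Load balancing Sink Processor: one channel can be followed by multiple sinks that belong to one sink group. Events are distributed among them round-robin or at random, depending on the configured algorithm, which reduces the load on any single sink.

- Failover Sink Processor: one channel can be followed by multiple sinks that belong to one sink group. Sinks are used by priority: the sink with the highest priority handles the data first, and if it fails, a sink with lower priority takes over, so data is not lost.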

3.5.1 Load balancing Sink Processor

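- processor.sinks: the sinks that belong to this sink group, given by name and separated by spaces

  [Note: the documentation lists this as processor.sinks, but its own example uses sinks, and sinks is what actually works. So the documentation has a few flaws, although Flume's documentation is very good overall.]

- processor.type: for the load-balancing sink processor, set this to load_balance

- processor.selector: two built-in values are supported, round_robin and random. round_robin takes the sinks in order, one after another; random picks a sink at random.

- processor.backoff: false by default. When set to true, a failed sink is blacklisted and is tried again only after a waiting period; if it fails again, the waiting period grows exponentially up to a maximum. When backoff is off, a failed sink is retried on every attempt, which hurts throughput if a node really is down.

- processor.selector.maxTimeOut: the maximum blacklist time; the default is 30 seconds (30000 ms)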

- Configure the bigdata04 node

[root@bigdata04 conf]# vi load-balancing.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1 k2

# Configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 44444

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sinks [batch-size is set to 1 to make the effect easy to see]
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = 192.168.32.51
a1.sinks.k1.port = 41414
a1.sinks.k1.batch-size = 1
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.32.52
a1.sinks.k2.port = 41414
a1.sinks.k2.batch-size = 1

# Configure the sink policy
a1.sinkgroups = g1
a1.sinkgroups.g1.sinks = k1 k2
a1.sinkgroups.g1.processor.type = load_balance
a1.sinkgroups.g1.processor.backoff = true
a1.sinkgroups.g1.processor.selector = round_robin

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c1
```

- bigdata02 node

[root@bigdata02 conf]# vi load-balancing-51.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = avro
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 41414

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sink [filePrefix differs so the files from the two sinks can be told apart]
a1.sinks.k1.type = hdfs
# Points to bigdata01, the HDFS master node
a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/load_balance
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.rollInterval = 3600
a1.sinks.k1.hdfs.rollSize = 134217728
a1.sinks.k1.hdfs.rollCount = 0
a1.sinks.k1.hdfs.useLocalTimeStamp = true
a1.sinks.k1.hdfs.filePrefix = data101
a1.sinks.k1.hdfs.fileSuffix = .log

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- bigdata03 node

[root@bigdata03 conf]# vi load-balancing-52.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = avro
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 41414

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sink [filePrefix differs so the files from the two sinks can be told apart]
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/load_balance
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.rollInterval = 3600
a1.sinks.k1.hdfs.rollSize = 134217728
a1.sinks.k1.hdfs.rollCount = 0
a1.sinks.k1.hdfs.useLocalTimeStamp = true
a1.sinks.k1.hdfs.filePrefix = data102

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

Note: a1.sinks.k1.port=41414 and a1.sinks.k2.port=41414 on bigdata04 must match the value of a1.sources.r1.port = 41414 on bigdata02 and bigdata03

- Start the agents in order

```
[root@bigdata02 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/load-balancing-51.conf -Dflume.root.logger=INFO,console
.....
[root@bigdata03 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/load-balancing-52.conf -Dflume.root.logger=INFO,console
....
[root@bigdata04 apache-flume-1.9.0-bin]# bin/flume-ng agent --name a1 --conf conf --conf-file conf/load-balancing.conf -Dflume.root.logger=INFO,console
```

```
[root@bigdata04 ~]# telnet localhost 44444
Trying ::1...
Connected to localhost.
Escape character is '^]'.
hehe
OK
haha
OK
```

3.5.2 Failover Sink Processor

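- processor.type: for the failover sink processor, use failover

- processor.priority.: followed by a sink name (for example processor.priority.k1), this sets the priority of each sink in the sink group; by default the data in the channel is taken by the sink with the higher priority value

- processor.maxpenalty: the maximum waiting time after a sink has failed (in milliseconds)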

- bigdata04 node

[root@bigdata04 conf]# vi failover.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1 k2

# Configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 44444

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sinks [batch-size is set to 1 to make the effect easy to see]
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = 192.168.32.51
a1.sinks.k1.port = 41414
a1.sinks.k1.batch-size = 1
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.32.52
a1.sinks.k2.port = 41414
a1.sinks.k2.batch-size = 1

# Configure the sink policy
a1.sinkgroups = g1
a1.sinkgroups.g1.sinks = k1 k2
a1.sinkgroups.g1.processor.type = failover
a1.sinkgroups.g1.processor.priority.k1 = 5
a1.sinkgroups.g1.processor.priority.k2 = 10
a1.sinkgroups.g1.processor.maxpenalty = 10000

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c1
```

- bigdata02 node

[root@bigdata02 conf]# vi failover-51.conf

```properties
# The agent is named a1
# Name the source, channel, and sink components
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Configure the source
a1.sources.r1.type = avro
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 41414

# Configure the channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Configure the sink [filePrefix differs so the files from the two sinks can be told apart]
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/failover
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.rollInterval = 3600
a1.sinks.k1.hdfs.rollSize = 134217728
a1.sinks.k1.hdfs.rollCount = 0
a1.sinks.k1.hdfs.useLocalTimeStamp = true
a1.sinks.k1.hdfs.filePrefix = data51
a1.sinks.k1.hdfs.fileSuffix = .log

# Wire the components together
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- bigdata03 node

      [root@bigdata03 conf]# vi failover-52.conf
      # The agent is named a1
      # Names of the source, channel, and sink components
      a1.sources = r1
      a1.channels = c1
      a1.sinks = k1
      # Source configuration
      a1.sources.r1.type = avro
      a1.sources.r1.bind = 0.0.0.0
      a1.sources.r1.port = 41414
      # Channel configuration
      a1.channels.c1.type = memory
      a1.channels.c1.capacity = 1000
      a1.channels.c1.transactionCapacity = 100
      # Sink configuration (filePrefix differs per node so the files written by the two sinks can be told apart)
      a1.sinks.k1.type = hdfs
      a1.sinks.k1.hdfs.path = hdfs://192.168.32.100:9000/failover
      a1.sinks.k1.hdfs.fileType = DataStream
      a1.sinks.k1.hdfs.writeFormat = Text
      a1.sinks.k1.hdfs.rollInterval = 3600
      a1.sinks.k1.hdfs.rollSize = 134217728
      a1.sinks.k1.hdfs.rollCount = 0
      a1.sinks.k1.hdfs.useLocalTimeStamp = true
      a1.sinks.k1.hdfs.filePrefix = data52
      a1.sinks.k1.hdfs.fileSuffix = .log
      # Wire the components together
      a1.sources.r1.channels = c1
      a1.sinks.k1.channel = c1

- Send some data

      [root@bigdata04 ~]# telnet localhost 44444
      Trying ::1...
      Connected to localhost.
      Escape character is '^]'.
      hehe
      OK
      haha
      OK

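- The sink that points at 192.168.32.52 (k2) has the higher priority (10 versus 5), so events go through that node first

- If the 192.168.32.52 node is shut down, events are routed through 192.168.32.51 instead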
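The selection rule in this demo can be pictured with a small shell sketch. It is only an illustration of the idea "the healthy sink with the largest priority value wins", not Flume's actual implementation; the function name pick_sink and the name:priority:state argument format are invented for this example, and the backoff governed by maxpenalty is left out.

```sh
#!/bin/sh
# Illustrative only: choose the healthy sink with the highest priority.
# Each argument is "name:priority:state", where state is "up" or "down"
# (a hypothetical format made up for this sketch).
pick_sink() {
    best_name=""
    best_prio=""
    for entry in "$@"; do
        name=${entry%%:*}      # text before the first colon
        rest=${entry#*:}       # "priority:state"
        prio=${rest%%:*}
        state=${rest#*:}
        # Sinks that are down are not candidates
        [ "$state" = "up" ] || continue
        if [ -z "$best_prio" ] || [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    # Prints an empty line when no sink is available
    echo "$best_name"
}

pick_sink k1:5:up k2:10:up      # prints k2
pick_sink k1:5:up k2:10:down    # prints k1
```

The two calls mirror the steps above: with both nodes running, k2 (priority 10) is chosen; once it is down, k1 takes over.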

4. Mastering Flume

4.1 Custom components

4.2 Flume tuning

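4.2.1 Adjust the memory of the Flume process (1 GB to 2 GB recommended; too little causes frequent GC)

Flume runs on the JVM, so the memory of its process has to be configured. As a rule of thumb, give a single Flume process (that is, a single agent) 1 GB to 2 GB. With too little memory the JVM collects garbage frequently, which slows the agent down.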

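- The right value depends on how much data the agent reads and how fast, so it has to be judged case by case. Each running agent corresponds to one process, and jstat -gcutil PID 1000 prints that process's GC statistics, refreshed once per second. If the GC counts climb too quickly, the process is short of memory.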

Use jps to list the Flume processes that are currently running

    [root@bigdata04 ~]# jps
    2957 Jps
    2799 Application

Run jstat -gcutil PID 1000

    [root@bigdata04 ~]# jstat -gcutil 2799 1000
      S0     S1     E      O      M     CCS    YGC   YGCT   FGC   FGCT   GCT
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0
    100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000     0

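- The columns to watch here are YGC, YGCT, FGC, FGCT, and GCT

- YGC: the number of young-generation GCs. One every few tens of seconds is acceptable; if a YGC happens every second, the process needs more memory

- YGCT: the total time spent in young-generation GCs

- FGC: the number of Full GCs. While a Full GC runs, the Flume process is paused, and it resumes work only after the Full GC has finished, so Full GCs hurt throughput badly. The lower this value the better; ideally it stays at zero

- FGCT: the total time spent in Full GCs

- GCT: the total time spent in GCs of all types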
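To read these counters over an interval rather than by eye, the output can be piped through a small filter. The sketch below is one possible way to do it, assuming the column layout shown above (YGC in column 7, FGC in column 9); gc_growth is a made-up helper name, and the three sample rows are illustrative values, not measurements.

```sh
#!/bin/sh
# Summarise `jstat -gcutil` output read from stdin: how many young and full
# collections happened between the first and the last sample.
# Assumes YGC is column 7 and FGC is column 9, as in the output above.
gc_growth() {
    awk '
        $1 == "S0" { next }      # skip the header line
        NF >= 10 {
            if (!seen) { first_ygc = $7; first_fgc = $9; seen = 1 }
            last_ygc = $7; last_fgc = $9; samples++
        }
        END {
            if (!seen) { print "no samples"; exit 1 }
            printf "samples=%d ygc_delta=%d fgc_delta=%d\n",
                   samples, last_ygc - first_ygc, last_fgc - first_fgc
        }
    '
}

# Example with three made-up samples
gc_growth <<'EOF'
  S0     S1     E      O      M     CCS    YGC   YGCT   FGC   FGCT    GCT
100.00   0.00  17.54  42.80  96.46  92.38     8  0.029    0  0.000  0.029
  0.00 100.00   3.10  42.90  96.46  92.38     9  0.033    0  0.000  0.033
100.00   0.00  55.20  43.00  96.46  92.38    11  0.041    0  0.000  0.041
EOF
# prints: samples=3 ygc_delta=3 fgc_delta=0
```

Against a live process it could be fed with, for example, jstat -gcutil 2799 1000 30 | gc_growth, which takes 30 one-second samples and then reports the growth. A ygc_delta close to the number of samples corresponds to the "one YGC per second" case described above.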

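To change the memory of the Flume process, edit the JAVA_OPTS parameter in the flume-env.sh script: remove the # in front of the export JAVA_OPTS line. Setting Xms and Xmx to the same value is recommended, so that the JVM does not have to resize the heap at runtime, which also costs performance.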

export JAVA_OPTS="-Xms1024m -Xmx1024m -Dcom.sun.management.jmxremote"

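4.2.2 When several agents run on one server, configure separate log files

The log4j.properties file in the conf directory defines the name and location of the log file. It is therefore a good idea to make one copy of the conf directory per agent and, in each copy, change the log file name in log4j.properties (so that every agent logs to its own file) and lower the log level to warn (the default info level records a lot of noise). When starting each agent, pass its own conf directory through the --conf parameter. Later log analysis is then much easier, because every agent has a separate log file.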

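- Taking the bigdata04 machine as an example: copy the conf directory to conf-failover and use the copy whenever the sink failover job is started. In its log4j.properties, change the log level and the log file name. The log directory can stay unchanged, with all agents writing to the shared logs directory

      [root@bigdata04 apache-flume-1.9.0-bin]# cp -r conf/ conf-failover
      [root@bigdata04 apache-flume-1.9.0-bin]# cd conf-failover/
      [root@bigdata04 conf-failover]# vi log4j.properties
      .....
      # Log level
      flume.root.logger=WARN,LOGFILE
      # Log directory
      flume.log.dir=./logs
      # Log file name
      flume.log.file=flume-failover.log
      .....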

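- Start the agent with -c pointing at the copied directory so that its log4j.properties takes effect, and with -f pointing at the agent configuration file. Do not pass -Dflume.root.logger=INFO,console here, because it would override the level and appender set in the file

      [root@bigdata04 apache-flume-1.9.0-bin]# nohup bin/flume-ng agent -c conf-failover -n a1 -f conf-failover/failover.conf &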
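When many agents share a machine, the copy-and-edit steps of this section can be scripted. The following is a minimal sketch under a few assumptions: make_agent_conf is a hypothetical helper (not part of Flume), the target directory does not exist yet, and the log file name is a plain name without slashes or ampersands. The demo runs against a throwaway directory with a two-line stand-in for log4j.properties.

```sh
#!/bin/sh
# Sketch: derive a per-agent conf directory and point its log4j.properties
# at a dedicated log file, with the level lowered to WARN.
make_agent_conf() {
    src_dir=$1      # existing conf directory
    dst_dir=$2      # new per-agent conf directory (must not exist yet)
    log_file=$3     # log file name for this agent
    cp -r "$src_dir" "$dst_dir" || return 1
    # Rewrite the two properties through a temporary file
    sed -e 's/^flume\.root\.logger=.*/flume.root.logger=WARN,LOGFILE/' \
        -e "s/^flume\.log\.file=.*/flume.log.file=$log_file/" \
        "$dst_dir/log4j.properties" > "$dst_dir/log4j.properties.tmp" &&
    mv "$dst_dir/log4j.properties.tmp" "$dst_dir/log4j.properties"
}

# Demo on a temporary directory
demo=$(mktemp -d)
mkdir "$demo/conf"
printf 'flume.root.logger=INFO,LOGFILE\nflume.log.file=flume.log\n' \
    > "$demo/conf/log4j.properties"
make_agent_conf "$demo/conf" "$demo/conf-failover" flume-failover.log
cat "$demo/conf-failover/log4j.properties"
rm -rf "$demo"
```

The cat line prints the two rewritten properties, flume.root.logger=WARN,LOGFILE and flume.log.file=flume-failover.log; any other line in the file is copied through unchanged.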

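4.3 Monitoring Flume processes

- Approach

First, a configuration file lists the agents that need to be monitored.

A script then reads that configuration file and periodically checks, one by one, whether the process of each agent is still running. If a process is missing, the script records an error, raises an alert (by SMS or email), and tries to restart it.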

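- Create a file named monlist.conf. The part before the equals sign is a unique identifier for an agent. It is used later to filter for the matching Flume process, so it must be unique at least within one machine. The part after the equals sign is the script that starts that Flume process. Identifier and script correspond one to one: if no process is found for an identifier, the script is run to start it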

example=startExample.sh

- The contents of startExample.sh are as follows:

nohup ${flume_path}/bin/flume-ng agent --name a1 --conf ${flume_path}/conf/ --conf-file ${flume_path}/conf/example.conf &

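- Next, write a script that checks whether each process is still running and tries to restart it if it is not. Create the script monlist.sh

      #!/bin/bash
      # Work from the script's own directory so relative paths also resolve under cron
      cd "$(dirname "$0")" || exit 1
      monlist=`cat monlist.conf`
      echo "start check"
      for item in ${monlist}
      do
          # Split the line on "=" into an array, then restore the field separator
          OLD_IFS=$IFS
          IFS="="
          arr=($item)
          IFS=$OLD_IFS
          # Left of the equals sign: agent identifier
          name=${arr[0]}
          # Right of the equals sign: start script
          script=${arr[1]}
          echo "time is:"`date +"%Y-%m-%d %H:%M:%S"`" check "$name
          if [ `jps -m | grep $name | wc -l` -eq 0 ]
          then
              # Send an SMS or email alert here
              echo `date +"%Y-%m-%d %H:%M:%S"`$name "is none"
              sh -x ./${script}
          fi
      done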

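Note: the script has to run on a schedule, for example through crontab. The entry below is in /etc/crontab format (it includes a user field) and runs the check every minute.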

* * * * * root /bin/bash /data/soft/monlist.sh
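The matching step of the monitor can also be tried offline, without a running Flume, by separating it from jps. The sketch below is a variant for experimentation, not a replacement for monlist.sh: find_missing is a hypothetical helper, the second agent (failover=startFailover.sh) and the sample process line are made up, and the process listing is read from a file that stands in for the output of jps -m.

```sh
#!/bin/sh
# Sketch: print "identifier script" for every agent listed in a
# monlist-style file whose identifier does not appear in a process listing.
# $1 = monlist file, $2 = file holding the output of `jps -m`.
find_missing() {
    # The "|| [ -n ... ]" part also handles a last line without a newline
    while IFS='=' read -r name script || [ -n "$name" ]; do
        # Skip blank lines and comment lines
        case $name in ''|'#'*) continue ;; esac
        if ! grep -qF -- "$name" "$2"; then
            echo "$name $script"
        fi
    done < "$1"
}

# Demo with made-up data
demo=$(mktemp -d)
printf 'example=startExample.sh\nfailover=startFailover.sh\n' > "$demo/monlist.conf"
printf '2799 Application --name a1 --conf-file conf/example.conf\n2957 Jps -m\n' \
    > "$demo/jps.txt"
find_missing "$demo/monlist.conf" "$demo/jps.txt"   # prints: failover startFailover.sh
rm -rf "$demo"
```

Reading the file with IFS='=' read splits each line into identifier and script directly, which avoids changing the global field separator inside the loop.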