I. Overview

1. Use Case

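Map Join suits the case where one table is very small and the other is very large. The small table must be small enough to fit in the memory of every MapTask.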

2. Advantages

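Think about it: joining too many tables on the Reduce side easily causes data skew. In a Reduce-side join, every record that shares a join key is shuffled to the same ReduceTask, so a single hot key can overload one reducer. What can be done?

Cache the small table(s) on the Map side and apply the join logic there in advance. This moves work to the Map side and takes data pressure off the Reduce side, which reduces data skew as much as possible.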

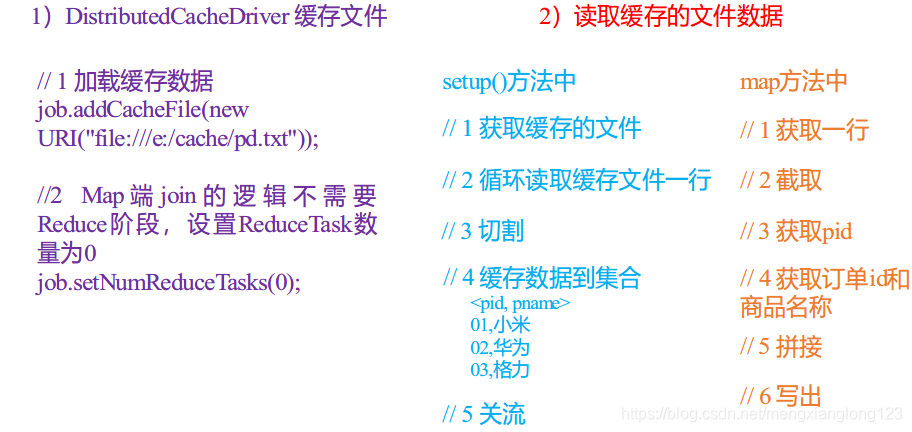
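The idea behind a Map-side join can be sketched without Hadoop. First, load the small table into a hash map once, which is what `setup` does later in this article. Then stream the large table row by row and look up each join key, which is what `map` does. Nothing is grouped by key, so there is no shuffle and no single reducer that can become a hot spot. The class name and sample rows below are hypothetical. They are modeled on the tab-separated `pd`/order records used in the case study.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-alone sketch of a map-side (in-memory hash) join.
public class MapSideJoinSketch {

    // Build phase: the small table (pid -> pname) is loaded into memory once per task.
    static Map<String, String> buildSmallTable(List<String> pdLines) {
        Map<String, String> pdMap = new HashMap<>();
        for (String line : pdLines) {
            String[] split = line.split("\t");
            pdMap.put(split[0], split[1]);
        }
        return pdMap;
    }

    // Probe phase: each large-table row ("id\tpid\tx") is joined on its own,
    // so rows can be spread across any number of mappers in any order.
    static List<String> join(List<String> orderLines, Map<String, String> pdMap) {
        List<String> out = new ArrayList<>();
        for (String line : orderLines) {
            String[] fields = line.split("\t");
            // An unknown pid yields the string "null", as in the mapper below
            String pname = pdMap.get(fields[1]);
            out.add(fields[0] + "\t" + pname + "\t" + fields[2]);
        }
        return out;
    }

    public static void main(String[] args) {
        // Assumed sample data in the same tab-separated layout as pd.txt and the order file
        List<String> pd = List.of("01\tXiaomi", "02\tHuawei");
        List<String> orders = List.of("1001\t01\t1", "1002\t02\t2", "1003\t01\t3");
        Map<String, String> pdMap = buildSmallTable(pd);
        for (String row : join(orders, pdMap)) {
            System.out.println(row);
        }
    }
}
```

The small table is read once per task, while the large table is only streamed. This is why the technique breaks down when the "small" table no longer fits in memory.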

3. Approach: use the distributed cache (DistributedCache)

(1) In the Mapper's setup phase, read the cached file into an in-memory collection.

(2) In the Driver class, register the file to be cached.

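```java
// Cache a regular file to the nodes where the tasks run (local path, for local runs)
job.addCacheFile(new URI("file:///e:/cache/pd.txt"));

// When running on a cluster, use an HDFS path instead
job.addCacheFile(new URI("hdfs://hadoop102:8020/cache/pd.txt"));
```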
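The two `addCacheFile` calls differ only in their URI. The scheme (`file` vs `hdfs`) and the authority (`hadoop102:8020`) identify the file system that holds the cached file, which is why the source calls for an HDFS path on a cluster. The sketch below only splits those two URIs into their parts with `java.net.URI`. It does not touch Hadoop, and the class name is hypothetical.

```java
import java.net.URI;

// Hypothetical stand-alone sketch: inspect the parts of the two cache-file URIs above.
public class CacheUriSketch {

    // Returns "scheme | authority | path" for a cache-file URI string.
    static String describe(String uri) {
        URI u = URI.create(uri);
        return u.getScheme() + " | " + u.getAuthority() + " | " + u.getPath();
    }

    public static void main(String[] args) {
        System.out.println(describe("file:///e:/cache/pd.txt"));
        System.out.println(describe("hdfs://hadoop102:8020/cache/pd.txt"));
    }
}
```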

II. Map Join Case Study

1. Requirements

The requirements are the same as in: https://blog.csdn.net/mengxianglong123/article/details/119506147

2. Requirement Analysis

3. Code Implementation

(1) First, add the cache file in the MapJoinDriver class

```java
package com.atguigu.mapreduce.mapjoin;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

public class MapJoinDriver {

    public static void main(String[] args) throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException {

        // 1 Get the job instance
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // 2 Set the jar by locating this driver class
        job.setJarByClass(MapJoinDriver.class);

        // 3 Set the Mapper
        job.setMapperClass(MapJoinMapper.class);

        // 4 Set the Map output KV types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(NullWritable.class);

        // 5 Set the final output KV types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        // Register the small table as a cache file (use an hdfs:// URI when running on a cluster)
        job.addCacheFile(new URI("file:///D:/input/tablecache/pd.txt"));

        // The join is done entirely on the Map side, so no Reduce phase is needed: set the number of ReduceTasks to 0
        job.setNumReduceTasks(0);

        // 6 Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path("D:\\input"));
        FileOutputFormat.setOutputPath(job, new Path("D:\\output"));

        // 7 Submit the job and wait for it to finish
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
```
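`setNumReduceTasks(0)` makes this a map-only job, so each map task writes its results straight to the output directory. Those files are named `part-m-NNNNN` rather than the `part-r-NNNNN` that reducers produce. The tiny sketch below reproduces only that naming pattern (a five-digit, zero-padded task index), so you know which files to look for under the output path. The helper name is hypothetical.

```java
// Hypothetical helper reproducing the default part-file naming of FileOutputFormat.
public class OutputNameSketch {

    // "m" when map tasks write the output (numReduceTasks == 0), "r" when reducers do.
    static String partFileName(boolean mapOnly, int taskIndex) {
        return String.format("part-%s-%05d", mapOnly ? "m" : "r", taskIndex);
    }

    public static void main(String[] args) {
        System.out.println(partFileName(true, 0));  // prints part-m-00000
        System.out.println(partFileName(false, 3)); // prints part-r-00003
    }
}
```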

(2) Read the cache file in the setup method of the MapJoinMapper class

```java
package com.atguigu.mapreduce.mapjoin;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class MapJoinMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

    private Map<String, String> pdMap = new HashMap<>();
    private Text text = new Text();

    // Before the task starts, cache the pd data in pdMap
    @Override
    protected void setup(Context context) throws IOException, InterruptedException {

        // Get the small table pd.txt from the cache files
        URI[] cacheFiles = context.getCacheFiles();
        Path path = new Path(cacheFiles[0]);

        // Get the file system matching the path's scheme (file:// or hdfs://);
        // FileSystem.get(conf) would return the default file system, which may not match the URI
        FileSystem fs = path.getFileSystem(context.getConfiguration());

        // Wrap the stream in a reader to read line by line; try-with-resources closes it
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(fs.open(path), StandardCharsets.UTF_8))) {

            String line;
            // Read until end of file; skip blank lines instead of stopping at the first one
            while ((line = reader.readLine()) != null) {
                if (line.isEmpty()) {
                    continue;
                }
                // Split one line, e.g. "01\t小米" -> pid, pname
                String[] split = line.split("\t");
                pdMap.put(split[0], split[1]);
            }
        }
    }

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

        // Read a row of the large table, e.g. "1001\t01\t1" (the second field is the pid)
        String[] fields = value.toString().split("\t");

        // Use the row's pid to look up pname in pdMap
        String pname = pdMap.get(fields[1]);

        // Replace the row's pid with pname
        text.set(fields[0] + "\t" + pname + "\t" + fields[2]);

        // Write out
        context.write(text, NullWritable.get());
    }
}
```
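The cache-loading loop in `setup` depends only on a line reader and a map. You can therefore check it locally against sample content before submitting the job. Below is a minimal sketch, assuming `pd.txt` holds tab-separated `pid\tpname` lines. The class name, method name, and sample content are hypothetical. This version keeps reading until end of input and skips blank or malformed lines, so a stray empty line in `pd.txt` does not cut the table short.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, Hadoop-free version of the cache-loading loop in setup(), for local testing.
public class PdCacheSketch {

    // Reads "pid\tpname" lines until end of input; lines that do not yield
    // both a pid and a pname (e.g. blank lines) are skipped.
    static Map<String, String> loadPd(Reader in) {
        Map<String, String> pdMap = new HashMap<>();
        try (BufferedReader reader = new BufferedReader(in)) {
            String line;
            while ((line = reader.readLine()) != null) {
                String[] split = line.split("\t");
                if (split.length < 2) {
                    continue; // blank or malformed line
                }
                pdMap.put(split[0], split[1]);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return pdMap;
    }

    public static void main(String[] args) {
        // Assumed sample content with a blank line in the middle
        String pdFile = "01\tXiaomi\n\n02\tHuawei\n";
        Map<String, String> pdMap = loadPd(new StringReader(pdFile));
        System.out.println(pdMap.size());    // prints 2
        System.out.println(pdMap.get("02")); // prints Huawei
    }
}
```

Inside the real mapper, the `Reader` would wrap the stream opened from the cached path. In a unit test, a `StringReader` stands in for it.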