Creating a user on Linux

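Elasticsearch refuses to start as the root user, so we need to create a separate user to run it.

First, create a Linux user:

sudo mkdir -p /home/gdca

sudo useradd -m -d /home/gdca/elk elk

Here -d sets the home (login) directory of the elk user and -m creates it, so logging in as elk lands directly in /home/gdca/elk. One detail: the parent directory /home/gdca has to exist beforehand, because useradd here only creates the last level of the path; without the parent, the command fails with an error saying the directory cannot be created.

Next, set a password for the user:

sudo passwd elk

At this point the elk user is ready. The next step is to download and install Elasticsearch.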
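Since the whole point of the elk user is that Elasticsearch will not run as root, a small guard in a start script can catch the mistake early. The following is only a sketch: `is_allowed_uid` is a hypothetical helper name of my own, not something Elasticsearch ships.

```shell
#!/bin/sh
# Hypothetical pre-flight helper (not part of Elasticsearch):
# succeeds for any numeric uid except 0, which is root.
is_allowed_uid() {
  [ "$1" -ne 0 ]
}

# Check the current user before launching anything.
if is_allowed_uid "$(id -u)"; then
  echo "ok: running as $(id -un)"
else
  echo "refusing to start as root; switch to the elk user first" >&2
fi
```

The check takes the uid as an argument so it can be exercised with fixed values instead of depending on who runs the script.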

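Download and installation

First, download the tar.gz archive from the official Elasticsearch site. Log in to the Linux machine as elk, go to /home/gdca/elk and run the download command. I downloaded version 7.6.0 here; for other versions, look up the download link on the official site.

curl -L -O https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.6.0-linux-x86_64.tar.gz

Once the download finishes, extract the archive. You can extract it in place or pass a target directory; here I extract it in place: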

tar -zxvf elasticsearch-7.6.0-linux-x86_64.tar.gz解压成功以后,进入到?elasticsearch-7.6.0中

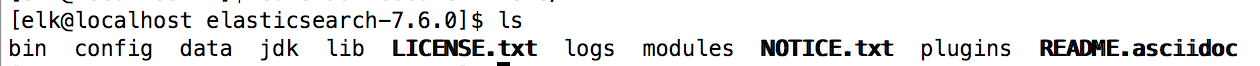

cd? /home/gdca/elk/elasticsearch-7.6.0可以看到以下文件目录?
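If you need a different version, the 7.6.0 link used above suggests a simple naming pattern. The sketch below builds the URL from a version string; `es_tarball_url` is a hypothetical helper, and the assumption that other releases follow the same file name does not hold for every release (older ones are named differently), so confirm the link on the official download page.

```shell
#!/bin/sh
# Hypothetical helper: build the Linux x86_64 tarball URL for a version,
# following the pattern of the 7.6.0 link used in this post.
# Assumption: the requested version uses the same file naming.
es_tarball_url() {
  version=$1
  echo "https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-${version}-linux-x86_64.tar.gz"
}

# Print the URL for the version used in this post.
es_tarball_url 7.6.0

# To download it:
#   curl -L -O "$(es_tarball_url 7.6.0)"
```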

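ES configuration

Next comes some basic configuration. The project requires Elasticsearch on three servers, so I set it up as a cluster. The server IP addresses are:

- 192.168.10.194

- 192.168.10.195

- 192.168.10.196

All Elasticsearch configuration files live in the config directory. Start with the JVM parameters in jvm.options, which should be sized according to the physical memory the machine can provide; here I set the heap to 256m.

cd config

vim jvm.options

## JVM configuration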

################################################################

## IMPORTANT: JVM heap size

################################################################

##

## You should always set the min and max JVM heap

## size to the same value. For example, to set

## the heap to 4 GB, set:

##

## -Xms4g

## -Xmx4g

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/current/heap-size.html

## for more information

##

################################################################

# Xms represents the initial size of total heap space

# Xmx represents the maximum size of total heap space

-Xms256m

-Xmx256m

################################################################

## Expert settings

################################################################

##

## All settings below this section are considered

## expert settings. Don't tamper with them unless

## you understand what you are doing

##

################################################################

## GC configuration

8-13:-XX:+UseConcMarkSweepGC

8-13:-XX:CMSInitiatingOccupancyFraction=75

8-13:-XX:+UseCMSInitiatingOccupancyOnly

## G1GC Configuration

# NOTE: G1 GC is only supported on JDK version 10 or later

# to use G1GC, uncomment the next two lines and update the version on the

# following three lines to your version of the JDK

# 10-13:-XX:-UseConcMarkSweepGC

# 10-13:-XX:-UseCMSInitiatingOccupancyOnly

14-:-XX:+UseG1GC

14-:-XX:G1ReservePercent=25

14-:-XX:InitiatingHeapOccupancyPercent=30

## JVM temporary directory

-Djava.io.tmpdir=${ES_TMPDIR}

## heap dumps

# generate a heap dump when an allocation from the Java heap fails

# heap dumps are created in the working directory of the JVM

-XX:+HeapDumpOnOutOfMemoryError

# specify an alternative path for heap dumps; ensure the directory exists and

# has sufficient space

-XX:HeapDumpPath=data

# specify an alternative path for JVM fatal error logs

-XX:ErrorFile=logs/hs_err_pid%p.log

## JDK 8 GC logging

8:-XX:+PrintGCDetails

8:-XX:+PrintGCDateStamps

8:-XX:+PrintTenuringDistribution

8:-XX:+PrintGCApplicationStoppedTime

8:-Xloggc:logs/gc.log

8:-XX:+UseGCLogFileRotation

8:-XX:NumberOfGCLogFiles=32

8:-XX:GCLogFileSize=64m

# JDK 9+ GC logging

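9-:-Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m

These are the JVM options Elasticsearch starts with. The number prefix on a line restricts it to certain JDK versions: the 8-13: lines apply on JDK 8 through 13, where the garbage collector is CMS, and the 14-: lines switch to G1 from JDK 14 on. I set both the initial and the maximum heap size (Xms and Xmx) to 256m.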
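The 256m above was picked by hand. As a rough sketch of how one might derive the two flags from the machine's memory, the helper below takes total RAM in MB and prints matching Xms/Xmx lines. `suggest_heap_flags` is a hypothetical name; the "half of physical memory" rule comes from the comment in elasticsearch.yml, while the 31 GB cap is my own illustrative assumption, not a value from this post.

```shell
#!/bin/sh
# Hypothetical helper: suggest heap flags from total RAM given in MB.
# Assumptions: use half of physical memory, and cap the heap at
# 31 GB (31744 MB); the cap is illustrative only.
suggest_heap_flags() {
  total_mb=$1
  heap_mb=$((total_mb / 2))
  cap_mb=31744
  if [ "$heap_mb" -gt "$cap_mb" ]; then
    heap_mb=$cap_mb
  fi
  # Min and max are set to the same value, as jvm.options recommends.
  printf '%s\n' "-Xms${heap_mb}m" "-Xmx${heap_mb}m"
}

# A machine with 512 MB of RAM gives the 256m used in this post.
suggest_heap_flags 512

# On a real machine the total could be read from /proc/meminfo:
#   suggest_heap_flags "$(awk '/MemTotal/ {print int($2/1024)}' /proc/meminfo)"
```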

Next, open elasticsearch.yml and set the parameters there. This file holds the service-level settings of Elasticsearch itself:

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

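# This is the cluster name. It must be identical on every server in the cluster, otherwise the nodes will not find each other.

# Any name works, as long as it is the same everywhere.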

cluster.name: cluster-es-gdca

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

# This is the node name; I use the last part of the server's IP address as the suffix.

# Name the other machines the same way.

node.name: node-194

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

Next, configure the data and log paths and the network settings:

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

# Data path. By default this is the data directory under the Elasticsearch home; I generally leave it unchanged.

#path.data: /path/to/data

#

# Path to log files:

# Log path. By default this is the logs directory under the Elasticsearch home; I generally leave it unchanged.

#path.logs: /path/to/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

# Address the server listens on. With 0.0.0.0 it binds to all interfaces, so the node is reachable through the machine's own IP address.

network.host: 0.0.0.0

#

# Set a custom port for HTTP:

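# HTTP port. I use the default 9200 here, so leaving this line commented out works just as well.

http.port: 9200

Next, configure the settings that define how the cluster is formed: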

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

# IP addresses of the nodes that make up the cluster

discovery.seed_hosts: ["192.168.10.194", "192.168.10.195", "192.168.10.196"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

# Names of the nodes that make up the cluster

cluster.initial_master_nodes: ["node-194", "node-195", "node-196"]

#

# For more information, consult the discovery and cluster formation module documentation.

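#

I use the IP address as the key for every node setting. You could name nodes by some business rule instead; I did it this way for convenience, and because it makes it obvious which IP a node belongs to.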
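Across the three servers only node.name changes, so the settings above can be generated rather than typed three times. This is a minimal sketch under my own assumptions: `node_name` and `render_node_config` are hypothetical helpers, and the output is meant to be reviewed and pasted into elasticsearch.yml, not written there automatically.

```shell
#!/bin/sh
# Values taken from this post.
CLUSTER_NAME="cluster-es-gdca"
NODE_IPS="192.168.10.194 192.168.10.195 192.168.10.196"

# Hypothetical helper: node-<last octet of the IP>, e.g. node-194.
node_name() {
  echo "node-${1##*.}"
}

# Hypothetical helper: print the settings for the node with the given IP.
render_node_config() {
  this_ip=$1
  hosts=""
  masters=""
  for ip in $NODE_IPS; do
    # Build comma-separated, double-quoted lists.
    hosts="${hosts:+$hosts, }\"$ip\""
    masters="${masters:+$masters, }\"$(node_name "$ip")\""
  done
  echo "cluster.name: $CLUSTER_NAME"
  echo "node.name: $(node_name "$this_ip")"
  echo "network.host: 0.0.0.0"
  echo "http.port: 9200"
  echo "discovery.seed_hosts: [$hosts]"
  echo "cluster.initial_master_nodes: [$masters]"
}

# Print the settings for the second server.
render_node_config 192.168.10.195
```

Generating the host list from one variable also avoids the easy mistake of listing the same IP twice in discovery.seed_hosts.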

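System configuration

If you only start Elasticsearch in the foreground, the system configuration hardly needs any changes. But a foreground process stops as soon as the shell is closed, so we want to run it in the background. The basic command is:

./bin/elasticsearch -d -p pid

The -d flag runs Elasticsearch as a daemon, and -p pid writes the process ID to a file named pid. I use ./bin here because I am in the parent directory, so this calls the elasticsearch start script inside bin. You can also change into bin and run it from there.

Running in the background requires a few system parameters to be set first, otherwise you will hit the same pitfalls I did on my first install. I will write up those pitfalls later; first, the procedure.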
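Because -p pid records the process ID in a file, the same file can be used to stop the daemon later. A minimal sketch, with `stop_by_pidfile` as a hypothetical helper name of my own:

```shell
#!/bin/sh
# Hypothetical helper: stop a daemonized process using the pid file
# that was written at startup (the "pid" argument of -p).
stop_by_pidfile() {
  pidfile=$1
  if [ ! -r "$pidfile" ]; then
    echo "pid file not found: $pidfile" >&2
    return 1
  fi
  # kill sends SIGTERM by default, which asks the process to shut down.
  kill "$(cat "$pidfile")"
}

# Usage, from the Elasticsearch home directory:
#   stop_by_pidfile pid
```

Nothing is killed when the block is sourced; the function only acts when called with a pid file path.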

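Raising the mmap count limit

Elasticsearch uses mmapfs to store its indices, but the system default for vm.max_map_count is too low, so we raise it.

# set the value (run as root; takes effect immediately but does not survive a reboot)

sysctl -w vm.max_map_count=262144

# to make it permanent, add vm.max_map_count=262144 to /etc/sysctl.conf, then reload that file

sysctl -p

Thread count and related limits

Here we need to switch to the root user and edit a system limits file:

vim /etc/security/limits.conf

#Where: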

#<domain> can be:

# - a user name

# - a group name, with @group syntax

# - the wildcard *, for default entry

# - the wildcard %, can be also used with %group syntax,

# for maxlogin limit

#

#<type> can have the two values:

# - "soft" for enforcing the soft limits

# - "hard" for enforcing hard limits

#

#<item> can be one of the following:

# - core - limits the core file size (KB)

# - data - max data size (KB)

# - fsize - maximum filesize (KB)

# - memlock - max locked-in-memory address space (KB)

# - nofile - max number of open file descriptors

# - rss - max resident set size (KB)

# - stack - max stack size (KB)

# - cpu - max CPU time (MIN)

# - nproc - max number of processes

# - as - address space limit (KB)

# - maxlogins - max number of logins for this user

# - maxsyslogins - max number of logins on the system

# - priority - the priority to run user process with

# - locks - max number of file locks the user can hold

# - sigpending - max number of pending signals

# - msgqueue - max memory used by POSIX message queues (bytes)

# - nice - max nice priority allowed to raise to values: [-20, 19]

# - rtprio - max realtime priority

#

#<domain> <type> <item> <value>

#

#* soft core 0

#* hard rss 10000

#@student hard nproc 20

#@faculty soft nproc 20

#@faculty hard nproc 50

#ftp hard nproc 0

#@student - maxlogins 4

# Parameters added here

* soft nofile 65536

* hard nofile 65536

* soft nproc 4096

* hard nproc 4096

* soft memlock unlimited

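* hard memlock unlimited

That completes essentially all the configuration. The new limits apply to new login sessions, so log in again as elk, then start the Elasticsearch service with the start command shown earlier.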
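Before starting, it can help to confirm that the values set above are actually in effect for the current session. The sketch below compares two of them against the minimums used in this post; `meets_minimum` and `report` are hypothetical helpers, and only vm.max_map_count and the open-file limit are checked (nproc can be checked the same way in a shell whose ulimit supports it).

```shell
#!/bin/sh
# Hypothetical helper: succeed if the actual value meets the required
# minimum; the literal "unlimited" always passes.
meets_minimum() {
  actual=$1
  required=$2
  if [ "$actual" = "unlimited" ]; then
    return 0
  fi
  [ "$actual" -ge "$required" ]
}

# Hypothetical helper: print one OK/LOW line per setting.
report() {
  name=$1
  actual=$2
  required=$3
  if meets_minimum "$actual" "$required"; then
    echo "OK   $name=$actual"
  else
    echo "LOW  $name=$actual (want at least $required)"
  fi
}

# Check the live values against the numbers used in this post.
report vm.max_map_count "$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)" 262144
report nofile "$(ulimit -n)" 65536
```

Run it as the elk user in a fresh login session, since that is the session whose limits Elasticsearch will inherit.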

Startup checks

Basic startup check

Run on the command line:

curl http://192.168.10.194:9200

Response:

{

"name" : "node-194",

"cluster_name" : "cluster-es-gdca",

"cluster_uuid" : "a7kYn2AXTT2yt7GJpFLDEw",

"version" : {

"number" : "7.6.0",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "7f634e9f44834fbc12724506cc1da681b0c3b1e3",

"build_date" : "2020-02-06T00:09:00.449973Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

Querying the cluster

Run on the command line:

curl http://192.168.10.194:9200/_cluster/health

Response:

{"cluster_name":"cluster-es-gdca","status":"green","timed_out":false,"number_of_nodes":3,"number_of_data_nodes":3,"active_primary_shards":5,"active_shards":10,"relocating_shards":0,"initializing_shards":0,"unassigned_shards":0,"delayed_unassigned_shards":0,"number_of_pending_tasks":0,"number_of_in_flight_fetch":0,"task_max_waiting_in_queue_millis":0,"active_shards_percent_as_number":100.0}

The request returns a response showing three nodes and a green status, so a basic clustered Elasticsearch service is now up. For a single-node setup, simply skip the cluster configuration step.
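For a scripted check, the two fields that matter most in the health response are status and number_of_nodes. The sketch below pulls a field out of the compact one-line JSON with sed only; `health_field` is a hypothetical helper, it assumes the flat single-line format shown above, and a real JSON parser is the more robust choice if one is installed.

```shell
#!/bin/sh
# Hypothetical helper: read one-line JSON on stdin and print the value
# of a top-level field, with or without surrounding quotes.
health_field() {
  field=$1
  sed -n "s/.*\"$field\":\"\{0,1\}\([^\",}]*\).*/\1/p"
}

# Shortened copy of the response shown in this post.
sample='{"cluster_name":"cluster-es-gdca","status":"green","timed_out":false,"number_of_nodes":3,"number_of_data_nodes":3}'

printf '%s\n' "$sample" | health_field status           # prints: green
printf '%s\n' "$sample" | health_field number_of_nodes  # prints: 3

# Against a live node:
#   curl -s http://192.168.10.194:9200/_cluster/health | health_field status
```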