Building a Hadoop HA Cluster on Alibaba Cloud

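1. Introduction to Hadoop HA

1.1 What a highly available Hadoop cluster is

Hadoop is a platform for storing and processing massive amounts of data. It can store data at the petabyte scale and beyond, and MapReduce lets it process that data in parallel. Hadoop uses a master/slave architecture with one master and several slaves. The master is the NameNode, which stores and manages the metadata, applies the replica placement policy, maintains the mapping of data blocks, and handles client read and write requests, so it carries a heavy load. A master/slave design makes cluster resources easy to manage and schedule, but it has one big weakness: a single point of failure. If the NameNode goes down, the whole cluster stops working. Hadoop does provide a SecondaryNameNode, but it only periodically merges the NameNode's edit log into the fsimage (a checkpoint). At best it is a cold backup and cannot take over in real time. We therefore use ZooKeeper (a cluster coordination service) to build a highly available Hadoop cluster: the cluster runs two NameNodes, and when one fails the other takes over seamlessly as a hot standby, keeping the cluster stable.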

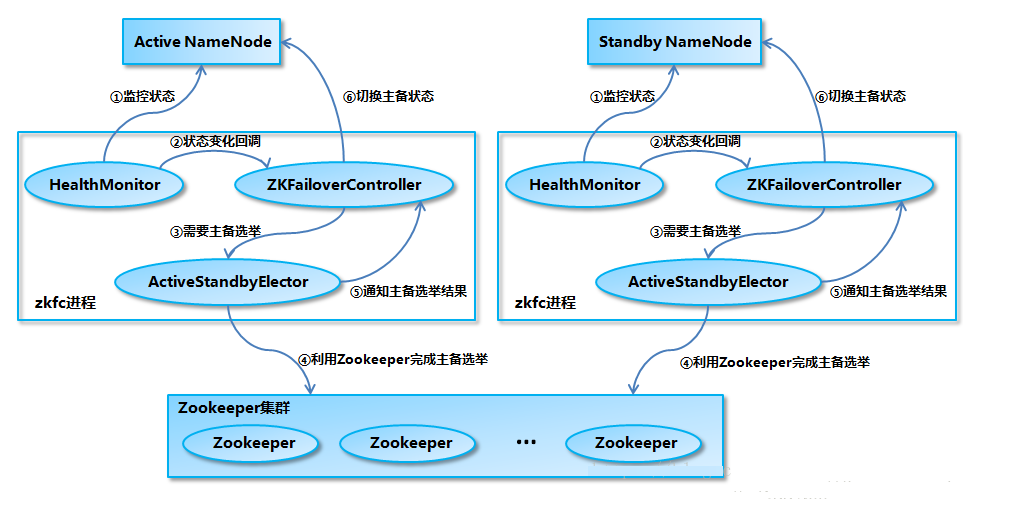

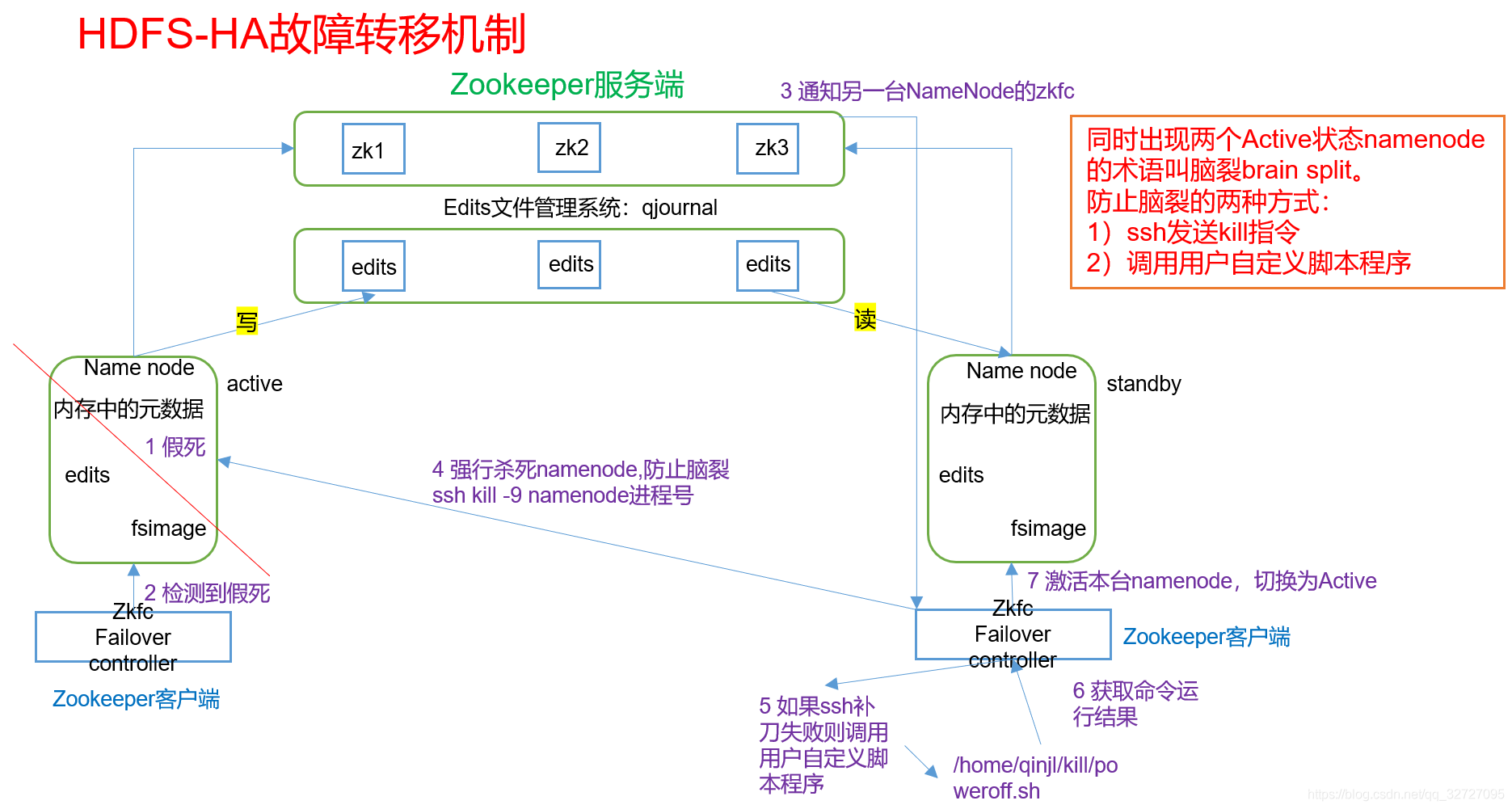

1.2 Hadoop HA architecture

1.3 How automatic failover works

1.4 Cluster plan

The cluster is built from three machines:

| hadoop001 | hadoop002 | hadoop003 |
|---|---|---|
| NameNode | NameNode | |
| ZKFC (DFSZKFailoverController) | ZKFC (DFSZKFailoverController) | |
| JournalNode | JournalNode | JournalNode |
| DataNode | DataNode | DataNode |
| ZooKeeper | ZooKeeper | ZooKeeper |
| ResourceManager | | ResourceManager |
| NodeManager | NodeManager | NodeManager |

2. Purchasing Alibaba Cloud servers

I am a student on a tight budget, so I chose simple low-spec servers.

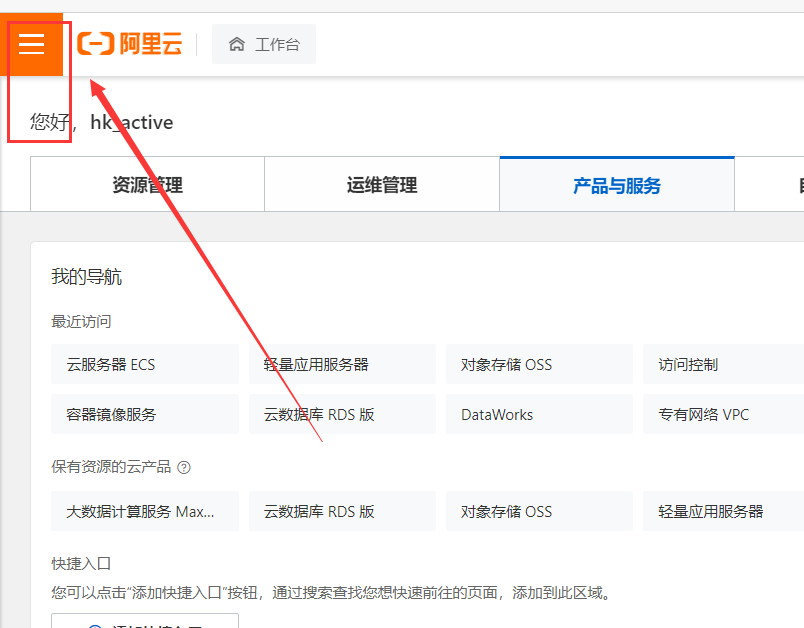

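2.1 Open Alibaba Cloud

1. Register an account and log in.
2. Click Console in the top-right corner.
3. Click the menu icon (three horizontal lines) in the top-left corner.
4. Select ECS (Elastic Compute Service).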

2.1 选择服务器

点击创建服务器

我这里选择2核8G的抢占式主机,这个比较便宜

注意:上面的区域应该同一个(例如:可用区K,因为不同地区的实例内网不同,而hadoop集群需要使用内网搭建)。我忘记选了,后面随机给我分到一个区了。

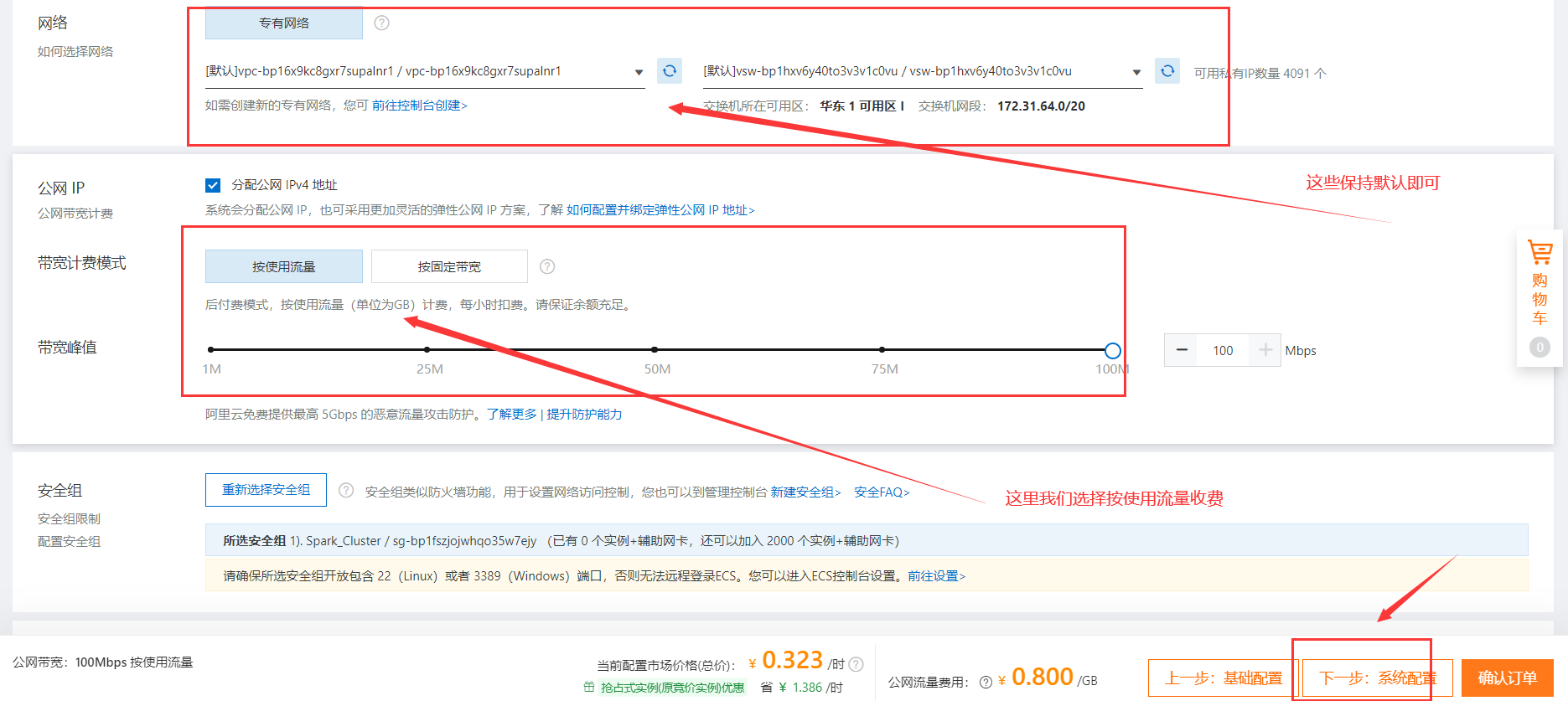

然后点击下面的网络配置

2.3 网络选择配置

2.4 系统配置

然后点击分组设置

分组设置保持默认即可,不需要配置

最后确认清晰,然后提交订单即可

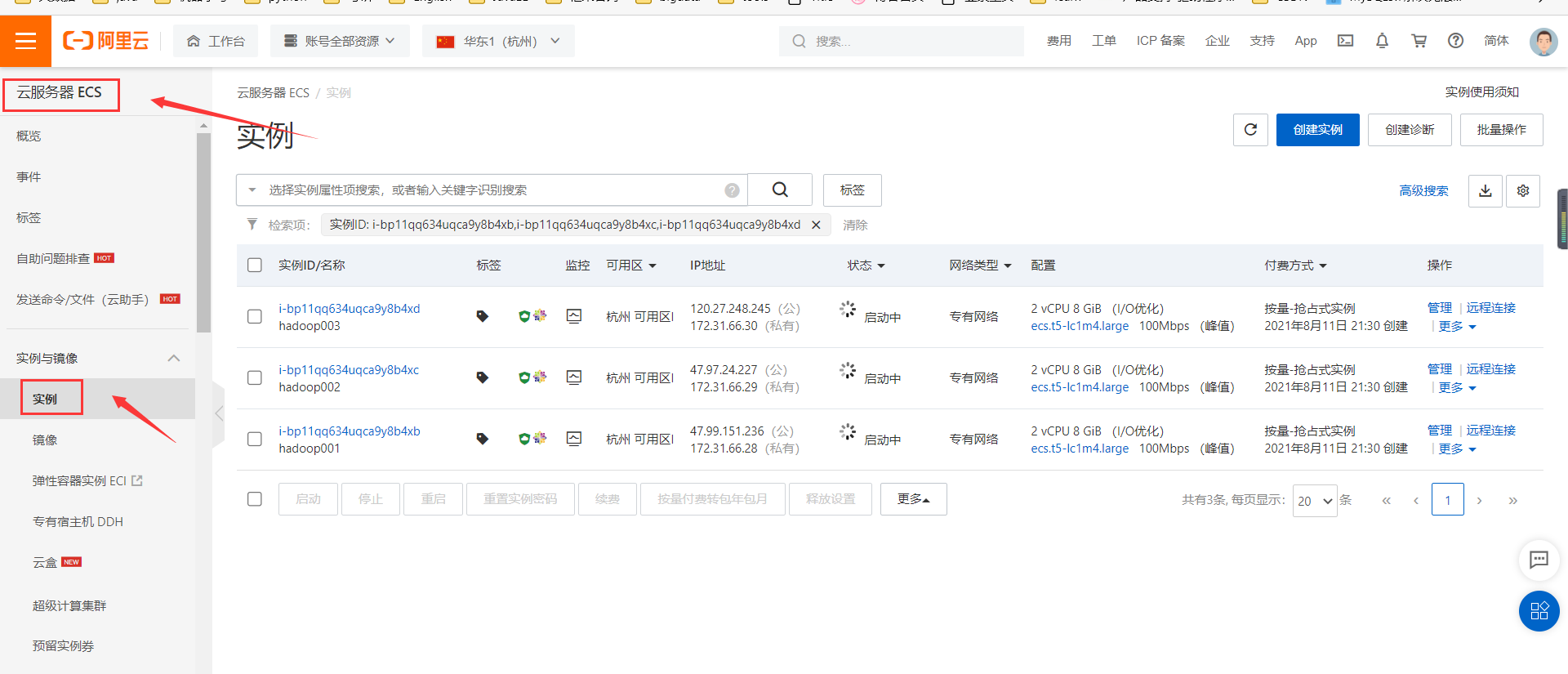

然后在控制台的左边栏点击ECS云服务器然后点击示例

可以看出,服务器正在创建

2.5 配置服务器远程连接

等待服务器创建完成后,我们需要使用远程连接工具来连接服务器

这里我使用的是xshell进行连接

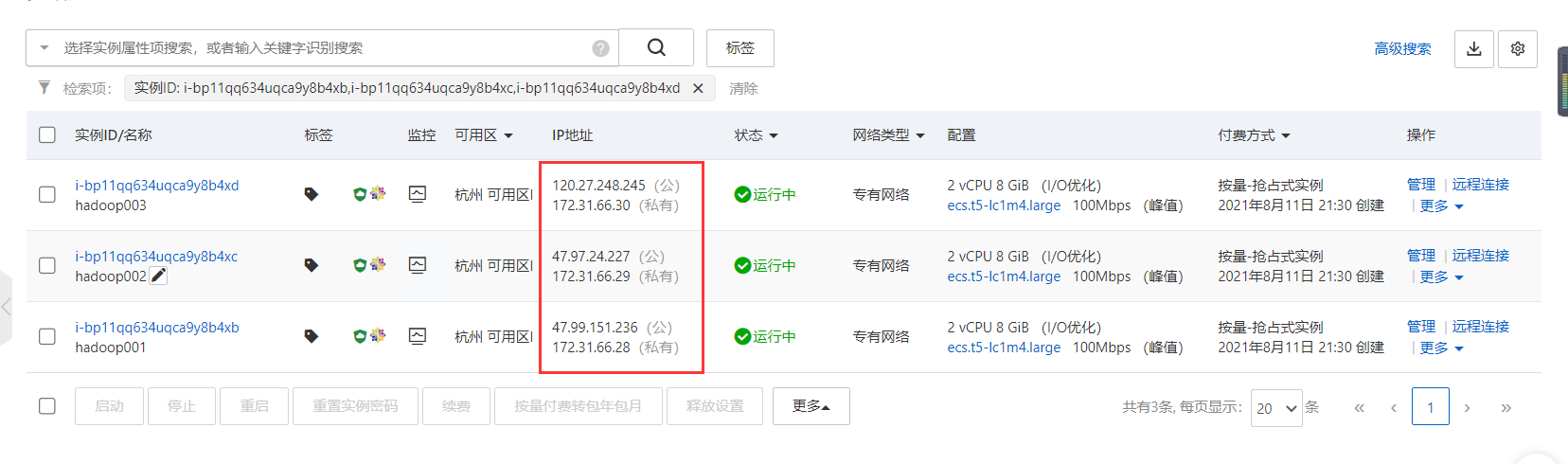

这里我们可以看到,每个服务器有两个IP地址,一个公网ip,一个是内网ip;我们搭建hadoop集群是使用内网ip

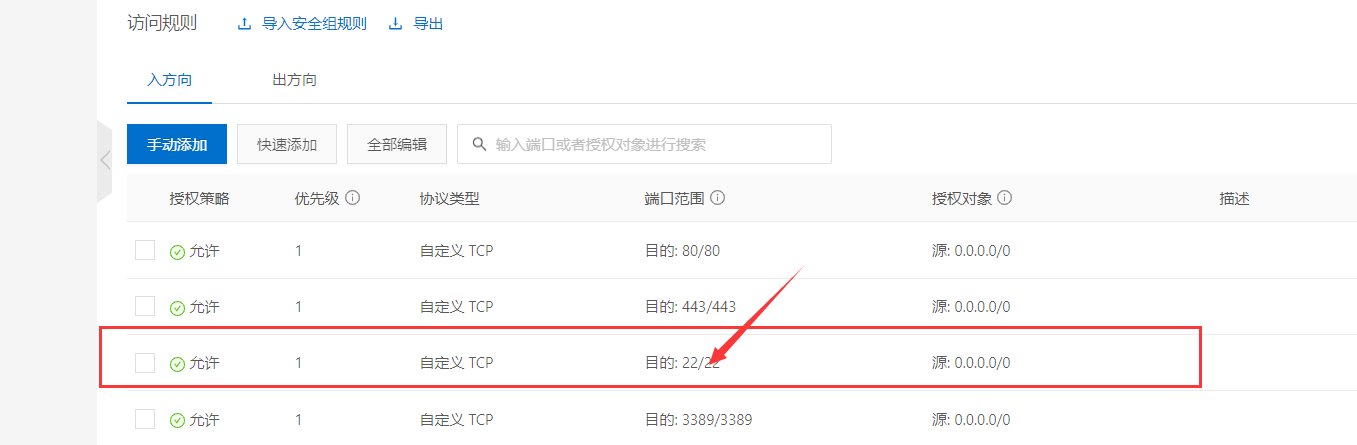

首先检查安全组对外的22号端口是否开放

注意:我这里只有一个安全组,因此所有实例都配置在这个安全组里面。

进去后检查22号端口是否开放,若没够开放,需要自己手动添加一个。

使用公网IP连接ECS

复制这里的公网IP,然后根据自己设置的用户名和密码使用Xshell连接即可。

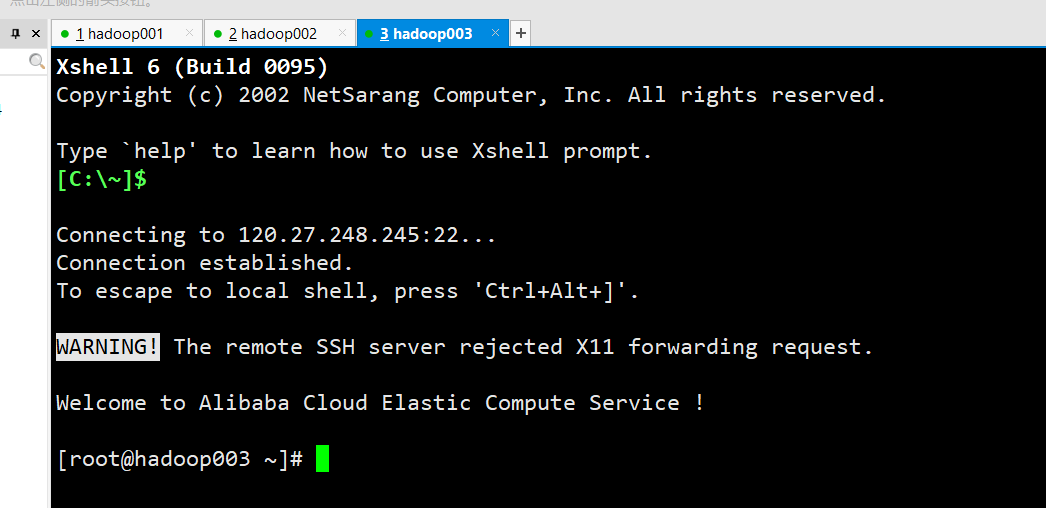

这里我三台机器已经连接

3. 配置网络

3.1 配置主机名

这里由于主机名已经预设好了,因此不用配置了

3.2 配置hosts文件

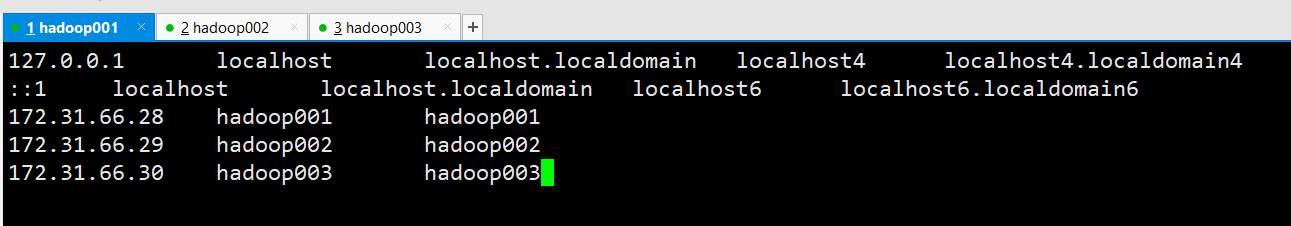

[root@hadoop001 ~]# vim /etc/hosts

需要在三台机器上都配置

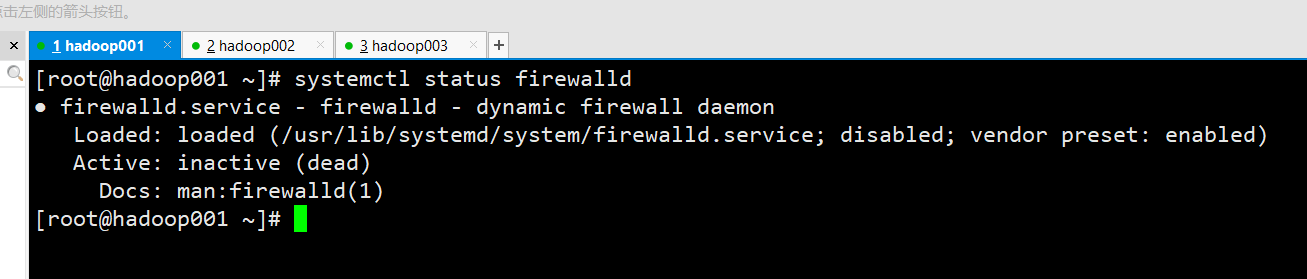
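The actual hosts entries appear only in the screenshot. The step can be made repeatable with a small bash function that appends an `IP hostname` line only when that hostname is not mapped yet. This is a sketch: the function names are illustrative, and the IP addresses are placeholders (an assumption), so replace them with the private IPs from your ECS console.

```shell
#!/usr/bin/env bash
# Sketch: idempotently add "IP hostname" lines to a hosts file.
# The 172.16.0.x addresses are placeholders, not the tutorial's real IPs.

# Succeeds if some non-comment line of file $1 already maps hostname $2.
host_is_mapped() {
  awk -v n="$2" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                 END { exit !found }' "$1" 2>/dev/null
}

# Appends "ip name" only if name is not mapped yet.
add_host_entry() {
  local hosts_file=$1 ip=$2 name=$3
  host_is_mapped "$hosts_file" "$name" || printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

configure_hosts() {
  local hosts_file=${1:-/etc/hosts}
  add_host_entry "$hosts_file" 172.16.0.101 hadoop001
  add_host_entry "$hosts_file" 172.16.0.102 hadoop002
  add_host_entry "$hosts_file" 172.16.0.103 hadoop003
}
```

Run `configure_hosts` as root on each node. It defaults to /etc/hosts, and running it a second time adds nothing.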

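3.3 Check the firewall

In company clusters, the host firewalls are usually turned off so that they cannot block traffic between nodes. The company enforces a firewall centrally at the external network boundary instead.

On Alibaba Cloud the instance firewall is disabled by default, so nothing needs to be done here. Inbound access is controlled by the security group.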

3.3 配置SSH

首先执行:ssh-keygen -t rsa指令

[root@hadoop001 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Q7rbZb6Eu2aHpYEhZtHw97bCuIo1Tl14DALrKwiHb9U root@hadoop001

The key's randomart image is:

+---[RSA 2048]----+

| . .o |

| o... |

| . ..o o |

| o +o.B . |

|o oo..EoS o |

|oo o ..*.+.. |

|o + + + +== |

| o = . +=B. |

| . o.oo+oo. |

+----[SHA256]-----+

连续按下三次回车,生成公钥和私钥

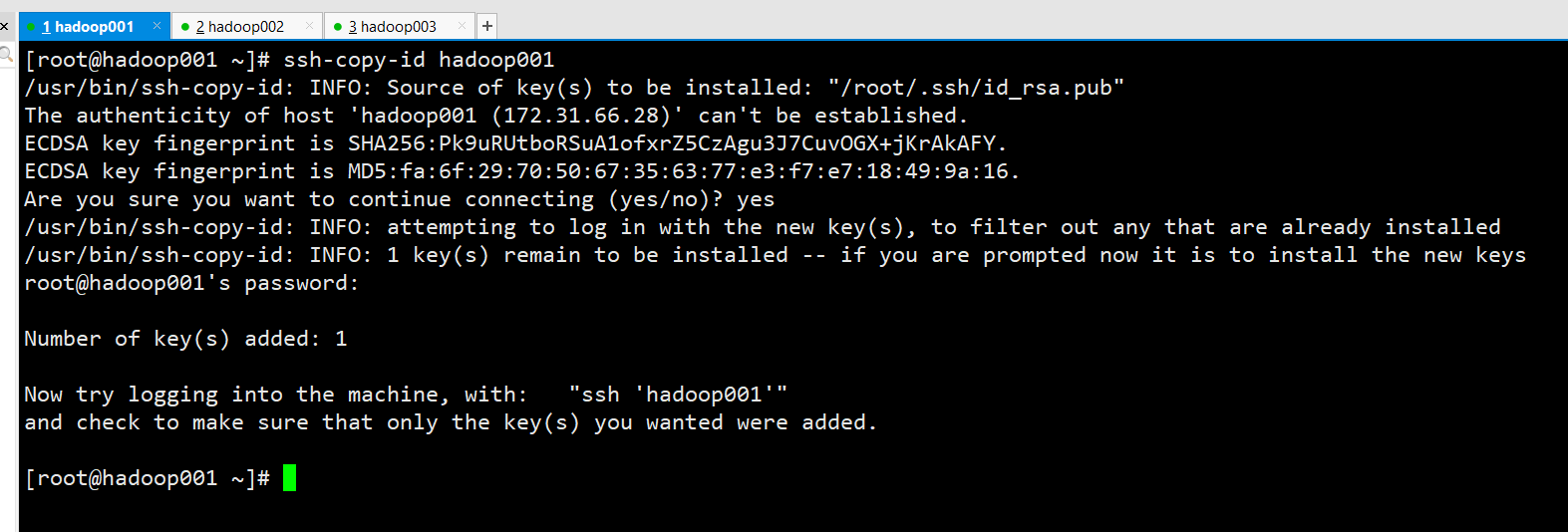

将公钥分发到其他集群

以此类推,每台机器都要配置。

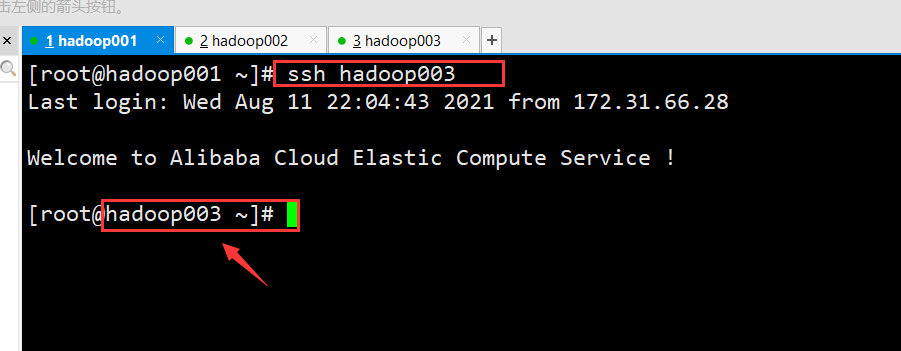

然后我们使用ssh 主机名 测试能够互相登录
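The key distribution itself appears only in the screenshot. The sketch below performs the same step, assuming root logins and that `ssh-copy-id` (part of OpenSSH) is installed. It copies the local public key to every node, including the machine itself, because the Hadoop start scripts also reach the local host over SSH. With `DRY_RUN=1`, the default here, it only prints the commands. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: copy this machine's public key to all nodes and test the logins.
# DRY_RUN=1 (default) prints the commands instead of running them.
NODES=(hadoop001 hadoop002 hadoop003)
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_key() {
  local key=${1:-$HOME/.ssh/id_rsa.pub} node
  for node in "${NODES[@]}"; do
    # ssh-copy-id appends the key to ~/.ssh/authorized_keys on $node
    run ssh-copy-id -i "$key" "root@$node"
  done
}

check_logins() {
  local node
  for node in "${NODES[@]}"; do
    # BatchMode=yes makes ssh fail instead of asking for a password
    run ssh -o BatchMode=yes "root@$node" hostname
  done
}
```

On each machine, run `DRY_RUN=0 distribute_key` and enter the root password when prompted. Then run `DRY_RUN=0 check_logins`, which should print the three hostnames without any prompt.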

4. 安装JDK

由于hadoop时使用java语言编写的,因此我们需要java的运行环境

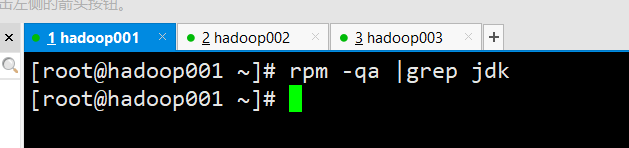

4.1 先卸载预装的jdk

经过搜索,没有预装jdk,因此我们不用卸载了

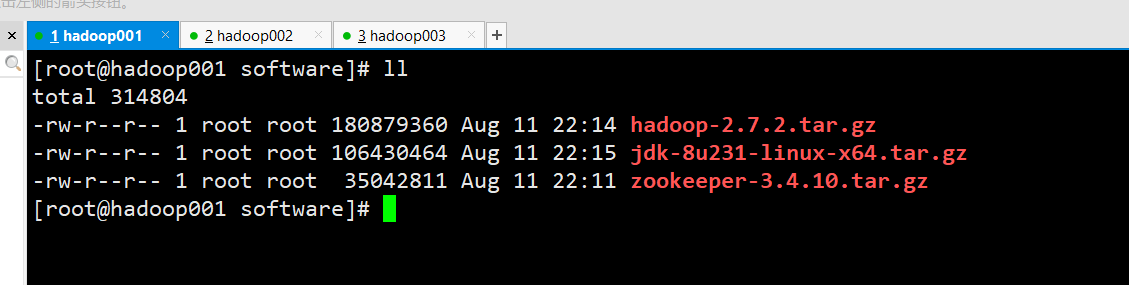

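4.2 Upload the installation packages

Upload all three installation packages (JDK, ZooKeeper and Hadoop).

4.3 Extract to the target directory

```shell
[root@hadoop001 software]# tar -zxvf jdk-8u231-linux-x64.tar.gz -C /opt/module/
```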

4.4 Configure the environment variables

```shell
[root@hadoop001 jdk1.8.0_231]# pwd
/opt/module/jdk1.8.0_231
[root@hadoop001 jdk1.8.0_231]# vim /etc/profile
# append at the end of /etc/profile:
export JAVA_HOME=/opt/module/jdk1.8.0_231
export PATH=$PATH:$JAVA_HOME/bin
# after saving and exiting, run source so that the settings take effect
[root@hadoop001 jdk1.8.0_231]# source /etc/profile
# run java -version
[root@hadoop001 ~]# java -version
java version "1.8.0_231"
Java(TM) SE Runtime Environment (build 1.8.0_231-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.231-b11, mixed mode)
```

The output shows that Java is configured correctly.

Repeat the JDK installation and configuration on the other two nodes.
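The same lines must go into /etc/profile on all three nodes, and the Hadoop variables in section 6.2 follow the same pattern. A small helper that appends a line only when it is not already present prevents duplicate entries if a step is repeated. The sketch below is written in bash, and its function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a profile file only if that exact line is missing.
append_once() {
  local file=$1 line=$2
  # -F: fixed string, -x: the whole line must match
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

setup_java_env() {
  local profile=${1:-/etc/profile}
  # single quotes keep $PATH and $JAVA_HOME literal in the file
  append_once "$profile" 'export JAVA_HOME=/opt/module/jdk1.8.0_231'
  append_once "$profile" 'export PATH=$PATH:$JAVA_HOME/bin'
}

setup_hadoop_env() {
  local profile=${1:-/etc/profile}
  append_once "$profile" 'export HADOOP_HOME=/opt/module/hadoop-2.7.2'
  append_once "$profile" 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
}
```

After calling it, run `source /etc/profile` in the current shell as before.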

5. Set up the ZooKeeper cluster

Note: all software in this tutorial is installed under /opt/module; adjust the path to suit your own conventions.

5.1 Extract the ZooKeeper package

```shell
[root@hadoop001 software]# tar -zxvf zookeeper-3.4.10.tar.gz -C /opt/module/
[root@hadoop001 module]# ll
total 8
drwxr-xr-x 7 10 143 4096 Oct 5 2019 jdk1.8.0_231
drwxr-xr-x 10 1001 1001 4096 Mar 23 2017 zookeeper-3.4.10
```

5.2 配置zookeeper

(1)、在/opt/module/zookeeper-3.4.10下创建zkData目录

[root@hadoop001 zookeeper-3.4.10]# mkdir zkData

(2)、将/opt/module/zookeeper-3.4.10/conf这个目录下的zoo_sample.cfg修改为zoo.cfg

[root@hadoop001 conf]# ll

total 12

-rw-rw-r-- 1 1001 1001 535 Mar 23 2017 configuration.xsl

-rw-rw-r-- 1 1001 1001 2161 Mar 23 2017 log4j.properties

-rw-rw-r-- 1 1001 1001 922 Mar 23 2017 zoo_sample.cfg

[root@hadoop001 conf]# mv zoo_sample.cfg zoo.cfg

[root@hadoop001 conf]#

(3)、配置zoo.cfg

# 修改dataDir

dataDir=/opt/module/zookeeper-3.4.10/zkData

# 添加集群配置

server.1=hadoop001:2888:3888

server.2=hadoop002:2888:3888

server.3=hadoop003:2888:3888

(4)、在zkData中创建myid文件

[root@hadoop001 conf]# cd /opt/module/zookeeper-3.4.10/zkData/

# 进入zkData下面创建

[root@hadoop001 zkData]# touch myid

[root@hadoop001 zkData]# vim myid

# myid中添加服务器编号,也就上面zoo.cfg中server.后面的数字

(5)、将zookeeper分发到其他两个节点

[root@hadoop001 module]# scp -r zookeeper-3.4.10/ hadoop002:`pwd`

(6)、修改各个节点对应的myid中的值

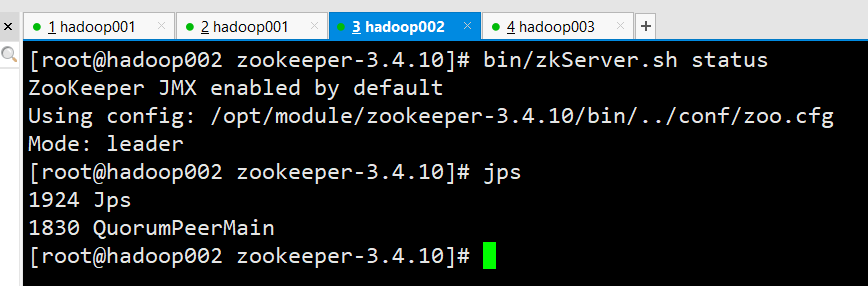
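Steps (3), (4) and (6) follow one rule: the number in a node's myid must equal the N in that node's `server.N` line. The sketch below derives both from a single host list. It assumes the numbering follows the list order used above, and its function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: derive zoo.cfg server lines and each node's myid from one list.
# Assumption: server numbers follow the list order (hadoop001 -> 1, ...).
ZK_NODES=(hadoop001 hadoop002 hadoop003)

# Prints the server.N lines to paste into zoo.cfg.
zoo_server_lines() {
  local i
  for i in "${!ZK_NODES[@]}"; do
    # 2888: followers talk to the leader; 3888: leader election
    printf 'server.%d=%s:2888:3888\n' "$((i + 1))" "${ZK_NODES[$i]}"
  done
}

# Writes the myid file for host $2 into the data directory $1.
write_myid() {
  local data_dir=$1 host=$2 i
  for i in "${!ZK_NODES[@]}"; do
    if [ "${ZK_NODES[$i]}" = "$host" ]; then
      printf '%d\n' "$((i + 1))" > "$data_dir/myid"
      return 0
    fi
  done
  echo "unknown host: $host" >&2
  return 1
}
```

On each node, run `write_myid /opt/module/zookeeper-3.4.10/zkData "$(hostname)"`.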

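5.3 Start ZooKeeper

Run the following on all three nodes:

```shell
[root@hadoop001 zookeeper-3.4.10]# bin/zkServer.sh start
```

Every node now shows a QuorumPeerMain process in `jps`, and `bin/zkServer.sh status` shows that a leader has been elected. The ZooKeeper cluster is installed.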
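Checking each node by hand works. The sketch below collects `zkServer.sh status` from every node and verifies that the ensemble has exactly one leader and that every node reports a mode. It assumes passwordless root SSH and the install path used above, and its function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: verify that the ZooKeeper ensemble has exactly one leader.
ZK_HOME=/opt/module/zookeeper-3.4.10
ZK_NODES=(hadoop001 hadoop002 hadoop003)

# Prints "<node>: <status line>" for every node.
collect_status() {
  local node
  for node in "${ZK_NODES[@]}"; do
    # non-interactive SSH shells do not read /etc/profile (JAVA_HOME), so source it
    ssh "root@$node" "source /etc/profile; $ZK_HOME/bin/zkServer.sh status" 2>&1 | sed "s/^/$node: /"
  done
}

# Reads status lines on stdin; succeeds if there is exactly one leader
# and leaders + followers equals the expected node count $1.
check_quorum() {
  awk -v want="$1" '
    /Mode: leader/   { leaders++ }
    /Mode: follower/ { followers++ }
    END { exit !(leaders == 1 && leaders + followers == want) }'
}
```

Usage: `collect_status | check_quorum 3 && echo "ZooKeeper quorum OK"`.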

6. Set up Hadoop HA

6.1 Extract Hadoop

```shell
[root@hadoop001 software]# tar -zxvf hadoop-2.7.2.tar.gz -C /opt/module/
```

6.2 Configure the environment variables

```shell
[root@hadoop001 software]# cd /opt/module/hadoop-2.7.2/
[root@hadoop001 hadoop-2.7.2]# pwd
/opt/module/hadoop-2.7.2
[root@hadoop001 hadoop-2.7.2]# vim /etc/profile
# append at the end of /etc/profile
export HADOOP_HOME=/opt/module/hadoop-2.7.2
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
# run source so that the settings take effect
[root@hadoop001 hadoop-2.7.2]# source /etc/profile
[root@hadoop001 hadoop-2.7.2]# hadoop version
Hadoop 2.7.2
Subversion Unknown -r Unknown
Compiled by root on 2017-05-22T10:49Z
Compiled with protoc 2.5.0
From source with checksum d0fda26633fa762bff87ec759ebe689c
This command was run using /opt/module/hadoop-2.7.2/share/hadoop/common/hadoop-common-2.7.2.jar
```

The output shows that the configuration is complete.

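6.3 Edit the configuration files

```shell
# go to the configuration directory
[root@hadoop001 hadoop-2.7.2]# cd etc/hadoop/
[root@hadoop001 hadoop]# pwd
/opt/module/hadoop-2.7.2/etc/hadoop
```

(1) Edit hadoop-env.sh

JAVA_HOME is set explicitly here because daemons started over SSH do not read /etc/profile.

```shell
[root@hadoop001 hadoop]# vim hadoop-env.sh
# set JAVA_HOME
export JAVA_HOME=/opt/module/jdk1.8.0_231
# set HADOOP_CONF_DIR
export HADOOP_CONF_DIR=/opt/module/hadoop-2.7.2/etc/hadoop
```

(2) Edit the slaves file, which lists the hosts that run a DataNode and a NodeManager

```shell
[root@hadoop001 hadoop]# vim slaves
# contents of slaves:
hadoop001
hadoop002
hadoop003
```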

(3) Configure core-site.xml

Add the following inside the `<configuration>` element:

```xml
<!-- Default file system: the logical HA nameservice "ns" defined in hdfs-site.xml, not a single NameNode host -->
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://ns</value>
</property>
<!-- Directory where Hadoop stores its data -->
<property>
  <name>hadoop.tmp.dir</name>
  <value>/opt/module/hadoop-2.7.2/data</value>
</property>
<!-- ZooKeeper ensemble used for automatic failover -->
<property>
  <name>ha.zookeeper.quorum</name>
  <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>
</property>
```

(4) Edit hdfs-site.xml

Add the following inside the `<configuration>` element:

```xml
<!-- Logical name of the nameservice; must match the authority in fs.defaultFS (hdfs://ns) -->
<property>
  <name>dfs.nameservices</name>
  <value>ns</value>
</property>
<!-- The ns nameservice has two NameNodes, nn1 and nn2 -->
<property>
  <name>dfs.ha.namenodes.ns</name>
  <value>nn1,nn2</value>
</property>
<!-- RPC address of nn1 -->
<property>
  <name>dfs.namenode.rpc-address.ns.nn1</name>
  <value>hadoop001:9000</value>
</property>
<!-- HTTP address of nn1 -->
<property>
  <name>dfs.namenode.http-address.ns.nn1</name>
  <value>hadoop001:50070</value>
</property>
<!-- RPC address of nn2 -->
<property>
  <name>dfs.namenode.rpc-address.ns.nn2</name>
  <value>hadoop002:9000</value>
</property>
<!-- HTTP address of nn2 -->
<property>
  <name>dfs.namenode.http-address.ns.nn2</name>
  <value>hadoop002:50070</value>
</property>
<!-- Where the NameNodes keep the shared edit log on the JournalNodes; the standby NameNode reads the edits from here to stay in sync (hot standby) -->
<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://hadoop001:8485;hadoop002:8485;hadoop003:8485/ns</value>
</property>
<!-- Local directory where each JournalNode stores its data -->
<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/opt/module/hadoop-2.7.2/data/journal</value>
</property>
<!-- Enable automatic NameNode failover -->
<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>
<!-- Class HDFS clients use to find the active NameNode -->
<property>
  <name>dfs.client.failover.proxy.provider.ns</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<!-- Fencing method: sshfence logs in to the old active NameNode over SSH and kills it with fuser (from the psmisc package) -->
<property>
  <name>dfs.ha.fencing.methods</name>
  <value>sshfence</value>
</property>
<!-- Private key for the passwordless SSH login that sshfence needs -->
<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/root/.ssh/id_rsa</value>
</property>
<!-- NameNode metadata directory; optional, defaults to a directory under hadoop.tmp.dir -->
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:///opt/module/hadoop-2.7.2/data/hdfs/name</value>
</property>
<!-- DataNode block storage directory; optional, defaults to a directory under hadoop.tmp.dir -->
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:///opt/module/hadoop-2.7.2/data/hdfs/data</value>
</property>
<!-- Number of replicas per block -->
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
<!-- false disables permission checking, so any user can operate on any file (dfs.permissions is the older name of dfs.permissions.enabled) -->
<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>
```
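The same host list appears in three values: `ha.zookeeper.quorum`, `yarn.resourcemanager.zk-address` (in yarn-site.xml) and the `qjournal://` URI. The sketch below builds all of them from one list, so they cannot drift apart when a node is added. Note that the JournalNode URI separates hosts with `;`, while the ZooKeeper list uses `,`. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: build the host-list values of core-site.xml / hdfs-site.xml
# from a single node list.
NODES=(hadoop001 hadoop002 hadoop003)

# Joins "host:port" for every node with separator $2.
join_hosts() {
  local port=$1 sep=$2 out="" node
  for node in "${NODES[@]}"; do
    # ${out:+$sep} adds the separator only after the first entry
    out+="${out:+$sep}$node:$port"
  done
  printf '%s\n' "$out"
}

# Value for ha.zookeeper.quorum and yarn.resourcemanager.zk-address
zk_quorum() { join_hosts 2181 ,; }

# Value for dfs.namenode.shared.edits.dir; $1 is the nameservice (ns)
shared_edits_dir() { printf 'qjournal://%s/%s\n' "$(join_hosts 8485 ';')" "$1"; }
```

For example, `shared_edits_dir ns` prints `qjournal://hadoop001:8485;hadoop002:8485;hadoop003:8485/ns`, which is the value configured above.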

(5) Edit mapred-site.xml

```shell
[root@hadoop001 hadoop]# mv mapred-site.xml.template mapred-site.xml
[root@hadoop001 hadoop]# vim mapred-site.xml
```

Add the following inside the `<configuration>` element:

```xml
<!-- Run MapReduce jobs on YARN -->
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
```

(6) Edit yarn-site.xml

Add the following inside the `<configuration>` element:

```xml
<!-- Enable ResourceManager HA -->
<property>
  <name>yarn.resourcemanager.ha.enabled</name>
  <value>true</value>
</property>
<!-- IDs of the two ResourceManagers -->
<property>
  <name>yarn.resourcemanager.ha.rm-ids</name>
  <value>rm1,rm2</value>
</property>
<!-- Host of rm1 -->
<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>hadoop001</value>
</property>
<!-- Host of rm2 -->
<property>
  <name>yarn.resourcemanager.hostname.rm2</name>
  <value>hadoop003</value>
</property>
<!-- Enable ResourceManager state recovery -->
<property>
  <name>yarn.resourcemanager.recovery.enabled</name>
  <value>true</value>
</property>
<!-- State-store implementation used for recovery (keeps the RM state in ZooKeeper) -->
<property>
  <name>yarn.resourcemanager.store.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
</property>
<!-- ZooKeeper addresses -->
<property>
  <name>yarn.resourcemanager.zk-address</name>
  <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>
</property>
<!-- Cluster ID of the YARN HA pair -->
<property>
  <name>yarn.resourcemanager.cluster-id</name>
  <value>ns-yarn</value>
</property>
<!-- Auxiliary service the NodeManagers load: the MapReduce shuffle server -->
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<!-- ResourceManager host (non-HA form; with HA enabled, the rm1/rm2 hostnames above are what determine the addresses) -->
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>hadoop003</value>
</property>
```

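6.4 Distribute Hadoop

```shell
# copy to hadoop002 and hadoop003
[root@hadoop001 module]# scp -r hadoop-2.7.2/ hadoop002:`pwd`
[root@hadoop001 module]# scp -r hadoop-2.7.2/ hadoop003:`pwd`
```

Then configure the environment variables (HADOOP_HOME and PATH in /etc/profile) on the other two machines.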
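Every node must run with identical configuration files, so after each `scp` it is worth confirming that the copies match. This sketch assumes passwordless root SSH and the same install path on every node, and its function names are illustrative. `collect_checksums` gathers `md5sum` output from all nodes, and `report_mismatches` lists the files that differ.

```shell
#!/usr/bin/env bash
# Sketch: check that the Hadoop config files are identical on all nodes.
HADOOP_CONF=/opt/module/hadoop-2.7.2/etc/hadoop
NODES=(hadoop001 hadoop002 hadoop003)
FILES=(core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml slaves hadoop-env.sh)

# Prints "<node> <md5> <file>" for each config file on each node.
collect_checksums() {
  local node
  for node in "${NODES[@]}"; do
    ssh "root@$node" "cd $HADOOP_CONF && md5sum ${FILES[*]}" | sed "s/^/$node /"
  done
}

# Reads "<node> <md5> <file>" lines; prints every file whose checksum
# differs between nodes and exits non-zero if there is any.
report_mismatches() {
  awk '!($3 in first) { first[$3] = $2 ""; next }
       first[$3] != ($2 "") { bad[$3] = 1 }
       END { n = 0; for (f in bad) { print "MISMATCH " f; n++ }; exit (n > 0) }'
}
```

Usage: `collect_checksums | report_mismatches && echo "configs identical"`.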

6.5 启动hadoopHA集群

注意: zookeeper需要先开启

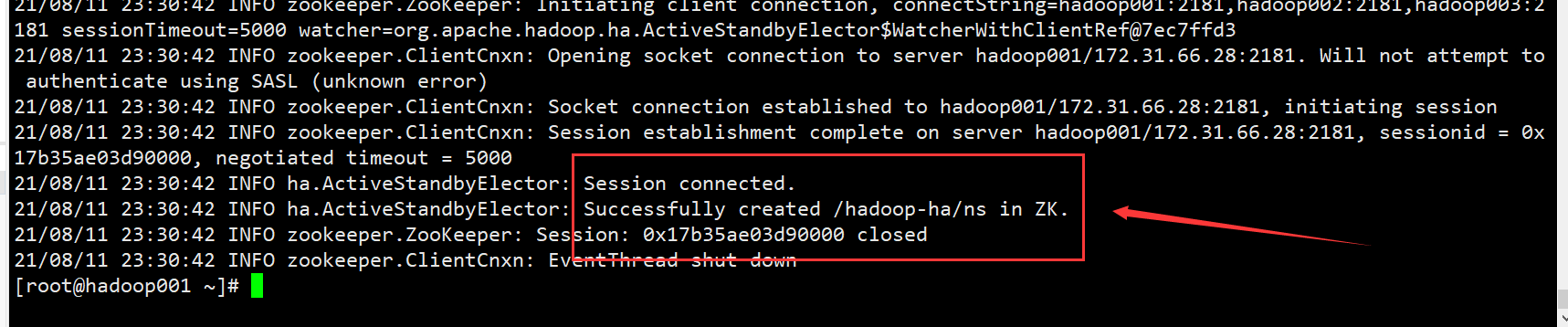

(1)、在hadoop001上格式化Zookeeper

[root@hadoop001 ~]# hdfs zkfc -formatZK

出现上面这种,说明成功

(2)、在三台节点上启动journalNode

[root@hadoop001 ~]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-root-journalnode-hadoop001.out

[root@hadoop001 ~]# jps

4945 Jps

2418 QuorumPeerMain

4890 JournalNode

[root@hadoop001 ~]#

注意: 三台机器都要启动

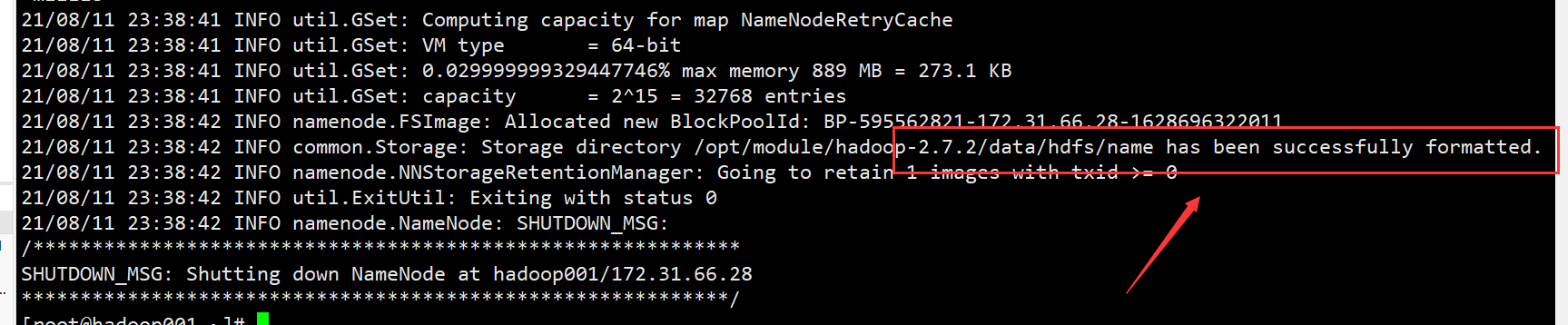

(3)、在hadoop001上执行格式化指令并启动namenode

[root@hadoop001 ~]# hadoop namenode -format

执行成功

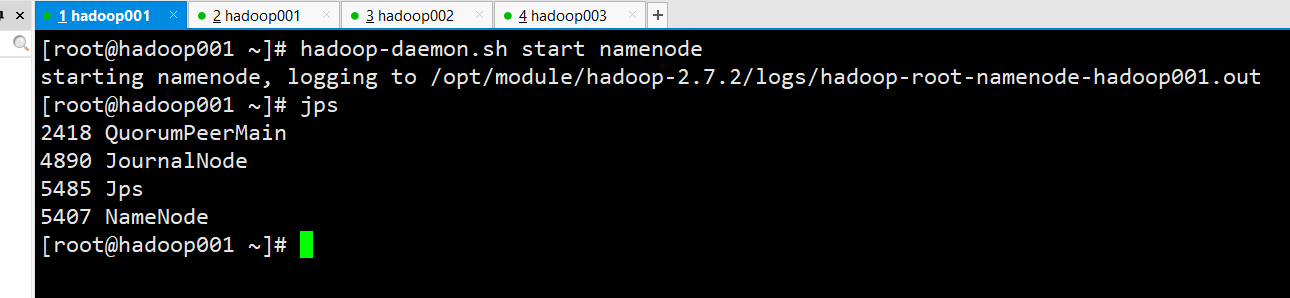

启动namenode

[root@hadoop001 ~]# hadoop-daemon.sh start namenode

starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-root-namenode-hadoop001.out

[root@hadoop001 ~]# jps

2418 QuorumPeerMain

4890 JournalNode

5485 Jps

5407 NameNode

[root@hadoop001 ~]#

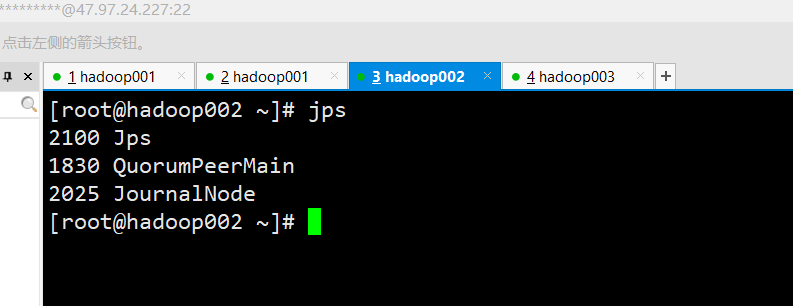

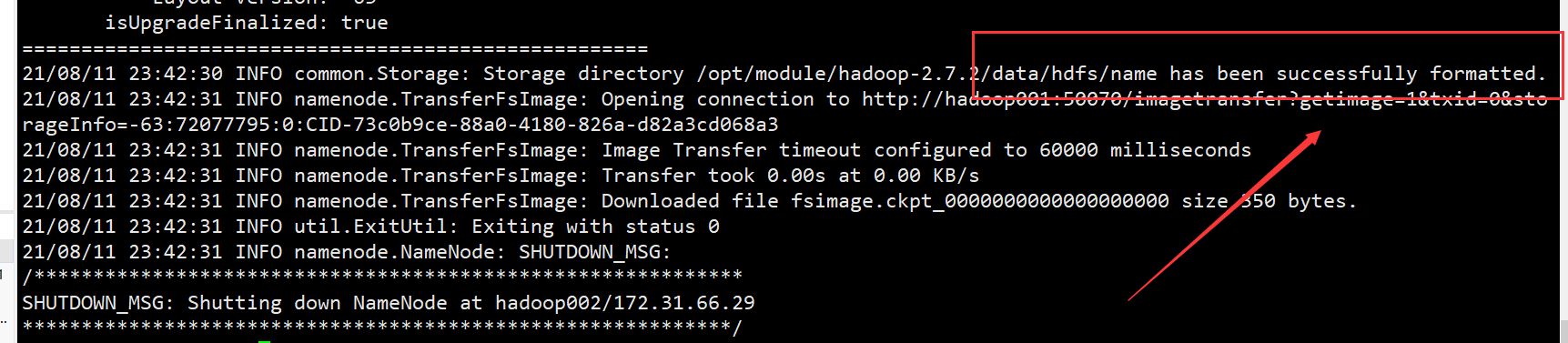

(4)、在hadoop002上执行格式化指令并启动namenode

[root@hadoop002 ~]# hadoop namenode -bootstrapStandBy

格式化成功

启动namenode:

[root@hadoop002 ~]# hadoop-daemon.sh start namenode

starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-root-namenode-hadoop002.out

[root@hadoop002 ~]# jps

2256 Jps

1830 QuorumPeerMain

2182 NameNode

2025 JournalNode

[root@hadoop002 ~]#

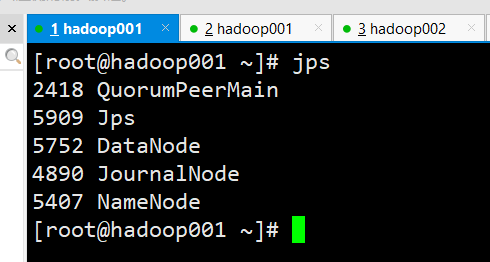

(5) Start the DataNodes on all three nodes

```shell
# run on all three machines
[root@hadoop001 ~]# hadoop-daemon.sh start datanode
```

(6) Start the ZKFailoverController (ZKFC) on hadoop001 and hadoop002

```shell
[root@hadoop001 ~]# hadoop-daemon.sh start zkfc
starting zkfc, logging to /opt/module/hadoop-2.7.2/logs/hadoop-root-zkfc-hadoop001.out
[root@hadoop001 ~]# jps
2418 QuorumPeerMain
5990 DFSZKFailoverController
5752 DataNode
4890 JournalNode
6074 Jps
5407 NameNode
```

Run the same command on hadoop002.

(7) Run start-yarn.sh on hadoop003

This starts the ResourceManager on the local machine (hadoop003) and a NodeManager on every host listed in slaves:

```shell
[root@hadoop003 ~]# start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-root-resourcemanager-hadoop003.out
hadoop002: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-root-nodemanager-hadoop002.out
hadoop001: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-root-nodemanager-hadoop001.out
hadoop003: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-root-nodemanager-hadoop003.out
[root@hadoop003 ~]# jps
1856 QuorumPeerMain
2609 Jps
2214 ResourceManager
2007 JournalNode
2089 DataNode
2319 NodeManager
```

(8)、在hadoop001上执行yarn-daemon.sh start resourcemanager

[root@hadoop001 ~]# yarn-daemon.sh start resourcemanager

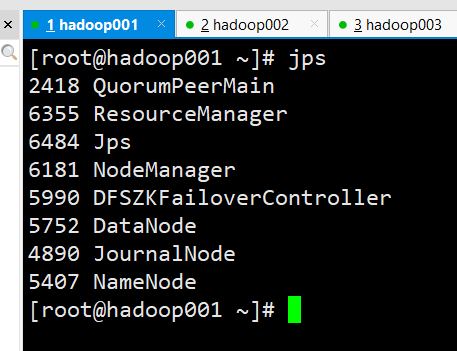

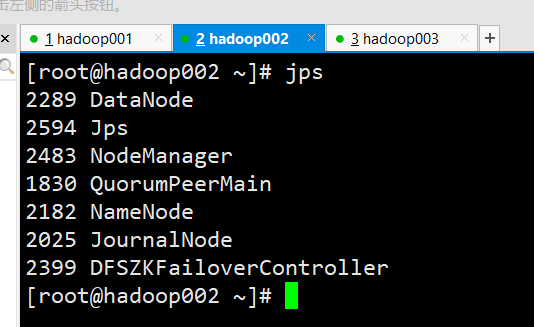

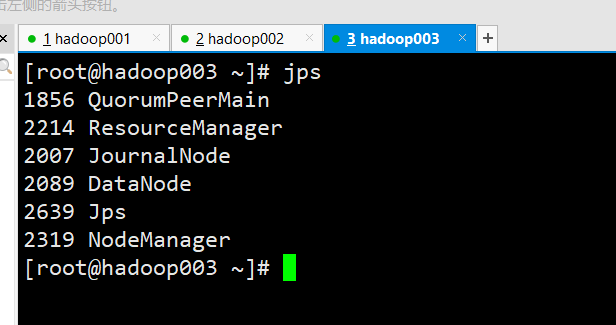
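Steps (1) to (8) must run in exactly this order on specific hosts. The sketch below collects them into one bash function. It uses the `hdfs namenode` form of the commands; `hadoop namenode` still works in 2.7.2 but prints a deprecation warning. It assumes passwordless root SSH, a running ZooKeeper ensemble, and the environment variables from /etc/profile. By default (`DRY_RUN=1`) it only prints each `host: command` pair. Run it for real only once, on a fresh cluster, because it formats the NameNode.

```shell
#!/usr/bin/env bash
# Sketch: the first-start order of section 6.5 as one function.
# DRY_RUN=1 (default) prints "host: command"; DRY_RUN=0 runs it over SSH.
DRY_RUN=${DRY_RUN:-1}
NODES=(hadoop001 hadoop002 hadoop003)

on() {
  local host=$1; shift
  if [ "$DRY_RUN" = 1 ]; then
    echo "$host: $*"
  else
    # non-interactive SSH shells do not read /etc/profile, so source it
    ssh "root@$host" "source /etc/profile; $*" || { echo "failed on $host: $*" >&2; return 1; }
  fi
}

first_start() {
  local h
  on hadoop001 hdfs zkfc -formatZK || return 1                  # (1)
  for h in "${NODES[@]}"; do
    on "$h" hadoop-daemon.sh start journalnode || return 1      # (2)
  done
  on hadoop001 hdfs namenode -format || return 1                # (3)
  on hadoop001 hadoop-daemon.sh start namenode || return 1
  on hadoop002 hdfs namenode -bootstrapStandby || return 1      # (4)
  on hadoop002 hadoop-daemon.sh start namenode || return 1
  for h in "${NODES[@]}"; do
    on "$h" hadoop-daemon.sh start datanode || return 1         # (5)
  done
  on hadoop001 hadoop-daemon.sh start zkfc || return 1          # (6)
  on hadoop002 hadoop-daemon.sh start zkfc || return 1
  on hadoop003 start-yarn.sh || return 1                        # (7)
  on hadoop001 yarn-daemon.sh start resourcemanager             # (8)
}
```

Run `first_start` once to review the plan, then run `DRY_RUN=0 first_start`. The function stops at the first command that fails.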

(9)、最终节点状态

hadoop001: (8个进程)

hadoop002: (7个进程)

hadoop003: (6个进程)

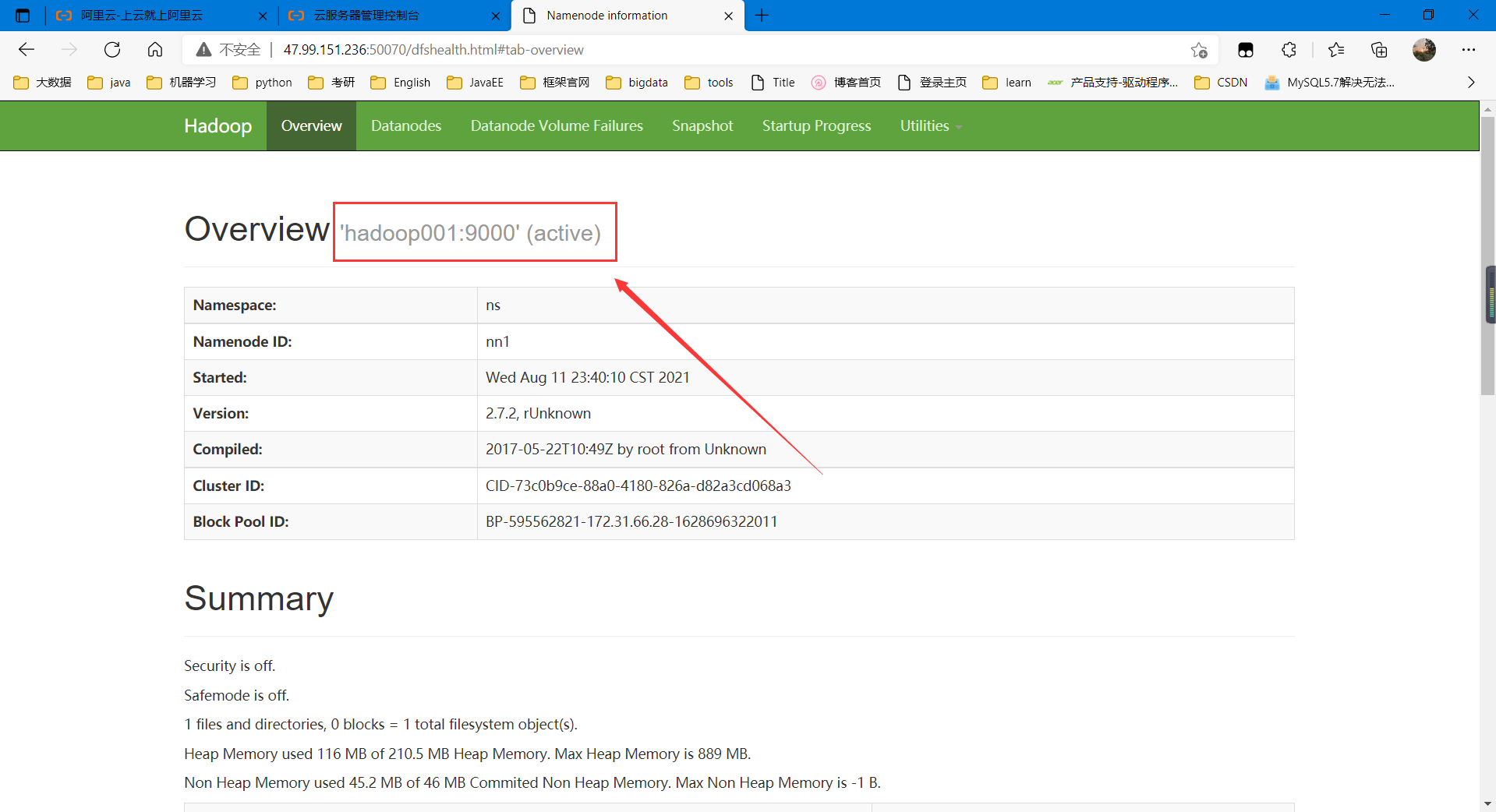

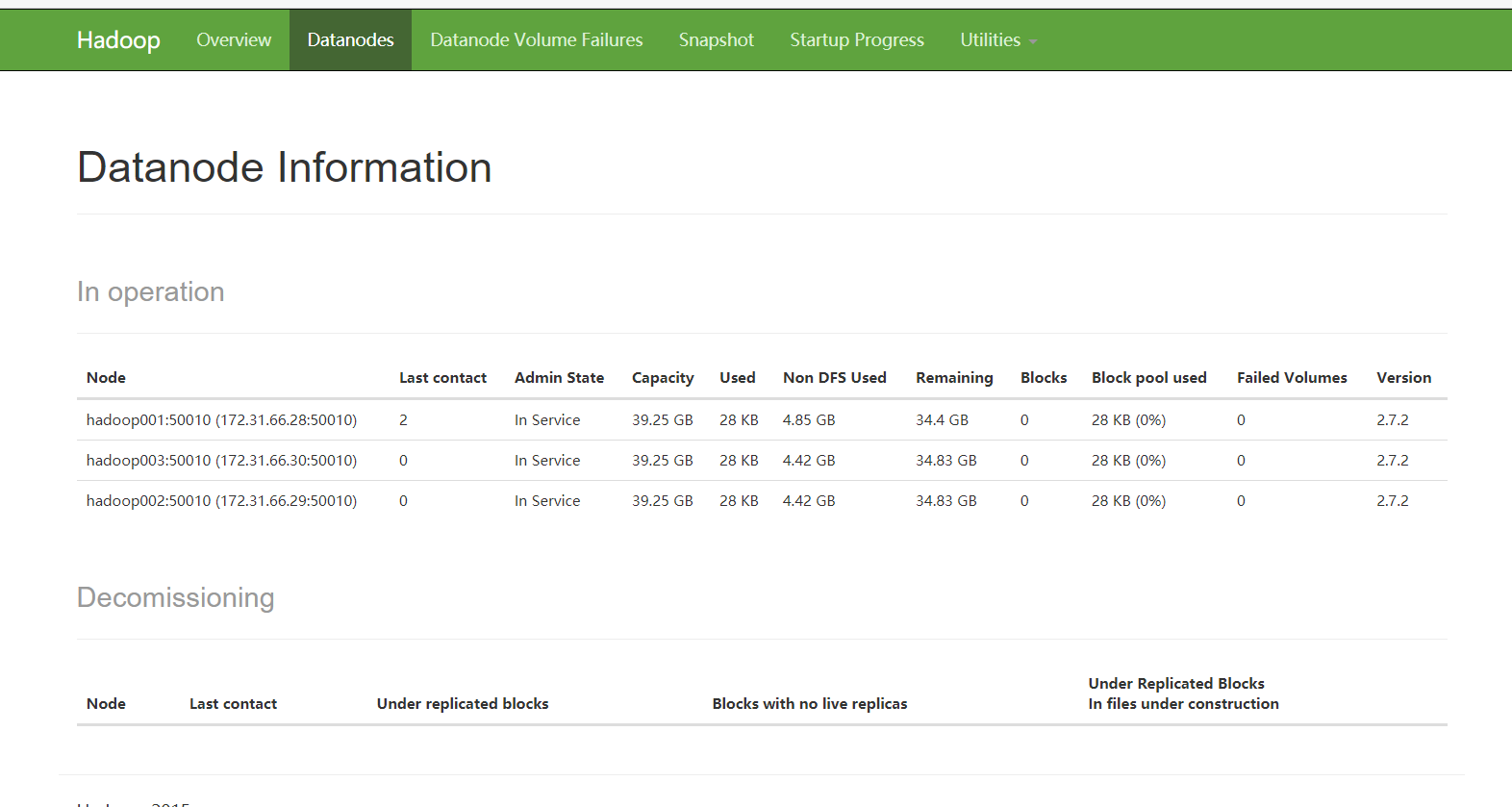
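Rather than reading `jps` output by eye, you can compare it against the daemons each host should run under the configuration above. Jps itself is ignored. In this sketch the expected lists are written out by hand, so update them if you change the layout. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: report daemons missing from `jps` output on a given host.
expected_daemons() {
  case $1 in
    hadoop001) echo QuorumPeerMain NameNode DataNode JournalNode DFSZKFailoverController ResourceManager NodeManager ;;
    hadoop002) echo QuorumPeerMain NameNode DataNode JournalNode DFSZKFailoverController NodeManager ;;
    hadoop003) echo QuorumPeerMain JournalNode DataNode ResourceManager NodeManager ;;
    *) return 1 ;;
  esac
}

# Reads jps output on stdin; prints each expected daemon that is absent
# and returns 1 if anything is missing.
missing_daemons() {
  local expected running d missing=0
  expected=$(expected_daemons "$1") || { echo "unknown host: $1" >&2; return 2; }
  running=$(awk '{ print $2 }')
  for d in $expected; do
    if ! grep -qx -- "$d" <<< "$running"; then
      echo "$d"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage on a node: `jps | missing_daemons "$(hostname)" && echo "all daemons running"`.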

6.6 hadoopweb ui界面

这里需要先去阿里云的安全组开放50070端口,然后才能访问。

上面可以看出,各个组件的webui均能访问。

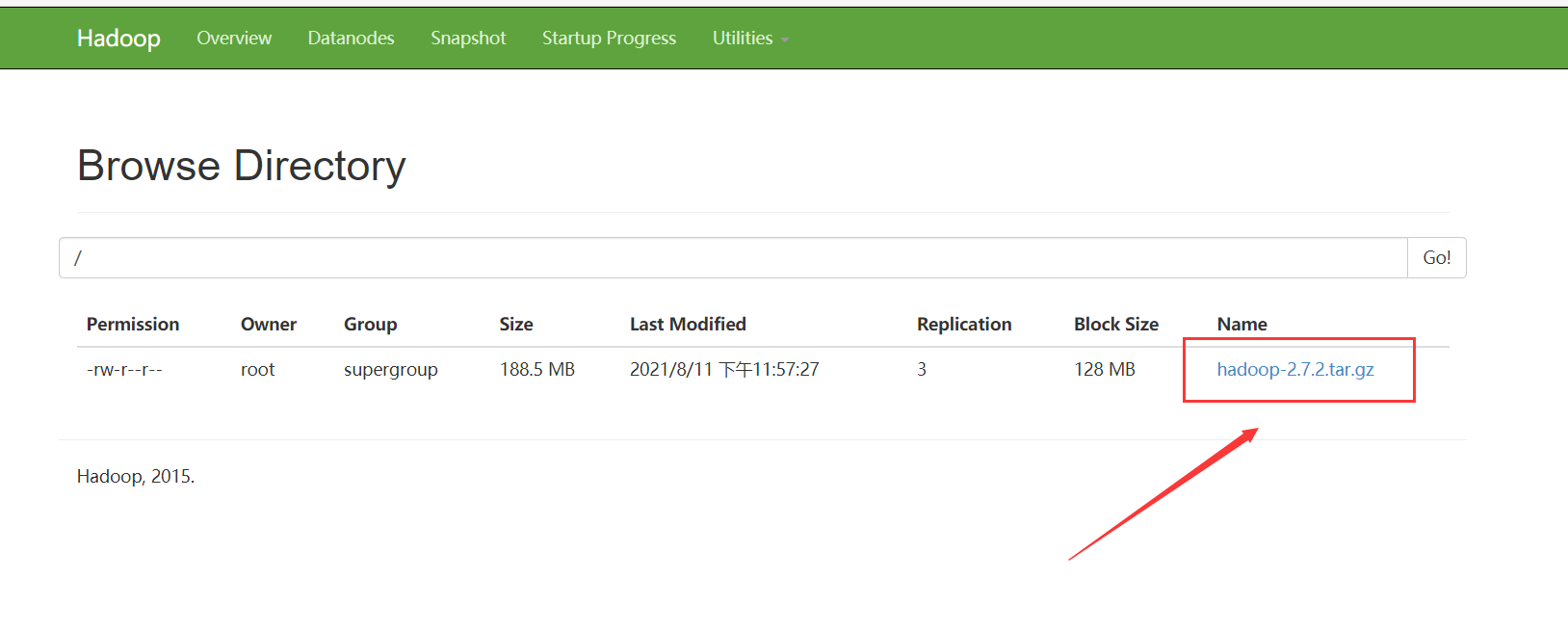
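The active/standby roles can also be checked from the command line instead of the web UI. `hdfs haadmin -getServiceState <id>` and `yarn rmadmin -getServiceState <id>` each print `active` or `standby` for one NameNode or ResourceManager ID. The sketch below queries nn1/nn2 and rm1/rm2 and checks that each layer has exactly one active member. It assumes it runs on a cluster node with the Hadoop commands on the PATH, and its function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: print and check the HA roles of the NameNodes and ResourceManagers.

# Prints "<layer> <id> <state>" lines, e.g. "hdfs nn1 active".
ha_states() {
  local id
  for id in nn1 nn2; do
    printf 'hdfs %s %s\n' "$id" "$(hdfs haadmin -getServiceState "$id" 2>/dev/null || echo unreachable)"
  done
  for id in rm1 rm2; do
    printf 'yarn %s %s\n' "$id" "$(yarn rmadmin -getServiceState "$id" 2>/dev/null || echo unreachable)"
  done
}

# Reads "<layer> <id> <state>" lines; fails unless every layer has exactly one active.
check_one_active() {
  awk '$3 == "active" { active[$1]++ } { layers[$1] = 1 }
       END { for (l in layers) if (active[l] != 1) { print l ": expected one active, got " active[l] + 0; bad = 1 }; exit bad }'
}
```

Usage: `ha_states | check_one_active && echo "HA roles OK"`. One way to watch automatic failover in action is to stop the active NameNode (`hadoop-daemon.sh stop namenode` on that host) and run the check again.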

6.7 测试hdfs集群

我们上传一些文件:

# 使用put指令上传hadoop安装包

[root@hadoop001 software]# hadoop fs -put hadoop-2.7.2.tar.gz /

[root@hadoop001 software]# hadoop fs -ls /

Found 1 items

-rw-r--r-- 3 root supergroup 197657687 2021-08-11 23:57 /hadoop-2.7.2.tar.gz

[root@hadoop001 software]#

webui查看

上传成功

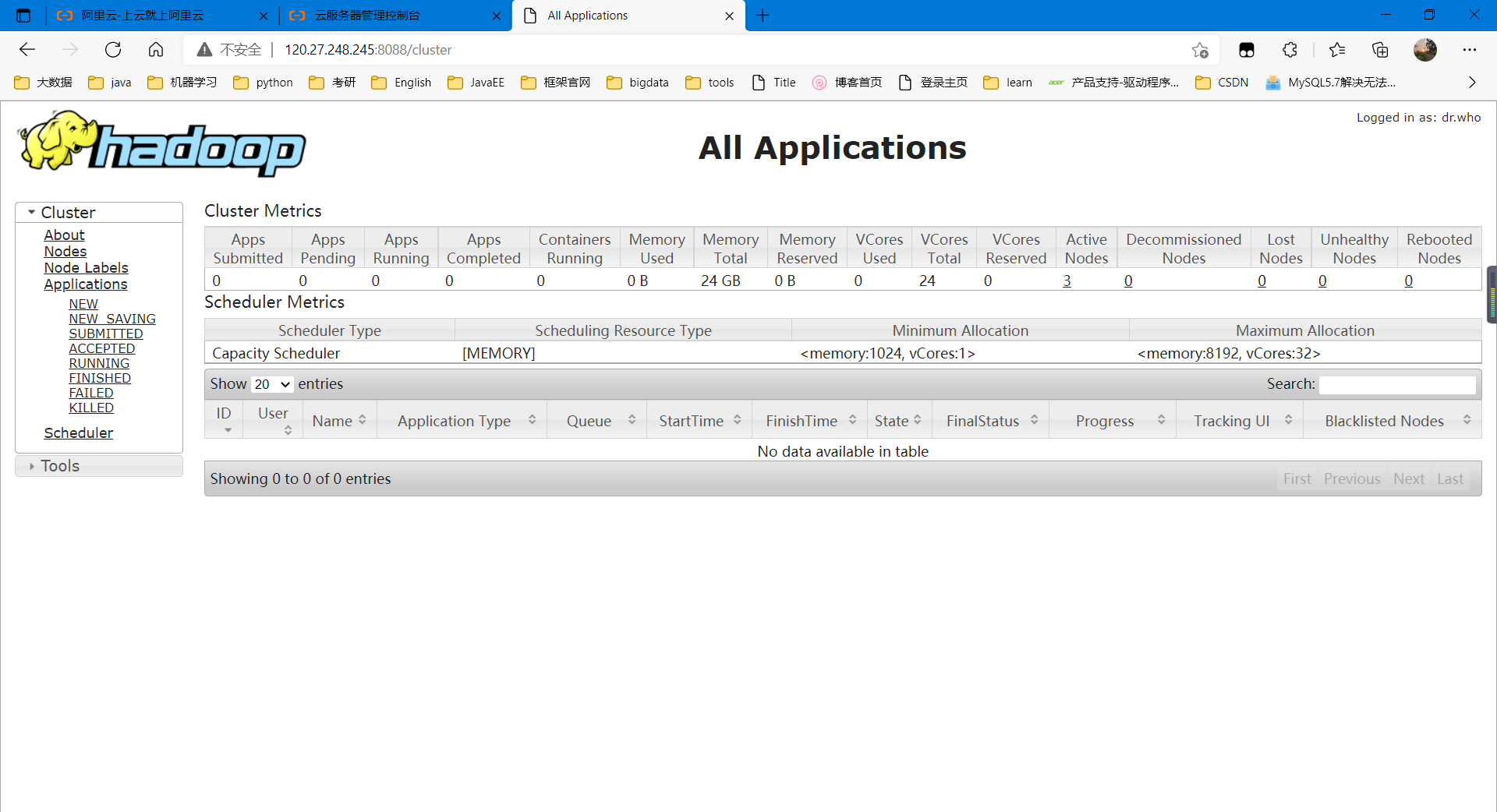
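In `hadoop fs -ls` output, the second column is the replication factor recorded for each file: 3 here, taken from `dfs.replication`. The sketch below flags files whose factor differs from the expected value. This column shows the target factor, not the number of live replicas; `hdfs fsck <path> -files -blocks -locations` reports those. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: flag files in `hadoop fs -ls` output whose replication factor
# (2nd column; directories show "-") differs from the expected value $1.
replication_mismatch() {
  awk -v want="$1" '$1 ~ /^-/ && $2 != want { print $NF " replication=" $2; bad = 1 }
                    END { exit bad }'
}
```

Usage: `hadoop fs -ls / | replication_mismatch 3 && echo "replication OK"`.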

6.8 Test YARN

Run a MapReduce job:

```shell
# estimate pi: 20 map tasks, 20 samples per map
[root@hadoop001 hadoop-2.7.2]# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar pi 20 20
Number of Maps = 20
Samples per Map = 20
Wrote input for Map #0
Wrote input for Map #1
Wrote input for Map #2
Wrote input for Map #3
Wrote input for Map #4
Wrote input for Map #5
Wrote input for Map #6
Wrote input for Map #7
Wrote input for Map #8
Wrote input for Map #9
Wrote input for Map #10
Wrote input for Map #11
Wrote input for Map #12
Wrote input for Map #13
Wrote input for Map #14
Wrote input for Map #15
Wrote input for Map #16
Wrote input for Map #17
Wrote input for Map #18
Wrote input for Map #19
Starting Job
21/08/12 00:00:47 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2
21/08/12 00:00:48 INFO input.FileInputFormat: Total input paths to process : 20
21/08/12 00:00:48 INFO mapreduce.JobSubmitter: number of splits:20
21/08/12 00:00:48 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1628696898160_0001
21/08/12 00:00:48 INFO impl.YarnClientImpl: Submitted application application_1628696898160_0001
21/08/12 00:00:48 INFO mapreduce.Job: The url to track the job: http://hadoop003:8088/proxy/application_1628696898160_0001/
21/08/12 00:00:48 INFO mapreduce.Job: Running job: job_1628696898160_0001
21/08/12 00:00:55 INFO mapreduce.Job: Job job_1628696898160_0001 running in uber mode : false
21/08/12 00:00:55 INFO mapreduce.Job: map 0% reduce 0%
21/08/12 00:01:07 INFO mapreduce.Job: map 30% reduce 0%
21/08/12 00:01:09 INFO mapreduce.Job: map 45% reduce 0%
21/08/12 00:01:10 INFO mapreduce.Job: map 65% reduce 0%
21/08/12 00:01:11 INFO mapreduce.Job: map 100% reduce 0%
21/08/12 00:01:12 INFO mapreduce.Job: map 100% reduce 100%
21/08/12 00:01:13 INFO mapreduce.Job: Job job_1628696898160_0001 completed successfully
21/08/12 00:01:13 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=446
        FILE: Number of bytes written=2524447
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=5030
        HDFS: Number of bytes written=215
        HDFS: Number of read operations=83
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=3
    Job Counters
        Launched map tasks=20
        Launched reduce tasks=1
        Data-local map tasks=20
        Total time spent by all maps in occupied slots (ms)=253189
        Total time spent by all reduces in occupied slots (ms)=2746
        Total time spent by all map tasks (ms)=253189
        Total time spent by all reduce tasks (ms)=2746
        Total vcore-milliseconds taken by all map tasks=253189
        Total vcore-milliseconds taken by all reduce tasks=2746
        Total megabyte-milliseconds taken by all map tasks=259265536
        Total megabyte-milliseconds taken by all reduce tasks=2811904
    Map-Reduce Framework
        Map input records=20
        Map output records=40
        Map output bytes=360
        Map output materialized bytes=560
        Input split bytes=2670
        Combine input records=0
        Combine output records=0
        Reduce input groups=2
        Reduce shuffle bytes=560
        Reduce input records=40
        Reduce output records=0
        Spilled Records=80
        Shuffled Maps =20
        Failed Shuffles=0
        Merged Map outputs=20
        GC time elapsed (ms)=6425
        CPU time spent (ms)=6740
        Physical memory (bytes) snapshot=5434896384
        Virtual memory (bytes) snapshot=44580233216
        Total committed heap usage (bytes)=4205838336
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=2360
    File Output Format Counters
        Bytes Written=97
Job Finished in 26.222 seconds
Estimated value of Pi is 3.17000000000000000000
```

The job completed successfully. The line "Failing over to rm2" shows that the client first contacted rm1 on hadoop001, which is the standby, and then switched to the active rm2 on hadoop003 (the tracking URL points to hadoop003:8088). The estimate is rough because it is based on only 20 × 20 = 400 samples.

6.9 Stop the cluster

Run stop-all.sh on hadoop001:

```shell
[root@hadoop001 ~]# stop-all.sh
This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh
Stopping namenodes on [hadoop001 hadoop002]
hadoop002: stopping namenode
hadoop001: stopping namenode
hadoop002: stopping datanode
hadoop001: stopping datanode
hadoop003: stopping datanode
Stopping journal nodes [hadoop001 hadoop002 hadoop003]
hadoop003: stopping journalnode
hadoop001: stopping journalnode
hadoop002: stopping journalnode
Stopping ZK Failover Controllers on NN hosts [hadoop001 hadoop002]
hadoop002: stopping zkfc
hadoop001: stopping zkfc
stopping yarn daemons
stopping resourcemanager
hadoop003: stopping nodemanager
hadoop002: stopping nodemanager
hadoop001: stopping nodemanager
hadoop003: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
hadoop002: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
hadoop001: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
no proxyserver to stop
```

HDFS and the YARN daemons are now stopped. In Hadoop 2.7.2, stop-yarn.sh stops only the local ResourceManager (hadoop001), and stop-all.sh does not touch ZooKeeper. Stop the ResourceManager on hadoop003 with `yarn-daemon.sh stop resourcemanager`, and ZooKeeper on each node with `bin/zkServer.sh stop` if needed.

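7. Notes

1. The first start of the cluster involves one-time initialization, so it takes more steps.

After the first successful start, start the cluster by running start-all.sh on hadoop001:

```shell
[root@hadoop001 ~]# start-all.sh
```

In Hadoop 2.7.2, start-all.sh starts the ResourceManager only on the local machine, so also run `yarn-daemon.sh start resourcemanager` on hadoop003.

2. Always start the ZooKeeper cluster before starting the Hadoop HA cluster.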
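The routine (not first-time) start sequence can be sketched as one function. It starts ZooKeeper on every node first, then runs start-all.sh on hadoop001. Because start-yarn.sh in Hadoop 2.7.2 starts the ResourceManager only on the local machine, the sketch also starts rm2 on hadoop003 separately. It assumes passwordless root SSH and the paths used in this tutorial. By default (`DRY_RUN=1`) it only prints each `host: command` pair, and its function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: routine cluster start. DRY_RUN=1 (default) prints "host: command";
# DRY_RUN=0 runs the commands over SSH.
DRY_RUN=${DRY_RUN:-1}
ZK_HOME=/opt/module/zookeeper-3.4.10
NODES=(hadoop001 hadoop002 hadoop003)

on() {
  local host=$1; shift
  if [ "$DRY_RUN" = 1 ]; then
    echo "$host: $*"
  else
    # non-interactive SSH shells do not read /etc/profile, so source it
    ssh "root@$host" "source /etc/profile; $*" || { echo "failed on $host: $*" >&2; return 1; }
  fi
}

start_cluster() {
  local h
  # ZooKeeper first: the ZKFCs and the RM state store depend on it
  for h in "${NODES[@]}"; do
    on "$h" "$ZK_HOME/bin/zkServer.sh" start || return 1
  done
  # NameNodes, DataNodes, JournalNodes, ZKFCs, the local RM and NodeManagers
  on hadoop001 start-all.sh || return 1
  # the second ResourceManager (rm2) runs on hadoop003
  on hadoop003 yarn-daemon.sh start resourcemanager
}
```

Run `start_cluster` to review the plan, then run `DRY_RUN=0 start_cluster`.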
