Problems encountered when starting all Hadoop cluster servers at once

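I have recently been following the Hadoop 3.x video course published by the Bilibili uploader 尚硅谷 (Atguigu), and ran into the following problems when starting the whole cluster.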

*Environment: CentOS 7.5, Hadoop 3.x, operating as root, on two Tencent Cloud servers and one CTyun (天翼云) server.*

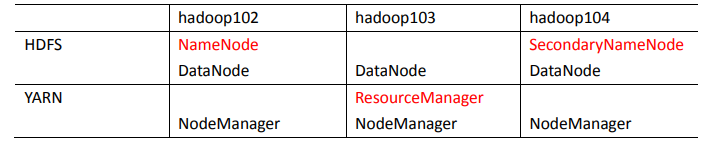

云服务器分配方式:

Problem 1: sbin/start-dfs.sh reports errors

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

These errors appeared after running "sbin/start-dfs.sh" on hadoop102.

Solution

Add the following lines at the top of both sbin/start-dfs.sh and sbin/stop-dfs.sh:

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

Add the following lines at the top of both sbin/start-yarn.sh and sbin/stop-yarn.sh:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

Then distribute the modified scripts to every server and run sbin/start-dfs.sh again.
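Editing four scripts by hand is easy to get wrong, so the step above can be sketched as a small helper. This is only an illustration: `add_user_vars` is a hypothetical name, `HADOOP_HOME` is assumed to point at the Hadoop install directory, and the helper inserts the lines right after the shebang so the first line of each script stays intact.

```shell
#!/usr/bin/env bash
# Sketch: insert variable definitions right after the first (shebang) line
# of a Hadoop start/stop script. add_user_vars is an illustrative helper,
# not part of Hadoop.

add_user_vars() {
  # $1 = script to patch; remaining arguments = VAR=value lines to insert
  local script="$1"; shift
  local tmp
  tmp="$(mktemp)"
  {
    head -n 1 "$script"      # keep the original first line (the shebang)
    printf '%s\n' "$@"       # one inserted line per argument
    tail -n +2 "$script"     # the rest of the original script, unchanged
  } > "$tmp"
  cat "$tmp" > "$script"     # overwrite contents, keeping file permissions
  rm -f "$tmp"
}

# Usage on a real install (assumes HADOOP_HOME is set):
# for f in start-dfs.sh stop-dfs.sh; do
#   add_user_vars "$HADOOP_HOME/sbin/$f" \
#     HDFS_DATANODE_USER=root HDFS_DATANODE_SECURE_USER=hdfs \
#     HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root
# done
# for f in start-yarn.sh stop-yarn.sh; do
#   add_user_vars "$HADOOP_HOME/sbin/$f" \
#     YARN_RESOURCEMANAGER_USER=root HADOOP_SECURE_DN_USER=yarn \
#     YARN_NODEMANAGER_USER=root
# done
```

The helper is not idempotent: running it twice on the same script inserts the lines twice, so it is meant to be run once per file.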

Problem 2: sbin/start-dfs.sh reports no errors, but the NameNode does not start.

The log file logs/hadoop-root-namenode-hadoop102.log contained the following:

2021-08-12 18:23:07,966 INFO org.apache.hadoop.http.HttpServer2: addJerseyResourcePackage: packageName=org.apache.hadoop.hdfs.server.namenode.web.resources;org.apache.hadoop.hdfs.web.resources, pathSpec=/webhdfs/v1/*

2021-08-12 18:23:07,976 INFO org.apache.hadoop.http.HttpServer2: HttpServer.start() threw a non Bind IOException

java.net.BindException: Port in use: hadoop102:9870

at org.apache.hadoop.http.HttpServer2.constructBindException(HttpServer2.java:1213)

at org.apache.hadoop.http.HttpServer2.bindForSinglePort(HttpServer2.java:1235)

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:1294)

at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:1149)

at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:181)

at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:881)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:703)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:949)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:922)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1688)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1755)

Caused by: java.net.BindException: Cannot assign requested address

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.Net.bind(Net.java:425)

Two lines in this output stand out:

“java.net.BindException: Port in use: hadoop102:9870”

“Caused by: java.net.BindException: Cannot assign requested address”

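At first I assumed the first line pointed to the root cause. However, after confirming that the firewall was disabled on every server and that the port was not in use, and after trying a number of related fixes, the problem was still there, so I gave up on the first line as a lead.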
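For reference, the two checks mentioned here can be sketched roughly as follows. The sketch assumes `ss` (from iproute2) and firewalld managed by `systemctl`, as on a default CentOS 7 install; `port_listening` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# port_listening PORT
# Reads `ss -ltn` output on stdin and succeeds if any socket listens on PORT.
# Illustrative helper, not part of Hadoop.
port_listening() {
  awk -v port="$1" '
    NR > 1 {                       # skip the header row printed by ss
      n = split($4, parts, ":")    # column 4 is "Local Address:Port"
      if (parts[n] == port) found = 1
    }
    END { exit !found }
  '
}

# Usage on each node:
# ss -ltn | port_listening 9870 && echo "port 9870 is already taken"
# systemctl is-active firewalld   # prints "inactive" if firewalld is stopped
```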

Instead, I turned to the second line.

The fix is described below.

Solution
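"Cannot assign requested address" generally means the process tried to bind to an IP address that is not configured on any local network interface. One way to check for that is to compare the address a hostname resolves to with the addresses the machine actually holds. The sketch below assumes `getent` and `hostname -I` are available (they are on CentOS 7); `is_local_ip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# is_local_ip ADDR
# Reads one local IP address per line on stdin and succeeds only if ADDR
# matches one of them exactly. Illustrative helper, not part of Hadoop.
is_local_ip() {
  grep -qxF -- "$1"
}

# Usage on the node itself (hadoop102 is the hostname from the log above):
# addr="$(getent hosts hadoop102 | awk '{ print $1; exit }')"
# hostname -I | tr ' ' '\n' | is_local_ip "$addr" \
#   && echo "$addr is configured on a local interface" \
#   || echo "$addr is not on any local interface, so binding to it fails"
```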

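In ./etc/hadoop/, edit core-site.xml, hdfs-site.xml, mapred-site.xml and yarn-site.xml, and replace every occurrence of hadoop102, hadoop103 and hadoop104 with the private (LAN) IP of the corresponding cloud server.

Then distribute the files to every server.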
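The replacement can also be scripted instead of done by hand. The following is a minimal sketch: `replace_host` is a hypothetical helper, and the 10.0.0.x addresses in the usage comment are placeholders that must be swapped for each server's real private IP.

```shell
#!/usr/bin/env bash
# replace_host FILE HOSTNAME IP
# Replaces every occurrence of HOSTNAME in FILE with IP.
# Illustrative helper, not part of Hadoop.
replace_host() {
  local file="$1" host="$2" ip="$3" tmp
  tmp="$(mktemp)"
  # Write to a temp file first so a failed sed never truncates the config.
  sed "s/$host/$ip/g" "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage (run from the Hadoop install directory; the IPs are placeholders):
# for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
#   replace_host "etc/hadoop/$f" hadoop102 10.0.0.2
#   replace_host "etc/hadoop/$f" hadoop103 10.0.0.3
#   replace_host "etc/hadoop/$f" hadoop104 10.0.0.4
# done
```

Backing up etc/hadoop/ before running something like this makes it easy to revert if a hostname also appears somewhere it should not be changed.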