I. ELK Log Analysis System

- ELK consists of three components:

①E: Elasticsearch

②L: Logstash

③K: Kibana

1. Log server

- Advantages: ①Improved security

②Centralized management

- Disadvantage: the logs are difficult to analyze

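2. Log processing steps

①Collect the logs for centralized management

②Format the logs (Logstash) and output them to Elasticsearch

③Index and store the formatted data (Elasticsearch)

④Display the data on the front end (Kibana)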

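II. Elasticsearch

- Provides a distributed, multi-tenant full-text search engine

- Can split an index into multiple shards. The number of shards is defined when the index is created; each shard is a fully functional, independent "index" that can reside on any node in the cluster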

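1. Features

①Near real-time

②Cluster

③Node

④Index

Index (database) → type (table) → document (record). This analogy applies to Elasticsearch 5.x, the version used here; mapping types were removed in later releases.

⑤Shards and replicas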

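2. Shards

- How shards are distributed, and how documents from a search request are aggregated, is controlled entirely by Elasticsearch and is transparent to the user

- Horizontal partitioning and scaling, which increases storage capacity

- Distributed, parallel operations across shards, which improves performance and throughput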

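3. Replicas

- High availability in the face of shard or node failure; for this reason a replica shard is placed on a different node from its primary

- Better performance and throughput, because searches can run in parallel on all replicas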
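As a rough illustration of how shard and replica counts are chosen when an index is created, the sketch below builds the settings body and sends it with `curl`. The helper names, the index name `demo-index` and the counts are hypothetical and not part of the original setup; `number_of_shards` and `number_of_replicas` are the standard Elasticsearch index settings.

```shell
# Build the JSON settings body for a new index.
# $1 = number of primary shards, $2 = number of replicas per primary.
index_settings_body() {
  printf '{"settings":{"number_of_shards":%d,"number_of_replicas":%d}}' "$1" "$2"
}

# Create an index with the given layout (needs a reachable cluster).
# $1 = host:port, $2 = index name (hypothetical), $3 = shards, $4 = replicas.
create_index() {
  curl -s -XPUT "http://$1/$2?pretty" \
       -H 'Content-Type: application/json' \
       -d "$(index_settings_body "$3" "$4")"
}
```

For example, `create_index localhost:9200 demo-index 5 1` would request five primary shards, each with one replica. The primary count cannot be changed in place once the index exists, while the replica count can be adjusted later.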

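III. Logstash

- A powerful data processing tool

- Handles data transport, format processing, and formatted output

- Covers data input, data processing (such as filtering and rewriting), and data output

It consists of three components: Input, Filter Plugin, and Output

- Input: collects the logs

- Filter Plugin: filters and formats the logs

- Output: outputs the logs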
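To make the three stages concrete, here is a minimal sketch that writes a pipeline definition with one plugin per stage. The helper name `write_demo_pipeline`, the temporary file and the added field `source` are illustrative assumptions; `stdin`, `mutate` and `stdout` are standard Logstash plugins.

```shell
# Write a minimal three-stage pipeline to a file.
# $1 = destination path for the pipeline definition.
write_demo_pipeline() {
  cat > "$1" <<'EOF'
input {
  stdin { }                                # Input: read events from the terminal
}
filter {
  mutate {
    add_field => { "source" => "demo" }    # Filter: tag every event (illustrative field)
  }
}
output {
  stdout { codec => rubydebug }            # Output: print the structured event
}
EOF
}

demo_conf="$(mktemp)"
write_demo_pipeline "$demo_conf"
# With Logstash installed, the file could be run with: logstash -f "$demo_conf"
```

Events always flow through the stages in the order input, filter, output, regardless of the order in which the blocks appear in the file.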

1. Main Logstash components

- Shipper

- Indexer

- Broker

- Search and Storage

- Web Interface

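IV. Kibana

- An open-source analytics and visualization platform for Elasticsearch

- Searches and views the data stored in Elasticsearch indices

- Provides advanced data analysis and presentation through a variety of charts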

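1. Main features of Kibana

①Seamless integration with Elasticsearch

②Integrates data and performs data analysis

③Benefits more team members

④Flexible interface that makes sharing easier

⑤Simple configuration and visualization of multiple data sources

⑥Simple data export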

V. Deploying the ELK Log Analysis System

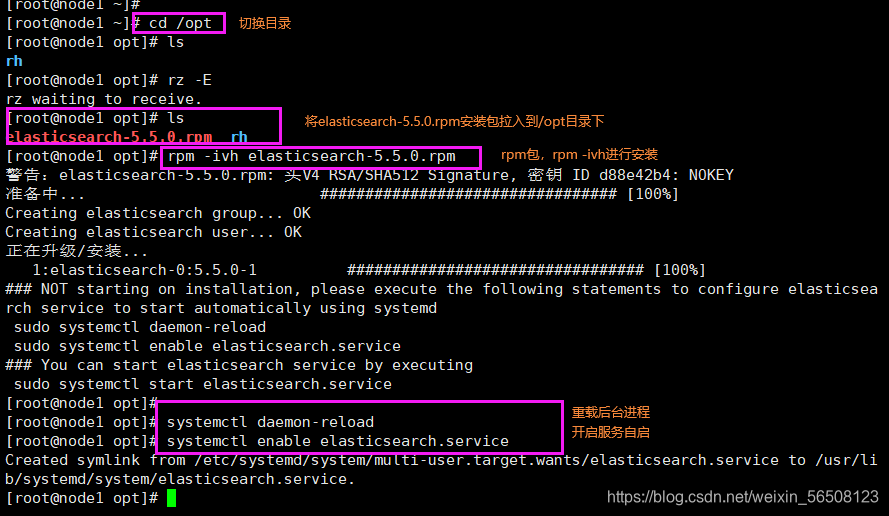

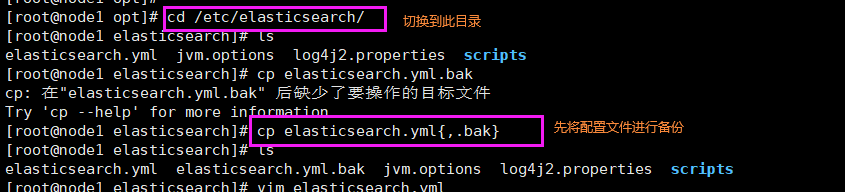

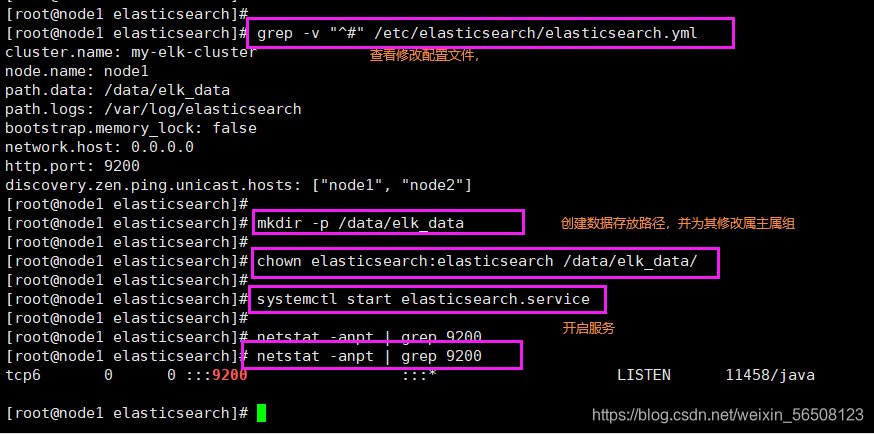

1. Configure the Elasticsearch environment on the node hosts

[root@node1 elasticsearch]# vim elasticsearch.yml   # edit the configuration file

cluster.name: my-elk-cluster   # cluster name; must be identical on both nodes

node.name: node1   # name of the current node

path.data: /data/elk_data   # data directory

path.logs: /var/log/elasticsearch   # log directory

bootstrap.memory_lock: false   # do not lock process memory at startup

network.host: 0.0.0.0   # IP address the server listens on; 0.0.0.0 listens on all interfaces

http.port: 9200   # HTTP listening port

discovery.zen.ping.unicast.hosts: ["node1", "node2"]   # cluster members; discovery uses unicast
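After editing, it can help to review only the settings that are actually active. The helper below is a small sketch (the name `active_settings` is hypothetical) that strips comment and blank lines from a configuration file.

```shell
# Print only the active (non-comment, non-blank) lines of a config file.
# $1 = path to the configuration file.
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

Running `active_settings /etc/elasticsearch/elasticsearch.yml` on each node makes it easy to compare the two configurations; only `node.name` is expected to differ between them.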

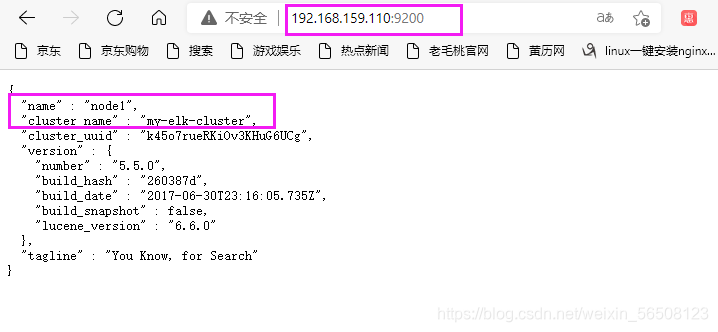

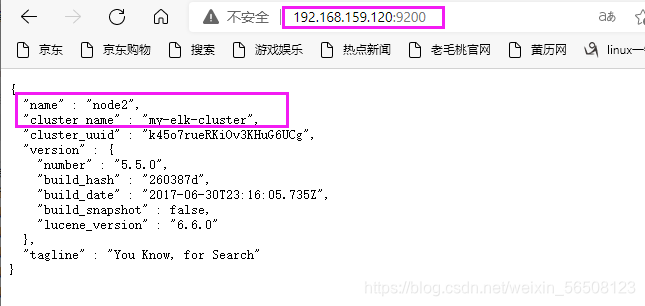

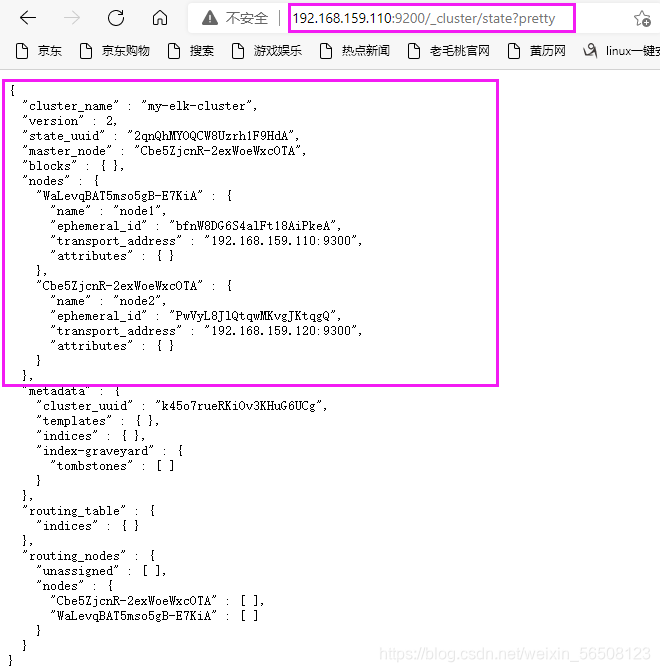

2. View the node information from the host machine

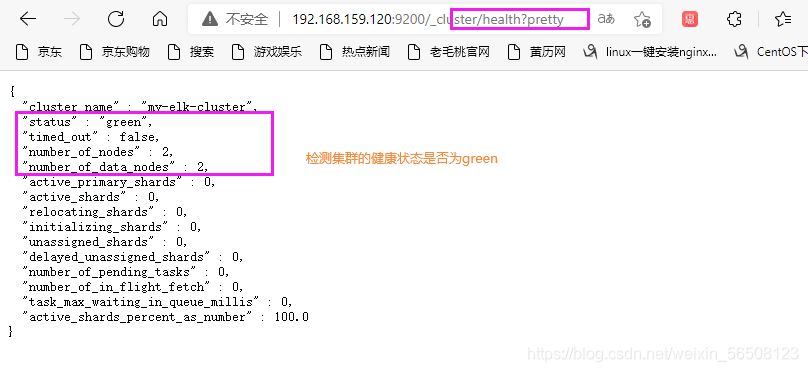

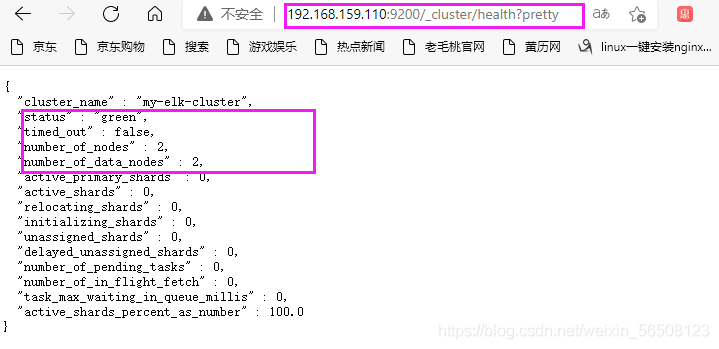

3. Check the cluster health from the host machine

4. View the cluster status

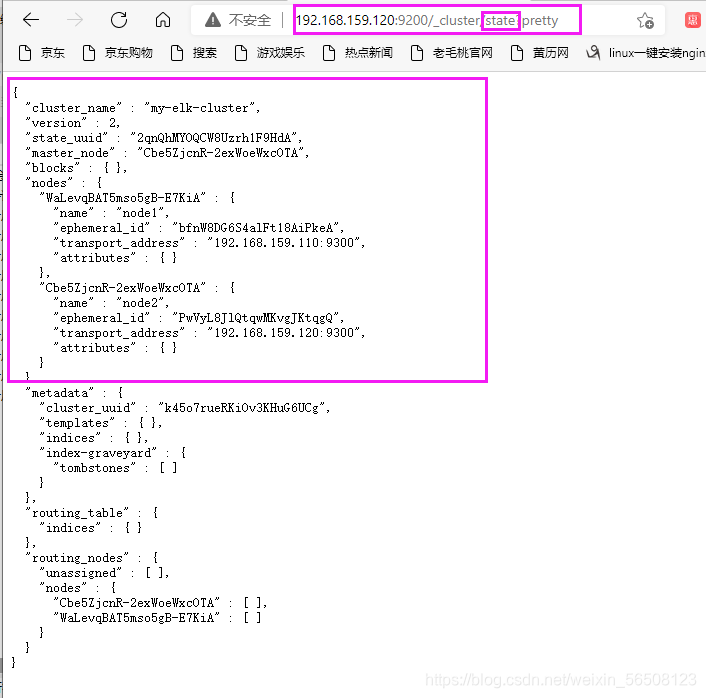
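The same health check can also be done from a terminal. The sketch below queries the `_cluster/health` endpoint and extracts the `status` field; the helper names are hypothetical, and `node1:9200` in the usage note assumes the host name and port configured earlier.

```shell
# Fetch the cluster health document (needs a reachable node).
# $1 = host:port of any node in the cluster.
cluster_health() {
  curl -s "http://$1/_cluster/health?pretty"
}

# Read a health document on stdin and print only the "status" value.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}
```

`cluster_health node1:9200 | health_status` prints `green`, `yellow` or `red`. Green means all primary and replica shards are allocated, yellow means some replicas are unassigned, and red means at least one primary shard is unassigned.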

5. Install the elasticsearch-head plugin

5.1 Install the Node.js component

[root@node1 opt]# tar zxvf node-v8.2.1.tar.gz

[root@node1 opt]# ls

elasticsearch-5.5.0.rpm node-v8.2.1 node-v8.2.1.tar.gz rh

[root@node1 opt]# cd node-v8.2.1/

[root@node1 node-v8.2.1]# ./configure

[root@node1 node-v8.2.1]# make && make install

5.2 Install PhantomJS (a headless browser required by elasticsearch-head)

[root@node1 node-v8.2.1]# cd /usr/local/src

[root@node1 src]# rz -E

rz waiting to receive.

[root@node1 src]# ls

phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 src]# tar xjvf phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 src]# ls

phantomjs-2.1.1-linux-x86_64 phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node1 src]# cd phantomjs-2.1.1-linux-x86_64/bin

[root@node1 bin]# ls

phantomjs

[root@node1 bin]# cp phantomjs /usr/local/bin

[root@node1 bin]# cd /usr/local/src/

[root@node1 src]# tar zxvf elasticsearch-head.tar.gz

[root@node1 src]# ls

elasticsearch-head phantomjs-2.1.1-linux-x86_64

elasticsearch-head.tar.gz phantomjs-2.1.1-linux-x86_64.tar.bz2

5.3 Edit the configuration file and append the following lines at the end

[root@node1 elasticsearch-head]# vim /etc/elasticsearch/elasticsearch.yml

http.cors.enabled: true   # enable cross-origin (CORS) access

http.cors.allow-origin: "*"   # origins allowed for cross-origin access ("*" allows any)

[root@node1 elasticsearch-head]# systemctl restart elasticsearch.service   # restart the service

[root@node1 elasticsearch-head]#

[root@node1 elasticsearch-head]# npm run start &   # start in the background

[1] 57690

[root@node1 elasticsearch-head]#

> elasticsearch-head@0.0.0 start /usr/local/src/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

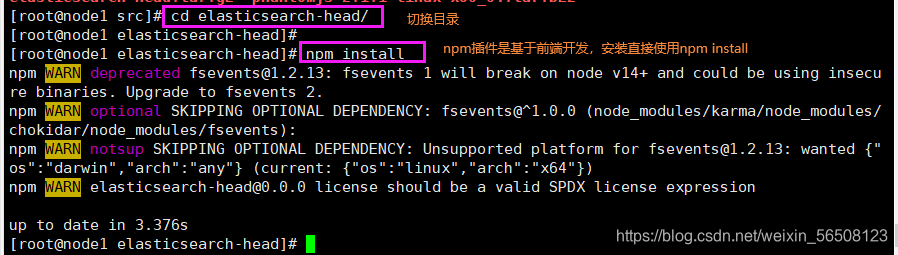

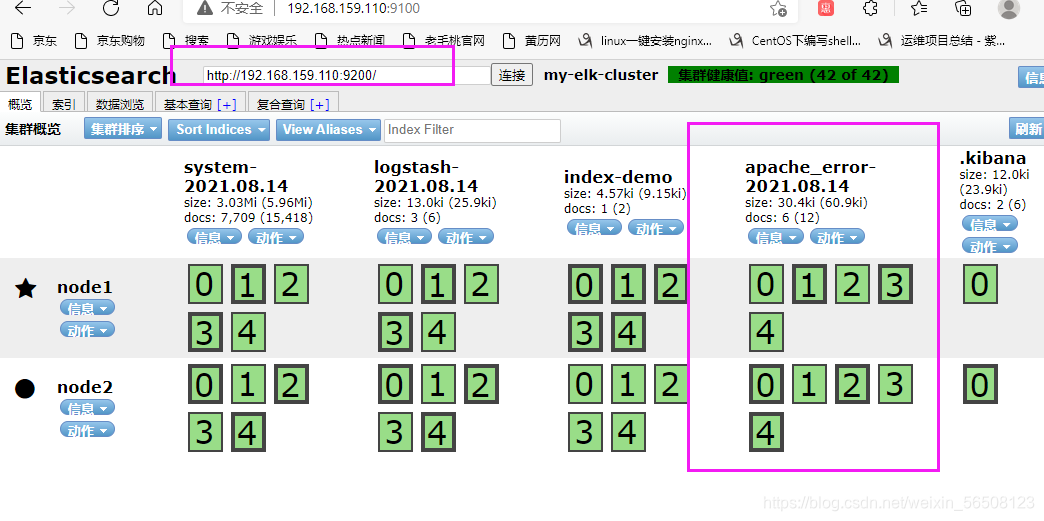

5.4 Create an index

[root@node1 elasticsearch-head]# curl -XPUT 'localhost:9200/index-demo/test/1?pretty' -H 'Content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

{

"_index" : "index-demo",

"_type" : "test",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"created" : true

}

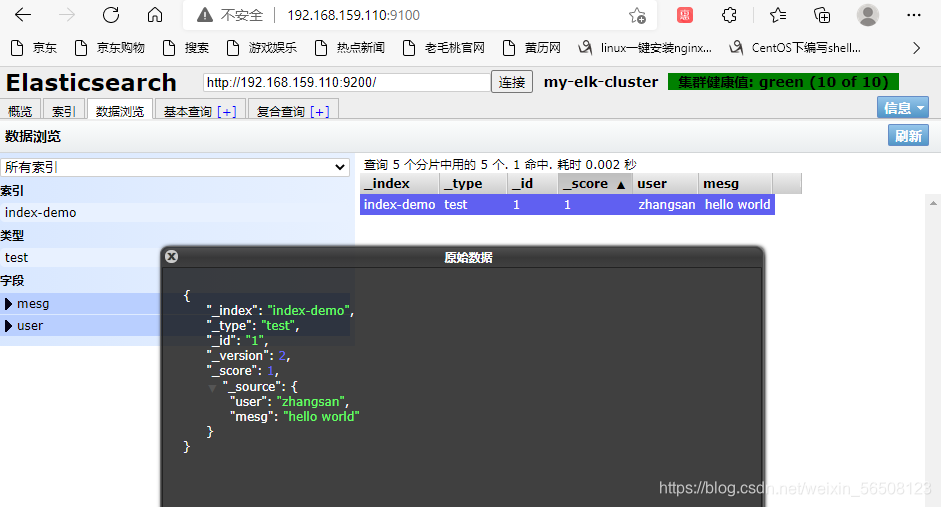

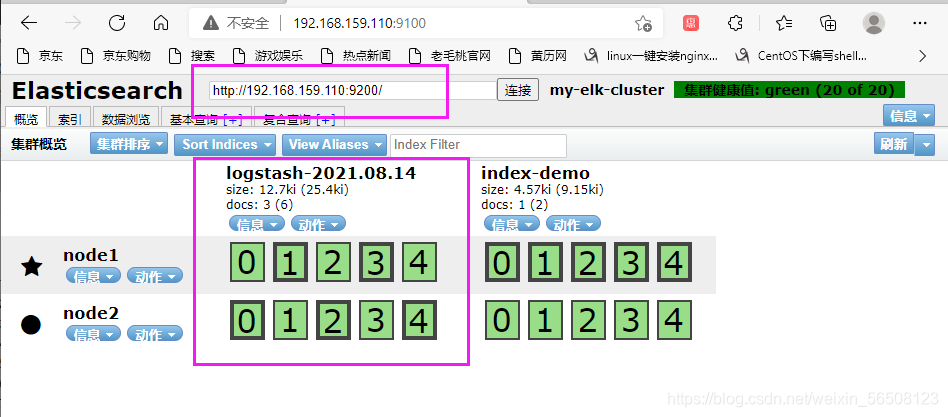
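To confirm that the document was stored, it can be read back by its index, type and id, which together form the URL path. The helper names below are hypothetical; the coordinates in the usage note are the ones from the `curl -XPUT` call above.

```shell
# Build the URL of a document from its index, type and id.
# $1 = host:port, $2 = index, $3 = type, $4 = id.
doc_url() {
  printf 'http://%s/%s/%s/%s?pretty' "$1" "$2" "$3" "$4"
}

# Fetch a document by its coordinates (needs a reachable node).
get_doc() {
  curl -s -XGET "$(doc_url "$@")"
}
```

`get_doc localhost:9200 index-demo test 1` should return a response with `"found" : true` and the original body under `_source`.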

5.5 View the newly created index from the host machine

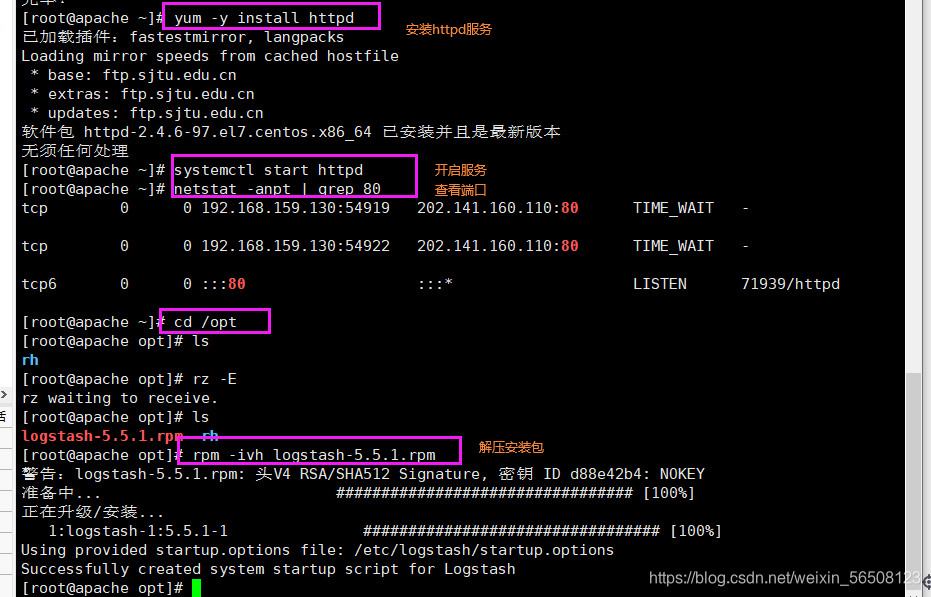

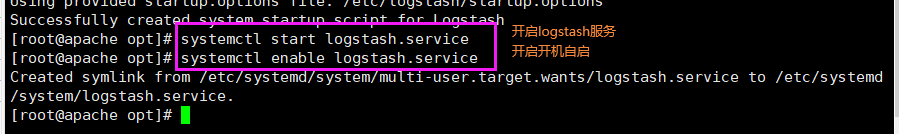

6. Install Logstash

6.1 Test that it works

[root@apache opt]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/   # create a symbolic link

[root@apache opt]# logstash -e 'input { stdin{} } output { stdout{} }'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

14:37:24.780 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}

14:37:24.821 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

14:37:24.907 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com   # test input typed at the prompt

2021-08-14T06:39:57.742Z apache www.baidu.com

www.sina.com.cn

2021-08-14T06:40:09.682Z apache www.sina.com.cn

6.2 Use rubydebug to show detailed output

[root@apache opt]# logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug} }'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

14:44:36.025 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}

14:44:36.042 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

14:44:36.070 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com

{

"@timestamp" => 2021-08-14T06:45:22.173Z,

"@version" => "1",

"host" => "apache",

"message" => "www.baidu.com"

}

www.sina.com

{

"@timestamp" => 2021-08-14T06:45:38.794Z,

"@version" => "1",

"host" => "apache",

"message" => "www.sina.com"

}

6.3 Integration test with Elasticsearch

[root@apache opt]# logstash -e 'input { stdin{} } output { elasticsearch { hosts=>["192.168.159.110:9200"]} }'

14:47:49.949 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com

www.sina.com.cn

www.google.com.cn

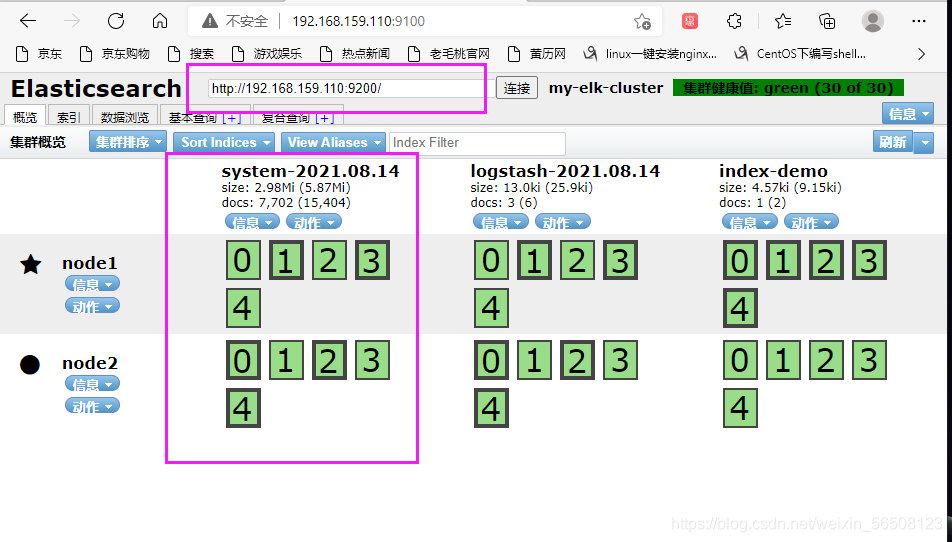

6.4 Integration configuration

[root@apache ~]# chmod o+r /var/log/messages   # grant read permission to other users

[root@apache ~]# ll /var/log/messages

-rw----r--. 1 root root 736886 8月 14 14:54 /var/log/messages

[root@apache ~]# vim /etc/logstash/conf.d/system.conf   # edit the pipeline configuration

[root@apache ~]# cat /etc/logstash/conf.d/system.conf

input {

file{

path => "/var/log/messages"   # log file to read

type => "system"   # event type label

start_position => "beginning"   # read the file from the beginning

}

}

output {

elasticsearch {

hosts => ["192.168.159.110:9200"]   # Elasticsearch host and port

index => "system-%{+YYYY.MM.dd}"   # index name, one index per day

}

}

[root@apache ~]# systemctl restart logstash.service
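The `chmod o+r` step matters because a packaged Logstash service typically runs as an unprivileged `logstash` user rather than root (an assumption about the package defaults, not something shown in the original). The sketch below checks the "others" read bit; the helper name is hypothetical and it relies on GNU `stat`.

```shell
# Succeed when "other" users may read the file.
# $1 = path to check. Relies on GNU stat (-c %A prints e.g. -rw----r--).
others_can_read() {
  [ "$(stat -c %A "$1" | cut -c8)" = "r" ]
}
```

For example, `others_can_read /var/log/messages && echo readable` gives a quick check before restarting the service.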

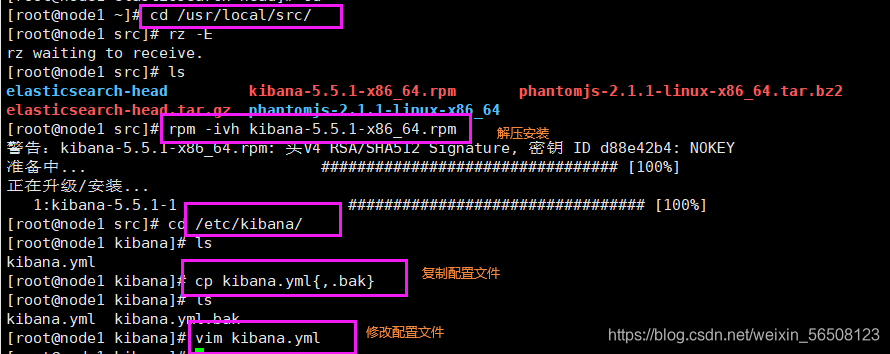

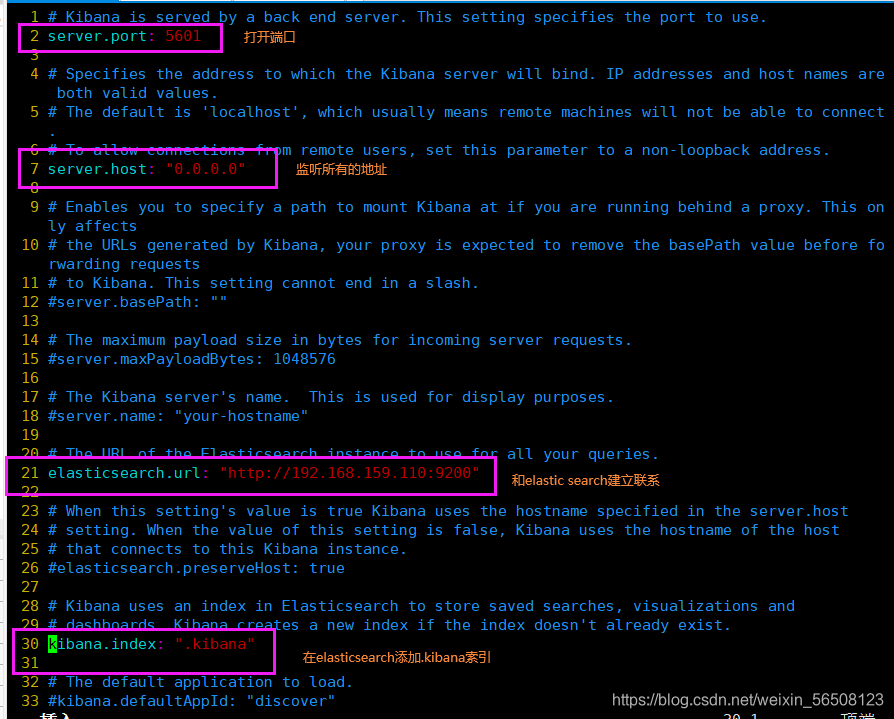

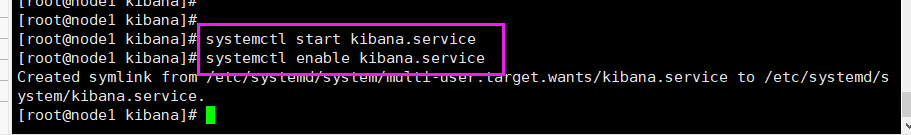
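Besides the web interface, the daily index can be checked from the command line. The sketch below computes the name that `system-%{+YYYY.MM.dd}` yields for today and asks Elasticsearch whether that index exists. The helper names are hypothetical; the date is taken in UTC because Logstash formats it from `@timestamp`, which is stored in UTC.

```shell
# Print the index name that a pattern such as "system-%{+YYYY.MM.dd}"
# yields for today's events (UTC date, matching @timestamp).
# $1 = index prefix, e.g. "system".
todays_index() {
  printf '%s-%s' "$1" "$(date -u +%Y.%m.%d)"
}

# Succeed when the index exists (needs a reachable node).
# $1 = host:port, $2 = index name.
index_exists() {
  curl -s -o /dev/null -w '%{http_code}' -I "http://$1/$2" | grep -q '^200$'
}
```

A call such as `index_exists 192.168.159.110:9200 "$(todays_index system)"` succeeds once Logstash has shipped at least one event for the current day.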

7. Install Kibana on the node1 host

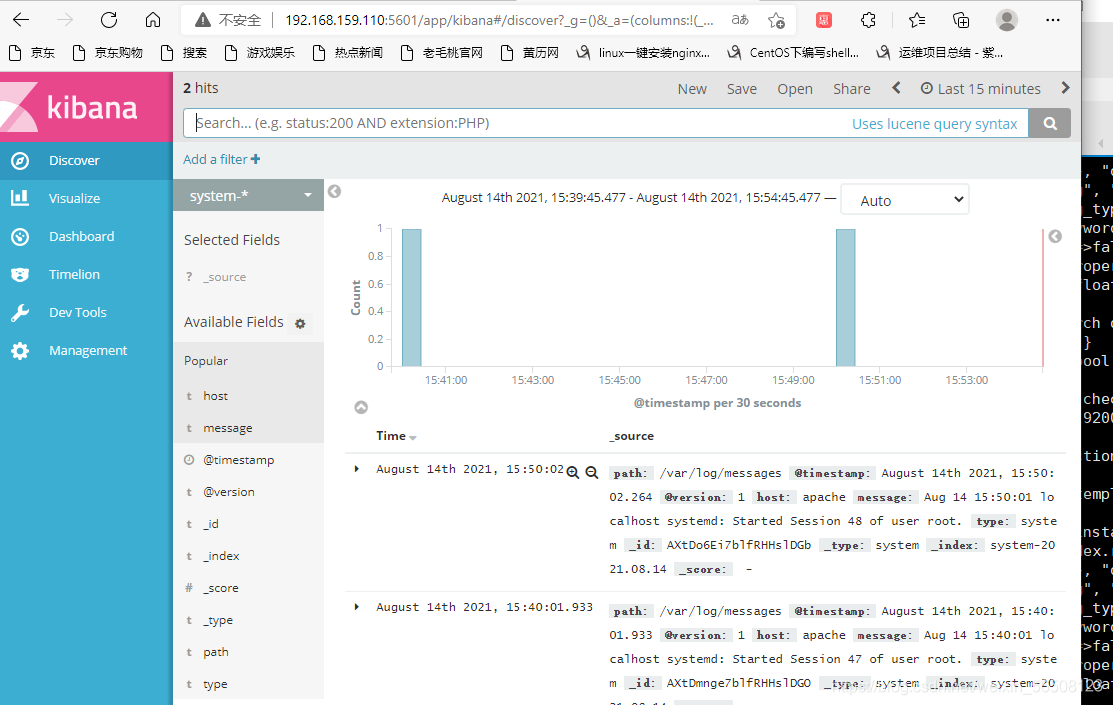

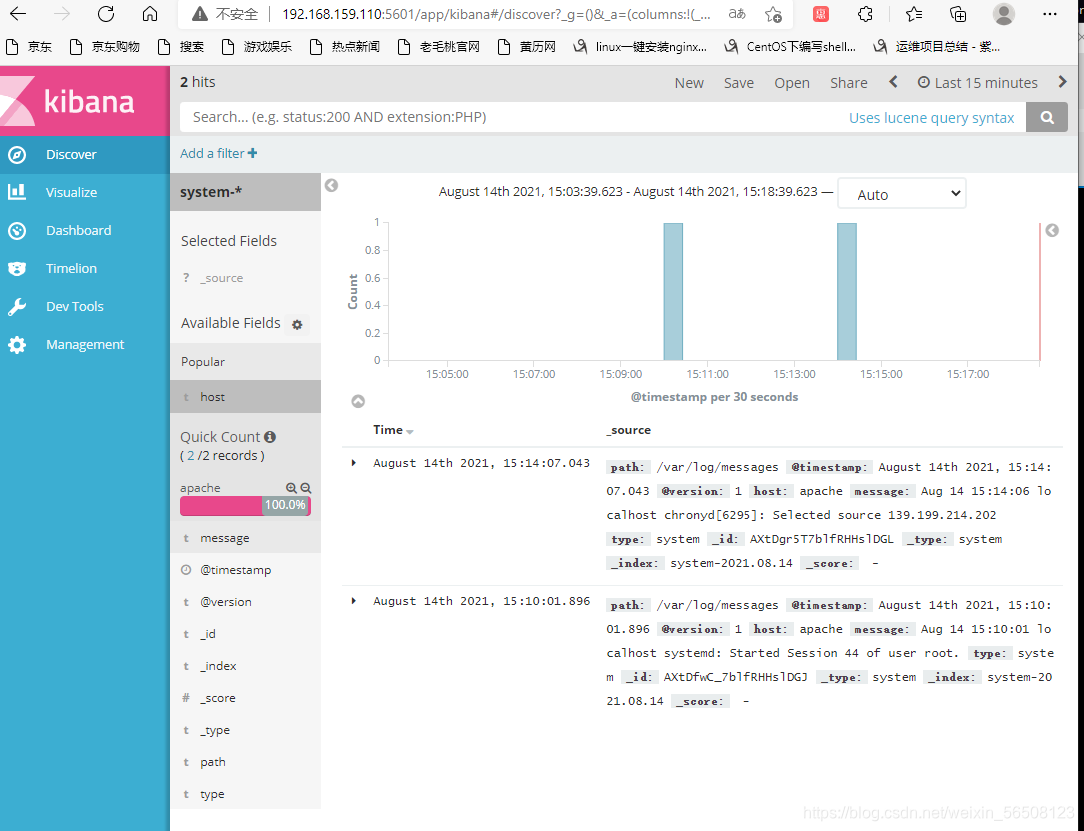

7.1 Access Kibana from the host machine

7.2 Ingest the Apache log files

[root@apache ~]# cd /etc/logstash/conf.d/

[root@apache conf.d]# vim apache_log.conf

input {

file{

path => "/etc/httpd/logs/access_log"

type => "access"

start_position => "beginning"

}

file{

path => "/etc/httpd/logs/error_log"

type => "error"

start_position => "beginning"

}

}

output {

if [type] == "access" {

elasticsearch {

hosts => ["192.168.159.110:9200"]

index => "apache_access-%{+YYYY.MM.dd}"

}

}

if [type] == "error" {

elasticsearch {

hosts => ["192.168.159.110:9200"]

index => "apache_error-%{+YYYY.MM.dd}"

}

}

}

[root@apache conf.d]# /usr/share/logstash/bin/logstash -f apache_log.conf

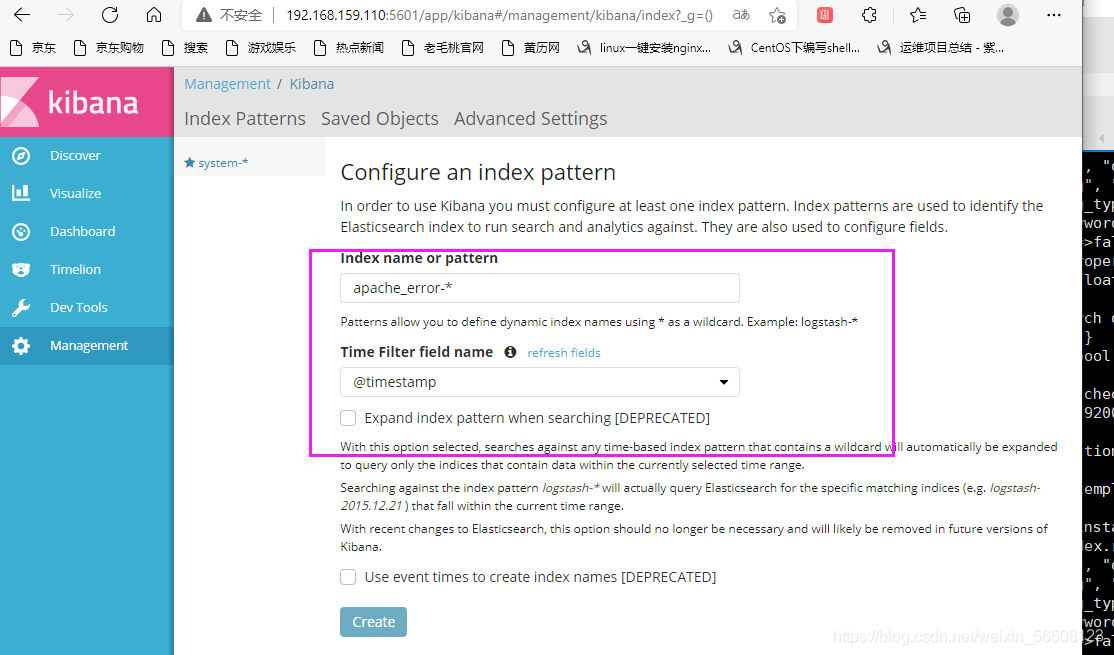
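Because the output section routes events to different indices by `type`, a quick way to confirm the routing is to list the index names and filter by prefix. The helper names below are hypothetical; `_cat/indices` with `h=index` is the standard Elasticsearch endpoint for listing index names.

```shell
# List all index names known to the cluster (needs a reachable node).
# $1 = host:port.
list_indices() {
  curl -s "http://$1/_cat/indices?h=index"
}

# Keep only the names on stdin that start with "<prefix>-".
# $1 = index prefix, e.g. "apache_access".
with_prefix() {
  grep -E "^$1-"
}
```

`list_indices 192.168.159.110:9200 | with_prefix apache_access` should show one index per day of access-log traffic. An `apache_error-*` index only appears once the error log contains entries.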

7.3 Create the index pattern in Kibana

7.4 View the information