
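Data Cleaning

Requirements Analysis

Clean and split the log data produced by the algorithm:

1: The log data produced by the algorithm is nested JSON, which has to be split and flattened.

2: The country field in the data has to be converted to its region (area).

3: Finally, the different types of log data are stored separately.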
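The flattening in steps 1 and 2 can be sketched with plain `java.util` collections. This is only an illustration, assuming the record has already been parsed into nested maps; the class name `FlattenSketch` and its helper methods are hypothetical and stand in for the fastjson-based job shown later.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class FlattenSketch {
    // Turns one nested record into one flat record per entry of its "data" list,
    // copying "dt" and the region looked up from "countryCode" into each entry.
    static List<Map<String, Object>> flatten(Map<String, Object> record,
                                             Map<String, String> countryToArea) {
        String dt = (String) record.get("dt");
        String area = countryToArea.get((String) record.get("countryCode"));
        @SuppressWarnings("unchecked")
        List<Map<String, Object>> items = (List<Map<String, Object>>) record.get("data");
        List<Map<String, Object>> out = new ArrayList<>();
        for (Map<String, Object> item : items) {
            Map<String, Object> flat = new LinkedHashMap<>(item);
            flat.put("area", area);
            flat.put("dt", dt);
            out.add(flat);
        }
        return out;
    }

    // Hypothetical sample record with two nested entries (illustration only).
    static Map<String, Object> sampleRecord() {
        Map<String, Object> first = new LinkedHashMap<>();
        first.put("type", "s1");
        first.put("score", 0.3);
        Map<String, Object> second = new LinkedHashMap<>();
        second.put("type", "s2");
        second.put("score", 0.2);
        List<Map<String, Object>> data = new ArrayList<>();
        data.add(first);
        data.add(second);
        Map<String, Object> record = new HashMap<>();
        record.put("dt", "2019-01-01 11:11:11");
        record.put("countryCode", "US");
        record.put("data", data);
        return record;
    }

    // Hypothetical country-to-region mapping (illustration only).
    static Map<String, String> sampleAreas() {
        Map<String, String> areas = new HashMap<>();
        areas.put("US", "AREA_US");
        return areas;
    }

    public static void main(String[] args) {
        for (Map<String, Object> flat : flatten(sampleRecord(), sampleAreas())) {
            System.out.println(flat);
        }
    }
}
```

Each nested entry becomes its own output record, which is what allows the different log types to be stored separately afterwards.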

Architecture Diagram

Code Implementation

package henry.flink;

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

import henry.flink.customSource.MyRedisSource;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.contrib.streaming.state.RocksDBStateBackend;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoFlatMapFunction;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011;

import org.apache.flink.streaming.util.serialization.KeyedSerializationSchemaWrapper;

import org.apache.flink.util.Collector;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.HashMap;

import java.util.Properties;

/**

* @Author: Henry

* @Description: Data cleaning job;

* this class wires the whole pipeline together.

*

* Commands for creating the Kafka topics:

* ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 5 --topic allData

* ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 5 --topic allDataClean

*

* @Date: Create in 2019/5/25 17:47

**/

public class DataClean {

private static Logger logger = LoggerFactory.getLogger(DataClean.class); // used as logger.info(...)

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Set the parallelism

env.setParallelism(5);

// Checkpoint configuration

env.enableCheckpointing(60000); // checkpoint every minute (60 s)

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

env.getCheckpointConfig().setMinPauseBetweenCheckpoints(30000);

env.getCheckpointConfig().setCheckpointTimeout(10000);

env.getCheckpointConfig().setMaxConcurrentCheckpoints(1);

env.getCheckpointConfig().enableExternalizedCheckpoints(

CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

// Set the state backend

env.setStateBackend(new RocksDBStateBackend("hdfs://master:9000/flink/checkpoints",true));

// Configure the Kafka source

String topic = "allData";

Properties prop = new Properties();

prop.setProperty("bootstrap.servers", "master:9092");

prop.setProperty("group.id", "con1");

FlinkKafkaConsumer011<String> myConsumer = new FlinkKafkaConsumer011<String>(

topic, new SimpleStringSchema(),prop);

// Read the data from Kafka; the Kafka records have the following format:

// {"dt":"2019-01-01 11:11:11", "countryCode":"US","data":[{"type":"s1","score":0.3},{"type":"s1","score":0.3}]}

DataStreamSource<String> data = env.addSource(myConsumer); // parallelism is chosen to match the number of Kafka topic partitions

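// Flattening the data requires converting each country to its region. The mapping may change, so it must not be hard-coded.

// Approach: add a second source and keep the country-to-region mapping in Redis.

// For Redis, Flink officially provides only a sink and no source, so a custom source is needed.

// Because the mapping may change, the latest mapping is fetched from Redis at regular intervals.

// mapData carries the latest mapping from country code to region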

DataStream<HashMap<String,String>> mapData = env.addSource(new MyRedisSource())

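.broadcast(); // sends the data to every parallel instance of the downstream operator; otherwise some instances have no mapping and their records lose the region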

// Join the two streams with connect, then apply flatMap

DataStream<String> resData = data.connect(mapData).flatMap(

// Type parameters: data, mapData, and the result type (a JSON string)

new CoFlatMapFunction<String, HashMap<String, String>, String>() {

// Holds the country-to-region mapping

private HashMap<String, String> allMap = new HashMap<String, String>();

// flatMap1 handles the records from Kafka

public void flatMap1(String value, Collector<String> out)

throws Exception {

// The raw record is JSON

JSONObject jsonObject = JSONObject.parseObject(value);

String dt = jsonObject.getString("dt");

String countryCode = jsonObject.getString("countryCode");

// Look up the region

String area = allMap.get(countryCode);

// Iterate over the array; each element of jsonArray is a JSONObject

JSONArray jsonArray = jsonObject.getJSONArray("data");

for (int i = 0; i < jsonArray.size(); i++) {

JSONObject jsonObject1 = jsonArray.getJSONObject(i);

System.out.println("areas : - " + area);

jsonObject1.put("area", area);

jsonObject1.put("dt", dt);

out.collect(jsonObject1.toJSONString());

}

}

// flatMap2 handles the map emitted by the Redis source

public void flatMap2(HashMap<String, String> value, Collector<String> out)

throws Exception {

this.allMap = value;

}

});

String outTopic = "allDataClean";

Properties outprop= new Properties();

outprop.setProperty("bootstrap.servers", "master:9092");

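// Option 1: set the transaction timeout on the FlinkKafkaProducer011 side

// Set the transaction timeout (15 minutes here)

outprop.setProperty("transaction.timeout.ms",60000*15+"");

// Option 2: raise the maximum transaction timeout on the Kafka broker (transaction.max.timeout.ms)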

FlinkKafkaProducer011<String> myproducer = new FlinkKafkaProducer011<>(outTopic,

new KeyedSerializationSchemaWrapper<String>(

new SimpleStringSchema()), outprop,

FlinkKafkaProducer011.Semantic.EXACTLY_ONCE);

resData.addSink(myproducer);

env.execute("Data Clean");

}

}

Purpose: a custom Redis source

The source holds the mapping between regions and country codes.

This is key-value-like data, so returning a HashMap is convenient.

The relationship between countries and regions is kept in Redis, initialized with data in the following format:

        Hash         Region     Countries

        hset areas   AREA_US    US

        hset areas   AREA_CT    TW,HK

        hset areas   AREA_AR    PK,SA,KW

        hset areas   AREA_IN    IN

The region-to-country relationship then has to be assembled into a Java HashMap.
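The assembly step amounts to inverting the Redis hash: each region maps to a comma-separated list of countries, while the job needs a lookup from country code to region. A minimal sketch of that inversion in plain Java follows; the class name `AreaMappingSketch` is hypothetical, and the `trim()` and empty-token checks are small additions that the original source does not perform.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

class AreaMappingSketch {
    // Inverts region -> "C1,C2,..." into country -> region.
    static HashMap<String, String> invert(Map<String, String> areas) {
        HashMap<String, String> countryToArea = new HashMap<>();
        for (Map.Entry<String, String> entry : areas.entrySet()) {
            for (String country : entry.getValue().split(",")) {
                String code = country.trim(); // assumption: tolerate stray spaces
                if (!code.isEmpty()) {
                    countryToArea.put(code, entry.getKey());
                }
            }
        }
        return countryToArea;
    }

    public static void main(String[] args) {
        // Same contents as the "areas" hash initialized in Redis above.
        Map<String, String> areas = new LinkedHashMap<>();
        areas.put("AREA_US", "US");
        areas.put("AREA_CT", "TW,HK");
        areas.put("AREA_AR", "PK,SA,KW");
        areas.put("AREA_IN", "IN");
        System.out.println(invert(areas));
    }
}
```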

package henry.flink.customSource;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.exceptions.JedisConnectionException;

import java.util.HashMap;

import java.util.Map;

/**

* @Author: Henry

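* @Description: Custom Redis source

* The source holds the mapping between regions and country codes.

* This is key-value-like data, so returning a HashMap is convenient.

*

* The relationship between countries and regions is kept in Redis.

* Redis is initialized with data in the following format:

* Hash Region Countries

* hset areas AREA_US US

* hset areas AREA_CT TW,HK

* hset areas AREA_AR PK,SA,KW

* hset areas AREA_IN IN

*

* The region-to-country relationship has to be assembled into a Java HashMap.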

*

* @Date: Create in 2019/5/25 18:12

**/

public class MyRedisSource implements SourceFunction<HashMap<String,String>>{

private Logger logger = LoggerFactory.getLogger(MyRedisSource.class);

private final long SLEEP_MILLION = 60000 ;

private volatile boolean isrunning = true; // volatile: cancel() is called from a different thread than run()

private Jedis jedis = null;

public void run(SourceContext<HashMap<String, String>> ctx) throws Exception {

this.jedis = new Jedis("master", 6379);

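while (isrunning){

try{

// Holds the full country-to-region mapping. A fresh map is built on every poll,

// so stale entries are dropped and a map that was already emitted is never modified.

HashMap<String, String> keyValueMap = new HashMap<String, String>();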

// Fetch the data

Map<String, String> areas = jedis.hgetAll("areas");

// Iterate over the entries

for (Map.Entry<String, String> entry : areas.entrySet()){

String key = entry.getKey(); // region, e.g. AREA_AR

String value = entry.getValue(); // countries, e.g. PK,SA,KW

String[] splits = value.split(",");

for (String split : splits){

// Here the country code (split) becomes the key and the region (key) becomes the value

keyValueMap.put(split, key); // e.g. PK -> AREA_AR

}

}

// Guard against emitting empty data

if(keyValueMap.size() > 0){

ctx.collect(keyValueMap);

}

else {

logger.warn("Data fetched from Redis is empty!");

}

// Fetch once per minute

Thread.sleep(SLEEP_MILLION);

}

// Handle Jedis connection exceptions

catch (JedisConnectionException e){

// Re-establish the connection

jedis = new Jedis("master", 6379);

logger.error("Redis connection error, reconnecting", e);

}// Handle all other exceptions by logging them

catch (Exception e){

logger.error("Source error", e);

}

}

}

/**

* Called when the job stops; sets the running flag to false

* */

public void cancel() {

isrunning = false;

// The connection is opened once in run() and reused throughout the while loop, so it is closed here

if(jedis != null){

jedis.close();

}

}

}

package henry.flink.utils;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.common.serialization.StringSerializer;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.Properties;

import java.util.Random;

/**

* @Author: Henry

* @Description: Kafka producer

* @Date: Create in 2019/6/14 15:15

**/

public class kafkaProducer {

public static void main(String[] args) throws Exception{

Properties prop = new Properties();

// Kafka broker address

prop.put("bootstrap.servers", "master:9092");

// Serializers for key and value

prop.put("key.serializer", StringSerializer.class.getName());

prop.put("value.serializer", StringSerializer.class.getName());

// Topic name

String topic = "allData";

// Create the producer connection

KafkaProducer<String, String> producer = new KafkaProducer<String,String>(prop);

// Format of the generated messages:

//{"dt":"2018-01-01 10:11:11","countryCode":"US","data":[{"type":"s1","score":0.3,"level":"A"},{"type":"s2","score":0.2,"level":"B"}]}

while(true){

String message = "{\"dt\":\""+getCurrentTime()+"\",\"countryCode\":\""+getCountryCode()+"\",\"data\":[{\"type\":\""+getRandomType()+"\",\"score\":"+getRandomScore()+",\"level\":\""+getRandomLevel()+"\"},{\"type\":\""+getRandomType()+"\",\"score\":"+getRandomScore()+",\"level\":\""+getRandomLevel()+"\"}]}";

System.out.println(message);

producer.send(new ProducerRecord<String, String>(topic,message));

Thread.sleep(2000);

}

// Close the connection

//producer.close();

}

public static String getCurrentTime(){

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // yyyy = calendar year (YYYY would be the week-based year)

return sdf.format(new Date());

}

public static String getCountryCode(){

String[] types = {"US","TW","HK","PK","KW","SA","IN"};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

public static String getRandomType(){

String[] types = {"s1","s2","s3","s4","s5"};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

public static double getRandomScore(){

double[] types = {0.3,0.2,0.1,0.5,0.8};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

public static String getRandomLevel(){

String[] types = {"A","A+","B","C","D"};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

}
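One detail worth checking in `getCurrentTime()` is the year pattern letter. In `SimpleDateFormat`, lowercase `yyyy` is the calendar year, while uppercase `YYYY` is the week-based year, and the two differ for dates around New Year. The sketch below shows the difference for 31 December 2018; the class name `DatePatternSketch` is hypothetical and `Locale.US` is fixed only to make the week rules explicit.

```java
import java.text.SimpleDateFormat;
import java.util.Calendar;
import java.util.Date;
import java.util.Locale;

class DatePatternSketch {
    // Formats a date with the given pattern, using US week rules explicitly.
    static String format(String pattern, Date date) {
        return new SimpleDateFormat(pattern, Locale.US).format(date);
    }

    // 2018-12-31 10:00:00 in the JVM's default time zone.
    static Date lastDayOf2018() {
        Calendar calendar = Calendar.getInstance(Locale.US);
        calendar.clear();
        calendar.set(2018, Calendar.DECEMBER, 31, 10, 0, 0);
        return calendar.getTime();
    }

    public static void main(String[] args) {
        Date date = lastDayOf2018();
        System.out.println(format("yyyy-MM-dd", date)); // calendar year
        System.out.println(format("YYYY-MM-dd", date)); // week-based year
    }
}
```

With these settings the first line prints 2018-12-31 and the second 2019-12-31, because that day already belongs to the first week of 2019. A job that later parses the timestamp with `yyyy` would therefore place such records a year off.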

Running It in Practice

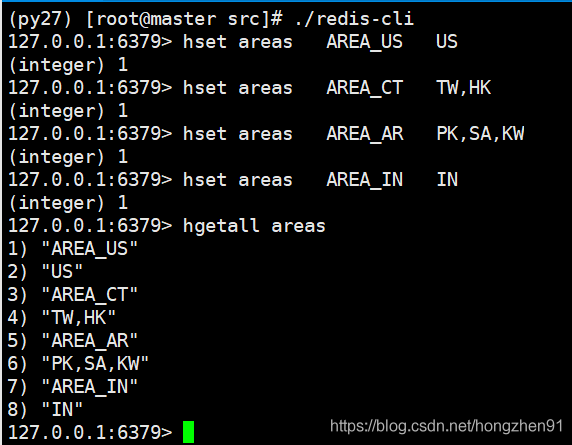

1. First start the Redis client in a terminal and insert the data:

./redis-cli

127.0.0.1:6379> hset areas AREA_US US

(integer) 1

127.0.0.1:6379> hset areas AREA_CT TW,HK

(integer) 1

127.0.0.1:6379> hset areas AREA_AR PK,SA,KW

(integer) 1

127.0.0.1:6379> hset areas AREA_IN IN

(integer) 1

127.0.0.1:6379>

Use hgetall to check the inserted data:

2. Create the Kafka topic:

./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 5 --topic allData

Monitor the Kafka topic:

./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic allDataClean

3. Start the programs

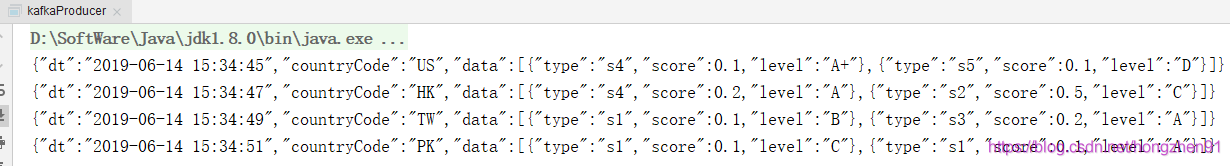

Start the DataClean program first, then the producer program. The Kafka producer generates data like this:

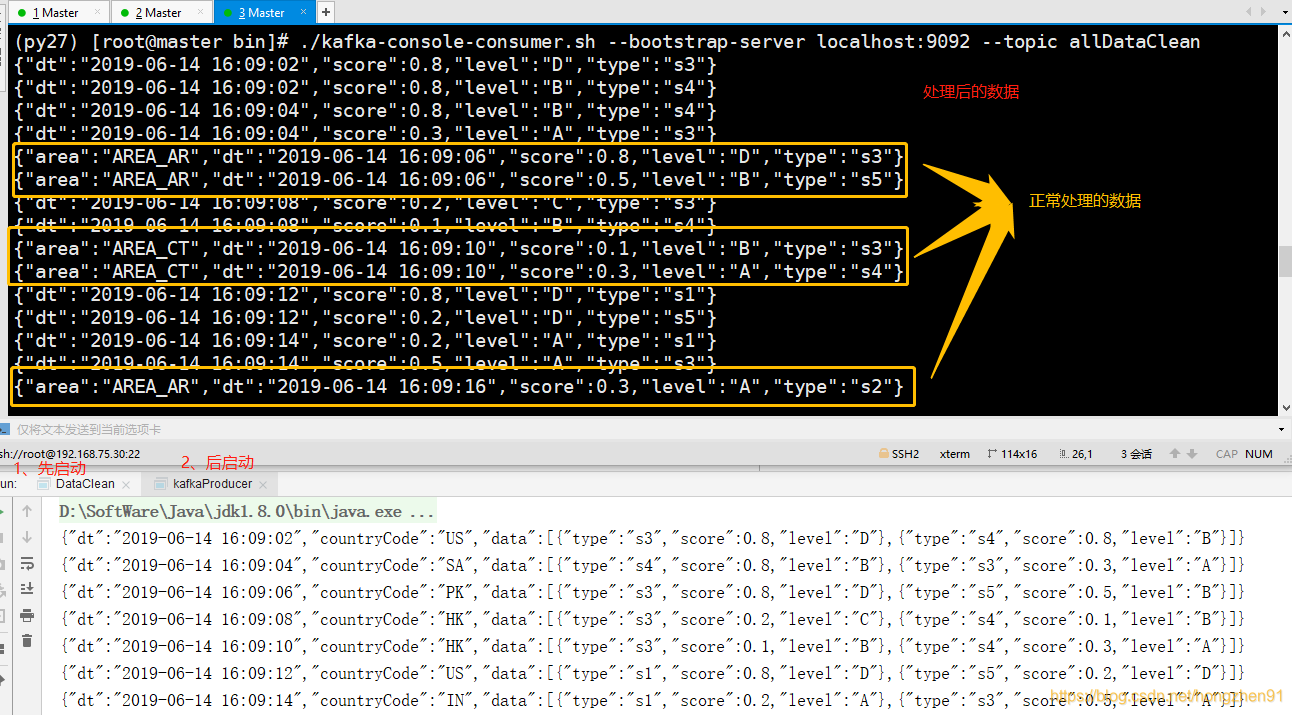

4. Finally, observe the processed output in the terminal:

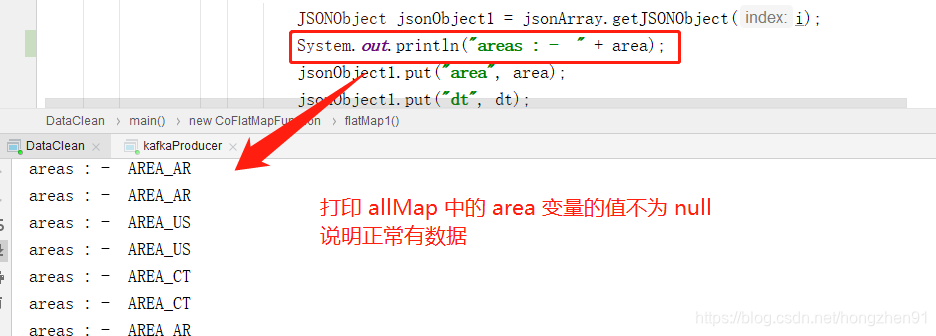

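Only part of the data is processed and output correctly. The reason: the parallelism was not set in the code, so the job ran with the default parallelism, which follows the machine's CPU core count. Without broadcasting, the mapping reaches only some of the threads, so allMap is filled in some threads and empty in others, and only part of the output is correct. The fix is to broadcast the mapping with broadcast() so that every thread receives it:

    DataStream<HashMap<String,String>> mapData = env.addSource(new MyRedisSource()).broadcast();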
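The effect of broadcast() can be illustrated with a small simulation in plain Java. It is only a sketch under stated assumptions: the class `BroadcastSketch` and its methods are hypothetical, and the non-broadcast case is modeled as round-robin delivery, where each emitted map reaches exactly one parallel subtask.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class BroadcastSketch {
    // Every subtask starts with an empty mapping, like allMap in the CoFlatMapFunction.
    static List<Map<String, String>> emptySubtasks(int parallelism) {
        List<Map<String, String>> subtasks = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            subtasks.add(new HashMap<String, String>());
        }
        return subtasks;
    }

    // Assumed non-broadcast behaviour: the i-th emitted map goes to one subtask only.
    static List<Map<String, String>> roundRobin(List<Map<String, String>> emitted, int parallelism) {
        List<Map<String, String>> subtasks = emptySubtasks(parallelism);
        for (int i = 0; i < emitted.size(); i++) {
            subtasks.set(i % parallelism, emitted.get(i));
        }
        return subtasks;
    }

    // Broadcast behaviour: every emitted map goes to every subtask.
    static List<Map<String, String>> broadcast(List<Map<String, String>> emitted, int parallelism) {
        List<Map<String, String>> subtasks = emptySubtasks(parallelism);
        for (Map<String, String> mapping : emitted) {
            for (int i = 0; i < parallelism; i++) {
                subtasks.set(i, mapping);
            }
        }
        return subtasks;
    }

    // Number of subtasks that hold a non-empty mapping.
    static int subtasksWithMapping(List<Map<String, String>> subtasks) {
        int count = 0;
        for (Map<String, String> mapping : subtasks) {
            if (!mapping.isEmpty()) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        Map<String, String> mapping = new HashMap<>();
        mapping.put("US", "AREA_US");
        List<Map<String, String>> emitted = new ArrayList<>();
        emitted.add(mapping);
        System.out.println("round-robin: " + subtasksWithMapping(roundRobin(emitted, 5)) + " of 5");
        System.out.println("broadcast:   " + subtasksWithMapping(broadcast(emitted, 5)) + " of 5");
    }
}
```

After the first poll of the Redis source, only one of five subtasks holds the mapping in the round-robin case, which matches the partially correct output described above.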

Run result:

Console output:

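Data Report

Requirements Analysis

Statistics on review metrics for a live-streaming / short-video platform:

1: Count, per region, the items that passed review (put on the shelf) in each 1-minute window.

2: Count, per region, the items that failed review (taken off the shelf) in each 1-minute window.

3: Count, per region, the items added to the blacklist in each 1-minute window.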
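All three requirements are the same computation: group events by review type and region, then count them per 1-minute tumbling window. The following plain-Java sketch shows the idea without Flink; the class `TumblingCountSketch` and the string key format are hypothetical, and timestamps are assumed to be epoch milliseconds with windows aligned to the epoch.

```java
import java.util.Map;
import java.util.TreeMap;

class TumblingCountSketch {
    static final long WINDOW_MS = 60_000L; // 1-minute tumbling windows

    // Start of the window that contains the given epoch-millisecond timestamp.
    static long windowStart(long timestampMs) {
        return timestampMs - Math.floorMod(timestampMs, WINDOW_MS);
    }

    // Counts events per (window start, type, area); the three arrays are parallel.
    static Map<String, Long> count(long[] timestamps, String[] types, String[] areas) {
        Map<String, Long> counts = new TreeMap<>();
        for (int i = 0; i < timestamps.length; i++) {
            String key = windowStart(timestamps[i]) + "|" + types[i] + "|" + areas[i];
            counts.merge(key, 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        long[] timestamps = {5_000L, 59_999L, 60_000L, 61_000L};
        String[] types = {"shelf", "shelf", "shelf", "black"};
        String[] areas = {"AREA_US", "AREA_US", "AREA_US", "AREA_IN"};
        count(timestamps, types, areas)
                .forEach((key, value) -> System.out.println(key + " -> " + value));
    }
}
```

The first two events fall into the window starting at 0 and the last two into the window starting at 60000, so a record exactly on the boundary belongs to the later window.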

Architecture Diagram

The results are written to ES mainly so that Kibana can be used for the statistics.

Code Implementation

package henry.flink;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import henry.flink.function.MyAggFunction;

import henry.flink.watermark.*;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.RuntimeContext;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.contrib.streaming.state.RocksDBStateBackend;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.connectors.elasticsearch.ElasticsearchSinkFunction;

import org.apache.flink.streaming.connectors.elasticsearch.RequestIndexer;

import org.apache.flink.streaming.connectors.elasticsearch6.ElasticsearchSink;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011;

import org.apache.flink.streaming.util.serialization.KeyedSerializationSchemaWrapper;

import org.apache.flink.util.OutputTag;

import org.apache.http.HttpHost;

import org.elasticsearch.action.index.IndexRequest;

import org.elasticsearch.client.Requests;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.*;

/**

* @Author: Henry

* @Description: Data report

*

* Commands for creating the Kafka topics:

* bin/kafka-topics.sh --create --topic lateLog --zookeeper localhost:2181 --partitions 5 --replication-factor 1

* bin/kafka-topics.sh --create --topic auditLog --zookeeper localhost:2181 --partitions 5 --replication-factor 1

*

* @Date: Create in 2019/5/29 11:05

**/

public class DataReport {

private static Logger logger = LoggerFactory.getLogger(DataReport.class);

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Set the parallelism

env.setParallelism(5);

// Use event time

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

// Checkpoint configuration

env.enableCheckpointing(60000);

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

env.getCheckpointConfig().setMinPauseBetweenCheckpoints(30000);

env.getCheckpointConfig().setCheckpointTimeout(10000);

env.getCheckpointConfig().setMaxConcurrentCheckpoints(1);

env.getCheckpointConfig().enableExternalizedCheckpoints(

CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

// Set the state backend

//env.setStateBackend(new RocksDBStateBackend("hdfs://master:9000/flink/checkpoints",true));

// Configure the Kafka source

String topic = "auditLog"; // audit log

Properties prop = new Properties();

prop.setProperty("bootstrap.servers", "master:9092");

prop.setProperty("group.id", "con1");

FlinkKafkaConsumer011<String> myConsumer = new FlinkKafkaConsumer011<String>(

topic, new SimpleStringSchema(),prop);

/*

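* Read the data from Kafka.

* Format of an audit record:

* {"dt":"review time (year-month-day hour:minute:second)", "type":"review type","username":"reviewer name","area":"region"}

* Note: the JSON format takes a relatively large amount of storage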

* */

DataStreamSource<String> data = env.addSource(myConsumer);

// Parse and clean the data

DataStream<Tuple3<Long, String, String>> mapData = data.map(

new MapFunction<String, Tuple3<Long, String, String>>() {

@Override

public Tuple3<Long, String, String> map(String line) throws Exception {

JSONObject jsonObject = JSON.parseObject(line);

String dt = jsonObject.getString("dt");

long time = 0;

try {

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

Date parse = sdf.parse(dt);

time = parse.getTime();

} catch (ParseException e) {

// This log could also be written to another storage medium

logger.error("Failed to parse time, dt: " + dt, e);

}

String type = jsonObject.getString("type");

String area = jsonObject.getString("area");

return new Tuple3<>(time, type, area);

}

});

// Filter out invalid records

DataStream<Tuple3<Long, String, String>> filterData = mapData.filter(

new FilterFunction<Tuple3<Long, String, String>>() {

@Override

public boolean filter(Tuple3<Long, String, String> value) throws Exception {

boolean flag = true;

if (value.f0 == 0) { // the time field is 0, i.e. the timestamp could not be parsed

flag = false;

}

return flag;

}

});

// Tag for data that arrives too late

OutputTag<Tuple3<Long, String, String>> outputTag = new OutputTag<Tuple3<Long, String, String>>("late-data"){};

/*

* Windowed aggregation

* */

SingleOutputStreamOperator<Tuple4<String, String, String, Long>> resultData = filterData.assignTimestampsAndWatermarks(

new MyWatermark())

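.keyBy(1, 2) // group by fields 1 and 2, i.e. type and area; field 0 is the timestamp

.window(TumblingEventTimeWindows.of(Time.minutes(1))) // every minute, aggregate the previous minute of data

.allowedLateness(Time.seconds(30)) // allow elements to be up to 30 s late

.sideOutputLateData(outputTag) // route data that is too late to the side output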

.apply(new MyAggFunction());

// Get the data that arrived too late

DataStream<Tuple3<Long, String, String>> sideOutput = resultData.getSideOutput(outputTag);

// Configure the Kafka sink for the too-late data

String outTopic = "lateLog";

Properties outprop = new Properties();

outprop.setProperty("bootstrap.servers", "master:9092");

// Set the transaction timeout

outprop.setProperty("transaction.timeout.ms", 60000*15+"");

FlinkKafkaProducer011<String> myProducer = new FlinkKafkaProducer011<String>(

outTopic,

new KeyedSerializationSchemaWrapper<String>(

new SimpleStringSchema()),

outprop,

FlinkKafkaProducer011.Semantic.EXACTLY_ONCE);

// Write the too-late data to Kafka

sideOutput.map(new MapFunction<Tuple3<Long, String, String>, String>() {

@Override

public String map(Tuple3<Long, String, String> value) throws Exception {

return value.f0+"\t"+value.f1+"\t"+value.f2;

}

}).addSink(myProducer);

/*

* Store the computed results in ES

* */

List<HttpHost> httpHosts = new ArrayList<>();

httpHosts.add(new HttpHost("master", 9200, "http"));

ElasticsearchSink.Builder<Tuple4<String, String, String, Long>> esSinkBuilder = new ElasticsearchSink.Builder<

Tuple4<String, String, String, Long>>(

httpHosts,

new ElasticsearchSinkFunction<Tuple4<String, String, String, Long>>() {

public IndexRequest createIndexRequest(Tuple4<String, String, String, Long> element) {

Map<String, Object> json = new HashMap<>();

json.put("time",element.f0);

json.put("type",element.f1);

json.put("area",element.f2);

json.put("count",element.f3);

// time + type + area keeps the id unique

String id = element.f0.replace(" ","_")+"-"+element.f1+"-"+element.f2;

return Requests.indexRequest()

.index("auditindex")

.type("audittype")

.id(id)

.source(json);

}

@Override

public void process(Tuple4<String, String, String, Long> element,

RuntimeContext ctx, RequestIndexer indexer) {

indexer.add(createIndexRequest(element));

}

}

);

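// Size of the bulk-write buffer; 1 is fine for testing, in production it usually needs to be larger

// ES writes are buffered; a value of 1 means every single record is flushed to ES immediately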

esSinkBuilder.setBulkFlushMaxActions(1);

resultData.addSink(esSinkBuilder.build());

env.execute("DataReport");

}

}

package henry.flink.function;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Collections;

import java.util.Date;

import java.util.Iterator;

/**

* @Author: Henry

* @Description: Aggregation function

* @Date: Create in 2019/5/29 14:35

**/

/**

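* Type parameters: IN: Tuple3<Long, String, String>

* OUT: Tuple4<String, String, String, Long>

* KEY: Tuple, the grouping fields; if keyBy() is given one field, the Tuple holds one field,

* if keyBy() is given two fields, the Tuple holds two fields (read below with getField(0) and getField(1))

* Window: TimeWindow

* Returns: nothing; results are emitted through the Collector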

*/

public class MyAggFunction implements WindowFunction<Tuple3<Long, String, String>, Tuple4<String, String, String, Long>, Tuple, TimeWindow>{

@Override

public void apply(Tuple tuple,

TimeWindow window,

Iterable<Tuple3<Long, String, String>> input,

Collector<Tuple4<String, String, String, Long>> out)

throws Exception {

// Read the grouping fields

String type = tuple.getField(0).toString();

String area = tuple.getField(1).toString();

Iterator<Tuple3<Long, String, String>> it = input.iterator();

// Collect the timestamps in order to find the time of the latest record

ArrayList<Long> arrayList = new ArrayList<>();

long count = 0;

while (it.hasNext()) {

Tuple3<Long, String, String> next = it.next();

arrayList.add(next.f0);

count++;

}

System.err.println(Thread.currentThread().getId()+", window fired, record count: "+count);

// Sort ascending (the default order)

Collections.sort(arrayList);

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

String time = sdf.format(new Date(arrayList.get(arrayList.size() - 1)));

// Assemble the result

Tuple4<String, String, String, Long> res = new Tuple4<>(time, type, area, count);

out.collect(res);

}

}

Watermark code for handling out-of-order events

package henry.flink.watermark;

import org.apache.flink.streaming.api.functions.AssignerWithPeriodicWatermarks;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.watermark.Watermark;

import javax.annotation.Nullable;

/**

* @Author: Henry

* @Description: Custom watermark

* @Date: Create in 2019/5/29 11:53

**/

public class MyWatermark implements AssignerWithPeriodicWatermarks<Tuple3<Long, String, String>> {

Long currentMaxTimestamp = 0L;

final Long maxOutOfOrderness = 10000L;// maximum allowed out-of-orderness is 10 s; the right value has to be determined by testing

@Nullable

@Override

public Watermark getCurrentWatermark() {

return new Watermark(currentMaxTimestamp-maxOutOfOrderness);

}

@Override

public long extractTimestamp(Tuple3<Long, String, String> element, long previousElementTimestamp) {

Long timestamp = element.f0;

currentMaxTimestamp = Math.max(timestamp, currentMaxTimestamp);

return timestamp;

}

}
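How this watermark interacts with the 30 s allowed lateness can be traced with a small plain-Java sketch. It is an illustration under stated assumptions, not Flink code: the class `WatermarkSketch` is hypothetical, the watermark is evaluated after every event rather than periodically, and the firing and lateness rules reflect my understanding of Flink's event-time semantics (a window fires once the watermark reaches its end minus 1 ms, and its elements count as too late once the watermark reaches that point plus the allowed lateness).

```java
class WatermarkSketch {
    static final long MAX_OUT_OF_ORDERNESS_MS = 10_000L; // same 10 s bound as MyWatermark
    static final long ALLOWED_LATENESS_MS = 30_000L;     // same 30 s as allowedLateness

    private long currentMaxTimestamp = 0L;

    // Records an event timestamp and returns the resulting watermark.
    long onEvent(long timestampMs) {
        currentMaxTimestamp = Math.max(timestampMs, currentMaxTimestamp);
        return currentWatermark();
    }

    // Watermark = largest timestamp seen so far minus the out-of-orderness bound.
    long currentWatermark() {
        return currentMaxTimestamp - MAX_OUT_OF_ORDERNESS_MS;
    }

    // A window [start, end) fires for the first time once the watermark reaches end - 1.
    boolean windowFired(long windowEndMs) {
        return currentWatermark() >= windowEndMs - 1;
    }

    // Elements of that window are too late (side output) once the watermark
    // reaches end - 1 + allowed lateness.
    boolean tooLate(long windowEndMs) {
        return currentWatermark() >= windowEndMs - 1 + ALLOWED_LATENESS_MS;
    }

    public static void main(String[] args) {
        WatermarkSketch sketch = new WatermarkSketch();
        long windowEnd = 60_000L; // the window [0, 60000)
        long[] events = {65_000L, 70_000L, 50_000L, 100_000L};
        for (long event : events) {
            long watermark = sketch.onEvent(event);
            System.out.println("event=" + event + " watermark=" + watermark
                    + " fired=" + sketch.windowFired(windowEnd)
                    + " tooLate=" + sketch.tooLate(windowEnd));
        }
    }
}
```

In this trace the window ending at 60000 fires when the event at 70000 arrives, still accepts the out-of-order event at 50000, and would send further elements to the side output after the event at 100000.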

Kafka data source producer code

package henry.flink.utils;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.common.serialization.StringSerializer;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.Properties;

import java.util.Random;

/**

* @Author: HongZhen

* @Description: Command for creating the Kafka topic

*

* bin/kafka-topics.sh --create --topic auditLog --zookeeper localhost:2181 --partitions 5 --replication-factor 1

*

* @Date: Create in 2019/5/29 11:05

**/

public class kafkaProducerDataReport {

public static void main(String[] args) throws Exception{

Properties prop = new Properties();

// Kafka broker address

prop.put("bootstrap.servers", "master:9092");

// Serializers for key and value

prop.put("key.serializer", StringSerializer.class.getName());

prop.put("value.serializer", StringSerializer.class.getName());

// Topic name

String topic = "auditLog";

// Create the producer connection

KafkaProducer<String, String> producer = new KafkaProducer<String,String>(prop);

//{"dt":"2018-01-01 10:11:22","type":"shelf","username":"shenhe1","area":"AREA_US"}

// Produce messages

while(true){

String message = "{\"dt\":\""+getCurrentTime()+"\",\"type\":\""+getRandomType()+"\",\"username\":\""+getRandomUsername()+"\",\"area\":\""+getRandomArea()+"\"}";

System.out.println(message);

producer.send(new ProducerRecord<String, String>(topic,message));

Thread.sleep(2000);

}

// Close the connection

//producer.close();

}

public static String getCurrentTime(){

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // yyyy = calendar year (YYYY would be the week-based year)

return sdf.format(new Date());

}

public static String getRandomArea(){

String[] types = {"AREA_US","AREA_CT","AREA_AR","AREA_IN","AREA_ID"};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

public static String getRandomType(){

String[] types = {"shelf","unshelf","black","child_shelf","child_unshelf"};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

public static String getRandomUsername(){

String[] types = {"shenhe1","shenhe2","shenhe3","shenhe4","shenhe5"};

Random random = new Random();

int i = random.nextInt(types.length);

return types[i];

}

}