ElasticSearch

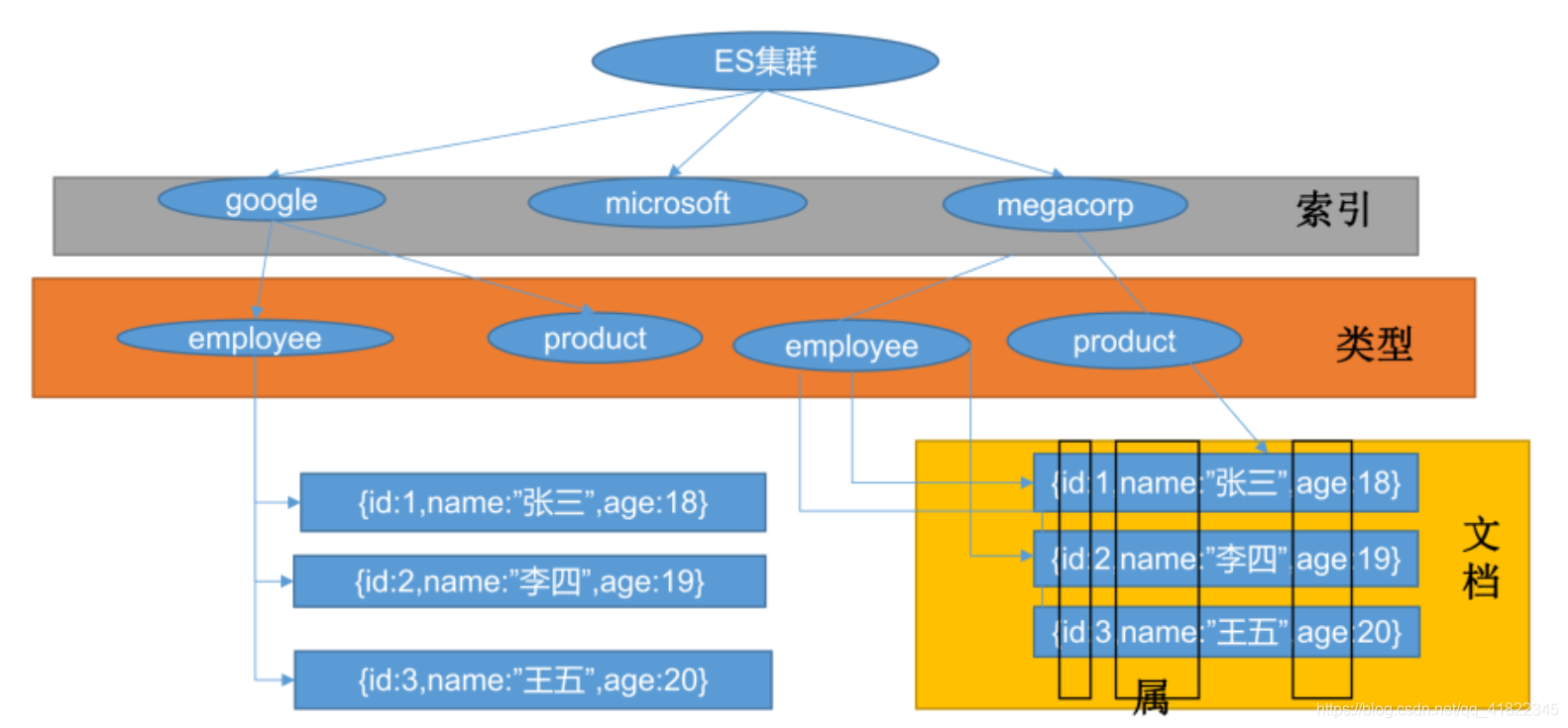

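ES Basic Concepts

index (database) > type (table) > document (row)

- Index

As a verb: indexing a document is comparable to an insert in MySQL.

As a noun: an index is comparable to a database in MySQL.

- Type

One or more types can be defined within an index.

A type is comparable to a table in MySQL: documents of the same type are stored together. (Types are deprecated in ES 7.x; typed URLs such as customer/external/1 still work in 7.4.2 but return deprecation warnings.)

- Document

A document is a single piece of data stored under a given type in a given index. Documents are in JSON format. A document is like a row in a MySQL table, and the columns of that row are called fields. In a relational database two tables are independent, so columns with the same name in different tables do not affect each other. ES is different: Elasticsearch is a search engine built on Lucene, and fields with the same name under different types of one index are handled identically in Lucene.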

ES Installation Steps

- Step 1: Pull Elasticsearch (storage and retrieval) and Kibana (visual search)

# the two versions must match

docker pull elasticsearch:7.4.2

docker pull kibana:7.4.2

- Step 2: Configure

# Mount directories inside the container to /mydata on the Linux host

# Editing files under /mydata then changes what the container sees

mkdir -p /mydata/elasticsearch/config

mkdir -p /mydata/elasticsearch/data

# Allow ES to be reached from any remote machine

echo "http.host: 0.0.0.0" >/mydata/elasticsearch/config/elasticsearch.yml

# Recursively change permissions so that ES can access the directory

chmod -R 777 /mydata/elasticsearch/

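- Step 3: Start ES

# 9200 is the HTTP port for client requests; 9300 is the transport port for communication between cluster nodes

# the first -e runs ES in single-node mode

# the second -e sets the JVM heap size; the small value here is for testing, production can use up to about 32G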

docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \

-e "discovery.type=single-node" \

-e ES_JAVA_OPTS="-Xms64m -Xmx512m" \

-v /mydata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \

-v /mydata/elasticsearch/data:/usr/share/elasticsearch/data \

-v /mydata/elasticsearch/plugins:/usr/share/elasticsearch/plugins \

-d elasticsearch:7.4.2

# Start Elasticsearch automatically when Docker starts

docker update elasticsearch --restart=always

- Step 4: Start Kibana

# ELASTICSEARCH_HOSTS points Kibana at the ES HTTP port 9200; 5601 is the port of the Kibana home page

docker run --name kibana -e ELASTICSEARCH_HOSTS=http://192.168.168.101:9200 -p 5601:5601 -d kibana:7.4.2

# Start Kibana automatically when Docker starts

docker update kibana --restart=always

- Step 5: Test

View the Elasticsearch version information: http://192.168.168.101:9200

Open the Kibana console page: http://192.168.168.101:5601/app/kibana

Basic Retrieval

Queries

View Elasticsearch node information: http://192.168.168.101:9200/_cat/nodes

View Elasticsearch cluster health: http://192.168.168.101:9200/_cat/health

View the Elasticsearch master node: http://192.168.168.101:9200/_cat/master

List all indices, equivalent to show databases; in MySQL:

http://192.168.168.101:9200/_cat/indices

Creating a Document

# Save document 1 under the external type of the customer index

PUT customer/external/1

# In Postman (choose POST or PUT)

http://192.168.168.101:9200/customer/external/1

## Body raw json

{

"name":"John Doe"

}

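The response looks like this:

Fields that start with an underscore are metadata describing the stored document.

{

"_index": "customer", # the index (database) the document is in

"_type": "external", # the type the document is in

"_id": "1", # the id of the saved document

"_version": 1, # the version of the saved document

"result": "created", # a new document was created; if the same document is PUT again, result becomes updated and the version number increases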

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"_seq_no": 0,

"_primary_term": 1

}

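Viewing a Document

GET /customer/external/1

http://192.168.168.101:9200/customer/external/1

Optimistic locking: append if_seq_no and if_primary_term (for example "if_seq_no=1&if_primary_term=1") to a write request. The write is applied only when the document's current _seq_no and _primary_term match these values; otherwise ES rejects it with a version conflict.

# In Postman (choose PUT)

http://192.168.168.101:9200/customer/external/1?if_seq_no=18&if_primary_term=6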
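The compare-then-write behaviour behind if_seq_no and if_primary_term can be sketched without a running cluster. The TinyStore class below is a hypothetical in-memory stand-in written for this note, not Elasticsearch code; it only mimics the rule that a conditional write is rejected once the sequence number has moved on.

```python
class VersionConflict(Exception):
    """Raised when the caller's if_seq_no / if_primary_term is stale."""


class TinyStore:
    """Hypothetical in-memory stand-in for one primary shard (not ES code)."""

    def __init__(self):
        self.primary_term = 1
        self.next_seq_no = 0
        self.docs = {}  # doc id -> (source, seq_no)

    def put(self, doc_id, source, if_seq_no=None, if_primary_term=None):
        # A conditional write is only applied if nothing changed in between.
        if if_seq_no is not None:
            current = self.docs.get(doc_id)
            stale = (
                current is None
                or current[1] != if_seq_no
                or if_primary_term != self.primary_term
            )
            if stale:
                raise VersionConflict(f"document {doc_id} changed since seq_no {if_seq_no}")
        seq_no = self.next_seq_no
        self.next_seq_no += 1
        self.docs[doc_id] = (source, seq_no)
        return seq_no


store = TinyStore()
seen = store.put("1", {"name": "John Doe"})  # both clients read this seq_no

# The first conditional write succeeds and bumps the sequence number.
store.put("1", {"name": "Alice"}, if_seq_no=seen, if_primary_term=1)

# The second client still holds the old seq_no, so its write is rejected.
try:
    store.put("1", {"name": "Bob"}, if_seq_no=seen, if_primary_term=1)
    second_write = "applied"
except VersionConflict:
    second_write = "rejected"

print(second_write, store.docs["1"][0])
```

Against a real cluster the rejected client would re-read the document, pick up the new _seq_no, and retry.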

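Updating a Document

POST customer/external/1/_update

POST customer/external/1

PUT customer/external/1

## With _update the body must wrap the changes in a doc field, for example:

{

"doc":{

"name":"222"

}

}

Differences:

POST with _update compares the request against the existing document; if nothing changes, the operation is a no-op and the document version does not increase.

PUT, and POST without _update, always re-save the document and increase the version.

When to use which:

For highly concurrent updates, do not use _update.

For highly concurrent reads with only occasional updates, use _update: the document is compared first and re-saved only when it actually changed.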
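The difference between the two update styles can be sketched in a few lines. The functions below are hypothetical helpers that only imitate the versioning rule described above; the merge is shallow, which is a simplification made for this example.

```python
def update_with_doc(source, version, doc):
    """Imitates POST .../_update: merge doc, skip the write if nothing changed."""
    merged = {**source, **doc}  # shallow merge, a simplification
    if merged == source:
        return source, version, "noop"
    return merged, version + 1, "updated"


def replace_document(source, version, new_source):
    """Imitates PUT (or POST without _update): always re-save and bump version."""
    return dict(new_source), version + 1, "updated"


stored, version = {"name": "222"}, 1

# Same content through the _update path: nothing is written.
stored, version, first_result = update_with_doc(stored, version, {"name": "222"})

# Same content through a full replace: the version still increases.
stored, version, second_result = replace_document(stored, version, {"name": "222"})

print(first_result, second_result, version)
```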

Deleting a Document

## Delete the document with id=1

DELETE customer/external/1

## Delete the entire index

DELETE customer

# In Postman (choose DELETE)

http://192.168.168.101:9200/customer/external/1

# In Postman (choose DELETE)

http://192.168.168.101:9200/customer

Bulk Operations

POST /customer/external/_bulk

{"index":{"_id":"1"}}

{"name":"John Doe"}

{"index":{"_id":"2"}}

{"name":"John Doe"}

POST /_bulk

{"delete":{"_index":"website","_type":"blog","_id":"123"}}

{"create":{"_index":"website","_type":"blog","_id":"123"}}

{"title":"my first blog post"}

{"index":{"_index":"website","_type":"blog"}}

{"title":"my second blog post"}

{"update":{"_index":"website","_type":"blog","_id":"123"}}

{"doc":{"title":"my updated blog post"}}

# In Postman (choose POST)

http://192.168.168.101:9200/_bulk

##Body raw json

{"delete":{"_index":"website","_type":"blog","_id":"123"}}

{"create":{"_index":"website","_type":"blog","_id":"123"}}

{"title":"my first blog post"}

{"index":{"_index":"website","_type":"blog"}}

{"title":"my second blog post"}

{"update":{"_index":"website","_type":"blog","_id":"123"}}

{"doc":{"title":"my updated blog post"}}
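The bulk body is newline-delimited JSON: each action line is followed by a source line where the action needs one (delete has none), and the body has to end with a newline. A minimal sketch of assembling such a body, with bulk_body as a hypothetical helper name:

```python
import json


def bulk_body(actions):
    """Serialize (action, metadata, source) tuples into a _bulk request body."""
    lines = []
    for action, metadata, source in actions:
        lines.append(json.dumps({action: metadata}))
        if source is not None:  # delete carries no source line
            lines.append(json.dumps(source))
    # The final line of a bulk body must end with a newline.
    return "\n".join(lines) + "\n"


meta = {"_index": "website", "_type": "blog", "_id": "123"}
actions = [
    ("delete", meta, None),
    ("create", meta, {"title": "my first blog post"}),
    ("index", {"_index": "website", "_type": "blog"}, {"title": "my second blog post"}),
    ("update", meta, {"doc": {"title": "my updated blog post"}}),
]

body = bulk_body(actions)
print(body, end="")
```

When sending this body with an HTTP client, the Content-Type header would be application/x-ndjson (or application/json).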

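Advanced Retrieval

- First get the sample test data

https://github.com/elastic/elasticsearch/blob/master/docs/src/test/resources/accounts.json (the original sample data accounts.json can no longer be found there)

https://hub.fastgit.org/liuwen766/accounts.json/tree/main (a new copy of the sample data)

POST bank/account/_bulk

# In Postman (choose POST)

http://192.168.168.101:9200/bank/account/_bulk

## Body raw json: paste in the test data from the link below

https://hub.fastgit.org/liuwen766/accounts.json/tree/main

Searching

## Search using request parameters

GET bank/_search?q=*&sort=account_number:asc

Notes:

q=* # match everything

sort # the field to sort by

asc # ascending order

## Retrieve everything under bank, including type and docs

GET bank/_search

## The query matches 1000 documents, but only 10 are returned because size defaults to 10

GET bank/_search?q=*&sort=account_number:asc

## Search using the URI plus a request body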

GET /bank/_search

{

"query": { "match_all": {} },

"sort": [

{ "account_number": "asc" },

{ "balance":"desc"}

]

}

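## DSL (domain-specific language)

## Elasticsearch provides a JSON-style DSL for running queries. It is called the Query DSL, and it is very comprehensive.

## Example: remove the # comments before running it

GET bank/_search

{

"query": { # the query to run

"match_all": {}

},

"from": 0, # offset of the first document to return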

"size": 5,

"_source":["balance"],

"sort": [

{

"account_number": { # the field to sort the results by

"order": "desc" # descending

}

}

]

}

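## _source selects which fields are returned

## from and size page through the results: from is the offset of the first hit, size is the number of hits to return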

GET bank/_search

{

"query": {

"match_all": {}

},

"from": 0,

"size": 5,

"sort": [

{

"account_number": {

"order": "desc"

}

}

],

"_source": ["balance","firstname"]

}
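Paging with from and size comes down to one line of arithmetic. The sketch below builds the request body for a given page; search_page and its 1-based page argument are assumptions made for this example, not part of any client library.

```python
import json


def search_page(page, page_size, fields, sort_field, order="desc"):
    """Build a match_all search body for a 1-based page number."""
    return {
        "query": {"match_all": {}},
        "from": (page - 1) * page_size,  # offset of the first hit on this page
        "size": page_size,               # number of hits per page
        "_source": fields,               # only return these fields
        "sort": [{sort_field: {"order": order}}],
    }


body = search_page(page=3, page_size=5, fields=["balance", "firstname"],
                   sort_field="account_number")
print(json.dumps(body, indent=2))
```

Page 3 with five hits per page skips the first ten hits, so from is 10.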

## query/match: match query

GET bank/_search

{

"query": {

"match": {

"account_number": "20"

}

}

}

## On a string (text) field, match performs a full-text search

GET bank/_search

{

"query": {

"match": {

"address": "kings"

}

}

}

## query/match on a .keyword sub-field: the whole field value must match exactly

GET bank/_search

{

"query": {

"match": {

"address.keyword": "990 Mill" # append .keyword to the field name

}

}

}

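## query/match_phrase [phrase match: the query is not split into independent terms]

GET bank/_search

{

"query": {

"match_phrase": {

"address": "mill road" # do not match documents that contain only mill or only road; the whole phrase mill road must appear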

}

}

}

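## Difference between match on address.keyword and match_phrase: the former requires the whole field value to match exactly, the latter only requires the field to contain the phrase

## query/multi_match [match against multiple fields]

GET bank/_search

{

"query": {

"multi_match": { # the earlier match queries targeted a single field

"query": "mill",

"fields": [ # matches when state or address contains mill; both are not required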

"state",

"address"

]

}

}

}

## query/bool/must: compound query

GET bank/_search

{

"query":{

"bool":{ # find documents where gender is M and address contains mill

"must":[ # every condition listed here must match

{"match":{"address":"mill"}},

{"match":{"gender":"M"}}

]

}

}

}

## must_not: the listed conditions must not match

GET bank/_search

{

"query": {

"bool": {

"must": [

{ "match": { "gender": "M" }},

{ "match": {"address": "mill"}}

],

"must_not": [ # must not have this value

{ "match": { "age": "38" }}

]

}

}

}

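## should: documents ought to satisfy the should conditions. A match raises the document's relevance score but does not change which documents are returned. If the bool query contains only should clauses (no must or filter), at least one should clause has to match, so should then does change the result set. (The higher the relevance, the higher the score.)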

GET bank/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"gender": "M"

}

},

{

"match": {

"address": "mill"

}

}

],

"must_not": [

{

"match": {

"age": "18"

}

}

],

"should": [

{

"match": { # prefer documents whose lastname is Wallace

"lastname": "Wallace"

}

}

]

}

}

}

## query/filter [result filtering]: must and should contribute to the score; must_not and filter do not

GET bank/_search

{

"query": {

"bool": {

"must": [

{ "match": {"address": "mill" } }

],

"filter": { # query.bool.filter

"range": {

"balance": { # the field to filter on

"gte": "10000",

"lte": "20000"

}

}

}

}

}

}
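The four bool clauses can be combined freely. As a minimal sketch, the helper below assembles a bool query body from the clauses used in the examples above and leaves out any clause that is empty; bool_query and match are hypothetical helper names chosen for this note.

```python
import json


def match(field, value):
    """Shorthand for a single match clause."""
    return {"match": {field: value}}


def bool_query(must=(), should=(), must_not=(), filters=()):
    """Build a bool query body, omitting clauses that were not given."""
    clauses = {
        "must": list(must),          # must match, contributes to the score
        "should": list(should),      # optional, raises the score when it matches
        "must_not": list(must_not),  # must not match, no score contribution
        "filter": list(filters),     # must match, no score contribution
    }
    return {"query": {"bool": {name: items for name, items in clauses.items() if items}}}


body = bool_query(
    must=[match("gender", "M"), match("address", "mill")],
    should=[match("lastname", "Wallace")],
    must_not=[match("age", "18")],
    filters=[{"range": {"balance": {"gte": 10000, "lte": 20000}}}],
)
print(json.dumps(body, indent=2))
```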

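## query/term: like match, it matches on the value of a field, but the search value is not analyzed.

## Use match for full-text (text) fields and term for exact values in non-text fields. (ES analyzes text values when storing them, so search text with match.) The term query below on the text field address is therefore not expected to return hits: the indexed tokens are lowercased single words, while the term "mill Road" is looked up as-is.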

GET bank/_search

{

"query": {

"term": {

"address": "mill Road"

}

}

}
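Why a term query on a text field tends to miss can be sketched with a rough imitation of the analysis step. The analyze function below is a simplified stand-in for the standard analyzer (split on non-word characters, lowercase), written only for this illustration.

```python
import re


def analyze(text):
    """Rough stand-in for the standard analyzer: split into words, lowercase."""
    return [token.lower() for token in re.findall(r"\w+", text)]


def term_hits(indexed_tokens, value):
    """term: the search value is looked up as-is, without analysis."""
    return value in indexed_tokens


def match_hits(indexed_tokens, query):
    """match: the query is analyzed too; any matching token is a hit (default OR)."""
    return any(token in indexed_tokens for token in analyze(query))


indexed = analyze("990 Mill Road")  # tokens stored for the text field

print(indexed)
print(term_hits(indexed, "mill Road"))   # whole string is not one of the tokens
print(match_hits(indexed, "mill Road"))  # analyzed into mill / road, both indexed
print(term_hits(indexed, "mill"))        # a single lowercase token does exist
```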

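## aggs/agg1 (aggregations)

## Aggregations group data and extract statistics from it. The simplest aggregations are roughly equivalent to SQL GROUP BY and the SQL aggregate functions.

## Example 1: find the age distribution and average age of everyone whose address contains mill, without showing the individual documents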

GET bank/_search

{

"query": { # find documents whose address contains mill

"match": {

"address": "Mill"

}

},

"aggs": { # aggregate over the query results

"ageAgg": { # aggregation name, chosen freely

"terms": { # distribution of the distinct values

"field": "age",

"size": 10

}

},

"ageAvg": {

"avg": { # average of age

"field": "age"

}

},

"balanceAvg": {

"avg": { # average of balance

"field": "balance"

}

}

},

"size": 0 # do not return the matching documents

}

## aggs/aggName/aggs/aggName: sub-aggregations

## Example 2: aggregate by age and compute the average balance of the people in each age bucket

GET bank/_search

{

"query": {

"match_all": {}

},

"aggs": {

"ageAgg": {

"terms": { # distribution

"field": "age",

"size": 100

},

"aggs": { # sibling of terms, nested inside ageAgg

"ageAvg": { # average balance per age bucket

"avg": {

"field": "balance"

}

}

}

}

},

"size": 0

}

## Example 3: find the age distribution, and for each age bucket the average balance of M, the average balance of F, and the overall average balance

GET bank/_search

{

"query": {

"match_all": {}

},

"aggs": {

"ageAgg": {

"terms": { # age distribution

"field": "age",

"size": 100

},

"aggs": { # sub-aggregation

"genderAgg": {

"terms": { # gender distribution

"field": "gender.keyword" # note: aggregate a text field through its .keyword sub-field

},

"aggs": { # sub-aggregation

"balanceAvg": {

"avg": { # average balance for each gender

"field": "balance"

}

}

}

},

"ageBalanceAvg": {

"avg": { # average balance of the whole age bucket (both genders)

"field": "balance"

}

}

}

}

},

"size": 0

}
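What a terms aggregation with an avg sub-aggregation computes can be reproduced on a handful of rows in plain Python. The three accounts below are made-up illustration values, not taken from accounts.json, and terms_with_avg is a hypothetical helper.

```python
from collections import defaultdict

# Made-up sample rows for illustration only.
accounts = [
    {"age": 30, "gender": "M", "balance": 1000},
    {"age": 30, "gender": "F", "balance": 3000},
    {"age": 40, "gender": "M", "balance": 5000},
]


def terms_with_avg(docs, bucket_field, value_field):
    """Group docs by bucket_field (terms) and average value_field per bucket (avg)."""
    buckets = defaultdict(list)
    for doc in docs:
        buckets[doc[bucket_field]].append(doc[value_field])
    return {
        key: {"doc_count": len(values), "avg": sum(values) / len(values)}
        for key, values in buckets.items()
    }


by_age = terms_with_avg(accounts, "age", "balance")
by_gender = terms_with_avg(accounts, "gender", "balance")
print(by_age)
print(by_gender)
```

Each key corresponds to one bucket of the terms aggregation, and doc_count mirrors the per-bucket count that ES reports.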

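Mapping (Field Mapping)

A mapping defines how a document and the fields it contains are stored and indexed. For example, a mapping can define:

which string fields should be treated as full-text fields;

which fields contain numbers, dates, or geolocations;

whether all fields in the document can be indexed (the _all setting);

the format of dates;

custom rules that control the mapping of dynamically added fields.

View the mapping: GET bank/_mapping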

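Field Types

- String

text: used for full-text indexing; at search time the analyzer tokenizes the query before matching

keyword: not tokenized; the search must match the complete value

- Numeric

Integer: byte, short, integer, long

Floating point: float, half_float, scaled_float, double

- Date: date

- Range

integer_range, long_range, float_range, double_range, date_range

- Boolean: boolean

- Binary: binary. The value is treated as a base64-encoded string; by default it is not stored and is not searchable

- Complex types

Array: Array

Object: object; an object can contain nested objects.

Nested: nested, used for arrays of JSON objects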

## Create a mapping

PUT /my_index

{

"mappings": {

"properties": {

"age": {

"type": "integer"

},

"email": {

"type": "keyword" # keyword: matched exactly, not analyzed

},

"name": {

"type": "text" # full-text search: analyzed when stored, and the query is analyzed and matched at search time

}

}

}

}

## View the mapping

GET /my_index

## Add a new field mapping

PUT /my_index/_mapping

{

"properties": {

"employee-id": {

"type": "keyword",

"index": false # the field is not indexed, so it cannot be searched

}

}

}

## Data migration

# Syntax for 6.0 and later

POST _reindex

{

"source":{

"index":"twitter"

},

"dest":{

"index":"new_twitters"

}

}

# Syntax for older versions

POST _reindex

{

"source":{

"index":"twitter",

"type":"twitter"

},

"dest":{

"index":"new_twitters"

}

}

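## Changing a mapping: here age should become integer. The type of an existing field cannot be changed in place, so create a new index with the desired mapping and migrate the data.

GET /bank/_mapping

# shows

"age":{"type":"long"}

# First create the new index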

PUT /newbank

{

"mappings": {

"properties": {

"account_number": {

"type": "long"

},

"address": {

"type": "text"

},

"age": {

"type": "integer"

},

"balance": {

"type": "long"

},

"city": {

"type": "keyword"

},

"email": {

"type": "keyword"

},

"employer": {

"type": "keyword"

},

"firstname": {

"type": "text"

},

"gender": {

"type": "keyword"

},

"lastname": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"state": {

"type": "keyword"

}

}

}

}

# Check again

GET /newbank/_mapping

# age is now mapped as integer in the new index

"age":{"type":"integer"}

## Migrate the data from bank to newbank

POST _reindex

{

"source": {

"index": "bank",

"type": "account"

},

"dest": {

"index": "newbank"

}

}
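The whole migration is a short sequence of requests: create the new index with the desired mapping, copy the data with _reindex, then check the result. The sketch below only builds that sequence as data; migration_requests is a hypothetical helper, and how the requests are sent (Kibana console, Postman, an HTTP client) is left open.

```python
import json


def migration_requests(old_index, new_index, properties):
    """Return the (method, path, body) steps for moving data to a re-mapped index."""
    return [
        # 1. Create the target index with the mapping we actually want.
        ("PUT", f"/{new_index}", {"mappings": {"properties": properties}}),
        # 2. Copy every document from the old index into the new one.
        ("POST", "/_reindex", {"source": {"index": old_index},
                               "dest": {"index": new_index}}),
        # 3. Inspect the mapping of the new index.
        ("GET", f"/{new_index}/_mapping", None),
    ]


steps = migration_requests("bank", "newbank", {"age": {"type": "integer"}})
for method, path, body in steps:
    print(method, path, json.dumps(body) if body is not None else "")
```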

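Tokenization

A tokenizer receives a stream of characters, splits it into individual tokens (usually individual words), and outputs a stream of tokens. The tokenizer also records the order or position of each term (used for phrase and word-proximity queries) and the start and end character offsets of the original word that the term represents (used for highlighting search results).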
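The bookkeeping a tokenizer does (token text, position, start and end offsets) can be sketched with a regular expression. This is a simplified word tokenizer written for illustration, not the Lucene implementation; the field names follow the shape of the _analyze response.

```python
import re


def tokenize(text):
    """Split text into word tokens, recording position and character offsets."""
    tokens = []
    for position, found in enumerate(re.finditer(r"\w+", text)):
        tokens.append({
            "token": found.group(),
            "start_offset": found.start(),  # index of the first character
            "end_offset": found.end(),      # index just past the last character
            "position": position,           # order of the token in the stream
        })
    return tokens


tokens = tokenize("I am the one.")
for token in tokens:
    print(token)
```

The offsets let a highlighter mark the original characters, and the positions let a phrase query check that terms are adjacent.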

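Elasticsearch ships with many built-in tokenizers and analyzers, which can be used to build custom analyzers.

Text in every language is analyzed with the Standard Analyzer by default, but it handles Chinese poorly, so a Chinese analyzer needs to be installed. Download: https://github.com/medcl/elasticsearch-analysis-ik/releases

- Installing the IK analyzer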

[root@k8s101 ~]# curl http://localhost:9200

{

"name" : "4c163b58400e",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "M4JiMlNeSmeZkMngqp4c_A",

"version" : {

"number" : "7.4.2",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "2f90bbf7b93631e52bafb59b3b049cb44ec25e96",

"build_date" : "2019-10-28T20:40:44.881551Z",

"build_snapshot" : false,

"lucene_version" : "8.2.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

[root@k8s101 ~]# sudo docker exec -it elasticsearch /bin/bash

[root@66718a266132 elasticsearch]# pwd

/usr/share/elasticsearch


[root@66718a266132 elasticsearch]# yum install wget

# Download IK 7.4.2 (the plugin version must match the ES version)

[root@66718a266132 elasticsearch]# wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.2/elasticsearch-analysis-ik-7.4.2.zip

[root@66718a266132 elasticsearch]# unzip elasticsearch-analysis-ik-7.4.2.zip -d ik

# Move it into the plugins directory

[root@66718a266132 elasticsearch]# mv ik plugins/

[root@66718a266132 elasticsearch]# chmod -R 777 plugins/ik

[root@66718a266132 elasticsearch]# rm -rf elasticsearch-analysis-ik-7.4.2.zip

## Restart ES

docker restart elasticsearch

- Testing the analyzers

POST _analyze

{

"analyzer": "standard",

"text": "I am the one."

}

GET _analyze

{

"text":"我是中国人"

}

GET _analyze

{

"analyzer": "ik_smart",

"text":"我是中国人"

}

GET _analyze

{

"analyzer": "ik_max_word",

"text":"我是中国人"

}

- Custom dictionary

Edit IKAnalyzer.cfg.xml in /usr/share/elasticsearch/plugins/ik/config

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">

<properties>

<comment>IK Analyzer extension configuration</comment>

<!-- Configure your own extension dictionary here -->

<entry key="ext_dict"></entry>

<!-- Configure your own extension stopword dictionary here -->

<entry key="ext_stopwords"></entry>

<!-- Configure a remote extension dictionary here -->

<entry key="remote_ext_dict">http://192.168.168.101/es/fenci.txt</entry>

<!-- Configure a remote extension stopword dictionary here -->

<!-- <entry key="remote_ext_stopwords">words_location</entry> -->

</properties>

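After editing the file, restart the Elasticsearch container or the change will not take effect: docker restart elasticsearch.

After the update, ES applies the new dictionary only to newly indexed data. Existing data is not re-analyzed; to re-analyze existing data, run: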

POST my_index/_update_by_query?conflicts=proceed