1 Write the spark-submit script

#!/bin/bash

export HADOOP_USER_NAME=${9}

USER=${1}

echo "User: ${USER}"

L_DIR=${2}

JAR_PATH=${3}

echo "JAR_PATH: ${JAR_PATH}"

MAIN_CALSS=${4}

echo "MAIN_CALSS: ${MAIN_CALSS}"

EXE_MEM=${5}

echo "EXE_MEM: ${EXE_MEM}"

EXE_NUM=${6}

echo "EXE_NUM: ${EXE_NUM}"

EXE_CORE=${7}

echo "EXE_CORE: ${EXE_CORE}"

PARAMS=${8}

echo "PARAMS: ${PARAMS}"

TMP_DIR=$(cat /proc/sys/kernel/random/uuid)

# Create a folder under the temporary directory

if [ ! -d "${L_DIR}/${USER}/${TMP_DIR}" ]; then

echo "Creating temporary directory: ${TMP_DIR}"

mkdir -p ${L_DIR}/${USER}/${TMP_DIR}

fi

# Download the JAR package

hdfs dfs -get ${JAR_PATH} ${L_DIR}/${USER}/${TMP_DIR}

# Extract the executable file name, without the path

JAR_SPLIT=($(echo ${JAR_PATH} | tr '/' ' '))

# Grant permissions on the execution directory

chmod -R 777 ${L_DIR}/${USER}/${TMP_DIR}/

# Switch the executing user

export HADOOP_USER_NAME=${1}

# Run the job

echo "/usr/bin/spark2-submit --class ${MAIN_CALSS} --driver-memory 6G --master yarn --deploy-mode client --executor-memory ${EXE_MEM}G --num-executors ${EXE_NUM} --executor-cores ${EXE_CORE} ${JAR_SPLIT[-1]} ${PARAMS}"

/usr/bin/spark2-submit --class ${MAIN_CALSS} --driver-memory 6G --master yarn --deploy-mode client --executor-memory ${EXE_MEM}G --num-executors ${EXE_NUM} --executor-cores ${EXE_CORE} --conf spark.yarn.executor.memoryOverhead=10240 ${L_DIR}/${USER}/${TMP_DIR}/${JAR_SPLIT[-1]} ${PARAMS}

echo "Deleting temporary directory: ${TMP_DIR}"

rm -rf ${L_DIR}/${USER}/${TMP_DIR}
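The script obtains the JAR file name by splitting the path on `/` and taking the last array element, which depends on bash arrays and breaks if the path contains spaces. As a hedged alternative, the same name can be obtained with `basename` or with POSIX parameter expansion. The sample path below is hypothetical and only illustrates the idea:

```shell
#!/bin/sh
# Hypothetical HDFS path, used only for illustration.
JAR_PATH="/user/demo/jars/example-job.jar"

# basename strips everything up to and including the final '/'.
NAME_FROM_BASENAME=$(basename "${JAR_PATH}")

# POSIX parameter expansion removes the longest prefix matching '*/'
# without starting an external process.
NAME_FROM_EXPANSION=${JAR_PATH##*/}

echo "${NAME_FROM_BASENAME}"
echo "${NAME_FROM_EXPANSION}"
```

Both lines print `example-job.jar`. Either variable could stand in for `${JAR_SPLIT[-1]}` in the submit command.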

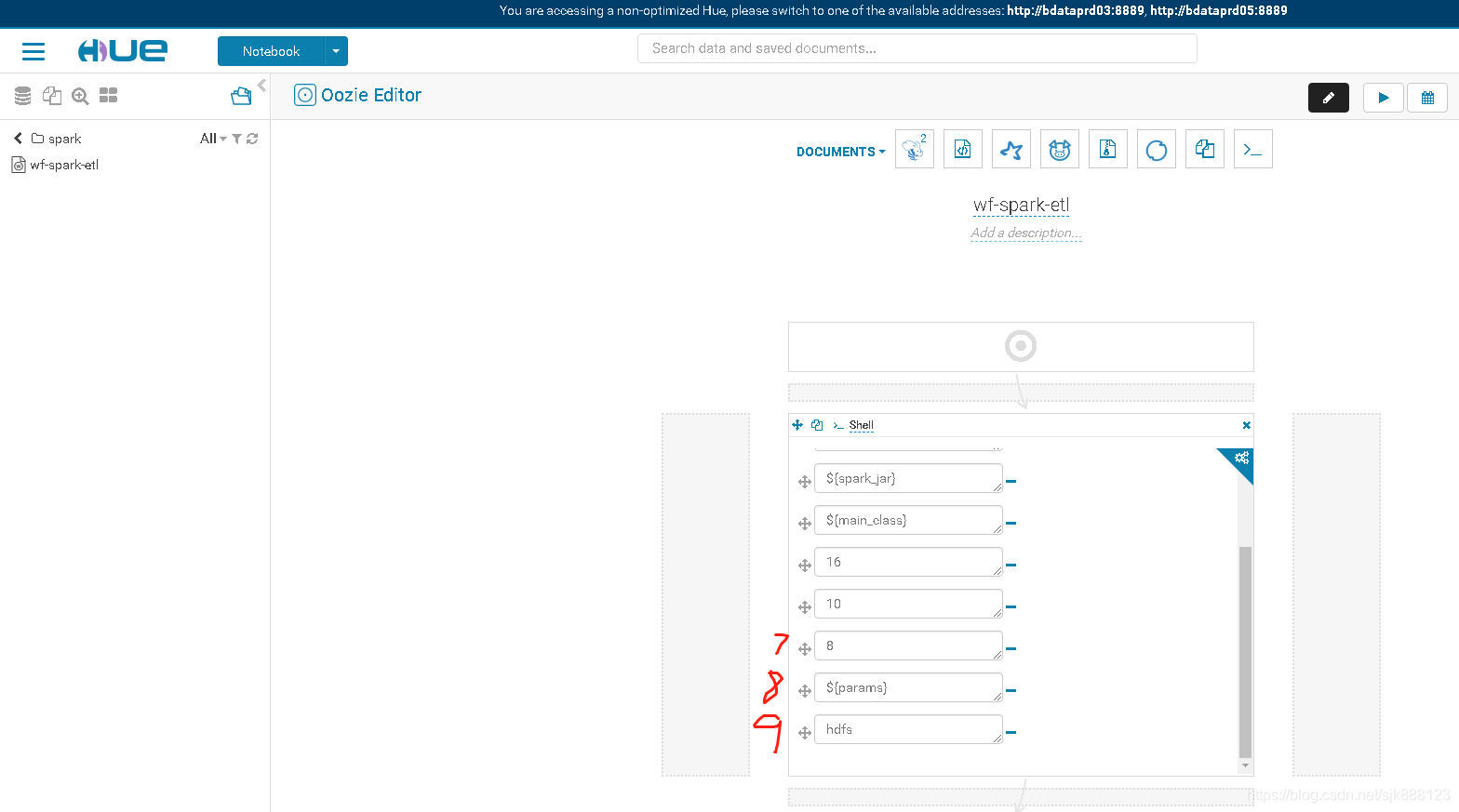

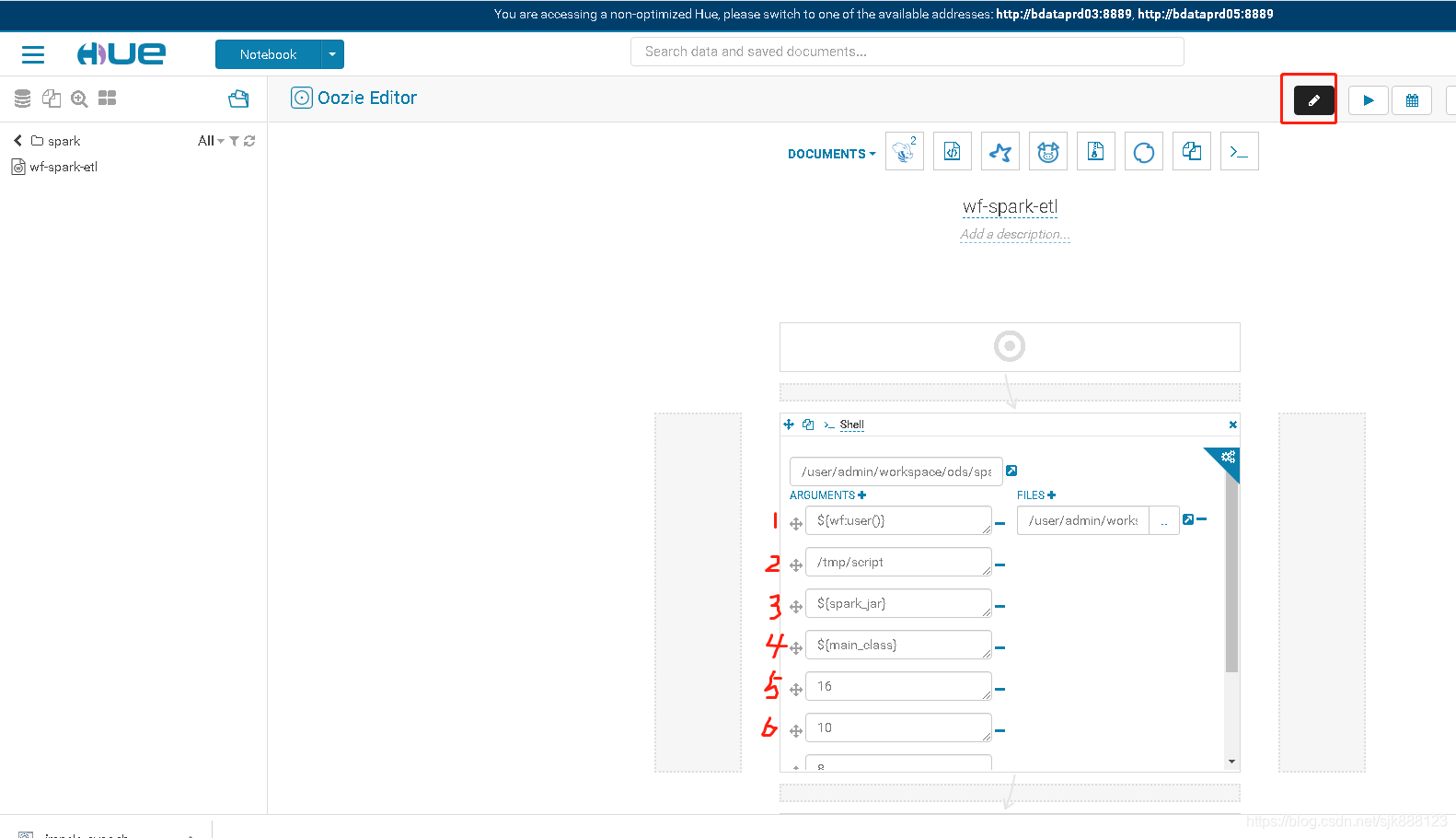

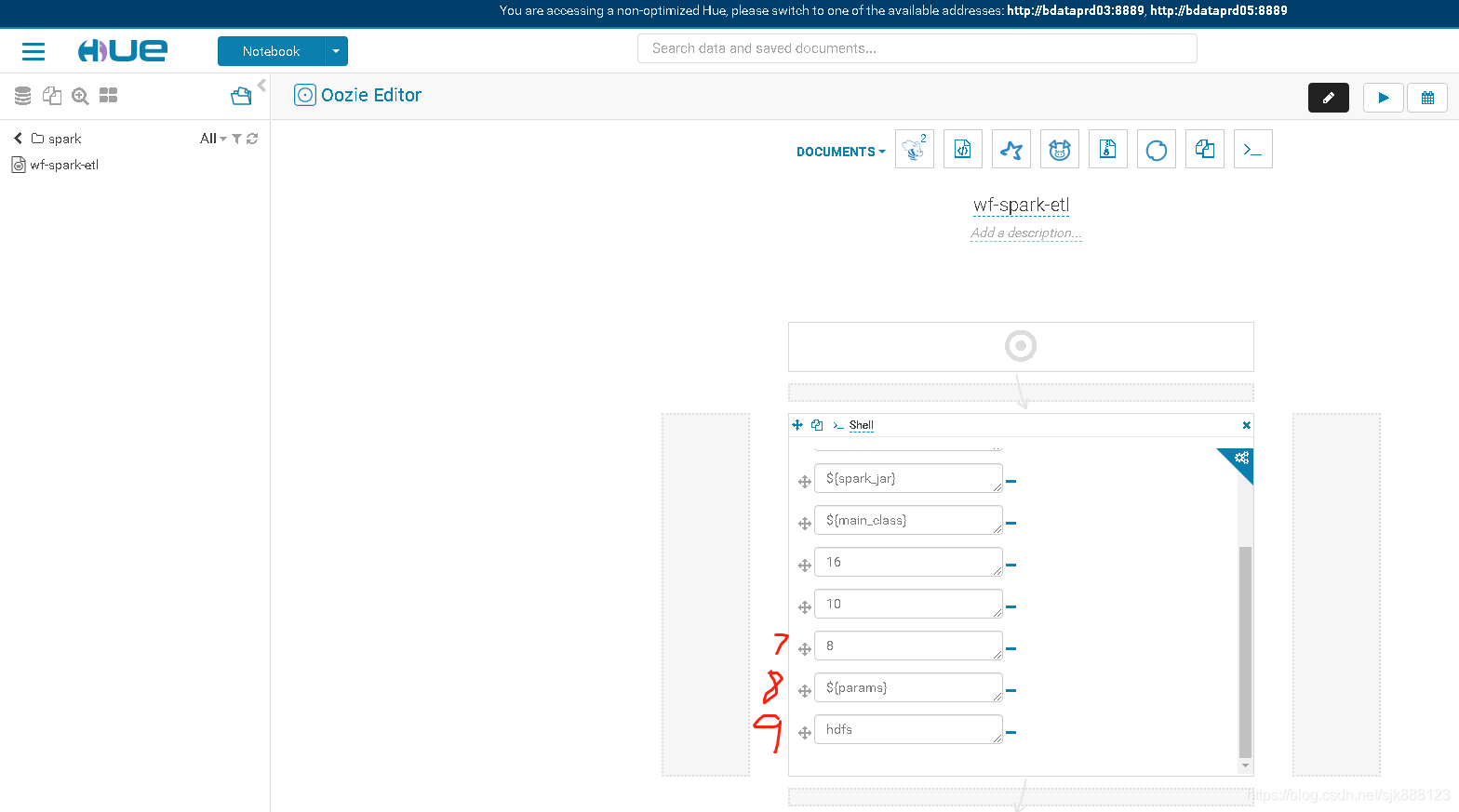
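The script in section 1 deletes its temporary directory only when it reaches the last line, so a failed download or a killed job can leave the directory behind. One possible way to make the cleanup unconditional is an `EXIT` trap. This is a minimal sketch, not part of the original script; `BASE_DIR`, `WORK_DIR`, and `cleanup` are hypothetical names, and `mktemp -d` is used here in place of the UUID read from `/proc`:

```shell
#!/bin/sh
# Hypothetical base directory; the original script builds its path from ${L_DIR}/${USER}.
BASE_DIR=${TMPDIR:-/tmp}

# mktemp -d creates a uniquely named directory and prints its path.
WORK_DIR=$(mktemp -d "${BASE_DIR}/spark_job.XXXXXX") || exit 1

# Remove the working directory whenever the shell exits, on success or failure.
cleanup() {
    rm -rf "${WORK_DIR}"
}
trap cleanup EXIT

echo "working in ${WORK_DIR}"
# ... download the JAR and run spark2-submit here ...
```

With the trap in place, the explicit `rm -rf` at the end of the script becomes optional.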

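2 Edit the script's default parameters

The values in fields 1, 2, 3, … are the values of the script's parameters; an entry written as ${} is a parameter that must be filled in manually when the task is submitted.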
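According to the script, the nine positional parameters are: `$1` user, `$2` local base directory, `$3` HDFS path of the JAR, `$4` main class, `$5` executor memory in GB, `$6` number of executors, `$7` cores per executor, `$8` job parameters, and `$9` the initial `HADOOP_USER_NAME`. Because a missing or misplaced value shifts every later parameter, a small check before submission may help. The following is a sketch under those assumptions; `check_args` is a hypothetical helper and the sample values are placeholders:

```shell
#!/bin/sh
# check_args: hypothetical helper that validates the nine positional parameters.
check_args() {
    if [ "$#" -ne 9 ]; then
        echo "expected 9 arguments, got $#" >&2
        return 1
    fi
    # $5 executor memory (GB), $6 executor count, $7 cores per executor
    # must consist of digits only.
    for n in "$5" "$6" "$7"; do
        case "$n" in
            ''|*[!0-9]*)
                echo "not a non-negative integer: $n" >&2
                return 1
                ;;
        esac
    done
    return 0
}

# Placeholder values, not taken from the original setup.
check_args demo_user /tmp/spark_jobs /user/demo/jars/example-job.jar \
    com.example.Main 4 10 2 "2024-01-01" hdfs && echo "arguments look valid"
```

Called at the top of the submit script as `check_args "$@" || exit 1`, the helper would stop the run before any directory is created or any JAR is downloaded.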

3 Add parameters