?

Data Replication

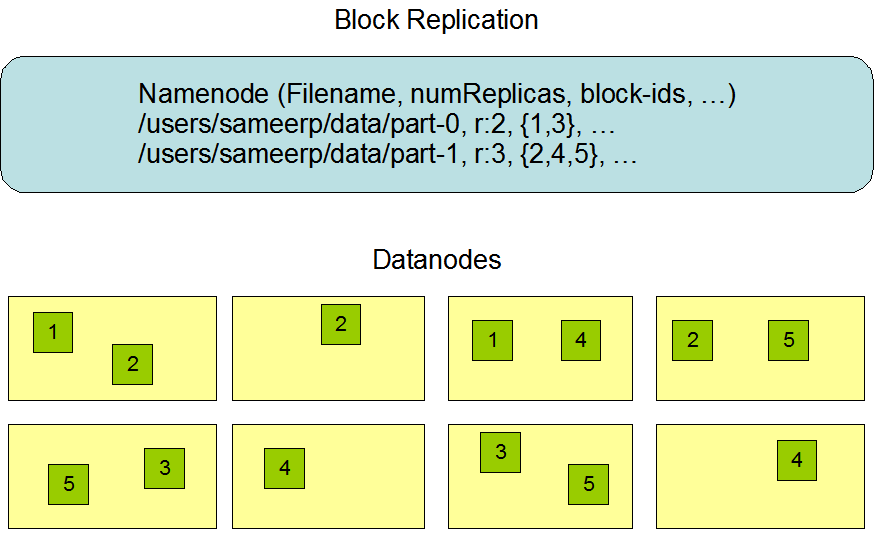

HDFS is designed to reliably store very large files across machines in a large cluster. It stores each file as a sequence of blocks. The blocks of a file are replicated for fault tolerance. The block size and replication factor are configurable per file.

All blocks in a file except the last block are the same size, while users can start a new block without filling out the last block to the configured block size after the support for variable length block was added to append and hsync.

An application can specify the number of replicas of a file. The replication factor can be specified at file creation time and can be changed later. Files in HDFS are write-once (except for appends and truncates) and have strictly one writer at any time.

The NameNode makes all decisions regarding replication of blocks. It periodically receives a Heartbeat and a Blockreport from each of the DataNodes in the cluster. Receipt of a Heartbeat implies that the DataNode is functioning properly. A Blockreport contains a list of all blocks on a DataNode.

HDFS设计用于在大型集群中跨机器可靠地存储非常大的文件。它将每个文件存储为一系列块。复制文件块以实现容错。每个文件的块大小和复制因子都是可配置的。

文件中除最后一个块外的所有块的大小都相同,而在将对可变长度块的支持添加到append和hsync后,用户可以启动新块,而无需将最后一个块填充到配置的块大小。

应用程序可以指定文件副本的数量。复制系数可以在文件创建时指定,以后可以更改。HDFS中的文件只写一次(除了附加和截断),并且在任何时候都有一个写入程序。

NameNode做出有关块复制的所有决策。它定期从集群中的每个数据节点接收心跳信号和块报告。接收到心跳信号意味着DataNode功能正常。Blockreport包含DataNode上所有块的列表。

The placement of replicas is critical to HDFS reliability and performance. Optimizing replica placement distinguishes HDFS from most other distributed file systems. This is a feature that needs lots of tuning and experience. The purpose of a rack-aware replica placement policy is to improve data reliability, availability, and network bandwidth utilization. The current implementation for the replica placement policy is a first effort in this direction. The short-term goals of implementing this policy are to validate it on production systems, learn more about its behavior, and build a foundation to test and research more sophisticated policies.

Large HDFS instances run on a cluster of computers that commonly spread across many racks. Communication between two nodes in different racks has to go through switches. In most cases, network bandwidth between machines in the same rack is greater than network bandwidth between machines in different racks.

The NameNode determines the rack id each DataNode belongs to via the process outlined in Hadoop Rack Awareness. A simple but non-optimal policy is to place replicas on unique racks. This prevents losing data when an entire rack fails and allows use of bandwidth from multiple racks when reading data. This policy evenly distributes replicas in the cluster which makes it easy to balance load on component failure. However, this policy increases the cost of writes because a write needs to transfer blocks to multiple racks.

For the common case, when the replication factor is three, HDFS’s placement policy is to put one replica on the local machine if the writer is on a datanode, otherwise on a random datanode in the same rack as that of the writer, another replica on a node in a different (remote) rack, and the last on a different node in the same remote rack. This policy cuts the inter-rack write traffic which generally improves write performance. The chance of rack failure is far less than that of node failure; this policy does not impact data reliability and availability guarantees. However, it does not reduce the aggregate network bandwidth used when reading data since a block is placed in only two unique racks rather than three. With this policy, the replicas of a block do not evenly distribute across the racks. Two replicas are on different nodes of one rack and the remaining replica is on a node of one of the other racks. This policy improves write performance without compromising data reliability or read performance.

If the replication factor is greater than 3, the placement of the 4th and following replicas are determined randomly while keeping the number of replicas per rack below the upper limit (which is basically (replicas - 1) / racks + 2).

Because the NameNode does not allow DataNodes to have multiple replicas of the same block, maximum number of replicas created is the total number of DataNodes at that time.

After the support for Storage Types and Storage Policies was added to HDFS, the NameNode takes the policy into account for replica placement in addition to the rack awareness described above. The NameNode chooses nodes based on rack awareness at first, then checks that the candidate node have storage required by the policy associated with the file. If the candidate node does not have the storage type, the NameNode looks for another node. If enough nodes to place replicas can not be found in the first path, the NameNode looks for nodes having fallback storage types in the second path.

The current, default replica placement policy described here is a work in progress.

副本的放置对于HDFS的可靠性和性能至关重要。优化副本放置使HDFS区别于大多数其他分布式文件系统。这是一个需要大量调整和经验的功能。机架感知副本放置策略的目的是提高数据可靠性、可用性和网络带宽利用率。副本放置策略的当前实现是这方面的第一项工作。实施这一政策的短期目标是在生产系统上验证它,了解它的行为,并为测试和研究更复杂的政策打下基础。

大型HDFS实例运行在通常分布在多个机架上的计算机集群上。不同机架中的两个节点之间的通信必须通过交换机进行。在大多数情况下,同一机架中机器之间的网络带宽大于不同机架中机器之间的网络带宽。

NameNode通过Hadoop rack Aware中概述的过程确定每个DataNode所属的机架id。一种简单但非最佳的策略是将副本放置在唯一的机架上。这样可以防止在整个机架出现故障时丢失数据,并允许在读取数据时使用多个机架的带宽。此策略在群集中均匀分布副本,这使得在组件出现故障时可以轻松平衡负载。但是,此策略会增加写入成本,因为写入需要将块传输到多个机架。

对于常见情况,当复制系数为3时,HDFS的放置策略是,如果写入程序位于datanode上,则在本地计算机上放置一个副本,否则在与写入程序位于同一机架的随机datanode上,在不同(远程)机架的节点上放置另一个副本,在同一远程机架的不同节点上放置最后一个副本。此策略减少机架间写入通信量,这通常会提高写入性能。机架故障的概率远小于节点故障的概率;此策略不会影响数据可靠性和可用性保证。但是,它不会减少读取数据时使用的总网络带宽,因为一个块只放在两个而不是三个唯一的机架中。使用此策略,块的副本不会均匀分布在机架上。两个副本位于一个机架的不同节点上,其余副本位于另一个机架的节点上。此策略在不影响数据可靠性或读取性能的情况下提高了写入性能。

如果复制系数大于3,则随机确定第4个和后续复制副本的位置,同时保持每个机架的复制副本数量低于上限(基本上为(复制副本-1)/机架+2)。

由于NameNode不允许DataNodes具有同一块的多个副本,因此创建的最大副本数是当时DataNodes的总数。

在HDFS中添加了对存储类型和存储策略的支持之后,除了上述机架感知之外,NameNode还考虑了副本放置的策略。NameNode首先基于机架感知选择节点,然后检查候选节点是否具有与文件关联的策略所需的存储。如果候选节点没有存储类型,NameNode将查找其他节点。如果在第一条路径中找不到足够放置副本的节点,NameNode将在第二条路径中查找具有回退存储类型的节点。

此处描述的当前默认副本放置策略正在执行中。

?

?

?