
1. cat

$ hadoop fs -help cat

-cat [-ignoreCrc] <src> ... :

Fetch all files that match the file pattern <src> and display their content on

stdout.

Works like the Linux cat command: prints the contents of the specified file.

hadoop fs -cat /shuguo/zhaoyun.txt

2. ls

$ hadoop fs -help ls

-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [-e] [<path> ...] :

List the contents that match the specified file pattern. If path is not

specified, the contents of /user/<currentUser> will be listed. For a directory a

list of its direct children is returned (unless -d option is specified).

Directory entries are of the form:

permissions - userId groupId sizeOfDirectory(in bytes)

modificationDate(yyyy-MM-dd HH:mm) directoryName

and file entries are of the form:

permissions numberOfReplicas userId groupId sizeOfFile(in bytes)

modificationDate(yyyy-MM-dd HH:mm) fileName

-C Display the paths of files and directories only.

-d Directories are listed as plain files.

-h Formats the sizes of files in a human-readable fashion

rather than a number of bytes.

-q Print ? instead of non-printable characters.

-R Recursively list the contents of directories.

-t Sort files by modification time (most recent first).

-S Sort files by size.

-r Reverse the order of the sort.

-u Use time of last access instead of modification for

display and sorting.

-e Display the erasure coding policy of files and directories.

Similar to the Linux ls command. Lists the contents of the given path; if no path is given, the user's home directory /user/<currentUser> is listed.

$ hadoop fs -ls /

Found 3 items

drwxr-xr-x - pineapple supergroup 0 2021-08-18 15:33 /shuguo

drwx------ - pineapple supergroup 0 2021-08-12 18:46 /tmp

drwxr-xr-x - pineapple supergroup 0 2021-08-18 15:20 /weiguo

-C shows only the paths of files and directories (no owner, group, permissions, or size).

$ hadoop fs -ls -C /

/shuguo

/tmp

/weiguo

-d shows the entry for the directory itself rather than its contents.

$ hadoop fs -ls -d /

drwxr-xr-x - pineapple supergroup 0 2021-08-18 15:26 /

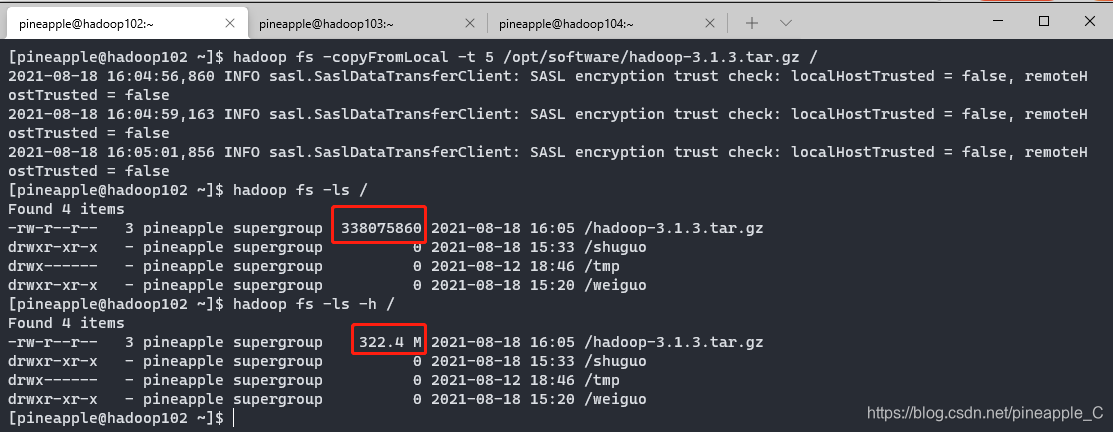

-h prints sizes in human-readable form. Small files show no difference, so first upload a large file and compare the two listings.

hadoop fs -copyFromLocal -t 5 /opt/software/hadoop-3.1.3.tar.gz /

hadoop fs -ls /

hadoop fs -ls -h /
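The conversion that -h performs can be approximated locally. The following is a minimal sketch, not Hadoop's implementation: `human_size` is a hypothetical helper that divides by 1024 until the value drops below 1024 and prints one decimal place. Under that assumption it reproduces the figures that appear in this article, such as 322.4 M for the 338075860-byte archive.

```shell
# Hypothetical helper: format a byte count the way the -h listings look
# (plain number below 1024, otherwise one decimal and a binary unit).
human_size() {
  awk -v n="$1" 'BEGIN {
    split("K M G T P E", unit, " ")
    if (n < 1024) { print n; exit }
    i = 0
    while (n >= 1024 && i < 6) { n /= 1024; i++ }
    printf "%.1f %s\n", n, unit[i]
  }'
}

human_size 338075860   # size of /hadoop-3.1.3.tar.gz in bytes
human_size 42          # small values are printed unchanged
```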

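-q prints ? in place of non-printable characters. The listing below looks the same as a normal listing because none of these paths contain non-printable characters (control characters, for example).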

$ hadoop fs -ls -q /

Found 4 items

-rw-r--r-- 3 pineapple supergroup 338075860 2021-08-18 16:05 /hadoop-3.1.3.tar.gz

drwxr-xr-x - pineapple supergroup 0 2021-08-18 15:33 /shuguo

drwx------ - pineapple supergroup 0 2021-08-12 18:46 /tmp

drwxr-xr-x - pineapple supergroup 0 2021-08-18 15:20 /weiguo

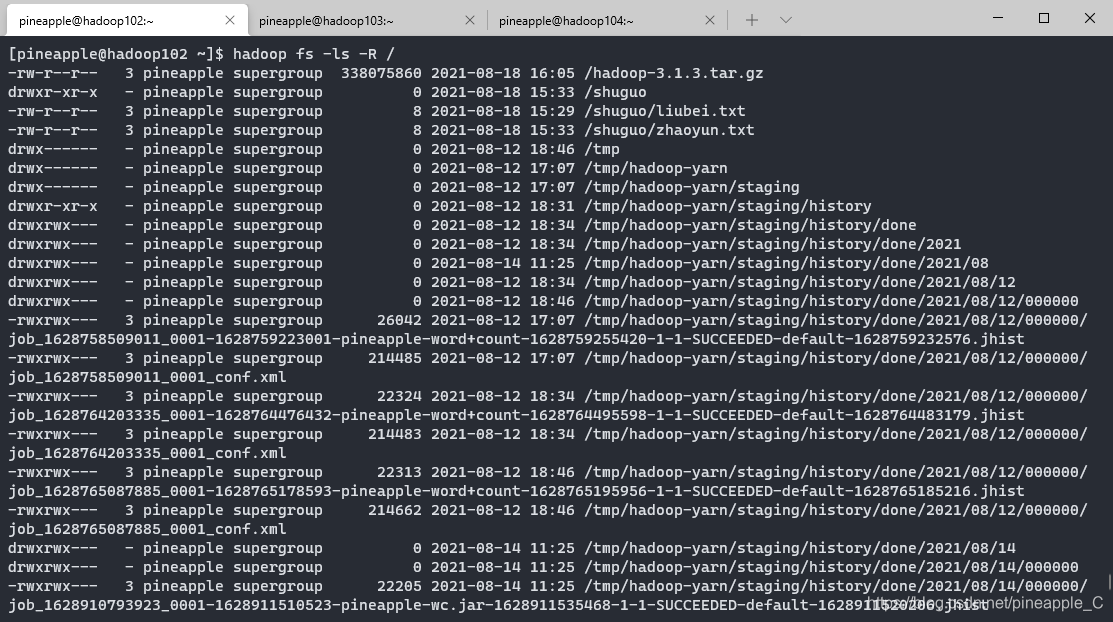
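What -q guards against is easier to see with a name that does contain a control character. The sketch below only imitates the effect locally with `tr`; `mask_nonprintable` is a hypothetical helper, it treats anything outside printable ASCII as non-printable, and Hadoop's own rule for what counts as printable may differ.

```shell
# Hypothetical helper: replace every byte that is not printable ASCII
# (or a newline) with '?', similar in spirit to `hadoop fs -ls -q`.
mask_nonprintable() {
  LC_ALL=C tr -c '[:print:]\n' '?'
}

# A made-up file name with a control character (octal 001) in the middle.
printf 'bad\001name.txt\n' | mask_nonprintable
```

Without the masking, such a name can garble the terminal or look identical to a different name, which is why ls offers the option.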

-R lists the contents of directories recursively.

hadoop fs -ls -R /

-t sorts files by modification time (most recent first).

$ hadoop fs -ls -t /weiguo

Found 5 items

-rw-r--r-- 3 pineapple supergroup 14 2021-08-18 15:20 /weiguo/zhangliao.txt

-rw-r--r-- 3 pineapple supergroup 8 2021-08-18 15:13 /weiguo/xuhuang.txt

-rw-r--r-- 1 pineapple supergroup 6 2021-08-18 15:02 /weiguo/xunyu.txt

-rwxrwxrwx 3 pineapple pineapple 7 2021-08-18 14:44 /weiguo/guojia.txt

-rw-r--r-- 3 pineapple supergroup 7 2021-08-18 14:38 /weiguo/caocao.txt

-S sorts files by size (largest first).

$ hadoop fs -ls -S /weiguo

Found 5 items

-rw-r--r-- 3 pineapple supergroup 14 2021-08-18 15:20 /weiguo/zhangliao.txt

-rw-r--r-- 3 pineapple supergroup 8 2021-08-18 15:13 /weiguo/xuhuang.txt

-rw-r--r-- 3 pineapple supergroup 7 2021-08-18 14:38 /weiguo/caocao.txt

-rwxrwxrwx 3 pineapple pineapple 7 2021-08-18 14:44 /weiguo/guojia.txt

-rw-r--r-- 1 pineapple supergroup 6 2021-08-18 15:02 /weiguo/xunyu.txt

-r reverses the sort order.

$ hadoop fs -ls -S -r /weiguo

Found 5 items

-rw-r--r-- 1 pineapple supergroup 6 2021-08-18 15:02 /weiguo/xunyu.txt

-rw-r--r-- 3 pineapple supergroup 7 2021-08-18 14:38 /weiguo/caocao.txt

-rwxrwxrwx 3 pineapple pineapple 7 2021-08-18 14:44 /weiguo/guojia.txt

-rw-r--r-- 3 pineapple supergroup 8 2021-08-18 15:13 /weiguo/xuhuang.txt

-rw-r--r-- 3 pineapple supergroup 14 2021-08-18 15:20 /weiguo/zhangliao.txt

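-u uses the time of last access instead of the modification time for display and sorting. Without -t the entries are not sorted by time; only the timestamp column changes.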

$ hadoop fs -ls -u /weiguo

Found 5 items

-rw-r--r-- 3 pineapple supergroup 7 2021-08-18 15:42 /weiguo/caocao.txt

-rwxrwxrwx 3 pineapple pineapple 7 2021-08-18 15:47 /weiguo/guojia.txt

-rw-r--r-- 3 pineapple supergroup 8 2021-08-18 15:13 /weiguo/xuhuang.txt

-rw-r--r-- 1 pineapple supergroup 6 2021-08-18 15:02 /weiguo/xunyu.txt

-rw-r--r-- 3 pineapple supergroup 14 2021-08-18 15:20 /weiguo/zhangliao.txt

-e displays the erasure coding policy of files and directories.

$ hadoop fs -ls -e /weiguo

Found 5 items

-rw-r--r-- 3 pineapple supergroup Replicated 7 2021-08-18 14:38 /weiguo/caocao.txt

-rwxrwxrwx 3 pineapple pineapple Replicated 7 2021-08-18 14:44 /weiguo/guojia.txt

-rw-r--r-- 3 pineapple supergroup Replicated 8 2021-08-18 15:13 /weiguo/xuhuang.txt

-rw-r--r-- 1 pineapple supergroup Replicated 6 2021-08-18 15:02 /weiguo/xunyu.txt

-rw-r--r-- 3 pineapple supergroup Replicated 14 2021-08-18 15:20 /weiguo/zhangliao.txt

3. checksum

$ hadoop fs -help checksum

-checksum <src> ... :

Dump checksum information for files that match the file pattern <src> to stdout.

Note that this requires a round-trip to a datanode storing each block of the

file, and thus is not efficient to run on a large number of files. The checksum

of a file depends on its content, block size and the checksum algorithm and

parameters used for creating the file.

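Writes the checksum information of the files matching <src> to stdout. Note that this requires a round trip to a datanode storing each block of the file, so it is inefficient to run on a large number of files. A file's checksum depends on its content, its block size, and the checksum algorithm and parameters used when the file was created.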

$ hadoop fs -checksum /weiguo/caocao.txt

/weiguo/caocao.txt MD5-of-0MD5-of-512CRC32C 000002000000000000000000d55806fa6437e98198d3c198f4375c6d

$ hadoop fs -checksum /weiguo/caocao.txt >> caocao

$ cat caocao

/weiguo/caocao.txt MD5-of-0MD5-of-512CRC32C 000002000000000000000000d55806fa6437e98198d3c198f4375c6d

4. count

$ hadoop fs -help count

-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] [-e] <path> ... :

Count the number of directories, files and bytes under the paths

that match the specified file pattern. The output columns are:

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

or, with the -q option:

QUOTA REM_QUOTA SPACE_QUOTA REM_SPACE_QUOTA

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

The -h option shows file sizes in human readable format.

The -v option displays a header line.

The -x option excludes snapshots from being calculated.

The -t option displays quota by storage types.

It should be used with -q or -u option, otherwise it will be ignored.

If a comma-separated list of storage types is given after the -t option,

it displays the quota and usage for the specified types.

Otherwise, it displays the quota and usage for all the storage

types that support quota. The list of possible storage types(case insensitive):

ram_disk, ssd, disk and archive.

It can also pass the value '', 'all' or 'ALL' to specify all the storage types.

The -u option shows the quota and

the usage against the quota without the detailed content summary.The -e option

shows the erasure coding policy.

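Counts the directories, files, and bytes under the given paths. The output columns are DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME, that is: directory count, file count, content size in bytes, and path.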

$ hadoop fs -count /weiguo

1 5 42 /weiguo
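Since the plain output has no header, it can help to label the columns when reading it in a script. This is a minimal sketch with a hypothetical helper name, `label_count`; it assumes the four-column form shown above, so it deliberately ignores lines with a different field count (for example -h output, where a size such as 323.6 M splits into two fields, or paths containing spaces).

```shell
# Hypothetical helper: label the four columns of plain `hadoop fs -count` output.
label_count() {
  awk 'NF == 4 { printf "dirs=%s files=%s bytes=%s path=%s\n", $1, $2, $3, $4 }'
}

# The line printed by `hadoop fs -count /weiguo` above.
printf '1 5 42 /weiguo\n' | label_count
```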

With -q, quota information is shown as well. The columns become:

QUOTA REM_QUOTA SPACE_QUOTA REM_SPACE_QUOTA DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

$ hadoop fs -count -q /weiguo

none inf none inf 1 5 42 /weiguo

-h shows sizes in human-readable units such as K and M.

$ hadoop fs -count -h /

23 21 323.6 M /

-v prints a header line with the column names.

$ hadoop fs -count -h -v /

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

23 21 323.6 M /

-x excludes snapshots from the count. There are no snapshots yet, so the result is unchanged.

$ hadoop fs -count -h -v -x /

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

23 21 323.6 M /

-u shows the quota and the usage against the quota, without the detailed content summary.

$ hadoop fs -count -h -v -u /

QUOTA REM_QUOTA SPACE_QUOTA REM_SPACE_QUOTA PATHNAME

8.0 E 8.0 E none inf /

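-t shows the quota per storage type. It must be combined with -q or -u, otherwise it is ignored. A comma-separated list of storage types may follow -t; the possible types are ram_disk, ssd, disk, and archive.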

$ hadoop fs -count -h -v -q -t ram_disk /

RAM_DISK_QUOTA REM_RAM_DISK_QUOTA PATHNAME

none inf /

$ hadoop fs -count -h -v -q -t ssd /

SSD_QUOTA REM_SSD_QUOTA PATHNAME

none inf /

-e shows the erasure coding policy.

$ hadoop fs -count -h -v -e /

DIR_COUNT FILE_COUNT CONTENT_SIZE ERASURECODING_POLICY PATHNAME

23 21 323.6 M EC: /

5. df

$ hadoop fs -help df

-df [-h] [<path> ...] :

Shows the capacity, free and used space of the filesystem. If the filesystem has

multiple partitions, and no path to a particular partition is specified, then

the status of the root partitions will be shown.

-h Formats the sizes of files in a human-readable fashion rather than a number

of bytes.

Similar to the Linux df command: shows the capacity, used space, and free space of the filesystem.

$ hadoop fs -df

Filesystem Size Used Available Use%

hdfs://hadoop102:8020 151298519040 1631666176 140081348608 1%

-h prints the sizes in human-readable form.

$ hadoop fs -df -h

Filesystem Size Used Available Use%

hdfs://hadoop102:8020 140.9 G 1.5 G 130.5 G 1%
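The Use% column is rounded to a whole percent. To see the value with more precision, it can be recomputed from the Size and Used columns of the byte-level output. This is a small sketch with a hypothetical helper name, `use_percent`, and it assumes Use% is simply Used divided by Size.

```shell
# Hypothetical helper: percentage of capacity in use, from the Size and Used
# columns of `hadoop fs -df` (both in bytes).
use_percent() {
  awk -v size="$1" -v used="$2" 'BEGIN {
    if (size <= 0) { print "n/a"; exit }
    printf "%.2f%%\n", used * 100 / size
  }'
}

# Size and Used taken from the `hadoop fs -df` output above.
use_percent 151298519040 1631666176
```

Note that in the output above Available is smaller than Size minus Used, presumably because other data on the same disks also takes up space.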

6. du

$ hadoop fs -help du

-du [-s] [-h] [-v] [-x] <path> ... :

Show the amount of space, in bytes, used by the files that match the specified

file pattern. The following flags are optional:

-s Rather than showing the size of each individual file that matches the

pattern, shows the total (summary) size.

-h Formats the sizes of files in a human-readable fashion rather than a number

of bytes.

-v option displays a header line.

-x Excludes snapshots from being counted.

Note that, even without the -s option, this only shows size summaries one level

deep into a directory.

The output is in the form

size disk space consumed name(full path)

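Similar to the Linux du command: shows the space used by the given path. Each output line has the form: size, disk space consumed, name (full path). Because files on HDFS are stored with 3 replicas by default, the disk space consumed is usually 3 times the file size.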

$ hadoop fs -du /

338075860 1014227580 /hadoop-3.1.3.tar.gz

16 48 /shuguo

1209412 3628236 /tmp

42 114 /weiguo
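The /weiguo line shows 114 rather than 3 x 42 = 126. The reason is visible in the earlier ls listing: xunyu.txt (6 bytes) has a replication factor of 1, while the other four files have 3. The sketch below recomputes the consumed space from ls output as the sum of size times replication; `disk_consumed` is a hypothetical helper, and it assumes the space-separated column layout shown in this article.

```shell
# Hypothetical helper: sum size * replication over the file entries of
# `hadoop fs -ls` output. Field 2 is the replication factor and field 5 the
# size in bytes; directories and the "Found N items" line are skipped.
disk_consumed() {
  awk '$1 ~ /^-/ { total += $2 * $5 } END { print total + 0 }'
}

# The /weiguo listing from the ls section.
disk_consumed <<'EOF'
Found 5 items
-rw-r--r-- 3 pineapple supergroup 14 2021-08-18 15:20 /weiguo/zhangliao.txt
-rw-r--r-- 3 pineapple supergroup 8 2021-08-18 15:13 /weiguo/xuhuang.txt
-rw-r--r-- 1 pineapple supergroup 6 2021-08-18 15:02 /weiguo/xunyu.txt
-rwxrwxrwx 3 pineapple pineapple 7 2021-08-18 14:44 /weiguo/guojia.txt
-rw-r--r-- 3 pineapple supergroup 7 2021-08-18 14:38 /weiguo/caocao.txt
EOF
```

This prints 114 (14x3 + 8x3 + 6x1 + 7x3 + 7x3), matching the second column of the du output.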

-s shows the total (summary) size of the given path.

$ hadoop fs -du -s /

339285330 1017855978 /

-h shows sizes in human-readable units such as K and M.

$ hadoop fs -du -h /

322.4 M 967.2 M /hadoop-3.1.3.tar.gz

16 48 /shuguo

1.2 M 3.5 M /tmp

42 114 /weiguo

-x excludes snapshots. There are no snapshots yet, so the output is unchanged.

$ hadoop fs -du -h -x /

322.4 M 967.2 M /hadoop-3.1.3.tar.gz

16 48 /shuguo

1.2 M 3.5 M /tmp

42 114 /weiguo

7. find

$ hadoop fs -help find

-find <path> ... <expression> ... :

Finds all files that match the specified expression and

applies selected actions to them. If no <path> is specified

then defaults to the current working directory. If no

expression is specified then defaults to -print.

The following primary expressions are recognised:

-name pattern

-iname pattern

Evaluates as true if the basename of the file matches the

pattern using standard file system globbing.

If -iname is used then the match is case insensitive.

-print

-print0

Always evaluates to true. Causes the current pathname to be

written to standard output followed by a newline. If the -print0

expression is used then an ASCII NULL character is appended rather

than a newline.

The following operators are recognised:

expression -a expression

expression -and expression

expression expression

Logical AND operator for joining two expressions. Returns

true if both child expressions return true. Implied by the

juxtaposition of two expressions and so does not need to be

explicitly specified. The second expression will not be

applied if the first fails.

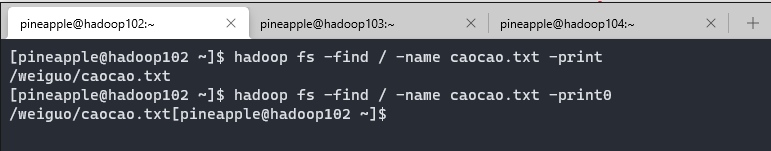

Similar to the Linux find command: locates files.

$ hadoop fs -find / -name caocao.txt

/weiguo/caocao.txt

-print0 terminates each path with an ASCII NUL character instead of a newline.
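NUL-terminated output is meant for tools that accept NUL as a separator, so that paths containing spaces stay intact. The sketch below simulates such output with printf and splits it with `xargs -0` (available in GNU and BSD xargs); `each_path` is a hypothetical helper and the second path is made up.

```shell
# Hypothetical helper: print each NUL-terminated path from stdin in brackets,
# one per line, to show that a path with a space stays a single item.
each_path() {
  xargs -0 -n 1 printf '[%s]\n'
}

# Simulated -print0 output with two paths.
printf '/weiguo/caocao.txt\0/weiguo/cao cao.txt\0' | each_path

# On a live cluster the same pattern would look like:
# hadoop fs -find /weiguo -name '*.txt' -print0 | each_path
```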

8. head

$ hadoop fs -help head

-head <file> :

Show the first 1KB of the file.

Similar to the Linux head command, but more limited: it only shows the first 1 KB of the file.

hadoop fs -head /weiguo/caocao.txt

9. tail

$ hadoop fs -help tail

-tail [-f] [-s <sleep interval>] <file> :

Show the last 1KB of the file.

-f Shows appended data as the file grows.

-s With -f , defines the sleep interval between iterations in milliseconds.

Similar to the Linux tail command, but it only shows the last 1 KB of the file.

hadoop fs -tail /weiguo/caocao.txt

10. stat

$ hadoop fs -help stat

-stat [format] <path> ... :

Print statistics about the file/directory at <path>

in the specified format. Format accepts permissions in

octal (%a) and symbolic (%A), filesize in

bytes (%b), type (%F), group name of owner (%g),

name (%n), block size (%o), replication (%r), user name

of owner (%u), access date (%x, %X).

modification date (%y, %Y).

%x and %y show UTC date as "yyyy-MM-dd HH:mm:ss" and

%X and %Y show milliseconds since January 1, 1970 UTC.

If the format is not specified, %y is used by default.

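Similar to the Linux stat command: prints statistics about a file or directory. Note that %x and %y are printed in UTC, which is why the mtime below (06:38:13) differs from the 14:38 that -ls shows for the same file; -ls here evidently prints local time, 8 hours ahead of UTC.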

hadoop fs -stat "type:%F perm:%a %u:%g size:%b mtime:%y atime:%x name:%n" /weiguo/caocao.txt

type:regular file perm:644 pineapple:supergroup size:7 mtime:2021-08-18 06:38:13 atime:2021-08-18 09:08:59 name:caocao.txt
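The %a format prints permissions in octal (644 above), while %A and -ls print them symbolically (rw-r--r--). The mapping between the two is mechanical: each octal digit stands for one rwx triple. The sketch below shows it with a hypothetical helper, `octal_to_symbolic`; it handles plain three-digit modes only and ignores special bits such as the sticky bit.

```shell
# Hypothetical helper: convert an octal mode such as 644 into the symbolic
# form shown by `hadoop fs -ls` (without the leading file-type character).
octal_to_symbolic() {
  awk -v mode="$1" 'BEGIN {
    # bits[d + 1] is the rwx triple for octal digit d (0..7).
    split("--- --x -w- -wx r-- r-x rw- rwx", bits, " ")
    out = ""
    for (i = 1; i <= length(mode); i++) {
      out = out bits[substr(mode, i, 1) + 1]
    }
    print out
  }'
}

octal_to_symbolic 644   # perm of /weiguo/caocao.txt reported by -stat
```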

11. text

$ hadoop fs -help text

-text [-ignoreCrc] <src> ... :

Takes a source file and outputs the file in text format.

The allowed formats are zip and TextRecordInputStream and Avro.

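Takes a source file and prints it in text format. For a plain text file such as this one the output is the same as with -cat; the difference only appears for the formats listed in the help text (zip, TextRecordInputStream, Avro), which -text decodes before printing.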

hadoop fs -text /weiguo/caocao.txt

12. getfacl

$ hadoop fs -help getfacl

-getfacl [-R] <path> :

Displays the Access Control Lists (ACLs) of files and directories. If a

directory has a default ACL, then getfacl also displays the default ACL.

-R List the ACLs of all files and directories recursively.

<path> File or directory to list.

Similar to the Linux getfacl command: displays the access control lists (ACLs) of files and directories.

$ hadoop fs -getfacl /weiguo

# file: /weiguo

# owner: pineapple

# group: supergroup

user::rwx

group::r-x

other::r-x

-R lists the ACLs recursively.

$ hadoop fs -getfacl -R /shuguo

# file: /shuguo

# owner: pineapple

# group: supergroup

user::rwx

group::r-x

other::r-x

# file: /shuguo/liubei.txt

# owner: pineapple

# group: supergroup

user::rw-

group::r--

other::r--

# file: /shuguo/zhaoyun.txt

# owner: pineapple

# group: supergroup

user::rw-

group::r--

other::r--
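Recursive output gets long quickly, so it can be handy to condense each block into a single line. This is a minimal sketch under the assumption that the output has the shape shown above (a "# file:" line, further comment lines, then the ACL entries); `acl_summary` is a hypothetical helper name.

```shell
# Hypothetical helper: condense `hadoop fs -getfacl -R` output to one line
# per path, in the form: <path> <entry>,<entry>,...
acl_summary() {
  awk '
    /^# file: / {
      if (path != "") print path, perms
      path = substr($0, 9)   # text after the "# file: " prefix
      perms = ""
      next
    }
    /^#/ || /^$/ { next }    # skip owner/group comments and blank lines
    { perms = (perms == "" ? $0 : perms "," $0) }
    END { if (path != "") print path, perms }
  '
}

# Usage on a live cluster:
# hadoop fs -getfacl -R /shuguo | acl_summary
```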

13. getfattr

$ hadoop fs -help getfattr

-getfattr [-R] {-n name | -d} [-e en] <path> :

Displays the extended attribute names and values (if any) for a file or

directory.

-R Recursively list the attributes for all files and directories.

-n name Dump the named extended attribute value.

-d Dump all extended attribute values associated with pathname.

-e <encoding> Encode values after retrieving them.Valid encodings are "text",

"hex", and "base64". Values encoded as text strings are enclosed

in double quotes ("), and values encoded as hexadecimal and

base64 are prefixed with 0x and 0s, respectively.

<path> The file or directory.

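Displays the extended attribute names and values of a file or directory. Either -n name or -d must be specified.

-d dumps all extended attribute values associated with the path.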

$ hadoop fs -getfattr -d /weiguo

# file: /weiguo

No extended attributes exist by default, so set some first with the setfattr command.

$ hadoop fs -setfattr -n user.myAttr -v myValue /weiguo

$ hadoop fs -setfattr -n user.myTest -v myResult /weiguo

$ hadoop fs -getfattr -d /weiguo

# file: /weiguo

user.myAttr="myValue"

user.myTest="myResult"

-n name shows the named extended attribute.

$ hadoop fs -getfattr -n user.myAttr /weiguo

# file: /weiguo

user.myAttr="myValue"

-R lists the attributes recursively.

$ hadoop fs -getfattr -R -d /weiguo

# file: /weiguo

user.myAttr="myValue"

user.myTest="myResult"

# file: /weiguo/caocao.txt

# file: /weiguo/guojia.txt

# file: /weiguo/xuhuang.txt

# file: /weiguo/xunyu.txt

# file: /weiguo/zhangliao.txt

-e <encoding> encodes the values after retrieving them. The supported encodings are text, hex, and base64; text is the default.

hadoop fs -getfattr -d -e text /weiguo

hadoop fs -getfattr -d -e hex /weiguo

hadoop fs -getfattr -d -e base64 /weiguo
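According to the help text, text values are enclosed in double quotes, while hex and base64 values are prefixed with 0x and 0s. The sketch below reproduces the three forms locally for the value myValue, using the standard `od` and `base64` tools. `encode_xattr` is a hypothetical helper, not part of Hadoop, and the cluster's actual output may differ in details.

```shell
# Hypothetical helper: show how a value would look in each -e encoding,
# following the prefixes described in the getfattr help text.
encode_xattr() {
  case "$1" in
    text)   printf '"%s"\n' "$2" ;;
    hex)    printf '0x%s\n' "$(printf '%s' "$2" | od -An -tx1 | tr -d ' \n')" ;;
    base64) printf '0s%s\n' "$(printf '%s' "$2" | base64 | tr -d '\n')" ;;
    *)      echo "unknown encoding: $1" >&2; return 1 ;;
  esac
}

encode_xattr text myValue
encode_xattr hex myValue
encode_xattr base64 myValue
```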