1. Overview

1. JdbcCatalog lets users connect Flink to relational databases over the JDBC protocol.

2. At the time of writing, PostgresCatalog is the only JDBC catalog implementation.
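A JDBC catalog can also be registered from SQL. As a minimal sketch, assuming the `CREATE CATALOG ... WITH ('type' = 'jdbc', ...)` form and the option keys `default-database`, `username`, `password` and `base-url` described in the Flink JDBC connector documentation, the helper below only assembles that statement as a string. The class `JdbcCatalogDdl` and all connection values are hypothetical placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: builds a Flink SQL CREATE CATALOG statement for a JDBC (Postgres) catalog.
final class JdbcCatalogDdl {

    // Wraps a value in single quotes, doubling embedded quotes as SQL string literals require.
    static String quote(String value) {
        return "'" + value.replace("'", "''") + "'";
    }

    // catalogName is emitted unquoted, so it should be a plain identifier.
    static String build(String catalogName, String defaultDatabase,
                        String username, String password, String baseUrl) {
        // Assumed option keys for the JDBC catalog; LinkedHashMap keeps them in this order.
        Map<String, String> options = new LinkedHashMap<>();
        options.put("type", "jdbc");
        options.put("default-database", defaultDatabase);
        options.put("username", username);
        options.put("password", password);
        options.put("base-url", baseUrl); // JDBC URL without the database name
        StringBuilder ddl = new StringBuilder("CREATE CATALOG ")
                .append(catalogName).append(" WITH (");
        String separator = "";
        for (Map.Entry<String, String> option : options.entrySet()) {
            ddl.append(separator)
               .append(quote(option.getKey())).append(" = ").append(quote(option.getValue()));
            separator = ", ";
        }
        return ddl.append(")").toString();
    }

    public static void main(String[] args) {
        // Placeholder values, not real credentials.
        System.out.println(build("my_pg", "postgres", "postgres", "secret",
                "jdbc:postgresql://localhost:5432"));
    }
}
```

In a job, the resulting string would be passed to `tableEnv.executeSql(...)` and the catalog activated with `tableEnv.useCatalog(...)`, with `flink-connector-jdbc` and the PostgreSQL JDBC driver assumed to be on the classpath.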

2. Required dependencies

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-hive_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
</dependency>

<!-- Hive Dependency -->
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>3.1.2</version>
</dependency>
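A missing dependency usually shows up only at runtime as a `ClassNotFoundException` or `NoClassDefFoundError`. As a minimal sketch, the standard `Class.forName` call can confirm before submitting the job that classes from the two artifacts above are visible. The class `DependencyCheck` is hypothetical; `org.apache.flink.table.catalog.hive.HiveCatalog` comes from `flink-connector-hive`, and `org.apache.hadoop.hive.ql.Driver` is assumed here as a representative class from `hive-exec`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reports which required classes cannot be loaded.
final class DependencyCheck {

    // True if the class can be loaded by this class's loader; static initializers are not run.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, DependencyCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    // Returns the names that could not be loaded, in the order given.
    static List<String> missing(String... classNames) {
        List<String> result = new ArrayList<>();
        for (String name : classNames) {
            if (!isOnClasspath(name)) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> absent = missing(
                "org.apache.flink.table.catalog.hive.HiveCatalog", // flink-connector-hive
                "org.apache.hadoop.hive.ql.Driver");               // hive-exec (assumed)
        System.out.println(absent.isEmpty() ? "All Hive classes found" : "Missing: " + absent);
    }
}
```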

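You also need a hive-site.xml file: put it in the directory passed to HiveCatalog as hiveConfDir (wudl-flink-12/input in the code below).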
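The third argument of the `HiveCatalog` constructor in the implementation is the directory that holds `hive-site.xml`. A minimal sketch that fails fast with a clear message when that file is absent, before the catalog is created; the class `HiveConfDirCheck` is hypothetical and only uses `java.nio.file`.

```java
import java.io.FileNotFoundException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: validates the hiveConfDir argument before building a HiveCatalog.
final class HiveConfDirCheck {

    // Returns the path of hive-site.xml inside hiveConfDir, or throws if it is not a readable file.
    static Path requireHiveSite(String hiveConfDir) throws FileNotFoundException {
        Path hiveSite = Paths.get(hiveConfDir, "hive-site.xml");
        if (!Files.isRegularFile(hiveSite) || !Files.isReadable(hiveSite)) {
            throw new FileNotFoundException(
                    "hive-site.xml not found in " + Paths.get(hiveConfDir).toAbsolutePath());
        }
        return hiveSite;
    }

    public static void main(String[] args) throws FileNotFoundException {
        // Same relative directory as in the example; resolved against the working directory.
        System.out.println("Using " + requireHiveSite("wudl-flink-12/input"));
    }
}
```

Calling `requireHiveSite(...)` just before `new HiveCatalog(...)` turns a misconfigured path into an immediate, readable error.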

3. Implementation

package com.wudl.flink.sql;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.TableResult;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.table.catalog.hive.HiveCatalog;

/**
 * @ClassName : Flink_Sql_HiveCatalog
 * @Description : Accessing Hive tables from Flink SQL through HiveCatalog
 * @Author :wudl
 * @Date: 2021-08-20 21:50
 */

public class Flink_Sql_HiveCatalog {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        // 1. Create the HiveCatalog: catalog name, default database, directory containing hive-site.xml
        HiveCatalog hiveCatalog = new HiveCatalog("myHive", "db_wudl", "wudl-flink-12/input");

        // 2. Register the HiveCatalog with the table environment
        tableEnv.registerCatalog("myHive", hiveCatalog);

        // 3. Make the HiveCatalog the current catalog
        tableEnv.useCatalog("myHive");

        // tableEnv.executeSql("select * from dept_copy").print();

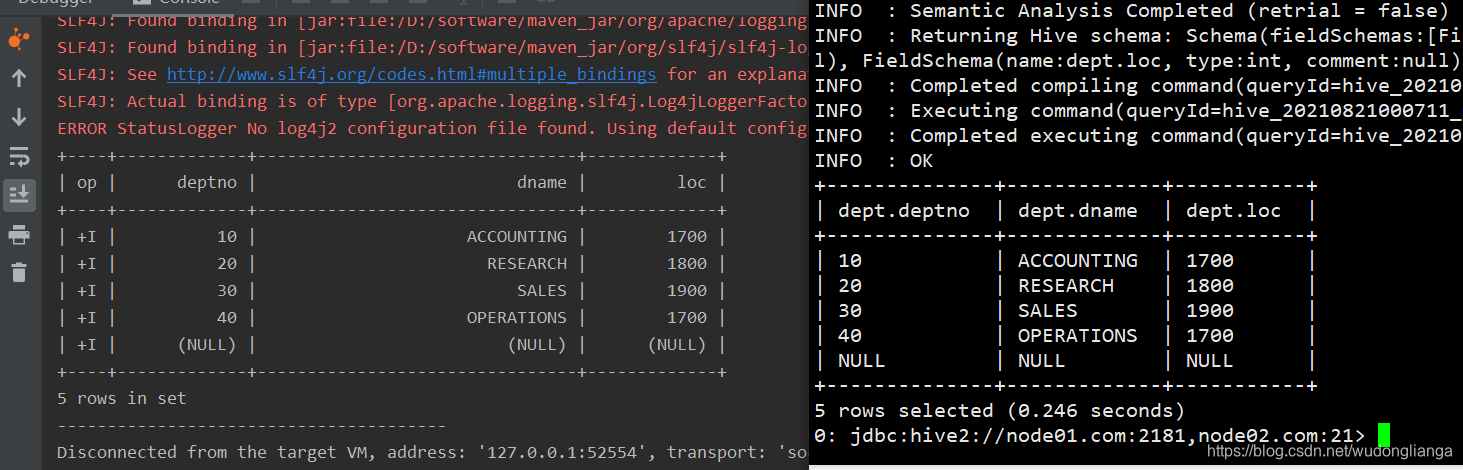

        tableEnv.executeSql("select * from db_wudl.dept").print();
        System.out.println("---------------------------------------");

        // getJobStatus() returns a CompletableFuture, so call get() to print the actual status
        // TableResult tableResult = tableEnv.executeSql("select * from db_wudl.dept");
        // System.out.println(tableResult.getJobClient().get().getJobStatus().get());

        // Hive-syntax DDL such as ROW FORMAT DELIMITED must be run with the Hive dialect
        // tableEnv.getConfig().setSqlDialect(org.apache.flink.table.api.SqlDialect.HIVE);
        // tableEnv.executeSql("CREATE TABLE IF NOT EXISTS db_wudl.dept_copy (deptno INT, dname STRING, loc INT) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'");
        // tableEnv.executeSql("insert into dept_copy select deptno, dname, loc from dept");
    }
}
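After `useCatalog("myHive")`, table names in SQL are resolved against the current catalog and that catalog's default database (`db_wudl` in the `HiveCatalog` constructor), which is why both `dept_copy` and `db_wudl.dept` refer to Hive tables. The sketch below models that rule in plain Java: a one-part name gets the current catalog and database, a two-part name gets the current catalog, and a three-part name is used as-is. The class `TablePathResolver` is hypothetical, ignores backtick quoting, and is not Flink's own resolver.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical model of how Flink SQL expands partial table identifiers.
final class TablePathResolver {

    private final String currentCatalog;
    private final String currentDatabase;

    TablePathResolver(String currentCatalog, String currentDatabase) {
        this.currentCatalog = currentCatalog;
        this.currentDatabase = currentDatabase;
    }

    // Expands "table", "db.table" or "catalog.db.table" to a full [catalog, db, table] path.
    List<String> resolve(String identifier) {
        String[] parts = identifier.split("\\.", -1);
        for (String part : parts) {
            if (part.isEmpty()) {
                throw new IllegalArgumentException("Empty name part in: " + identifier);
            }
        }
        switch (parts.length) {
            case 1:
                return Arrays.asList(currentCatalog, currentDatabase, parts[0]);
            case 2:
                return Arrays.asList(currentCatalog, parts[0], parts[1]);
            case 3:
                return Arrays.asList(parts);
            default:
                throw new IllegalArgumentException("Unsupported identifier: " + identifier);
        }
    }

    public static void main(String[] args) {
        // Mirrors the example: current catalog "myHive" with default database "db_wudl".
        TablePathResolver resolver = new TablePathResolver("myHive", "db_wudl");
        System.out.println(resolver.resolve("dept_copy"));    // [myHive, db_wudl, dept_copy]
        System.out.println(resolver.resolve("db_wudl.dept")); // [myHive, db_wudl, dept]
    }
}
```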