ETL

ETL is the process of moving data from a source to a destination through extraction (Extract), transformation (Transform), and loading (Load).

Data cleaning usually needs only a Mapper; no Reduce phase has to run.

Example

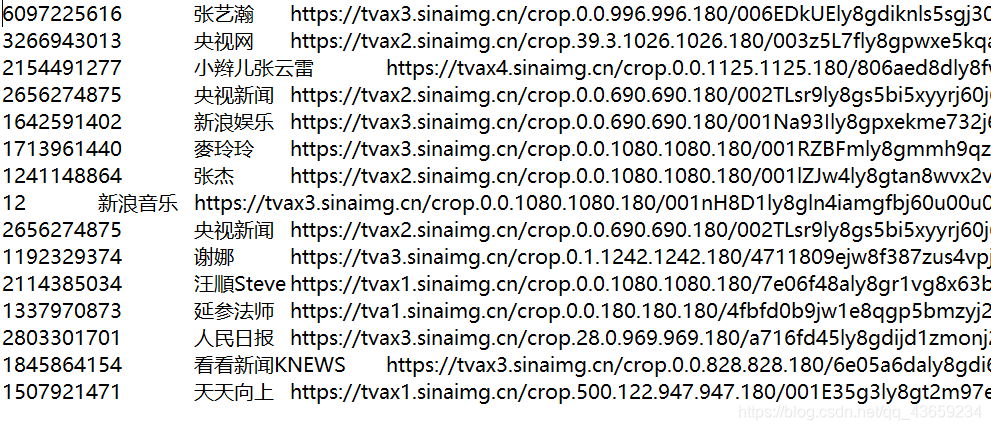

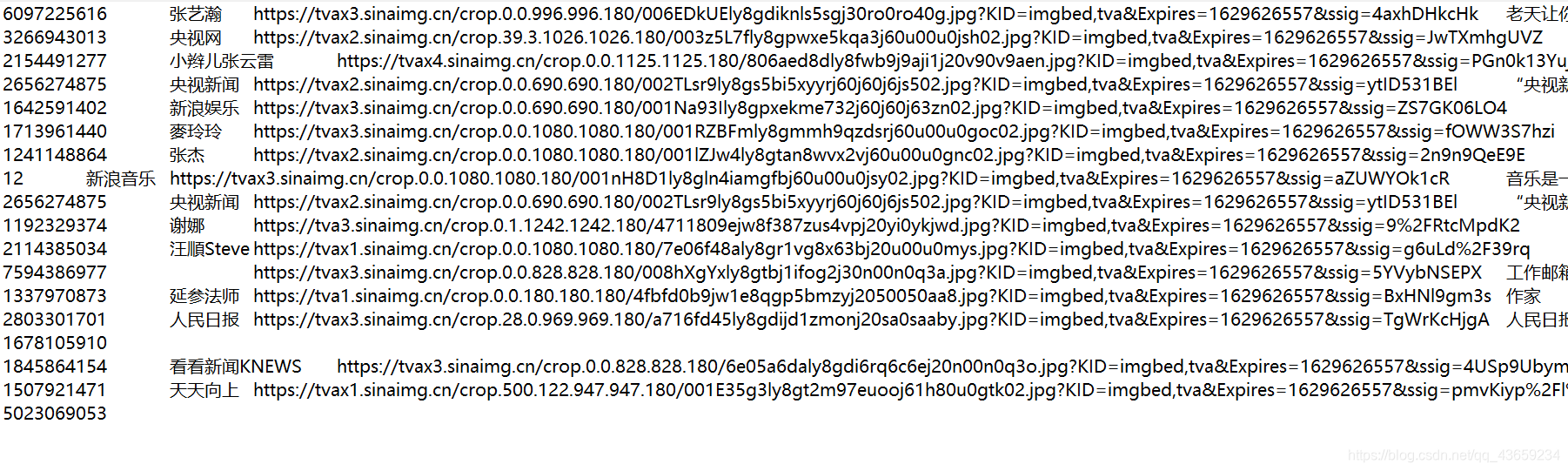

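Goal: remove the rows that contain empty fields. The Mapper in this example approximates this by keeping only lines that split into more than 5 tab-separated fields.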

Write the ETLMapper class

    package ETL;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /**
     * @author 公羽
     * date: 2021/8/22
     * desc: map-only cleaning step; keeps lines with more than 5 tab-separated fields
     */
    public class ETLMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Read the current line
            String line = value.toString();

            // Apply the cleaning rule; skip lines that fail it
            boolean result = parselog(line, context);
            if (!result) {
                return;
            }

            // Emit the original line unchanged; the value carries no information
            context.write(value, NullWritable.get());
        }

        private boolean parselog(String line, Context context) {
            // Split the line into fields on tabs.
            // Note: String.split drops trailing empty strings, so a line whose
            // last fields are empty yields fewer elements.
            String[] split = line.split("\t");

            // Keep the line only if it has more than 5 fields
            return split.length > 5;
        }
    }
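The field-count check in `parselog` depends on how `String.split` treats empty fields, so it helps to see that behavior in isolation. The following is a minimal plain-Java sketch with no Hadoop dependency. The class name `FieldCheckSketch`, the helper `hasEmptyField`, and the sample lines are all hypothetical and are not part of the original job; `moreThanFiveFields` mirrors the rule used in `ETLMapper`.

```java
public class FieldCheckSketch {

    // Same rule as ETLMapper.parselog: keep the line if it splits into more than 5 fields.
    static boolean moreThanFiveFields(String line) {
        return line.split("\t").length > 5;
    }

    // Hypothetical stricter rule: true if any tab-separated field is empty or blank.
    static boolean hasEmptyField(String line) {
        // A negative limit makes split keep trailing empty strings.
        for (String field : line.split("\t", -1)) {
            if (field.trim().isEmpty()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Made-up sample lines, each intended to have 6 fields.
        String full = "a\tb\tc\td\te\tf";
        String trailingEmpty = "a\tb\tc\td\te\t";
        String middleEmpty = "a\t\tc\td\te\tf";

        // Trailing empty field: split drops it, so the count rule rejects the line.
        System.out.println(moreThanFiveFields(trailingEmpty)); // false

        // Empty field in the middle: it is still counted, so the count rule keeps the line.
        System.out.println(moreThanFiveFields(middleEmpty));   // true

        // The stricter rule flags both lines with an empty field and accepts the full one.
        System.out.println(hasEmptyField(trailingEmpty));      // true
        System.out.println(hasEmptyField(middleEmpty));        // true
        System.out.println(hasEmptyField(full));               // false
    }
}
```

The count-based rule therefore catches empty fields only at the end of a line. If empty fields can also occur in the middle of a line, a check along the lines of `hasEmptyField` may be closer to the stated goal; whether that matters depends on the actual input data, which is not shown here.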

Write the ETLDriver class

    package ETL;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    /**
     * @author 公羽
     * date: 2021/8/22
     * desc: driver for the map-only ETL cleaning job
     */
    public class ETLDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            // 1. Get the configuration and create the job
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 2. Set the jar by the driver class
            job.setJarByClass(ETLDriver.class);

            // 3. Set the Mapper class (this job has no Reducer)
            job.setMapperClass(ETLMapper.class);

            // 4. Set the map output key/value types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(NullWritable.class);

            // 5. Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);

            // 6. Set the number of reduce tasks to 0 (map-only job)
            job.setNumReduceTasks(0);

            // 7. Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path("E:\\data.log"));
            FileOutputFormat.setOutputPath(job, new Path("E:\\outdata_log"));

            // 8. Submit the job and wait for it to finish
            boolean b = job.waitForCompletion(true);
            System.exit(b ? 0 : 1);
        }
    }

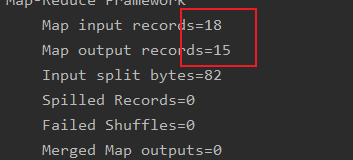

Three rows were removed by the cleaning step.