os: centos 7.6.1810

db: hbase 2.3.x

hadoop 2.10.x

zookeeper 3.6

jdk 1.8

HBase runs on top of Hadoop, and Hadoop in turn runs on the JVM.

So the versions of HBase, Hadoop, and the JDK have to be chosen with compatibility in mind:

https://hbase.apache.org/book.html#java

https://hbase.apache.org/book.html#hadoop

Node plan (lab environment)

192.168.56.221 hb1

192.168.56.222 hb2

192.168.56.223 hb3
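
The host names hb1, hb2, and hb3 are used throughout the configuration below, so every node has to resolve them. A minimal sketch, assuming name resolution is done through /etc/hosts (the function names `node_plan` and `add_hosts` are made up for illustration):

```shell
#!/bin/sh
# Node plan from the list above, one "IP hostname" pair per line.
node_plan() {
  printf '%s\n' \
    '192.168.56.221 hb1' \
    '192.168.56.222 hb2' \
    '192.168.56.223 hb3'
}

# Append each pair to the given hosts file unless the host name is already
# present, so the function can safely be run more than once.
add_hosts() {
  hosts_file=$1
  node_plan | while read -r ip name; do
    if ! grep -qw "$name" "$hosts_file" 2>/dev/null; then
      echo "$ip $name" >> "$hosts_file"
    fi
  done
}

# Usage (as root, on every node): add_hosts /etc/hosts
```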

Versions

# cat /etc/centos-release

CentOS Linux release 7.6.1810 (Core)

hadoop 2.10.x

Create the user on all nodes, keeping the uid and gid identical

# groupadd -g 600 hadoop;

useradd -u 600 -g hadoop -G hadoop hadoop;

# passwd hadoop

On every node, as the hadoop user, set up SSH trust to the other nodes

# su - hadoop

$ ssh-keygen -t rsa

$ cd /home/hadoop/.ssh/

$ ls -l

total 8

-rw------- 1 hadoop hadoop 1675 Aug 19 15:21 id_rsa

-rw-r--r-- 1 hadoop hadoop 392 Aug 19 15:21 id_rsa.pub

$ touch authorized_keys;

chmod 600 authorized_keys;

$ ssh-copy-id hadoop@hb1;

$ ssh-copy-id hadoop@hb2;

$ ssh-copy-id hadoop@hb3;

$ chmod 700 ../.ssh/

# vi /etc/ssh/sshd_config

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

# service sshd restart
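
Before moving on it is worth confirming that key-based login works from each node to all three hosts, since the start scripts and sshfence depend on it. A rough sketch, run as the hadoop user; the `SSH` variable and the function name `check_trust` are assumptions added here so the loop can be exercised with a stub:

```shell
# ssh client to use; override it to dry-run the check without a network.
SSH=${SSH:-ssh}

# Try a non-interactive login to every node and report the result.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_trust() {
  for h in hb1 hb2 hb3; do
    if "$SSH" -o BatchMode=yes -o ConnectTimeout=5 "hadoop@$h" true 2>/dev/null; then
      echo "$h ok"
    else
      echo "$h FAILED"
    fi
  done
}

# Usage: check_trust
```

Any host reported as FAILED still needs its public key copied with ssh-copy-id.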

Download and install Hadoop on all nodes (or download on one machine and copy it to the others)

# cd /usr/local/

# wget https://downloads.apache.org/hadoop/common/hadoop-2.10.1/hadoop-2.10.1.tar.gz

# tar -zxvf ./hadoop-2.10.1.tar.gz

# chown -R hadoop:hadoop ./hadoop-2.10.1

# ln -s ./hadoop-2.10.1 hadoop-2.10

On all nodes, append the environment variables to the end of /etc/profile

# vi /etc/profile

export HADOOP_HOME=/usr/local/hadoop-2.10

export PATH=.:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${PATH}

On all nodes, log in again or source the environment file

# source /etc/profile

Create the required directories on all nodes

# su - hadoop

$ mkdir -p /home/hadoop/{data,tmp,name,journal}

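hadoop 2.10.x configuration

Edit the following files on node hb1 only. The relative paths below assume the current directory is /usr/local/hadoop-2.10.

1.hadoop-env.sh and yarn-env.sh: set JAVA_HOME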

$ vi ./etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

$ vi ./etc/hadoop/yarn-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

2.core-site.xml

Adjust the values as appropriate for different nodes

$ vi ./etc/hadoop/core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop-ha</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hb1:2181,hb2:2181,hb3:2181</value>

</property>
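
A misspelled host name in ha.zookeeper.quorum is easy to miss and only surfaces later, when the ZKFC cannot reach ZooKeeper. The sketch below checks a quorum string against the node plan; `KNOWN_HOSTS` and `unknown_quorum_hosts` are illustrative names, not part of Hadoop:

```shell
# Host names from the node plan.
KNOWN_HOSTS="hb1 hb2 hb3"

# Print every host in a "host:port,host:port" list that is not in KNOWN_HOSTS.
# Returns non-zero when at least one unknown host is found.
unknown_quorum_hosts() {
  status=0
  for entry in $(echo "$1" | tr ',' ' '); do
    host=${entry%%:*}          # strip the ":port" suffix
    case " $KNOWN_HOSTS " in
      *" $host "*) ;;          # known host, nothing to report
      *) echo "$host"; status=1 ;;
    esac
  done
  return $status
}

# Usage: unknown_quorum_hosts "hb1:2181,hb2:2181,hb3:2181"
```

The same check can be applied to the value of yarn.resourcemanager.zk-address.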

3.hdfs-site.xml

$ vi ./etc/hadoop/hdfs-site.xml

<property>

<name>dfs.nameservices</name>

<value>hadoop-ha</value>

</property>

<property>

<name>dfs.ha.namenodes.hadoop-ha</name>

<value>name-1,name-2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hadoop-ha.name-1</name>

<value>hb1:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.hadoop-ha.name-1</name>

<value>hb1:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hadoop-ha.name-2</name>

<value>hb2:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.hadoop-ha.name-2</name>

<value>hb2:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hb1:8485;hb2:8485;hb3:8485/hadoop-ha</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/journal</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.hadoop-ha</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

4.mapred-site.xml

$ cp ./etc/hadoop/mapred-site.xml.template ./etc/hadoop/mapred-site.xml

$ vi ./etc/hadoop/mapred-site.xml

<!-- MapReduce runs on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

5.yarn-site.xml

$ vi ./etc/hadoop/yarn-site.xml

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-ha</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>resource-1,resource-2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.resource-1</name>

<value>hb1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.resource-2</name>

<value>hb2</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>hb1:2181,hb2:2181,hb3:2181</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

6.Edit the slaves file (list all data nodes)

$ vi ./etc/hadoop/slaves

hb1

hb2

hb3

Back up the files locally on node hb1 (the directory /home/hadoop/tmp_peiyb must already exist)

cp /usr/local/hadoop-2.10/etc/hadoop/core-site.xml /home/hadoop/tmp_peiyb/core-site.xml;

cp /usr/local/hadoop-2.10/etc/hadoop/hdfs-site.xml /home/hadoop/tmp_peiyb/hdfs-site.xml;

cp /usr/local/hadoop-2.10/etc/hadoop/mapred-site.xml /home/hadoop/tmp_peiyb/mapred-site.xml;

cp /usr/local/hadoop-2.10/etc/hadoop/slaves /home/hadoop/tmp_peiyb/slaves;

cp /usr/local/hadoop-2.10/etc/hadoop/yarn-site.xml /home/hadoop/tmp_peiyb/yarn-site.xml;

cp /usr/local/hadoop-2.10/etc/hadoop/hadoop-env.sh /home/hadoop/tmp_peiyb/hadoop-env.sh;

cp /usr/local/hadoop-2.10/etc/hadoop/yarn-env.sh /home/hadoop/tmp_peiyb/yarn-env.sh;

Sync the configuration files from hb1 to hb2 and hb3

scp /usr/local/hadoop-2.10/etc/hadoop/core-site.xml hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/core-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/hdfs-site.xml hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/hdfs-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/mapred-site.xml hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/mapred-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/slaves hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/slaves;

scp /usr/local/hadoop-2.10/etc/hadoop/yarn-site.xml hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/yarn-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/hadoop-env.sh hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/hadoop-env.sh;

scp /usr/local/hadoop-2.10/etc/hadoop/yarn-env.sh hadoop@hb2:/usr/local/hadoop-2.10/etc/hadoop/yarn-env.sh;

scp /usr/local/hadoop-2.10/etc/hadoop/core-site.xml hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/core-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/hdfs-site.xml hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/hdfs-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/mapred-site.xml hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/mapred-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/slaves hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/slaves;

scp /usr/local/hadoop-2.10/etc/hadoop/yarn-site.xml hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/yarn-site.xml;

scp /usr/local/hadoop-2.10/etc/hadoop/hadoop-env.sh hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/hadoop-env.sh;

scp /usr/local/hadoop-2.10/etc/hadoop/yarn-env.sh hadoop@hb3:/usr/local/hadoop-2.10/etc/hadoop/yarn-env.sh;
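
The fourteen scp commands above follow one pattern, so they can also be generated by a loop. A minimal sketch that prints the commands for review rather than running them; the variable and function names are chosen here for illustration:

```shell
CONF_DIR=/usr/local/hadoop-2.10/etc/hadoop
CONF_FILES="core-site.xml hdfs-site.xml mapred-site.xml slaves yarn-site.xml hadoop-env.sh yarn-env.sh"
TARGETS="hb2 hb3"

# Print one scp command per (target host, file) pair instead of running it,
# so the list can be checked before anything is copied.
sync_commands() {
  for host in $TARGETS; do
    for f in $CONF_FILES; do
      echo "scp $CONF_DIR/$f hadoop@$host:$CONF_DIR/$f"
    done
  done
}

# Review:  sync_commands
# Execute: sync_commands | sh
```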

Format

Start the JournalNode (JN) on hb1, hb2, and hb3 in turn.

$ hadoop-daemon.sh start journalnode

Run on the primary node hb1 to format HDFS

$ hdfs namenode -format

Run on hb1 to start the NameNode (NN)

$ hadoop-daemon.sh start namenode

Run on hb2 to bootstrap the standby NameNode, which copies the namespace metadata from the NameNode on hb1

$ hdfs namenode -bootstrapStandby

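Run on the primary node hb1 to initialize the failover-control state in ZooKeeper (run once only; the ZooKeeper ensemble listed in ha.zookeeper.quorum must already be running)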

$ hdfs zkfc -formatZK

Start hadoop

Run on the primary node hb1

$ start-dfs.sh

$ jps
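
Once start-dfs.sh has brought up both NameNodes and the ZKFC daemons, one NameNode should report active and the other standby. The sketch below queries both IDs from hdfs-site.xml with `hdfs haadmin -getServiceState`; the `HDFS` variable and the function names are assumptions added so the logic can be tried without a cluster:

```shell
# Command used to talk to HDFS; override it to test without a cluster.
HDFS=${HDFS:-hdfs}

# Print "<namenode-id> <state>" for both NameNode IDs from hdfs-site.xml.
ha_states() {
  for id in name-1 name-2; do
    state=$("$HDFS" haadmin -getServiceState "$id" 2>/dev/null) || state=unreachable
    echo "$id $state"
  done
}

# Succeed only when exactly one NameNode is active and one is standby.
ha_healthy() {
  states=$(ha_states)
  [ "$(echo "$states" | grep -c ' active$')" -eq 1 ] &&
    [ "$(echo "$states" | grep -c ' standby$')" -eq 1 ]
}

# Usage: ha_states; ha_healthy && echo "HA looks fine"
```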

Run on the primary node hb1

$ start-yarn.sh

$ jps

849 JournalNode

2626 ResourceManager

2805 NodeManager

2470 DFSZKFailoverController

1063 NameNode

2108 DataNode

3213 Jps
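
Comparing the jps listing against the expected daemons by eye gets tedious across several nodes. A small sketch that reports what is missing, assuming the daemon set shown above for hb1 (other nodes run a subset, so `EXPECTED` would need adjusting there; the names are illustrative):

```shell
# Daemons expected on hb1, taken from the jps listing above.
EXPECTED="JournalNode NameNode DataNode DFSZKFailoverController ResourceManager NodeManager"

# Read jps output ("<pid> <name>" per line) on stdin and print each expected
# daemon that is absent. Returns non-zero if anything is missing.
missing_daemons() {
  running=$(awk '{print $2}')
  status=0
  for d in $EXPECTED; do
    if ! echo "$running" | grep -qx "$d"; then
      echo "$d"
      status=1
    fi
  done
  return $status
}

# Usage on hb1: jps | missing_daemons
```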

Check HDFS

$ hdfs dfsadmin -report

Configured Capacity: 151298519040 (140.91 GB)

Present Capacity: 126000730112 (117.35 GB)

DFS Remaining: 126000717824 (117.35 GB)

DFS Used: 12288 (12 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.56.221:50010 (hb1)

Hostname: hb1

Decommission Status : Normal

Configured Capacity: 50432839680 (46.97 GB)

DFS Used: 4096 (4 KB)

Non DFS Used: 8434872320 (7.86 GB)

DFS Remaining: 41997963264 (39.11 GB)

DFS Used%: 0.00%

DFS Remaining%: 83.28%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Aug 24 11:09:44 CST 2021

Last Block Report: Tue Aug 24 11:07:50 CST 2021

Name: 192.168.56.222:50010 (hb2)

Hostname: hb2

Decommission Status : Normal

Configured Capacity: 50432839680 (46.97 GB)

DFS Used: 4096 (4 KB)

Non DFS Used: 8430780416 (7.85 GB)

DFS Remaining: 42002055168 (39.12 GB)

DFS Used%: 0.00%

DFS Remaining%: 83.28%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Aug 24 11:09:44 CST 2021

Last Block Report: Tue Aug 24 11:07:50 CST 2021

Name: 192.168.56.223:50010 (hb3)

Hostname: hb3

Decommission Status : Normal

Configured Capacity: 50432839680 (46.97 GB)

DFS Used: 4096 (4 KB)

Non DFS Used: 8432136192 (7.85 GB)

DFS Remaining: 42000699392 (39.12 GB)

DFS Used%: 0.00%

DFS Remaining%: 83.28%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Aug 24 11:09:44 CST 2021

Last Block Report: Tue Aug 24 11:07:50 CST 2021
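
The key figure in this report is the number of live DataNodes, which should match the three hosts in the slaves file. A minimal sketch that extracts it from the report text; the function names are made up for illustration:

```shell
# Read `hdfs dfsadmin -report` output on stdin and print the live DataNode count.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# Succeed only if the report shows the expected number of live DataNodes.
expect_datanodes() {
  [ "$(live_datanodes)" = "$1" ]
}

# Usage: hdfs dfsadmin -report | expect_datanodes 3
```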

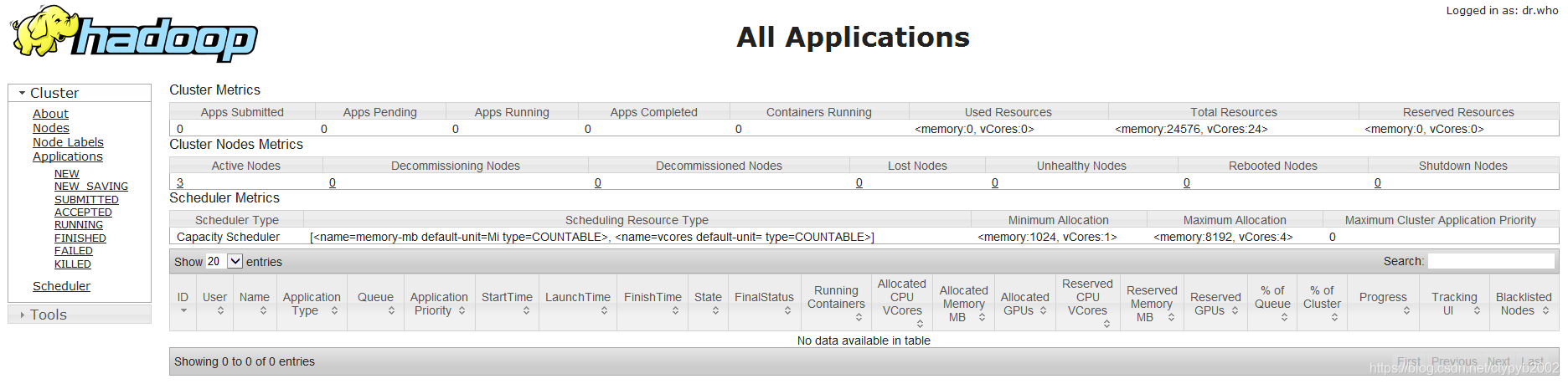

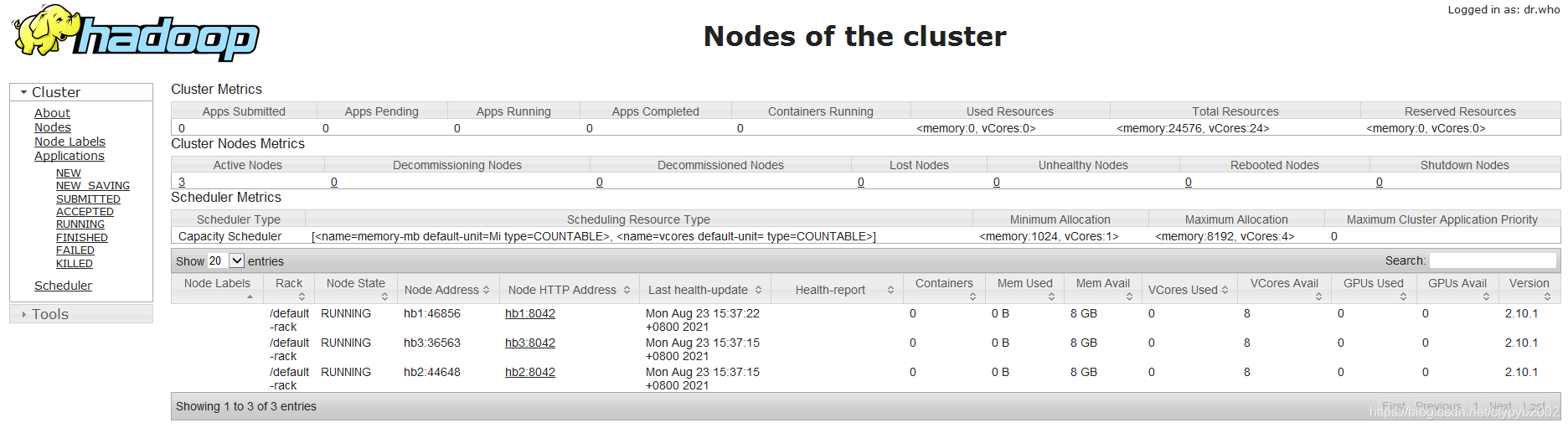

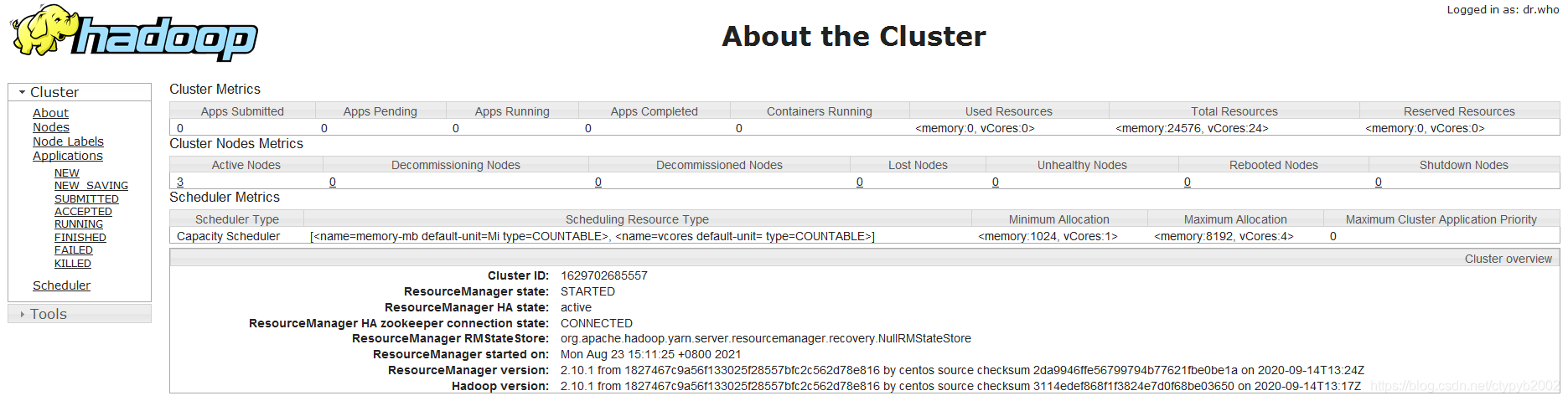

Cluster resources can be viewed at http://192.168.56.221:8088

Verify HDFS

$ hadoop fs -mkdir -p /peiyb/tmp

$ hadoop fs -ls -R /

drwxr-xr-x - hadoop supergroup 0 2021-08-23 15:21 /peiyb

drwxr-xr-x - hadoop supergroup 0 2021-08-23 15:21 /peiyb/tmp

Verify MapReduce

$ hadoop jar /usr/local/hadoop-2.10/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.1.jar pi 2 1000

References:

http://hadoop.apache.org/docs/r3.1.0/hadoop-project-dist/hadoop-common/SingleCluster.html

http://hadoop.apache.org/docs/r3.1.0/hadoop-project-dist/hadoop-common/ClusterSetup.html

https://blog.csdn.net/qq_37823605/article/details/90477863