What Is the Spark Event Log

The Spark Event Log is a record of the main events that occur inside Spark, such as when a Spark application starts and stops, and when each task starts and finishes.

Why Do We Need the Spark Event Log

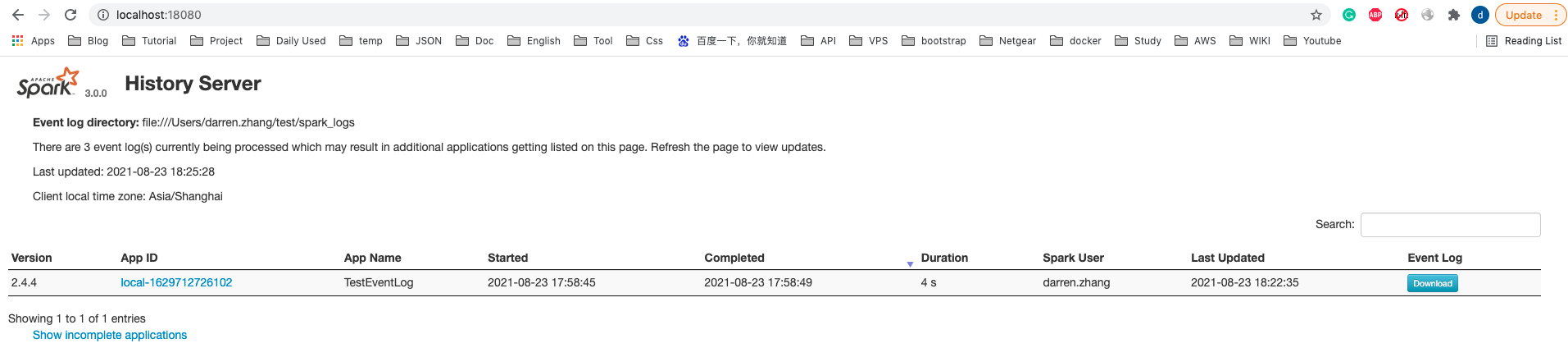

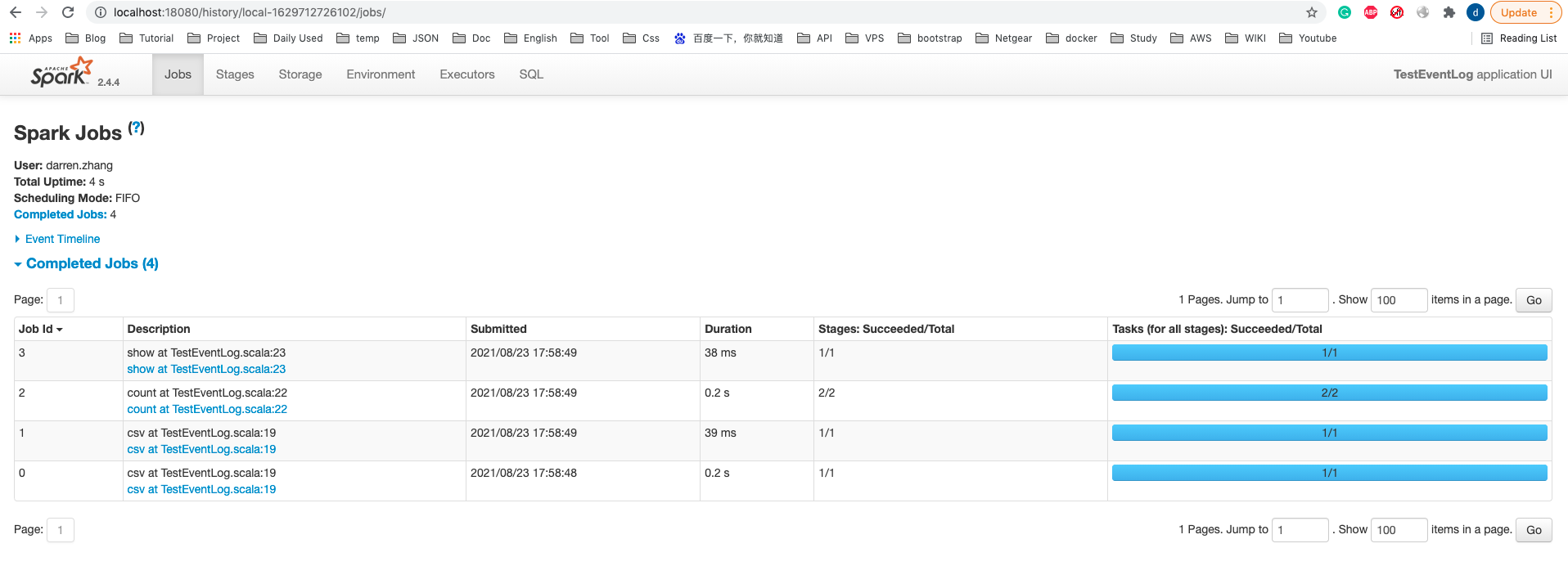

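As we all know, Spark starts the Spark UI when an application launches, and the UI lets us monitor the application's status. Once the application has finished, however, the Spark UI is no longer available, so if something went wrong during execution there is no quick way to track down the cause. That is why the Spark events need to be persisted to disk. The Spark History Server can then read the Spark Event Log and reconstruct what happened at runtime, which helps us analyze problems quickly.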

How to Enable the Spark Event Log

The Spark Event Log is disabled by default. To enable it, set the following parameters:

spark.eventLog.enabled=true

spark.eventLog.dir=hdfs:///user/spark/applicationHistory

Note: the storage location of the Spark Event Log is flexible; it can be HDFS, the local file system, or S3.
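
The two properties can be placed in spark-defaults.conf or passed per job through spark-submit's `--conf` flag. As a rough sketch, the Python snippet below builds those flags. The helper name `event_log_args` and the jar name `my-app.jar` are hypothetical and exist only for illustration.

```python
def event_log_args(log_dir):
    """Return spark-submit arguments that enable event logging to log_dir."""
    conf = {
        "spark.eventLog.enabled": "true",
        "spark.eventLog.dir": log_dir,
    }
    args = []
    for key, value in conf.items():
        # Each property is passed as its own "--conf key=value" pair.
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # "my-app.jar" is a hypothetical application jar; only the --conf flags matter here.
    cmd = (
        ["spark-submit"]
        + event_log_args("hdfs:///user/spark/applicationHistory")
        + ["my-app.jar"]
    )
    print(" ".join(cmd))
```

For the History Server to show these logs, its `spark.history.fs.logDirectory` setting should point to the same directory.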

What a Spark Event Log Looks Like
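
An event log is a text file in which each event is one JSON object with an "Event" field naming the listener event. Because of that, the file can be inspected with a few lines of code. The following is a minimal sketch, assuming an uncompressed log with exactly one event per line. The function name `summarize_event_log` is hypothetical. It counts the event types and derives each job's duration from the "Submission Time" of SparkListenerJobStart and the "Completion Time" of SparkListenerJobEnd.

```python
import json
from collections import Counter


def summarize_event_log(lines):
    """Count event types and compute per-job durations (ms) from event log lines."""
    counts = Counter()
    job_start = {}
    job_duration_ms = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        name = event["Event"]
        counts[name] += 1
        if name == "SparkListenerJobStart":
            job_start[event["Job ID"]] = event["Submission Time"]
        elif name == "SparkListenerJobEnd":
            job_id = event["Job ID"]
            if job_id in job_start:
                job_duration_ms[job_id] = event["Completion Time"] - job_start[job_id]
    return counts, job_duration_ms


if __name__ == "__main__":
    # Trimmed-down events that reuse the Job 0 timestamps from the sample log.
    sample = [
        '{"Event":"SparkListenerLogStart","Spark Version":"2.4.4"}',
        '{"Event":"SparkListenerJobStart","Job ID":0,"Submission Time":1629712728711}',
        '{"Event":"SparkListenerJobEnd","Job ID":0,"Completion Time":1629712728913,'
        '"Job Result":{"Result":"JobSucceeded"}}',
    ]
    counts, durations = summarize_event_log(sample)
    print(dict(counts))
    print(durations)  # {0: 202}
```

With a real log, the same function can be given an open file object, for example `summarize_event_log(open(path))`. The events of a sample run of a small test application named TestEventLog follow.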

{"Event":"SparkListenerLogStart","Spark Version":"2.4.4"}

{"Event":"SparkListenerExecutorAdded","Timestamp":1629712726157,"Executor ID":"driver","Executor Info":{"Host":"localhost","Total Cores":1,"Log Urls":{}}}

{"Event":"SparkListenerBlockManagerAdded","Block Manager ID":{"Executor ID":"driver","Host":"ip-172-29-6-153.ap-northeast-2.compute.internal","Port":55466},"Maximum Memory":2101975449,"Timestamp":1629712726182,"Maximum Onheap Memory":2101975449,"Maximum Offheap Memory":0}

{"Event":"SparkListenerEnvironmentUpdate","JVM Information":{"Java Home":"/Library/Java/JavaVirtualMachines/jdk1.8.0_231.jdk/Contents/Home/jre","Java Version":"1.8.0_231 (Oracle Corporation)","Scala Version":"version 2.11.12"}}

{"Event":"SparkListenerApplicationStart","App Name":"TestEventLog","App ID":"local-1629712726102","Timestamp":1629712725234,"User":"darren.zhang"}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerSQLExecutionStart","executionId":0,"description":"csv at TestEventLog.scala:19","details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","physicalPlanDescription":"== Parsed Logical Plan ==\nGlobalLimit 1\n+- LocalLimit 1\n +- Filter (length(trim(value#0, None)) > 0)\n +- Project [value#0]\n +- Relation[value#0] text\n\n== Analyzed Logical Plan ==\nvalue: string\nGlobalLimit 1\n+- LocalLimit 1\n +- Filter (length(trim(value#0, None)) > 0)\n +- Project [value#0]\n +- Relation[value#0] text\n\n== Optimized Logical Plan ==\nGlobalLimit 1\n+- LocalLimit 1\n +- Filter (length(trim(value#0, None)) > 0)\n +- Relation[value#0] text\n\n== Physical Plan ==\nCollectLimit 1\n+- *(1) Filter (length(trim(value#0, None)) > 0)\n +- *(1) FileScan text [value#0] Batched: false, Format: Text, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=2/file3.c..., PartitionFilters: [], PushedFilters: [], ReadSchema: struct<value:string>","sparkPlanInfo":{"nodeName":"CollectLimit","simpleString":"CollectLimit 1","children":[{"nodeName":"WholeStageCodegen","simpleString":"WholeStageCodegen","children":[{"nodeName":"Filter","simpleString":"Filter (length(trim(value#0, None)) > 0)","children":[{"nodeName":"Scan text ","simpleString":"FileScan text [value#0] Batched: false, Format: Text, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=2/file3.c..., PartitionFilters: [], PushedFilters: [], ReadSchema: struct<value:string>","children":[],"metadata":{"Location":"InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=2/file3.csv, file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=2/file4.csv, file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=3/file6.csv, 
file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=3/file5.csv, file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=1/file1.csv, file:/Users/darren.zhang/project/workspace/darren-spark/test_file/key=1/file2.csv]","ReadSchema":"struct<value:string>","Format":"Text","Batched":"false","PartitionFilters":"[]","PushedFilters":"[]"},"metrics":[{"name":"number of output rows","accumulatorId":2,"metricType":"sum"},{"name":"number of files","accumulatorId":3,"metricType":"sum"},{"name":"metadata time (ms)","accumulatorId":4,"metricType":"sum"},{"name":"scan time total (min, med, max)","accumulatorId":5,"metricType":"timing"}]}],"metadata":{},"metrics":[{"name":"number of output rows","accumulatorId":1,"metricType":"sum"}]}],"metadata":{},"metrics":[{"name":"duration total (min, med, max)","accumulatorId":0,"metricType":"timing"}]}],"metadata":{},"metrics":[]},"time":1629712728178}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerDriverAccumUpdates","executionId":0,"accumUpdates":[[3,6],[4,1]]}

{"Event":"SparkListenerJobStart","Job ID":0,"Submission Time":1629712728711,"Stage Infos":[{"Stage ID":0,"Stage Attempt ID":0,"Stage Name":"csv at TestEventLog.scala:19","Number of Tasks":1,"RDD Info":[{"RDD ID":3,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"4\",\"name\":\"map\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[2],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":0,"Name":"FileScanRDD","Scope":"{\"id\":\"0\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":1,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"0\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[0],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":2,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"3\",\"name\":\"mapPartitionsInternal\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[1],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","Accumulables":[]}],"Stage 
IDs":[0],"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"0","spark.app.id":"local-1629712726102"}}

{"Event":"SparkListenerStageSubmitted","Stage Info":{"Stage ID":0,"Stage Attempt ID":0,"Stage Name":"csv at TestEventLog.scala:19","Number of Tasks":1,"RDD Info":[{"RDD ID":3,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"4\",\"name\":\"map\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[2],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":0,"Name":"FileScanRDD","Scope":"{\"id\":\"0\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":1,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"0\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[0],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":2,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"3\",\"name\":\"mapPartitionsInternal\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[1],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","Submission 
Time":1629712728723,"Accumulables":[]},"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"0","spark.app.id":"local-1629712726102"}}

{"Event":"SparkListenerTaskStart","Stage ID":0,"Stage Attempt ID":0,"Task Info":{"Task ID":0,"Index":0,"Attempt":0,"Launch Time":1629712728789,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":0,"Failed":false,"Killed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":0,"Stage Attempt ID":0,"Task Type":"ResultTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":0,"Index":0,"Attempt":0,"Launch Time":1629712728789,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1629712728902,"Failed":false,"Killed":false,"Accumulables":[{"ID":1,"Name":"number of output rows","Update":"1","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":2,"Name":"number of output rows","Update":"1","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":28,"Name":"internal.metrics.input.recordsRead","Update":1,"Value":1,"Internal":true,"Count Failed Values":true},{"ID":27,"Name":"internal.metrics.input.bytesRead","Update":25,"Value":25,"Internal":true,"Count Failed Values":true},{"ID":10,"Name":"internal.metrics.resultSize","Update":1275,"Value":1275,"Internal":true,"Count Failed Values":true},{"ID":9,"Name":"internal.metrics.executorCpuTime","Update":68016000,"Value":68016000,"Internal":true,"Count Failed Values":true},{"ID":8,"Name":"internal.metrics.executorRunTime","Update":72,"Value":72,"Internal":true,"Count Failed Values":true},{"ID":7,"Name":"internal.metrics.executorDeserializeCpuTime","Update":15237000,"Value":15237000,"Internal":true,"Count Failed Values":true},{"ID":6,"Name":"internal.metrics.executorDeserializeTime","Update":17,"Value":17,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":17,"Executor Deserialize CPU Time":15237000,"Executor Run Time":72,"Executor CPU Time":68016000,"Result Size":1275,"JVM GC Time":0,"Result Serialization Time":0,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":0,"Fetch Wait Time":0,"Remote Bytes Read":0,"Remote Bytes Read To Disk":0,"Local Bytes Read":0,"Total Records Read":0},"Shuffle Write Metrics":{"Shuffle Bytes 
Written":0,"Shuffle Write Time":0,"Shuffle Records Written":0},"Input Metrics":{"Bytes Read":25,"Records Read":1},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}

{"Event":"SparkListenerStageCompleted","Stage Info":{"Stage ID":0,"Stage Attempt ID":0,"Stage Name":"csv at TestEventLog.scala:19","Number of Tasks":1,"RDD Info":[{"RDD ID":3,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"4\",\"name\":\"map\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[2],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":0,"Name":"FileScanRDD","Scope":"{\"id\":\"0\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":1,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"0\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[0],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":2,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"3\",\"name\":\"mapPartitionsInternal\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[1],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712728723,"Completion Time":1629712728910,"Accumulables":[{"ID":8,"Name":"internal.metrics.executorRunTime","Value":72,"Internal":true,"Count Failed Values":true},{"ID":2,"Name":"number of output rows","Value":"1","Internal":true,"Count Failed 
Values":true,"Metadata":"sql"},{"ID":7,"Name":"internal.metrics.executorDeserializeCpuTime","Value":15237000,"Internal":true,"Count Failed Values":true},{"ID":1,"Name":"number of output rows","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":28,"Name":"internal.metrics.input.recordsRead","Value":1,"Internal":true,"Count Failed Values":true},{"ID":10,"Name":"internal.metrics.resultSize","Value":1275,"Internal":true,"Count Failed Values":true},{"ID":27,"Name":"internal.metrics.input.bytesRead","Value":25,"Internal":true,"Count Failed Values":true},{"ID":9,"Name":"internal.metrics.executorCpuTime","Value":68016000,"Internal":true,"Count Failed Values":true},{"ID":6,"Name":"internal.metrics.executorDeserializeTime","Value":17,"Internal":true,"Count Failed Values":true}]}}

{"Event":"SparkListenerJobEnd","Job ID":0,"Completion Time":1629712728913,"Job Result":{"Result":"JobSucceeded"}}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerSQLExecutionEnd","executionId":0,"time":1629712728918}

{"Event":"SparkListenerJobStart","Job ID":1,"Submission Time":1629712729028,"Stage Infos":[{"Stage ID":1,"Stage Attempt ID":0,"Stage Name":"csv at TestEventLog.scala:19","Number of Tasks":1,"RDD Info":[{"RDD ID":8,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"13\",\"name\":\"mapPartitions\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[7],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":4,"Name":"FileScanRDD","Scope":"{\"id\":\"10\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":6,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"9\",\"name\":\"DeserializeToObject\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[5],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":7,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"12\",\"name\":\"mapPartitions\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[6],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":5,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"10\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[4],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent 
IDs":[],"Details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","Accumulables":[]}],"Stage IDs":[1],"Properties":{"spark.rdd.scope.noOverride":"true","spark.rdd.scope":"{\"id\":\"14\",\"name\":\"aggregate\"}"}}

{"Event":"SparkListenerStageSubmitted","Stage Info":{"Stage ID":1,"Stage Attempt ID":0,"Stage Name":"csv at TestEventLog.scala:19","Number of Tasks":1,"RDD Info":[{"RDD ID":8,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"13\",\"name\":\"mapPartitions\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[7],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":4,"Name":"FileScanRDD","Scope":"{\"id\":\"10\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":6,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"9\",\"name\":\"DeserializeToObject\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[5],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":7,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"12\",\"name\":\"mapPartitions\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[6],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":5,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"10\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[4],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent 
IDs":[],"Details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729029,"Accumulables":[]},"Properties":{"spark.rdd.scope.noOverride":"true","spark.rdd.scope":"{\"id\":\"14\",\"name\":\"aggregate\"}"}}

{"Event":"SparkListenerTaskStart","Stage ID":1,"Stage Attempt ID":0,"Task Info":{"Task ID":1,"Index":0,"Attempt":0,"Launch Time":1629712729035,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":0,"Failed":false,"Killed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":1,"Stage Attempt ID":0,"Task Type":"ResultTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":1,"Index":0,"Attempt":0,"Launch Time":1629712729035,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1629712729066,"Failed":false,"Killed":false,"Accumulables":[{"ID":31,"Name":"number of output rows","Update":"12","Value":"12","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":35,"Name":"duration total (min, med, max)","Update":"17","Value":"16","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":58,"Name":"internal.metrics.input.recordsRead","Update":12,"Value":12,"Internal":true,"Count Failed Values":true},{"ID":57,"Name":"internal.metrics.input.bytesRead","Update":133,"Value":133,"Internal":true,"Count Failed Values":true},{"ID":40,"Name":"internal.metrics.resultSize","Update":1529,"Value":1529,"Internal":true,"Count Failed Values":true},{"ID":39,"Name":"internal.metrics.executorCpuTime","Update":19235000,"Value":19235000,"Internal":true,"Count Failed Values":true},{"ID":38,"Name":"internal.metrics.executorRunTime","Update":21,"Value":21,"Internal":true,"Count Failed Values":true},{"ID":37,"Name":"internal.metrics.executorDeserializeCpuTime","Update":5552000,"Value":5552000,"Internal":true,"Count Failed Values":true},{"ID":36,"Name":"internal.metrics.executorDeserializeTime","Update":6,"Value":6,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":6,"Executor Deserialize CPU Time":5552000,"Executor Run Time":21,"Executor CPU Time":19235000,"Result Size":1529,"JVM GC Time":0,"Result Serialization Time":0,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":0,"Fetch Wait Time":0,"Remote Bytes Read":0,"Remote Bytes Read To Disk":0,"Local Bytes Read":0,"Total Records Read":0},"Shuffle Write 
Metrics":{"Shuffle Bytes Written":0,"Shuffle Write Time":0,"Shuffle Records Written":0},"Input Metrics":{"Bytes Read":133,"Records Read":12},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}

{"Event":"SparkListenerStageCompleted","Stage Info":{"Stage ID":1,"Stage Attempt ID":0,"Stage Name":"csv at TestEventLog.scala:19","Number of Tasks":1,"RDD Info":[{"RDD ID":8,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"13\",\"name\":\"mapPartitions\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[7],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":4,"Name":"FileScanRDD","Scope":"{\"id\":\"10\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":6,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"9\",\"name\":\"DeserializeToObject\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[5],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":7,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"12\",\"name\":\"mapPartitions\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[6],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":5,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"10\",\"name\":\"WholeStageCodegen\"}","Callsite":"csv at TestEventLog.scala:19","Parent IDs":[4],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent 
IDs":[],"Details":"org.apache.spark.sql.DataFrameReader.csv(DataFrameReader.scala:467)\nTestEventLog$.main(TestEventLog.scala:19)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729029,"Completion Time":1629712729066,"Accumulables":[{"ID":35,"Name":"duration total (min, med, max)","Value":"16","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":38,"Name":"internal.metrics.executorRunTime","Value":21,"Internal":true,"Count Failed Values":true},{"ID":31,"Name":"number of output rows","Value":"12","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":58,"Name":"internal.metrics.input.recordsRead","Value":12,"Internal":true,"Count Failed Values":true},{"ID":40,"Name":"internal.metrics.resultSize","Value":1529,"Internal":true,"Count Failed Values":true},{"ID":37,"Name":"internal.metrics.executorDeserializeCpuTime","Value":5552000,"Internal":true,"Count Failed Values":true},{"ID":36,"Name":"internal.metrics.executorDeserializeTime","Value":6,"Internal":true,"Count Failed Values":true},{"ID":57,"Name":"internal.metrics.input.bytesRead","Value":133,"Internal":true,"Count Failed Values":true},{"ID":39,"Name":"internal.metrics.executorCpuTime","Value":19235000,"Internal":true,"Count Failed Values":true}]}}

{"Event":"SparkListenerJobEnd","Job ID":1,"Completion Time":1629712729067,"Job Result":{"Result":"JobSucceeded"}}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerSQLExecutionStart","executionId":1,"description":"count at TestEventLog.scala:22","details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","physicalPlanDescription":"== Parsed Logical Plan ==\nAggregate [count(1) AS count#43L]\n+- Relation[id#10,name#11,age#12,key#13] csv\n\n== Analyzed Logical Plan ==\ncount: bigint\nAggregate [count(1) AS count#43L]\n+- Relation[id#10,name#11,age#12,key#13] csv\n\n== Optimized Logical Plan ==\nAggregate [count(1) AS count#43L]\n+- Project\n +- InMemoryRelation [id#10, name#11, age#12, key#13], StorageLevel(disk, memory, deserialized, 1 replicas)\n +- *(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n\n== Physical Plan ==\n*(2) HashAggregate(keys=[], functions=[count(1)], output=[count#43L])\n+- Exchange SinglePartition\n +- *(1) HashAggregate(keys=[], functions=[partial_count(1)], output=[count#66L])\n +- InMemoryTableScan\n +- InMemoryRelation [id#10, name#11, age#12, key#13], StorageLevel(disk, memory, deserialized, 1 replicas)\n +- *(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>","sparkPlanInfo":{"nodeName":"WholeStageCodegen","simpleString":"WholeStageCodegen","children":[{"nodeName":"HashAggregate","simpleString":"HashAggregate(keys=[], functions=[count(1)])","children":[{"nodeName":"InputAdapter","simpleString":"InputAdapter","children":[{"nodeName":"Exchange","simpleString":"Exchange 
SinglePartition","children":[{"nodeName":"WholeStageCodegen","simpleString":"WholeStageCodegen","children":[{"nodeName":"HashAggregate","simpleString":"HashAggregate(keys=[], functions=[partial_count(1)])","children":[{"nodeName":"InputAdapter","simpleString":"InputAdapter","children":[{"nodeName":"InMemoryTableScan","simpleString":"InMemoryTableScan","children":[],"metadata":{},"metrics":[{"name":"number of output rows","accumulatorId":76,"metricType":"sum"},{"name":"scan time total (min, med, max)","accumulatorId":77,"metricType":"timing"}]}],"metadata":{},"metrics":[]}],"metadata":{},"metrics":[{"name":"spill size total (min, med, max)","accumulatorId":73,"metricType":"size"},{"name":"aggregate time total (min, med, max)","accumulatorId":74,"metricType":"timing"},{"name":"peak memory total (min, med, max)","accumulatorId":72,"metricType":"size"},{"name":"number of output rows","accumulatorId":71,"metricType":"sum"},{"name":"avg hash probe (min, med, max)","accumulatorId":75,"metricType":"average"}]}],"metadata":{},"metrics":[{"name":"duration total (min, med, max)","accumulatorId":70,"metricType":"timing"}]}],"metadata":{},"metrics":[{"name":"data size total (min, med, max)","accumulatorId":63,"metricType":"size"}]}],"metadata":{},"metrics":[]}],"metadata":{},"metrics":[{"name":"spill size total (min, med, max)","accumulatorId":67,"metricType":"size"},{"name":"aggregate time total (min, med, max)","accumulatorId":68,"metricType":"timing"},{"name":"peak memory total (min, med, max)","accumulatorId":66,"metricType":"size"},{"name":"number of output rows","accumulatorId":65,"metricType":"sum"},{"name":"avg hash probe (min, med, max)","accumulatorId":69,"metricType":"average"}]}],"metadata":{},"metrics":[{"name":"duration total (min, med, max)","accumulatorId":64,"metricType":"timing"}]},"time":1629712729135}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerDriverAccumUpdates","executionId":1,"accumUpdates":[[79,6],[80,0]]}

{"Event":"SparkListenerJobStart","Job ID":2,"Submission Time":1629712729223,"Stage Infos":[{"Stage ID":2,"Stage Attempt ID":0,"Stage Name":"count at TestEventLog.scala:22","Number of Tasks":1,"RDD Info":[{"RDD ID":16,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"23\",\"name\":\"Exchange\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[15],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":10,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[9],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":11,"Name":"*(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[10],"Storage Level":{"Use Disk":true,"Use Memory":true,"Deserialized":true,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":9,"Name":"FileScanRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":13,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[11],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":14,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[13],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":15,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"24\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[14],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","Accumulables":[]},{"Stage ID":3,"Stage Attempt ID":0,"Stage Name":"count at TestEventLog.scala:22","Number of Tasks":1,"RDD Info":[{"RDD ID":19,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"30\",\"name\":\"mapPartitionsInternal\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[18],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":17,"Name":"ShuffledRowRDD","Scope":"{\"id\":\"23\",\"name\":\"Exchange\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[16],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":18,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"20\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[17],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[2],"Details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","Accumulables":[]}],"Stage IDs":[2,3],"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.rdd.scope":"{\"id\":\"31\",\"name\":\"collect\"}","spark.rdd.scope.noOverride":"true","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"1","spark.app.id":"local-1629712726102"}}

{"Event":"SparkListenerStageSubmitted","Stage Info":{"Stage ID":2,"Stage Attempt ID":0,"Stage Name":"count at TestEventLog.scala:22","Number of Tasks":1,"RDD Info":[{"RDD ID":16,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"23\",\"name\":\"Exchange\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[15],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":10,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[9],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":11,"Name":"*(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[10],"Storage Level":{"Use Disk":true,"Use Memory":true,"Deserialized":true,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":9,"Name":"FileScanRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":13,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[11],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":14,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[13],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":15,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"24\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[14],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729228,"Accumulables":[]},"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.rdd.scope":"{\"id\":\"31\",\"name\":\"collect\"}","spark.rdd.scope.noOverride":"true","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"1","spark.app.id":"local-1629712726102"}}

{"Event":"SparkListenerTaskStart","Stage ID":2,"Stage Attempt ID":0,"Task Info":{"Task ID":2,"Index":0,"Attempt":0,"Launch Time":1629712729250,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":0,"Failed":false,"Killed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":2,"Stage Attempt ID":0,"Task Type":"ShuffleMapTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":2,"Index":0,"Attempt":0,"Launch Time":1629712729250,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1629712729392,"Failed":false,"Killed":false,"Accumulables":[{"ID":63,"Name":"data size total (min, med, max)","Update":"15","Value":"14","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":71,"Name":"number of output rows","Update":"1","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":70,"Name":"duration total (min, med, max)","Update":"14","Value":"13","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":76,"Name":"number of output rows","Update":"6","Value":"6","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":78,"Name":"number of output rows","Update":"6","Value":"6","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":82,"Name":"duration total (min, med, max)","Update":"115","Value":"114","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":105,"Name":"internal.metrics.input.recordsRead","Update":6,"Value":6,"Internal":true,"Count Failed Values":true},{"ID":104,"Name":"internal.metrics.input.bytesRead","Update":133,"Value":133,"Internal":true,"Count Failed Values":true},{"ID":103,"Name":"internal.metrics.shuffle.write.writeTime","Update":10557643,"Value":10557643,"Internal":true,"Count Failed Values":true},{"ID":102,"Name":"internal.metrics.shuffle.write.recordsWritten","Update":1,"Value":1,"Internal":true,"Count Failed Values":true},{"ID":101,"Name":"internal.metrics.shuffle.write.bytesWritten","Update":59,"Value":59,"Internal":true,"Count Failed Values":true},{"ID":89,"Name":"internal.metrics.resultSerializationTime","Update":2,"Value":2,"Internal":true,"Count Failed 
Values":true},{"ID":87,"Name":"internal.metrics.resultSize","Update":1952,"Value":1952,"Internal":true,"Count Failed Values":true},{"ID":86,"Name":"internal.metrics.executorCpuTime","Update":126048000,"Value":126048000,"Internal":true,"Count Failed Values":true},{"ID":85,"Name":"internal.metrics.executorRunTime","Update":130,"Value":130,"Internal":true,"Count Failed Values":true},{"ID":84,"Name":"internal.metrics.executorDeserializeCpuTime","Update":5819000,"Value":5819000,"Internal":true,"Count Failed Values":true},{"ID":83,"Name":"internal.metrics.executorDeserializeTime","Update":6,"Value":6,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":6,"Executor Deserialize CPU Time":5819000,"Executor Run Time":130,"Executor CPU Time":126048000,"Result Size":1952,"JVM GC Time":0,"Result Serialization Time":2,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":0,"Fetch Wait Time":0,"Remote Bytes Read":0,"Remote Bytes Read To Disk":0,"Local Bytes Read":0,"Total Records Read":0},"Shuffle Write Metrics":{"Shuffle Bytes Written":59,"Shuffle Write Time":10557643,"Shuffle Records Written":1},"Input Metrics":{"Bytes Read":133,"Records Read":6},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}
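
The SparkListenerTaskEnd event above carries both timing information (`Task Info`) and resource counters (`Task Metrics`). As a rough illustration, the Python sketch below pulls a few headline numbers out of one such line. The function name `summarize_task_end` is hypothetical, and `sample_line` is a trimmed copy of the Task 2 event that keeps only the fields the function reads; a real log line contains many more fields.

```python
import json


def summarize_task_end(line: str) -> dict:
    """Extract a few headline numbers from one SparkListenerTaskEnd line."""
    event = json.loads(line)
    if event.get("Event") != "SparkListenerTaskEnd":
        raise ValueError("not a SparkListenerTaskEnd event")
    info = event["Task Info"]
    metrics = event["Task Metrics"]
    return {
        "stage_id": event["Stage ID"],
        "task_id": info["Task ID"],
        "task_type": event["Task Type"],
        # Launch Time and Finish Time are epoch timestamps in milliseconds.
        "wall_time_ms": info["Finish Time"] - info["Launch Time"],
        "executor_run_time_ms": metrics["Executor Run Time"],
        "input_bytes": metrics["Input Metrics"]["Bytes Read"],
        "shuffle_bytes_written": metrics["Shuffle Write Metrics"]["Shuffle Bytes Written"],
    }


# Trimmed copy of the Task 2 event shown above (illustrative subset of fields).
sample_line = (
    '{"Event":"SparkListenerTaskEnd","Stage ID":2,"Task Type":"ShuffleMapTask",'
    '"Task Info":{"Task ID":2,"Launch Time":1629712729250,"Finish Time":1629712729392},'
    '"Task Metrics":{"Executor Run Time":130,'
    '"Shuffle Write Metrics":{"Shuffle Bytes Written":59},'
    '"Input Metrics":{"Bytes Read":133}}}'
)

summary = summarize_task_end(sample_line)
print(summary["wall_time_ms"], summary["executor_run_time_ms"])  # 142 130
```

For this task the wall-clock time (142 ms) is slightly longer than the executor run time (130 ms); the gap covers work such as deserialization and result serialization that happens outside the run itself.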

{"Event":"SparkListenerStageCompleted","Stage Info":{"Stage ID":2,"Stage Attempt ID":0,"Stage Name":"count at TestEventLog.scala:22","Number of Tasks":1,"RDD Info":[{"RDD ID":16,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"23\",\"name\":\"Exchange\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[15],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":10,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[9],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":11,"Name":"*(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[10],"Storage Level":{"Use Disk":true,"Use Memory":true,"Deserialized":true,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":9,"Name":"FileScanRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":13,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[11],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":14,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[13],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":15,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"24\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[14],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729228,"Completion Time":1629712729393,"Accumulables":[{"ID":83,"Name":"internal.metrics.executorDeserializeTime","Value":6,"Internal":true,"Count Failed Values":true},{"ID":101,"Name":"internal.metrics.shuffle.write.bytesWritten","Value":59,"Internal":true,"Count Failed Values":true},{"ID":104,"Name":"internal.metrics.input.bytesRead","Value":133,"Internal":true,"Count Failed Values":true},{"ID":86,"Name":"internal.metrics.executorCpuTime","Value":126048000,"Internal":true,"Count Failed Values":true},{"ID":89,"Name":"internal.metrics.resultSerializationTime","Value":2,"Internal":true,"Count Failed Values":true},{"ID":71,"Name":"number of output rows","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":82,"Name":"duration total (min, med, max)","Value":"114","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":85,"Name":"internal.metrics.executorRunTime","Value":130,"Internal":true,"Count Failed Values":true},{"ID":76,"Name":"number of 
output rows","Value":"6","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":103,"Name":"internal.metrics.shuffle.write.writeTime","Value":10557643,"Internal":true,"Count Failed Values":true},{"ID":70,"Name":"duration total (min, med, max)","Value":"13","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":87,"Name":"internal.metrics.resultSize","Value":1952,"Internal":true,"Count Failed Values":true},{"ID":105,"Name":"internal.metrics.input.recordsRead","Value":6,"Internal":true,"Count Failed Values":true},{"ID":78,"Name":"number of output rows","Value":"6","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":63,"Name":"data size total (min, med, max)","Value":"14","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":84,"Name":"internal.metrics.executorDeserializeCpuTime","Value":5819000,"Internal":true,"Count Failed Values":true},{"ID":102,"Name":"internal.metrics.shuffle.write.recordsWritten","Value":1,"Internal":true,"Count Failed Values":true}]}}

{"Event":"SparkListenerStageSubmitted","Stage Info":{"Stage ID":3,"Stage Attempt ID":0,"Stage Name":"count at TestEventLog.scala:22","Number of Tasks":1,"RDD Info":[{"RDD ID":19,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"30\",\"name\":\"mapPartitionsInternal\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[18],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":17,"Name":"ShuffledRowRDD","Scope":"{\"id\":\"23\",\"name\":\"Exchange\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[16],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":18,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"20\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[17],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[2],"Details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729403,"Accumulables":[]},"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.rdd.scope":"{\"id\":\"31\",\"name\":\"collect\"}","spark.rdd.scope.noOverride":"true","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"1","spark.app.id":"local-1629712726102"}}

{"Event":"SparkListenerTaskStart","Stage ID":3,"Stage Attempt ID":0,"Task Info":{"Task ID":3,"Index":0,"Attempt":0,"Launch Time":1629712729411,"Executor ID":"driver","Host":"localhost","Locality":"ANY","Speculative":false,"Getting Result Time":0,"Finish Time":0,"Failed":false,"Killed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":3,"Stage Attempt ID":0,"Task Type":"ResultTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":3,"Index":0,"Attempt":0,"Launch Time":1629712729411,"Executor ID":"driver","Host":"localhost","Locality":"ANY","Speculative":false,"Getting Result Time":0,"Finish Time":1629712729462,"Failed":false,"Killed":false,"Accumulables":[{"ID":68,"Name":"aggregate time total (min, med, max)","Update":"5","Value":"4","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":65,"Name":"number of output rows","Update":"1","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":64,"Name":"duration total (min, med, max)","Update":"5","Value":"4","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":125,"Name":"internal.metrics.shuffle.read.recordsRead","Update":1,"Value":1,"Internal":true,"Count Failed Values":true},{"ID":124,"Name":"internal.metrics.shuffle.read.fetchWaitTime","Update":0,"Value":0,"Internal":true,"Count Failed Values":true},{"ID":123,"Name":"internal.metrics.shuffle.read.localBytesRead","Update":59,"Value":59,"Internal":true,"Count Failed Values":true},{"ID":122,"Name":"internal.metrics.shuffle.read.remoteBytesReadToDisk","Update":0,"Value":0,"Internal":true,"Count Failed Values":true},{"ID":121,"Name":"internal.metrics.shuffle.read.remoteBytesRead","Update":0,"Value":0,"Internal":true,"Count Failed Values":true},{"ID":120,"Name":"internal.metrics.shuffle.read.localBlocksFetched","Update":1,"Value":1,"Internal":true,"Count Failed Values":true},{"ID":119,"Name":"internal.metrics.shuffle.read.remoteBlocksFetched","Update":0,"Value":0,"Internal":true,"Count Failed Values":true},{"ID":114,"Name":"internal.metrics.resultSerializationTime","Update":1,"Value":1,"Internal":true,"Count Failed Values":true},{"ID":112,"Name":"internal.metrics.resultSize","Update":1825,"Value":1825,"Internal":true,"Count Failed 
Values":true},{"ID":111,"Name":"internal.metrics.executorCpuTime","Update":31424000,"Value":31424000,"Internal":true,"Count Failed Values":true},{"ID":110,"Name":"internal.metrics.executorRunTime","Update":32,"Value":32,"Internal":true,"Count Failed Values":true},{"ID":109,"Name":"internal.metrics.executorDeserializeCpuTime","Update":1987000,"Value":1987000,"Internal":true,"Count Failed Values":true},{"ID":108,"Name":"internal.metrics.executorDeserializeTime","Update":2,"Value":2,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":2,"Executor Deserialize CPU Time":1987000,"Executor Run Time":32,"Executor CPU Time":31424000,"Result Size":1825,"JVM GC Time":0,"Result Serialization Time":1,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":1,"Fetch Wait Time":0,"Remote Bytes Read":0,"Remote Bytes Read To Disk":0,"Local Bytes Read":59,"Total Records Read":1},"Shuffle Write Metrics":{"Shuffle Bytes Written":0,"Shuffle Write Time":0,"Shuffle Records Written":0},"Input Metrics":{"Bytes Read":0,"Records Read":0},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}

{"Event":"SparkListenerStageCompleted","Stage Info":{"Stage ID":3,"Stage Attempt ID":0,"Stage Name":"count at TestEventLog.scala:22","Number of Tasks":1,"RDD Info":[{"RDD ID":19,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"30\",\"name\":\"mapPartitionsInternal\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[18],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":17,"Name":"ShuffledRowRDD","Scope":"{\"id\":\"23\",\"name\":\"Exchange\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[16],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":18,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"20\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[17],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[2],"Details":"org.apache.spark.sql.Dataset.count(Dataset.scala:2835)\nTestEventLog$.main(TestEventLog.scala:22)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729403,"Completion Time":1629712729465,"Accumulables":[{"ID":110,"Name":"internal.metrics.executorRunTime","Value":32,"Internal":true,"Count Failed Values":true},{"ID":119,"Name":"internal.metrics.shuffle.read.remoteBlocksFetched","Value":0,"Internal":true,"Count Failed Values":true},{"ID":122,"Name":"internal.metrics.shuffle.read.remoteBytesReadToDisk","Value":0,"Internal":true,"Count Failed Values":true},{"ID":68,"Name":"aggregate time total (min, med, max)","Value":"4","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":125,"Name":"internal.metrics.shuffle.read.recordsRead","Value":1,"Internal":true,"Count Failed 
Values":true},{"ID":65,"Name":"number of output rows","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":124,"Name":"internal.metrics.shuffle.read.fetchWaitTime","Value":0,"Internal":true,"Count Failed Values":true},{"ID":64,"Name":"duration total (min, med, max)","Value":"4","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":109,"Name":"internal.metrics.executorDeserializeCpuTime","Value":1987000,"Internal":true,"Count Failed Values":true},{"ID":112,"Name":"internal.metrics.resultSize","Value":1825,"Internal":true,"Count Failed Values":true},{"ID":121,"Name":"internal.metrics.shuffle.read.remoteBytesRead","Value":0,"Internal":true,"Count Failed Values":true},{"ID":120,"Name":"internal.metrics.shuffle.read.localBlocksFetched","Value":1,"Internal":true,"Count Failed Values":true},{"ID":114,"Name":"internal.metrics.resultSerializationTime","Value":1,"Internal":true,"Count Failed Values":true},{"ID":123,"Name":"internal.metrics.shuffle.read.localBytesRead","Value":59,"Internal":true,"Count Failed Values":true},{"ID":108,"Name":"internal.metrics.executorDeserializeTime","Value":2,"Internal":true,"Count Failed Values":true},{"ID":111,"Name":"internal.metrics.executorCpuTime","Value":31424000,"Internal":true,"Count Failed Values":true}]}}

{"Event":"SparkListenerJobEnd","Job ID":2,"Completion Time":1629712729465,"Job Result":{"Result":"JobSucceeded"}}
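
SparkListenerJobStart and SparkListenerJobEnd share a `Job ID`, so a job's duration can be recovered by pairing the two events. The following Python sketch shows one way to do that, assuming the log holds one JSON object per line. `job_durations_ms` is a hypothetical helper, and `sample_lines` contains trimmed copies of the Job 2 events from this log.

```python
import json
from typing import Dict, Iterable


def job_durations_ms(lines: Iterable[str]) -> Dict[int, int]:
    """Pair JobStart/JobEnd events by Job ID and return durations in ms."""
    started: Dict[int, int] = {}
    durations: Dict[int, int] = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank separator lines
        event = json.loads(line)
        kind = event.get("Event")
        if kind == "SparkListenerJobStart":
            started[event["Job ID"]] = event["Submission Time"]
        elif kind == "SparkListenerJobEnd":
            job_id = event["Job ID"]
            if job_id in started:
                durations[job_id] = event["Completion Time"] - started[job_id]
    return durations


# Trimmed copies of the Job 2 start and end events from the log above.
sample_lines = [
    '{"Event":"SparkListenerJobStart","Job ID":2,"Submission Time":1629712729223}',
    '',
    '{"Event":"SparkListenerJobEnd","Job ID":2,"Completion Time":1629712729465,'
    '"Job Result":{"Result":"JobSucceeded"}}',
]

durations = job_durations_ms(sample_lines)
print(durations)  # {2: 242}
```

A job that has started but has no matching end event (for example, because the application was killed) is simply left out of the result.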

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerSQLExecutionEnd","executionId":1,"time":1629712729468}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerSQLExecutionStart","executionId":2,"description":"show at TestEventLog.scala:23","details":"org.apache.spark.sql.Dataset.show(Dataset.scala:719)\nTestEventLog$.main(TestEventLog.scala:23)\nTestEventLog.main(TestEventLog.scala)","physicalPlanDescription":"== Parsed Logical Plan ==\nGlobalLimit 21\n+- LocalLimit 21\n +- Project [cast(id#10 as string) AS id#75, cast(name#11 as string) AS name#76, cast(age#12 as string) AS age#77, cast(key#13 as string) AS key#78]\n +- Relation[id#10,name#11,age#12,key#13] csv\n\n== Analyzed Logical Plan ==\nid: string, name: string, age: string, key: string\nGlobalLimit 21\n+- LocalLimit 21\n +- Project [cast(id#10 as string) AS id#75, cast(name#11 as string) AS name#76, cast(age#12 as string) AS age#77, cast(key#13 as string) AS key#78]\n +- Relation[id#10,name#11,age#12,key#13] csv\n\n== Optimized Logical Plan ==\nGlobalLimit 21\n+- LocalLimit 21\n +- Project [cast(id#10 as string) AS id#75, name#11, cast(age#12 as string) AS age#77, cast(key#13 as string) AS key#78]\n +- InMemoryRelation [id#10, name#11, age#12, key#13], StorageLevel(disk, memory, deserialized, 1 replicas)\n +- *(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n\n== Physical Plan ==\nCollectLimit 21\n+- *(1) Project [cast(id#10 as string) AS id#75, name#11, cast(age#12 as string) AS age#77, cast(key#13 as string) AS key#78]\n +- InMemoryTableScan [age#12, id#10, key#13, name#11]\n +- InMemoryRelation [id#10, name#11, age#12, key#13], StorageLevel(disk, memory, deserialized, 1 replicas)\n +- *(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, 
PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>","sparkPlanInfo":{"nodeName":"CollectLimit","simpleString":"CollectLimit 21","children":[{"nodeName":"WholeStageCodegen","simpleString":"WholeStageCodegen","children":[{"nodeName":"Project","simpleString":"Project [cast(id#10 as string) AS id#75, name#11, cast(age#12 as string) AS age#77, cast(key#13 as string) AS key#78]","children":[{"nodeName":"InputAdapter","simpleString":"InputAdapter","children":[{"nodeName":"InMemoryTableScan","simpleString":"InMemoryTableScan [age#12, id#10, key#13, name#11]","children":[],"metadata":{},"metrics":[{"name":"number of output rows","accumulatorId":134,"metricType":"sum"},{"name":"scan time total (min, med, max)","accumulatorId":135,"metricType":"timing"}]}],"metadata":{},"metrics":[]}],"metadata":{},"metrics":[]}],"metadata":{},"metrics":[{"name":"duration total (min, med, max)","accumulatorId":133,"metricType":"timing"}]}],"metadata":{},"metrics":[]},"time":1629712729493}

{"Event":"SparkListenerJobStart","Job ID":3,"Submission Time":1629712729533,"Stage Infos":[{"Stage ID":4,"Stage Attempt ID":0,"Stage Name":"show at TestEventLog.scala:23","Number of Tasks":1,"RDD Info":[{"RDD ID":25,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"47\",\"name\":\"map\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[24],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":10,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[9],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":11,"Name":"*(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[10],"Storage Level":{"Use Disk":true,"Use Memory":true,"Deserialized":true,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":9,"Name":"FileScanRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":22,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"45\",\"name\":\"InMemoryTableScan\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[21],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":21,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"45\",\"name\":\"InMemoryTableScan\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[11],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":23,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"42\",\"name\":\"WholeStageCodegen\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[22],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":24,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"46\",\"name\":\"mapPartitionsInternal\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[23],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.Dataset.show(Dataset.scala:719)\nTestEventLog$.main(TestEventLog.scala:23)\nTestEventLog.main(TestEventLog.scala)","Accumulables":[]}],"Stage IDs":[4],"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"2","spark.app.id":"local-1629712726102"}}
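
In this log, the `Properties` map of each SparkListenerJobStart event includes `spark.sql.execution.id`, which ties the job back to the SQL execution (`executionId`) that triggered it. The Python sketch below groups job IDs by that property. The helper name `jobs_by_sql_execution` is hypothetical, the sample lines are trimmed copies of the Job 2 and Job 3 events, and jobs without the property are assumed not to belong to any SQL execution.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, List


def jobs_by_sql_execution(lines: Iterable[str]) -> Dict[int, List[int]]:
    """Group Job IDs by the spark.sql.execution.id found in JobStart events."""
    grouped: Dict[int, List[int]] = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank separator lines
        event = json.loads(line)
        if event.get("Event") != "SparkListenerJobStart":
            continue
        # Properties may be missing or null; treat that as "no SQL execution".
        props = event.get("Properties") or {}
        execution_id = props.get("spark.sql.execution.id")
        if execution_id is not None:
            # The property is stored as a string, e.g. "1".
            grouped[int(execution_id)].append(event["Job ID"])
    return dict(grouped)


# Trimmed copies of the Job 2 and Job 3 start events from the log above.
sample_lines = [
    '{"Event":"SparkListenerJobStart","Job ID":2,'
    '"Properties":{"spark.sql.execution.id":"1"}}',
    '{"Event":"SparkListenerJobStart","Job ID":3,'
    '"Properties":{"spark.sql.execution.id":"2"}}',
]

grouped_jobs = jobs_by_sql_execution(sample_lines)
print(grouped_jobs)  # {1: [2], 2: [3]}
```

Combined with the SparkListenerSQLExecutionStart and SparkListenerSQLExecutionEnd events, this mapping makes it possible to see which jobs ran as part of each query.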

{"Event":"SparkListenerStageSubmitted","Stage Info":{"Stage ID":4,"Stage Attempt ID":0,"Stage Name":"show at TestEventLog.scala:23","Number of Tasks":1,"RDD Info":[{"RDD ID":25,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"47\",\"name\":\"map\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[24],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":10,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[9],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":11,"Name":"*(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[10],"Storage Level":{"Use Disk":true,"Use Memory":true,"Deserialized":true,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":9,"Name":"FileScanRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":22,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"45\",\"name\":\"InMemoryTableScan\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[21],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":21,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"45\",\"name\":\"InMemoryTableScan\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[11],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":23,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"42\",\"name\":\"WholeStageCodegen\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[22],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":24,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"46\",\"name\":\"mapPartitionsInternal\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[23],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.Dataset.show(Dataset.scala:719)\nTestEventLog$.main(TestEventLog.scala:23)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729534,"Accumulables":[]},"Properties":{"spark.driver.host":"ip-172-29-6-153.ap-northeast-2.compute.internal","spark.eventLog.enabled":"true","spark.driver.port":"55465","spark.app.name":"TestEventLog","spark.executor.id":"driver","spark.master":"local","spark.eventLog.dir":"file:///Users/darren.zhang/test/","spark.sql.execution.id":"2","spark.app.id":"local-1629712726102"}}

{"Event":"SparkListenerTaskStart","Stage ID":4,"Stage Attempt ID":0,"Task Info":{"Task ID":4,"Index":0,"Attempt":0,"Launch Time":1629712729540,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":0,"Failed":false,"Killed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":4,"Stage Attempt ID":0,"Task Type":"ResultTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":4,"Index":0,"Attempt":0,"Launch Time":1629712729540,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1629712729570,"Failed":false,"Killed":false,"Accumulables":[{"ID":133,"Name":"duration total (min, med, max)","Update":"2","Value":"1","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":134,"Name":"number of output rows","Update":"6","Value":"6","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":158,"Name":"internal.metrics.input.recordsRead","Update":1,"Value":1,"Internal":true,"Count Failed Values":true},{"ID":157,"Name":"internal.metrics.input.bytesRead","Update":624,"Value":624,"Internal":true,"Count Failed Values":true},{"ID":140,"Name":"internal.metrics.resultSize","Update":1655,"Value":1655,"Internal":true,"Count Failed Values":true},{"ID":139,"Name":"internal.metrics.executorCpuTime","Update":22736000,"Value":22736000,"Internal":true,"Count Failed Values":true},{"ID":138,"Name":"internal.metrics.executorRunTime","Update":24,"Value":24,"Internal":true,"Count Failed Values":true},{"ID":137,"Name":"internal.metrics.executorDeserializeCpuTime","Update":3428000,"Value":3428000,"Internal":true,"Count Failed Values":true},{"ID":136,"Name":"internal.metrics.executorDeserializeTime","Update":4,"Value":4,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":4,"Executor Deserialize CPU Time":3428000,"Executor Run Time":24,"Executor CPU Time":22736000,"Result Size":1655,"JVM GC Time":0,"Result Serialization Time":0,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":0,"Fetch Wait Time":0,"Remote Bytes Read":0,"Remote Bytes Read To Disk":0,"Local Bytes Read":0,"Total Records Read":0},"Shuffle Write 
Metrics":{"Shuffle Bytes Written":0,"Shuffle Write Time":0,"Shuffle Records Written":0},"Input Metrics":{"Bytes Read":624,"Records Read":1},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}

{"Event":"SparkListenerStageCompleted","Stage Info":{"Stage ID":4,"Stage Attempt ID":0,"Stage Name":"show at TestEventLog.scala:23","Number of Tasks":1,"RDD Info":[{"RDD ID":25,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"47\",\"name\":\"map\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[24],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":10,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[9],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":11,"Name":"*(1) FileScan csv [id#10,name#11,age#12,key#13] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/Users/darren.zhang/project/workspace/darren-spark/test_file], PartitionCount: 3, PartitionFilters: [], PushedFilters: [], ReadSchema: struct<id:int,name:string,age:int>\n","Scope":"{\"id\":\"27\",\"name\":\"InMemoryTableScan\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[10],"Storage Level":{"Use Disk":true,"Use Memory":true,"Deserialized":true,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":9,"Name":"FileScanRDD","Scope":"{\"id\":\"28\",\"name\":\"WholeStageCodegen\"}","Callsite":"count at TestEventLog.scala:22","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":22,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"45\",\"name\":\"InMemoryTableScan\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[21],"Storage Level":{"Use Disk":false,"Use 
Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":21,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"45\",\"name\":\"InMemoryTableScan\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[11],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":23,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"42\",\"name\":\"WholeStageCodegen\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[22],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":24,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"46\",\"name\":\"mapPartitionsInternal\"}","Callsite":"show at TestEventLog.scala:23","Parent IDs":[23],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":1,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.sql.Dataset.show(Dataset.scala:719)\nTestEventLog$.main(TestEventLog.scala:23)\nTestEventLog.main(TestEventLog.scala)","Submission Time":1629712729534,"Completion Time":1629712729571,"Accumulables":[{"ID":137,"Name":"internal.metrics.executorDeserializeCpuTime","Value":3428000,"Internal":true,"Count Failed Values":true},{"ID":158,"Name":"internal.metrics.input.recordsRead","Value":1,"Internal":true,"Count Failed Values":true},{"ID":140,"Name":"internal.metrics.resultSize","Value":1655,"Internal":true,"Count Failed Values":true},{"ID":134,"Name":"number of output rows","Value":"6","Internal":true,"Count Failed Values":true,"Metadata":"sql"},{"ID":133,"Name":"duration total (min, med, max)","Value":"1","Internal":true,"Count Failed 
Values":true,"Metadata":"sql"},{"ID":136,"Name":"internal.metrics.executorDeserializeTime","Value":4,"Internal":true,"Count Failed Values":true},{"ID":157,"Name":"internal.metrics.input.bytesRead","Value":624,"Internal":true,"Count Failed Values":true},{"ID":139,"Name":"internal.metrics.executorCpuTime","Value":22736000,"Internal":true,"Count Failed Values":true},{"ID":138,"Name":"internal.metrics.executorRunTime","Value":24,"Internal":true,"Count Failed Values":true}]}}

{"Event":"SparkListenerJobEnd","Job ID":3,"Completion Time":1629712729571,"Job Result":{"Result":"JobSucceeded"}}

{"Event":"org.apache.spark.sql.execution.ui.SparkListenerSQLExecutionEnd","executionId":2,"time":1629712729573}

{"Event":"SparkListenerApplicationEnd","Timestamp":1629712729577}

Note: the first event is SparkListenerLogStart, and the last event is SparkListenerApplicationEnd.
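The observation above can be turned into a quick sanity check. The sketch below uses plain Scala with no Spark dependency, reads an event log line by line, and reports its first and last event names. The object name `CheckEventLogBounds` and its helpers are hypothetical names made up for this illustration. It is also an assumption here, not something the sample log proves, that a log whose last event is not SparkListenerApplicationEnd belongs to an application that is still running or did not shut down cleanly.

```scala
import scala.io.Source

// Hypothetical helper for illustration; not part of Spark.
object CheckEventLogBounds {
  // Every record in the sample log starts with {"Event":"<name>", so capture <name>.
  private val EventName = """^\{"Event":"([^"]+)""".r

  /** Returns the event name of one log line, or None if the line is not an event record. */
  def eventName(line: String): Option[String] =
    EventName.findFirstMatchIn(line.trim).map(_.group(1))

  /** Returns (first event, last event) over all event records, or None if there are none. */
  def bounds(lines: Iterator[String]): Option[(String, String)] = {
    val names = lines.flatMap(line => eventName(line)).toList
    for (first <- names.headOption; last <- names.lastOption) yield (first, last)
  }

  def main(args: Array[String]): Unit = {
    // The path is supplied by the caller, e.g. a file under spark.eventLog.dir.
    val source = Source.fromFile(args(0), "UTF-8")
    try {
      bounds(source.getLines()) match {
        case Some((first, last)) =>
          println(s"first event: $first")
          println(s"last event:  $last")
          // Assumption: a cleanly finished application ends with ApplicationEnd.
          println(s"ends with ApplicationEnd: ${last == "SparkListenerApplicationEnd"}")
        case None =>
          println("no event records found")
      }
    } finally {
      source.close()
    }
  }
}
```

Run it with the log file path as the only argument. Because it only inspects the leading "Event" field of each line, it stays cheap even for large logs, but it does not validate the rest of the JSON.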

A Simple Analysis of the Spark Event Log

As we can see, the Spark Event Log is a JSON file with one event per line, so we can read it with Spark itself and do a simple analysis.

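import org.apache.spark.sql.SparkSession

object ReadEventLog {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local").appName("readEventLog").getOrCreate()

    // Each line of the event log is one JSON object, so spark.read.json can load it directly.
    val df = spark.read.json("file:///Users/darren.zhang/test/local-1629712726102")

    // Print the schema Spark inferred across all event types in the log.
    df.printSchema()

    // Count how many times each event type occurs.
    df.select("Event").groupBy("Event").count().show(20, false)

    spark.stop()
  }
}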

root

|-- App ID: string (nullable = true)

|-- App Name: string (nullable = true)

|-- Block Manager ID: struct (nullable = true)

| |-- Executor ID: string (nullable = true)

| |-- Host: string (nullable = true)

| |-- Port: long (nullable = true)

|-- Classpath Entries: struct (nullable = true)

| |-- /Users/darren.zhang/.gradle/caches/modules-2/files-2.1/com.fasterxml.jackson.core/jackson-core/2.7.9/9b530cec4fd2eb841ab8e79f19fc7cf0ec487b2/jackson-core-2.7.9.jar: string (nullable = true)

|-- Completion Time: long (nullable = true)

|-- Event: string (nullable = true)

|-- Executor ID: string (nullable = true)

|-- Executor Info: struct (nullable = true)

| |-- Host: string (nullable = true)

| |-- Total Cores: long (nullable = true)

|-- JVM Information: struct (nullable = true)

| |-- Java Home: string (nullable = true)

| |-- Java Version: string (nullable = true)

| |-- Scala Version: string (nullable = true)

|-- Job ID: long (nullable = true)

|-- Job Result: struct (nullable = true)

| |-- Result: string (nullable = true)

|-- Maximum Memory: long (nullable = true)

|-- Maximum Offheap Memory: long (nullable = true)

|-- Maximum Onheap Memory: long (nullable = true)

|-- Properties: struct (nullable = true)

| |-- spark.app.id: string (nullable = true)

| |-- spark.app.name: string (nullable = true)

|-- Spark Properties: struct (nullable = true)

| |-- spark.app.id: string (nullable = true)

| |-- spark.app.name: string (nullable = true)

|-- Spark Version: string (nullable = true)

|-- Stage Attempt ID: long (nullable = true)

|-- Stage ID: long (nullable = true)

|-- Stage IDs: array (nullable = true)

| |-- element: long (containsNull = true)

|-- Stage Info: struct (nullable = true)

| |-- Accumulables: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- Count Failed Values: boolean (nullable = true)

| | | |-- ID: long (nullable = true)

| | | |-- Internal: boolean (nullable = true)

| | | |-- Metadata: string (nullable = true)

| | | |-- Name: string (nullable = true)

| | | |-- Value: string (nullable = true)

| |-- Completion Time: long (nullable = true)

| |-- Details: string (nullable = true)

| |-- Number of Tasks: long (nullable = true)

| |-- Parent IDs: array (nullable = true)

| | |-- element: long (containsNull = true)

| |-- RDD Info: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- Callsite: string (nullable = true)

| | | |-- Disk Size: long (nullable = true)

| | | |-- Memory Size: long (nullable = true)

| | | |-- Name: string (nullable = true)

| | | |-- Number of Cached Partitions: long (nullable = true)

| | | |-- Number of Partitions: long (nullable = true)

| | | |-- Parent IDs: array (nullable = true)

| | | | |-- element: long (containsNull = true)

| | | |-- RDD ID: long (nullable = true)

| | | |-- Scope: string (nullable = true)

| | | |-- Storage Level: struct (nullable = true)

| | | | |-- Deserialized: boolean (nullable = true)

| | | | |-- Replication: long (nullable = true)

| | | | |-- Use Disk: boolean (nullable = true)

| | | | |-- Use Memory: boolean (nullable = true)

| |-- Stage Attempt ID: long (nullable = true)

| |-- Stage ID: long (nullable = true)

| |-- Stage Name: string (nullable = true)

| |-- Submission Time: long (nullable = true)

|-- Stage Infos: array (nullable = true)

| |-- element: struct (containsNull = true)

| | |-- Accumulables: array (nullable = true)

| | | |-- element: string (containsNull = true)

| | |-- Details: string (nullable = true)

| | |-- Number of Tasks: long (nullable = true)

| | |-- Parent IDs: array (nullable = true)

| | | |-- element: long (containsNull = true)

| | |-- RDD Info: array (nullable = true)

| | | |-- element: struct (containsNull = true)

| | | | |-- Callsite: string (nullable = true)

| | | | |-- Disk Size: long (nullable = true)

| | | | |-- Memory Size: long (nullable = true)

| | | | |-- Name: string (nullable = true)

| | | | |-- Number of Cached Partitions: long (nullable = true)

| | | | |-- Number of Partitions: long (nullable = true)

| | | | |-- Parent IDs: array (nullable = true)

| | | | | |-- element: long (containsNull = true)

| | | | |-- RDD ID: long (nullable = true)

| | | | |-- Scope: string (nullable = true)

| | | | |-- Storage Level: struct (nullable = true)

| | | | | |-- Deserialized: boolean (nullable = true)

| | | | | |-- Replication: long (nullable = true)

| | | | | |-- Use Disk: boolean (nullable = true)

| | | | | |-- Use Memory: boolean (nullable = true)

| | |-- Stage Attempt ID: long (nullable = true)

| | |-- Stage ID: long (nullable = true)

| | |-- Stage Name: string (nullable = true)

|-- Submission Time: long (nullable = true)

|-- System Properties: struct (nullable = true)

| |-- awt.toolkit: string (nullable = true)

|-- Task End Reason: struct (nullable = true)

| |-- Reason: string (nullable = true)

|-- Task Info: struct (nullable = true)

| |-- Accumulables: array (nullable = true)

| | |-- element: struct (containsNull = true)

| | | |-- Count Failed Values: boolean (nullable = true)

| | | |-- ID: long (nullable = true)

| | | |-- Internal: boolean (nullable = true)

| | | |-- Metadata: string (nullable = true)

| | | |-- Name: string (nullable = true)

| | | |-- Update: string (nullable = true)

| | | |-- Value: string (nullable = true)

| |-- Attempt: long (nullable = true)

| |-- Executor ID: string (nullable = true)

| |-- Failed: boolean (nullable = true)

| |-- Finish Time: long (nullable = true)

| |-- Getting Result Time: long (nullable = true)

| |-- Host: string (nullable = true)

| |-- Index: long (nullable = true)

| |-- Killed: boolean (nullable = true)

| |-- Launch Time: long (nullable = true)

| |-- Locality: string (nullable = true)

| |-- Speculative: boolean (nullable = true)

| |-- Task ID: long (nullable = true)

|-- Task Metrics: struct (nullable = true)

| |-- Disk Bytes Spilled: long (nullable = true)

| |-- Executor CPU Time: long (nullable = true)

| |-- Executor Deserialize CPU Time: long (nullable = true)

| |-- Executor Deserialize Time: long (nullable = true)

| |-- Executor Run Time: long (nullable = true)

| |-- Input Metrics: struct (nullable = true)

| | |-- Bytes Read: long (nullable = true)

| | |-- Records Read: long (nullable = true)

| |-- JVM GC Time: long (nullable = true)

| |-- Memory Bytes Spilled: long (nullable = true)

| |-- Output Metrics: struct (nullable = true)

| | |-- Bytes Written: long (nullable = true)

| | |-- Records Written: long (nullable = true)

| |-- Result Serialization Time: long (nullable = true)

| |-- Result Size: long (nullable = true)

| |-- Shuffle Read Metrics: struct (nullable = true)

| | |-- Fetch Wait Time: long (nullable = true)

| | |-- Local Blocks Fetched: long (nullable = true)

| | |-- Local Bytes Read: long (nullable = true)

| | |-- Remote Blocks Fetched: long (nullable = true)

| | |-- Remote Bytes Read: long (nullable = true)

| | |-- Remote Bytes Read To Disk: long (nullable = true)

| | |-- Total Records Read: long (nullable = true)

| |-- Shuffle Write Metrics: struct (nullable = true)

| | |-- Shuffle Bytes Written: long (nullable = true)

| | |-- Shuffle Records Written: long (nullable = true)

| | |-- Shuffle Write Time: long (nullable = true)