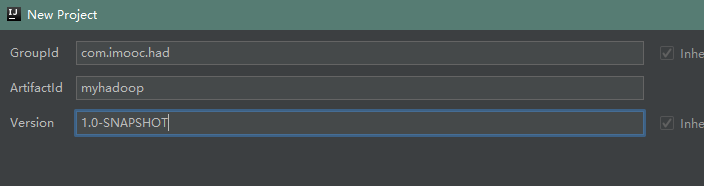

1. Create a new project

Select Maven.

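Fill in the Maven coordinates: groupId, artifactId and version. groupId is the company's domain name reversed, artifactId is the project or module name, and version is the version number of that project or module. Click Next: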

After Next, click Finish.
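To make the three coordinates concrete, here is a small illustrative helper. The class name `MavenCoordinates` and the domain `counter.hsd.com` are made up for this sketch, and it uses only the JDK (it does not call Maven). It reverses a domain into a groupId and builds the relative path where a locally installed jar would sit in a Maven repository such as `~/.m2/repository`: groupId dots become directories, followed by artifactId, version, and `artifactId-version.jar`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for illustration; plain JDK, no Maven involved.
final class MavenCoordinates {
    private MavenCoordinates() {}

    // Reverse a domain name label by label: "counter.hsd.com" -> "com.hsd.counter"
    static String groupIdFromDomain(String domain) {
        List<String> labels = new ArrayList<>(Arrays.asList(domain.split("\\.")));
        Collections.reverse(labels);
        return String.join(".", labels);
    }

    // Relative repository path of the main jar:
    // groupId (dots -> directories) / artifactId / version / artifactId-version.jar
    static String repositoryPath(String groupId, String artifactId, String version) {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version
                + "/" + artifactId + "-" + version + ".jar";
    }

    public static void main(String[] args) {
        String groupId = groupIdFromDomain("counter.hsd.com");
        System.out.println(groupId); // com.hsd.counter
        // com/hsd/counter/hdfs-api-exise/1.0-SNAPSHOT/hdfs-api-exise-1.0-SNAPSHOT.jar
        System.out.println(repositoryPath(groupId, "hdfs-api-exise", "1.0-SNAPSHOT"));
    }
}
```

This describes the layout after a local `install`; SNAPSHOT artifacts deployed to a remote repository can carry timestamped file names instead.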

2. Configure the POM

Open pom.xml and modify it as follows:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.hsd.counter</groupId>
    <artifactId>hdfs-api-exise</artifactId>
    <version>1.0-SNAPSHOT</version>

    <!-- Add the following -->
    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <hadoop.version>2.5.0</hadoop.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
    </dependencies>
</project>
```

Note: change `hadoop.version` to the Hadoop version you have installed.
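The `${hadoop.version}` references are why only one line has to change: Maven substitutes the property value into every dependency that refers to it. The sketch below mimics that substitution with a regular expression so the effect is visible. It is a simplified illustration (the class name `PropertyInterpolation` is hypothetical), not Maven's actual interpolation code, and it ignores nested or recursive properties.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified illustration of ${name} substitution; NOT Maven's implementation.
final class PropertyInterpolation {
    // Matches ${...} and captures the property name
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    private PropertyInterpolation() {}

    static String interpolate(String text, Map<String, String> properties) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = properties.get(m.group(1));
            // Unknown placeholders are left untouched
            String replacement = (value != null) ? value : m.group(0);
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new HashMap<>();
        props.put("hadoop.version", "2.5.0");
        // Every dependency's <version> resolves to the same value
        System.out.println(interpolate("<version>${hadoop.version}</version>", props)); // <version>2.5.0</version>
    }
}
```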

3. Testing

Create a Java class:

3.1 Create a directory on HDFS

```java
package com.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * Creates a directory on HDFS (class HdfsClient.java)
 */
public class HdfsClient {

    public static void main(String[] args) throws IOException, URISyntaxException, InterruptedException {
        Configuration conf = new Configuration();
        // Address of the NameNode
        URI uri = new URI("hdfs://127.0.0.1:9000");
        // Get the HDFS client object, acting as HDFS user "root"
        FileSystem fileSystem = FileSystem.get(uri, conf, "root");
        // Create the path /man on HDFS
        Path path = new Path("/man");
        fileSystem.mkdirs(path);
        // Release resources
        fileSystem.close();
    }
}
```

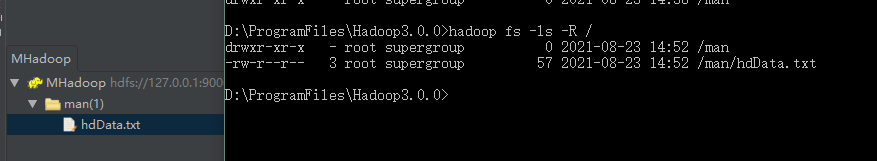

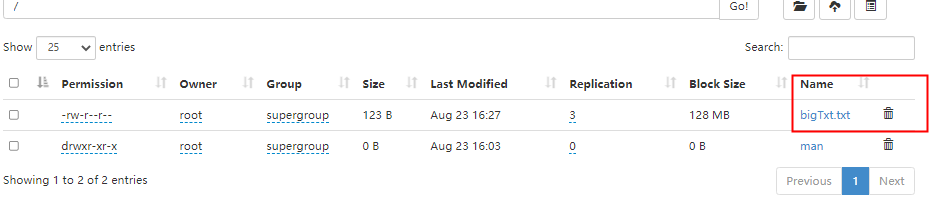

The Hadoop plugin shows that the directory was created successfully.
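A mistyped NameNode address, such as `hdfs://1270.0.0.1:9000` instead of `hdfs://127.0.0.1:9000`, is easy to miss: `new URI(...)` does not throw for it, because `java.net.URI` falls back to treating the authority as registry-based, and `getHost()` then returns `null`. The hypothetical helper below (`NameNodeUriCheck`, plain JDK) sketches a pre-flight check one could run before `FileSystem.get(uri, conf, "root")`. It only validates the syntax and never contacts the cluster.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical pre-flight check (plain JDK); validates syntax only.
final class NameNodeUriCheck {
    private NameNodeUriCheck() {}

    static URI parse(String text) {
        final URI uri;
        try {
            uri = new URI(text);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Not a valid URI: " + text, e);
        }
        if (!"hdfs".equalsIgnoreCase(uri.getScheme())) {
            throw new IllegalArgumentException("Expected an hdfs:// URI: " + text);
        }
        // A malformed host such as "1270.0.0.1" still parses, but only as a
        // registry-based authority, in which case getHost() returns null.
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("Host could not be parsed: " + text);
        }
        return uri;
    }

    public static void main(String[] args) {
        URI ok = parse("hdfs://127.0.0.1:9000");
        System.out.println(ok.getHost() + ":" + ok.getPort()); // 127.0.0.1:9000
        try {
            parse("hdfs://1270.0.0.1:9000");
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage()); // Host could not be parsed: hdfs://1270.0.0.1:9000
        }
    }
}
```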

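3.2 Upload a local file to HDFS

The following methods also go into HdfsClient. They connect to hdfs://hadoop01:9000, so hadoop01 must resolve to the NameNode host (for example through the hosts file); otherwise use the address from 3.1.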

```java
/**
 * Upload a file to HDFS
 */
public static void copyFromLocal() throws URISyntaxException, IOException, InterruptedException {
    Configuration conf = new Configuration();
    // Get the HDFS client object
    URI uri = new URI("hdfs://hadoop01:9000");
    FileSystem fileSystem = FileSystem.get(uri, conf, "root");
    Path localPath = new Path("C:/Users/Administrator/Desktop/songjiang.txt"); // local source
    Path hdfsPath = new Path("/man/songjiang.txt"); // HDFS destination
    fileSystem.copyFromLocalFile(localPath, hdfsPath);
    fileSystem.close();
}
```

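Copyright notice: This is an original article by CSDN blogger "Swordsman-Wang", published under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.

Original link: https://blog.csdn.net/weixin_45894479/article/details/114950199

Result: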

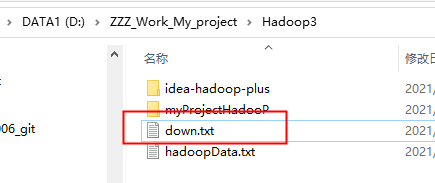

3.3 Copy a file from HDFS to the local file system

```java
/**
 * Copy a file from HDFS to the local file system
 */
public static void copyToLocal() throws IOException, InterruptedException, URISyntaxException {
    Configuration conf = new Configuration();
    // Get the HDFS client object
    URI uri = new URI("hdfs://hadoop01:9000");
    FileSystem fileSystem = FileSystem.get(uri, conf, "root");
    Path localPath = new Path("D:/ZZZ_Work_My_project/Hadoop3/down.txt");
    Path hdfsPath = new Path("/man/hdData.txt");
    // delSrc = false: keep the source file on HDFS
    // useRawLocalFileSystem = true: write via the raw local file system (no .crc checksum file)
    fileSystem.copyToLocalFile(false, hdfsPath, localPath, true);
    fileSystem.close();
}
```

Result:

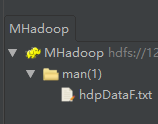
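The download in 3.3 calls the four-argument `copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem)` with `true` as the last argument, so the copy goes through the raw local file system. With Hadoop's checksummed local file system instead (which the two-argument `copyToLocalFile(src, dst)` uses), a hidden checksum file is written next to the copy, named `.` + file name + `.crc`; for `down.txt` that is `.down.txt.crc`. The plain-JDK helper below (hypothetical class `CrcSidecar`) computes that name and deletes such a leftover file.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper (plain JDK). The naming rule mirrors Hadoop's checksummed
// local file system: the checksum for "down.txt" lives in ".down.txt.crc".
final class CrcSidecar {
    private CrcSidecar() {}

    // Path of the checksum file that would sit next to the given local file
    static Path sidecarFor(Path file) {
        return file.resolveSibling("." + file.getFileName() + ".crc");
    }

    // Delete the leftover checksum file, if any; returns true when one was removed
    static boolean deleteSidecar(Path file) {
        try {
            return Files.deleteIfExists(sidecarFor(file));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, `CrcSidecar.deleteSidecar(Paths.get("D:/ZZZ_Work_My_project/Hadoop3/down.txt"))` would remove `.down.txt.crc` from that folder if an earlier download left one behind, and leave `down.txt` itself alone.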

3.4 Rename a file

```java
/**
 * Rename a file
 */
public static void reName() throws URISyntaxException, IOException, InterruptedException {
    Configuration conf = new Configuration();
    // Get the HDFS client object
    URI uri = new URI("hdfs://hadoop01:9000");
    FileSystem fileSystem = FileSystem.get(uri, conf, "root");
    Path hdfsOldPath = new Path("/man/hdData.txt");
    Path hdfsNewPath = new Path("/man/hdDataF.txt");
    // rename() usually signals failure (e.g. a missing source) by returning false, so check it
    boolean renamed = fileSystem.rename(hdfsOldPath, hdfsNewPath);
    System.out.println("Renamed: " + renamed);
    fileSystem.close();
}
```


Result: the file was renamed successfully.

3.5 Other operations

```java
// Add to the imports of HdfsClient:
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.RemoteIterator;

/**
 * View file details
 */
public static void listFile() throws URISyntaxException, IOException, InterruptedException {
    Configuration conf = new Configuration();
    // Get the HDFS client object
    URI uri = new URI("hdfs://hadoop01:9000");
    FileSystem fileSystem = FileSystem.get(uri, conf, "root");
    // Recursively list every file (directories are not returned) under /
    RemoteIterator<LocatedFileStatus> listFiles = fileSystem.listFiles(new Path("/"), true);
    while (listFiles.hasNext()) {
        LocatedFileStatus fileStatus = listFiles.next();
        System.out.println("=============" + fileStatus.getPath().getName() + "=============");
        System.out.println("File name: " + fileStatus.getPath().getName()
                + "\nFile path: " + fileStatus.getPath()
                + "\nPermission: " + fileStatus.getPermission()
                + "\nFile size: " + fileStatus.getLen()
                + "\nBlock size: " + fileStatus.getBlockSize()
                + "\nGroup: " + fileStatus.getGroup()
                + "\nOwner: " + fileStatus.getOwner());
        // One BlockLocation per block; getHosts() lists the DataNodes holding its replicas
        BlockLocation[] blockLocations = fileStatus.getBlockLocations();
        for (BlockLocation blockLocation : blockLocations) {
            String[] hosts = blockLocation.getHosts();
            System.out.print("Hosts: ");
            for (String host : hosts) {
                System.out.print(host + "\t");
            }
            System.out.println();
        }
    }
    fileSystem.close();
}
```
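`getBlockLocations()` returns one entry per block, so the number of host lines the listing prints per file follows from simple arithmetic: the block count is the file length divided by the block size, rounded up, and only the last block can be shorter than the block size. The sketch assumes the Hadoop 2.x default `dfs.blocksize` of 128 MB (134217728 bytes); on a real cluster, use the value `getBlockSize()` reports. The class name `BlockMath` is hypothetical.

```java
// Worked arithmetic (plain JDK, hypothetical class name). 128 MB is the Hadoop 2.x
// default dfs.blocksize; on a cluster, use the value getBlockSize() reports.
final class BlockMath {
    static final long MB = 1024L * 1024L;
    static final long DEFAULT_BLOCK_SIZE = 128 * MB; // 134217728 bytes

    private BlockMath() {}

    // Number of blocks = ceil(fileLength / blockSize)
    static long blockCount(long fileLength, long blockSize) {
        if (fileLength < 0 || blockSize <= 0) {
            throw new IllegalArgumentException("fileLength must be >= 0 and blockSize > 0");
        }
        return (fileLength + blockSize - 1) / blockSize;
    }

    // Only the last block may be shorter than blockSize
    static long lastBlockLength(long fileLength, long blockSize) {
        long blocks = blockCount(fileLength, blockSize);
        return blocks == 0 ? 0 : fileLength - (blocks - 1) * blockSize;
    }

    public static void main(String[] args) {
        long len = 300 * MB;
        System.out.println(blockCount(len, DEFAULT_BLOCK_SIZE));           // 3
        System.out.println(lastBlockLength(len, DEFAULT_BLOCK_SIZE) / MB); // 44
    }
}
```

So a 300 MB file occupies two full 128 MB blocks plus a 44 MB block, and the loop above would print three host lines for it.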


```java
// Requires: import org.apache.hadoop.fs.FileStatus;

/**
 * Determine whether each entry is a file or a directory
 */
public static void listStatus() throws URISyntaxException, IOException, InterruptedException {
    Configuration conf = new Configuration();
    // Get the HDFS client object
    URI uri = new URI("hdfs://hadoop01:9000");
    FileSystem fileSystem = FileSystem.get(uri, conf, "root");
    // listStatus is not recursive: it returns the direct children of /, files and directories alike
    FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/"));
    for (FileStatus fileStatus : fileStatuses) {
        if (fileStatus.isFile()) {
            System.out.println("File: " + fileStatus.getPath().getName());
        } else {
            System.out.println("Directory: " + fileStatus.getPath().getName());
        }
    }
    fileSystem.close();
}
```
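The two listing calls used in 3.5 differ in scope: `listFiles(path, true)` walks the tree recursively but yields only files, while `listStatus(path)` returns just the direct children, files and directories alike, which is why the `isFile()` check is needed. The same distinction can be reproduced on the local disk with `java.nio.file` (`Files.list` versus `Files.walk`). The class `ListingAnalogy` below is a hypothetical, JDK-only analogy, not the Hadoop API.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local analogy only (java.nio), not the Hadoop API; class name is hypothetical.
final class ListingAnalogy {
    private ListingAnalogy() {}

    // Like listStatus(dir): direct children only, files and directories alike
    static List<String> oneLevel(Path dir) {
        try (Stream<Path> entries = Files.list(dir)) {
            return entries
                    .map(p -> (Files.isDirectory(p) ? "Directory: " : "File: ") + p.getFileName())
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Like listFiles(dir, true): regular files at any depth, directories omitted
    static List<String> recursiveFiles(Path dir) {
        try (Stream<Path> entries = Files.walk(dir)) {
            return entries
                    .filter(Files::isRegularFile)
                    .map(p -> dir.relativize(p).toString().replace('\\', '/'))
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With a folder holding `bigTxt.txt` and `man/songjiang.txt`, `oneLevel` reports `man` as a directory plus `bigTxt.txt`, while `recursiveFiles` reports both text files and no directories.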


3.6 Traversal


3.7 Merge small files


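```java
// Requires imports: org.apache.hadoop.fs.FSDataInputStream, FSDataOutputStream, FileStatus,
// LocalFileSystem, and org.apache.commons.io.IOUtils (Apache Commons IO provides copy/closeQuietly)

/**
 * Merge small files
 * @throws URISyntaxException
 * @throws IOException
 * @throws InterruptedException
 */
public void mergeSmallFiles() throws URISyntaxException, IOException, InterruptedException {
    // Get the distributed file system (HDFS); the third argument is the HDFS user
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://127.0.0.1:9000"), new Configuration(), "root");
    FSDataOutputStream fsDataOutputStream = fileSystem.create(new Path("/bigTxt.txt"));
    // Read all local small files and write them into the large file on HDFS
    // Get the local file system
    LocalFileSystem localFileSystem = FileSystem.getLocal(new Configuration());
    // List the local small files
    FileStatus[] fileStatuses = localFileSystem.listStatus(new Path("F:\\testDatas"));
    for (FileStatus fileStatus : fileStatuses) {
        // Skip subdirectories; only regular files can be opened and copied
        if (!fileStatus.isFile()) {
            continue;
        }
        // Path of each local small file
        Path path = fileStatus.getPath();
        // Read the local small file and append it to the HDFS output stream
        FSDataInputStream fsDataInputStream = localFileSystem.open(path);
        IOUtils.copy(fsDataInputStream, fsDataOutputStream);
        IOUtils.closeQuietly(fsDataInputStream);
    }
    IOUtils.closeQuietly(fsDataOutputStream);
    localFileSystem.close();
    fileSystem.close();
}
```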
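The merge is just "list, open, copy into one stream". The JDK-only sketch below (hypothetical class `SmallFileMerger`) applies the same pattern between two local locations, which makes the logic easy to test without a cluster; the HDFS version writes into the stream from `fileSystem.create(...)` instead of a local file. Because local directory listings are not guaranteed to come back in any particular order, the sketch sorts the inputs by name so the merged order is reproducible, and it skips subdirectories.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local-only sketch of the same merge pattern (plain JDK, hypothetical class name).
final class SmallFileMerger {
    private SmallFileMerger() {}

    // Concatenate every regular file directly inside sourceDir into target, sorted by path.
    // target should live outside sourceDir. Returns the number of files merged.
    static int merge(Path sourceDir, Path target) {
        List<Path> inputs;
        try (Stream<Path> entries = Files.list(sourceDir)) {
            inputs = entries.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Creates or truncates target, then appends each input in turn
        try (OutputStream out = Files.newOutputStream(target)) {
            for (Path input : inputs) {
                Files.copy(input, out);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return inputs.size();
    }
}
```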

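Copyright notice: This is an original article by CSDN blogger "深圳四月红", published under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.

Original link: https://blog.csdn.net/weixin_43230682/article/details/107814553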