目录

? ? ? ? ?连接拒绝?

前言

? ? ? ? 还是之前kettle中那个hive环境,这次不用kettle将数据加载到hive中去,而是用写文件的方式。

? ? ? ? 环境介绍:

? ? ? ? 三个hadoop节点

192.168.10.211 ?node01? (master)

192.168.10.212 ?node02? ? (slave)

192.168.10.213 ?node03? ? ?(slave)

hive安装在 192.168.10.212 上。

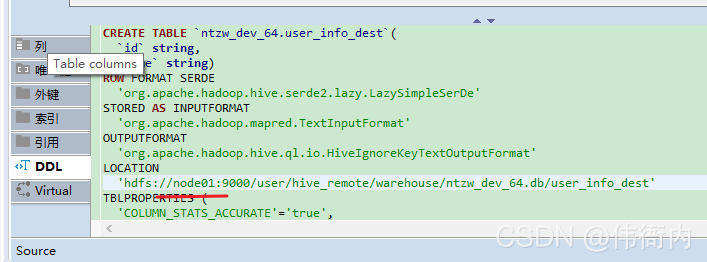

? ? ? ? hive上的user_info_dest表的DDL语句,

CREATE TABLE `ntzw_dev_64.user_info_dest`(

`id` string,

`name` string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://node01:9000/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest'

TBLPROPERTIES (

'COLUMN_STATS_ACCURATE'='true',

'numFiles'='7',

'numRows'='6',

'rawDataSize'='231',

'totalSize'='237',

'transient_lastDdlTime'='1629798016')? ? ? ? hadoop的命令:?http://hadoop.apache.org/docs/r1.0.4/cn/hdfs_shell.html

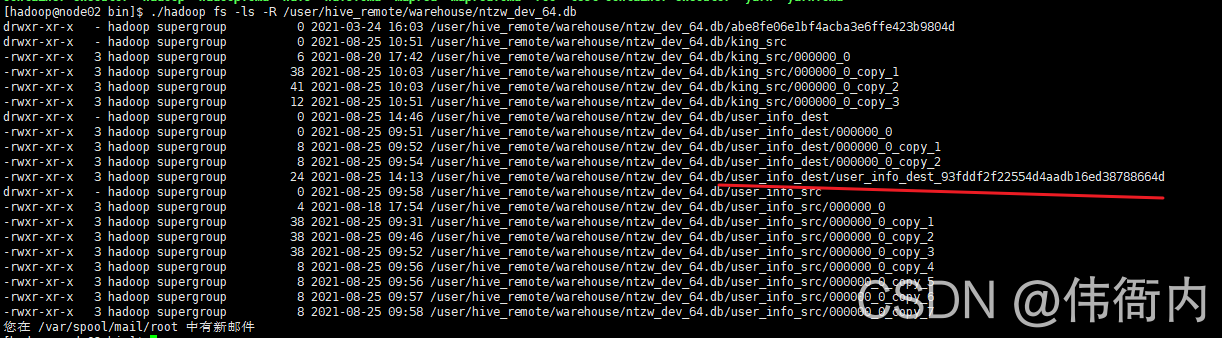

环境查看

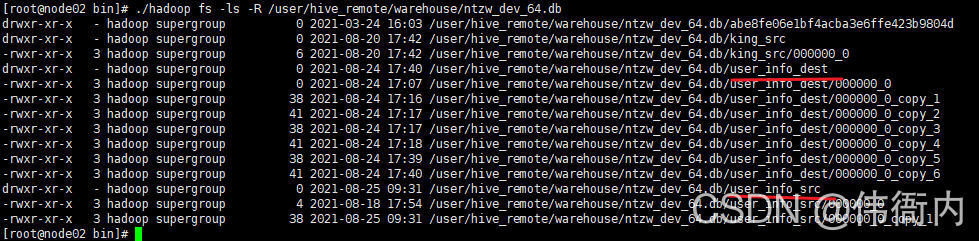

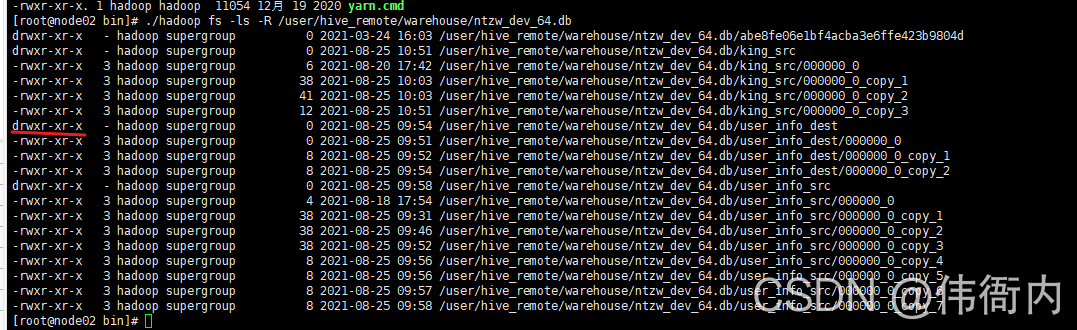

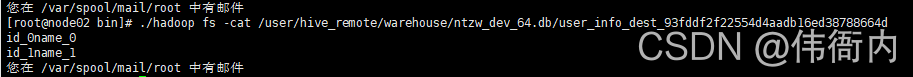

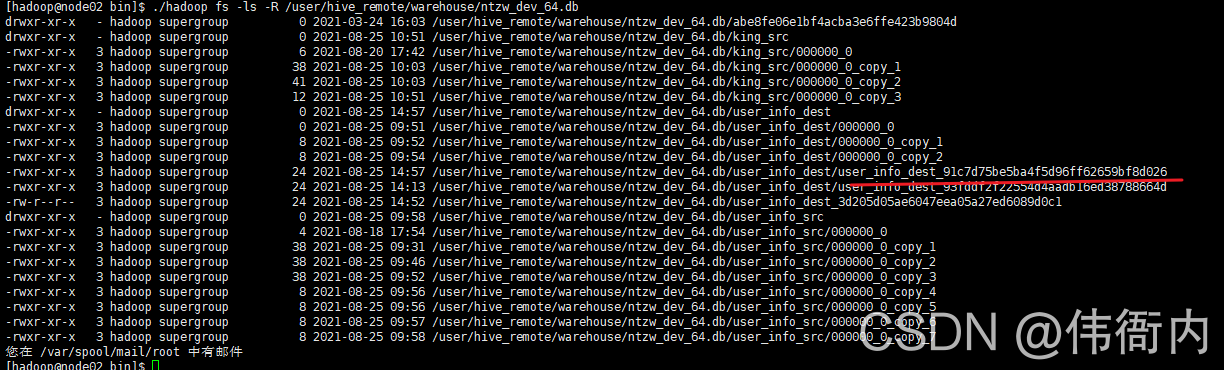

? ? ? ? 首先去hadoop的安装目录,查看文件系统中的文件,

????????./hadoop fs -ls -R /user/hive_remote/warehouse/ntzw_dev_64.db

????????/user/hive_remote/warehouse/ntzw_dev_64.db??这个是上面DDL语句出location的路径。

? ? ? ? 如下图所示,这是当初在搞kettle的时候建立的两个表,这是以目录形式存在,然后表数据以文件形式存在,

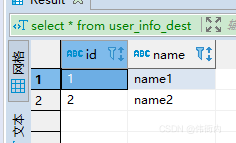

?使用如下语句将表清空,然后重新造了两条数据,

insert overwrite table user_info_dest select * from user_info_dest where 1=0;

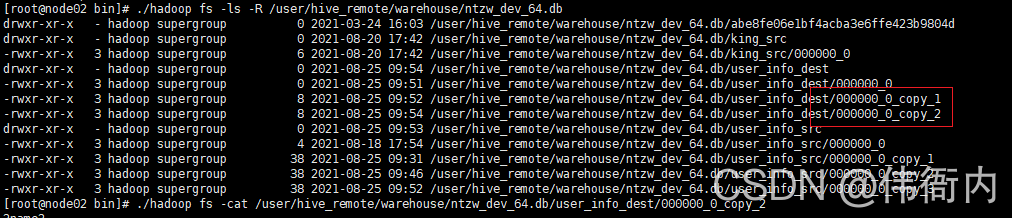

? ? ? ? 继续看下文件系统中的样子,有3个user_info_dest目录下的文件,看下这三个文件内容

?????????可以看到copy_1和copy_2中分散放了这两条数据

?????????那么如果对这些文件操作,是否就可以在Hive中看到了呢?这就要用到hive的一个SQL,load data

编写代码

pom中引入包

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>1.2.1</version>

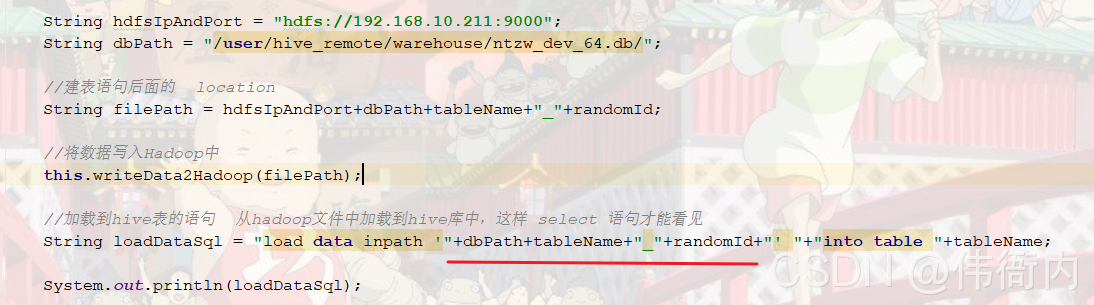

</dependency>? ? ? ? 写一个junit测试类,这个是主要的内容,先往hadoop中写入,然后利用load data这个方法来加载到hive库中。完整的代码会在最后贴出来。

/**

* 通过写hive的文件系统,将数据录入进去

*/

@Test

public void writeHiveFile() throws SQLException, IOException {

String randomId = UUID.randomUUID().toString().replace("-","");

String tableName= "user_info_dest";

String hdfsIpAndPort = "hdfs://192.168.10.212:9000";

String dbPath = "/user/hive_remote/warehouse/ntzw_dev_64.db/";

//建表语句后面的 location

String filePath = hdfsIpAndPort+dbPath+tableName+"_"+randomId;

//将数据写入Hadoop中

this.writeData2Hadoop(filePath);

//加载到hive表的语句 从hadoop文件中加载到hive库中,这样 select 语句才能看见

String loadDataSql = "load data inpath '"+filePath+"' "+"into table "+tableName;

System.out.println(loadDataSql);

//获取连接,并执行加载语句

Connection connection = this.getHiveConnection();

Statement stmt = connection.createStatement();

stmt.execute(loadDataSql);

stmt.close();

connection.close();

//加载完成后需要删除临时文件

this.deleteHiveTmpFile(filePath);

}/**

* 往hadoop文件系统里面写文件

* @param filePath

* @throws IOException

*/

private void writeData2Hadoop(String filePath) throws IOException {

Configuration conf = new Configuration();

conf.setBoolean("dfs.support.append", true);

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

//获取文件系统对象

FileSystem fs = FileSystem.get(URI.create(filePath),conf);

OutputStream output = null;

//文件检查

Path path = new Path(filePath);

if (!(fs.exists(path)&&fs.isFile(path))) {

log.info("不存在文件[{}],开始创建文件.",filePath);

fs.createNewFile(path);

output = fs.create(path);

} else {

log.info("文件[{}]已存在,追加内容到文件",filePath);

output = fs.append(path);

}

//往文件里面写数据

List<UserInfoDest> dataList = this.initData();

for (UserInfoDest data : dataList) {

//目标表只有两列 id,name

StringBuilder sb = new StringBuilder();

sb.append(data.id);//表的id

//hive的表的默认分割符,\t,如果指定了其他列分割符,那么此处也要改变

sb.append("\001");

sb.append(data.name);//表的name

sb.append("\n");//一行内容结束后换行

output.write(sb.toString().getBytes());

}

output.flush();

output.close();

fs.close();

}错误解决

? ? ? ? 连接拒绝?

? ? ? ?连接拒绝,咋还能拒绝呢,幸好报错后面有链接。

??报错后面的链接,https://cwiki.apache.org/confluence/display/HADOOP2/ConnectionRefused

??报错后面的链接,https://cwiki.apache.org/confluence/display/HADOOP2/ConnectionRefused

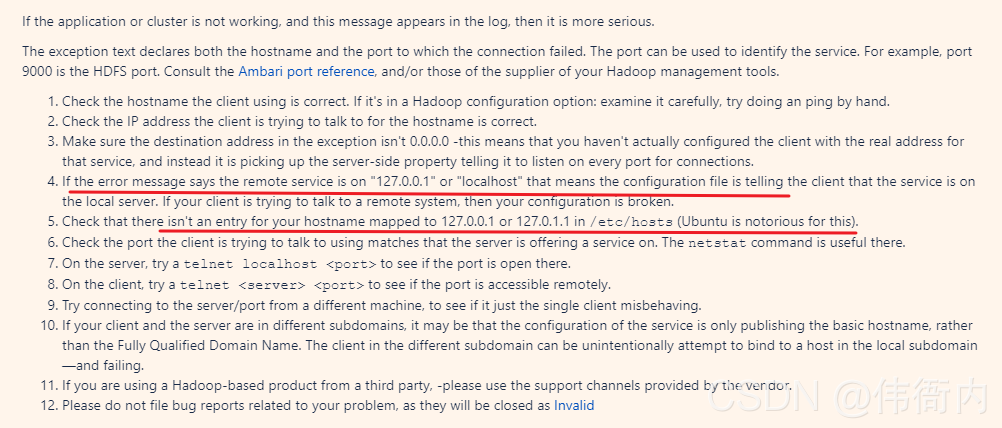

? ? ? ? ?打开以后,看到如下图,上面说可能是/etc/hosts文件中有127.0.0.1或者localhost这种映射。

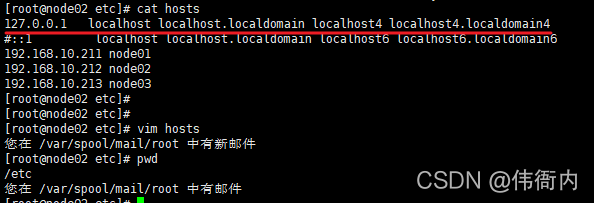

? ? ? ? ?查看192.168.10.212,安装了hadoop一个节点的敌方,也是安装hive的机器,好家伙,还真是,立刻注释掉。

? ? ? ? ?然后重启了hadoop(其实压根不需要重启,重启毛线啊)

? ? ? ? 重新运行代码,发现还是连接拒绝,然后检查一下代码,突然灵光一闪,我看到了hdfs写的是192.168.10.212,这个是hive的安装路径。

? ? ? ? 然后我看了一下user_info_dest的DDL语句中location,上面是node01,node01不是192.168.10.211的IP么?

? ? ? ? ?将代码中filePath的改为IP改为192.168.10.211

//建表语句后面的 location

String filePath = "hdfs://192.168.10.211:9000/user/hive_remote/warehouse/ntzw_dev_64.db/"+tableName+"_"+randomId;? ? ? ? Permission denied

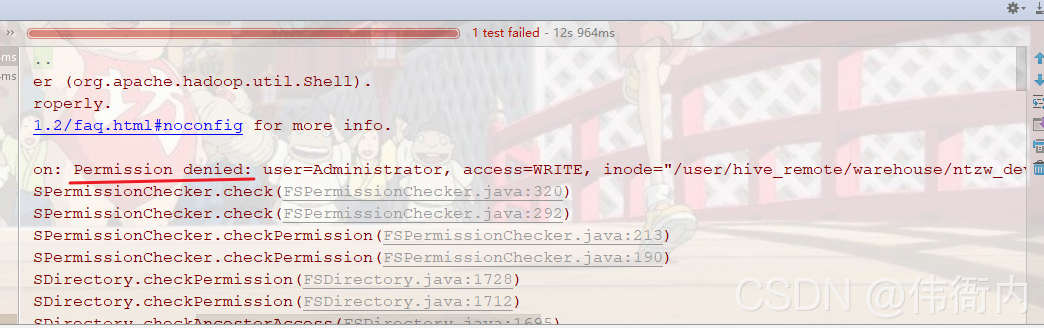

? ? ? ? 继续运行,新的报错,权限问题。

? ? ? ? 完整点的报错如下所示,?

org.apache.hadoop.security.AccessControlException: Permission denied: user=Administrator, access=WRITE, inode="/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest_0730452cf2c94db38a32a0f32b499646":hadoop:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:320)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:292)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:213)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1728)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1712)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1695)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2515)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2450)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2334)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:624)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:397)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2045)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)

at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:1653)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1689)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1624)

at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:448)

at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:444)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:459)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:387)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:911)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:892)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:854)

at org.apache.hadoop.fs.FileSystem.createNewFile(FileSystem.java:1154)

at com.lw.kettle.HiveOper.writeData2Hadoop(HiveOper.java:76)

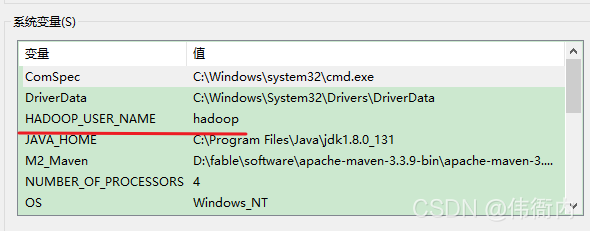

????????分析上述报错,使用用户Administrator,没有写权限,这个Administrator不是我windows本地的用户么?

????????在hadoop上安装程序使用的用户是新建的hadoop/hadoop用户,就算是root用户我也认啊,怎么是windows用户呢?

? ? ? ? 然后我百度了一下,https://blog.csdn.net/zhangjunli/article/details/106321516

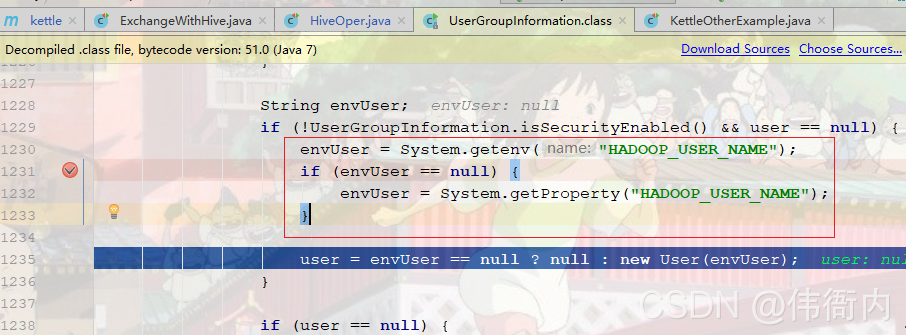

????????上面这个博客告诉了我原因,原来会先登录,登录使用的是环境变量的配置,如果没有配置获取windows用户。

???????查看了权限,果然是 r_x,那么此处或许可以如下命令,改变权限,但我觉得还是用HADOOP_USER_NAME好一点。

? ? ? ? 修改权限(不推荐):

?????????????????hadoop fs -chmod 777 /user/hive_remote/warehouse/ntzw_dev_64.db

? ? ? ? ?决定采用配置环境变量的方式,

? ? ? ? ?还是不行,调试代码发现获取为空,

? ? ? ? ?然后自己写了一个Test,还是为空,

@Test

public void test(){

????String name = "HADOOP_USER_NAME";

????String hadNameEnv = System.getenv(name);

????String hadNamePro = System.getProperty(name);

????System.out.println(hadNameEnv+" ??"+hadNamePro);

}????????如上test方法一直是空,估摸着是环境变量没有生效,是不是重启电脑?那还是算了吧。

????????直接自己设置下即可,

@Before

public void init(){

System.setProperty("HADOOP_USER_NAME","hadoop");

}? ? ? ? 问题解决,然后新的错误在等待。

????????路径不对

? ? ? ? 继续运行代码,报如下错误,

org.apache.hive.service.cli.HiveSQLException: Error while compiling statement: FAILED: SemanticException [Error 10028]: Line 1:17 Path is not legal ''hdfs://192.168.10.211:9000/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest_93fddf2f22554d4aadb16ed38788664d'': Move from: hdfs://192.168.10.211:9000/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest_93fddf2f22554d4aadb16ed38788664d to: hdfs://node01:9000/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest is not valid. Please check that values for params "default.fs.name" and "hive.metastore.warehouse.dir" do not conflict.

at org.apache.hive.jdbc.Utils.verifySuccess(Utils.java:256)

at org.apache.hive.jdbc.Utils.verifySuccessWithInfo(Utils.java:242)

at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:254)

at com.lw.kettle.HiveOper.writeHiveFile(HiveOper.java:53)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:68)

at com.intellij.rt.execution.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:47)

at com.intellij.rt.execution.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:242)

at com.intellij.rt.execution.junit.JUnitStarter.main(JUnitStarter.java:70)

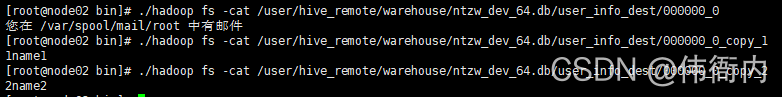

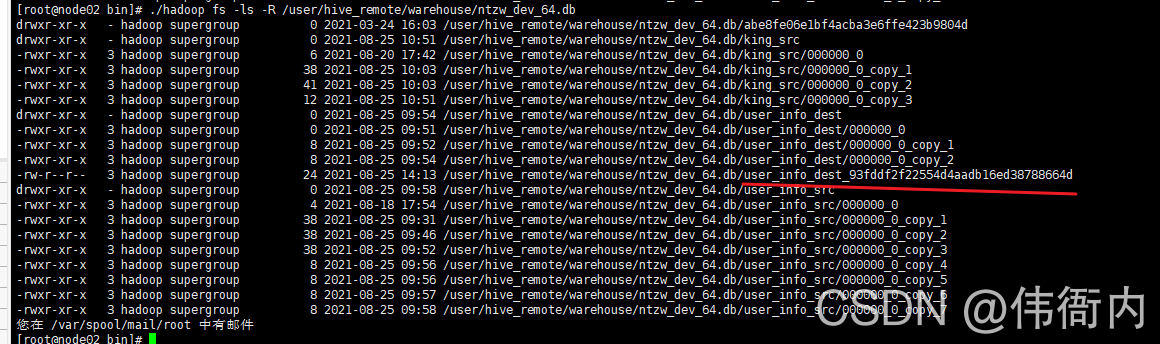

????????看一眼Hadoop上,确实有我们的文件了,那么就是还没有加载到hive中去,????????

? ? ? ? ?查看下文件数据,数据正常,是我们生成数据,

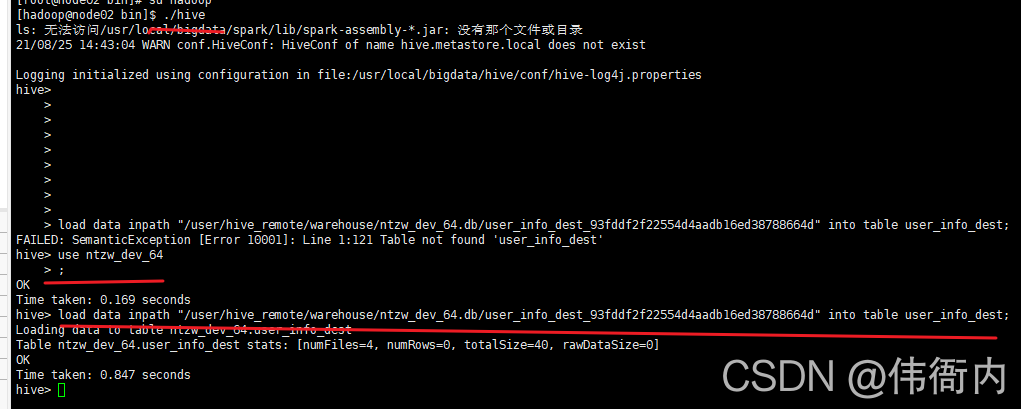

?????????将直接在212上手工执行,结果发现是可以通过load data inpath加载到Hive库,那说明这个方法没问题啊!

?

?数据添加进去了,但是为何代码执行不可?

????????那猜测一点,Hive是安装在212,也就是子节点上,而连接是连接的主节点211,所以无法将211的文件放入212的hive表中,可为何ddl语句是node01,而不是node02呢?

????????在看文件,加载后路径改变了,

从/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest_93fddf2f22554d4aadb16ed38788664d

到/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest/user_info_dest_93fddf2f22554d4aadb16ed38788664d

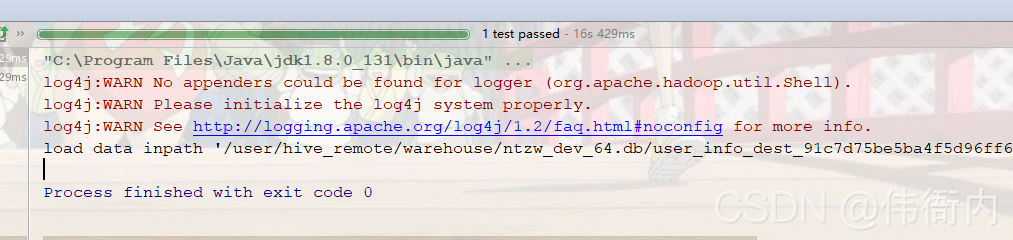

?????????重新运行一遍,打印了SQL,

load data inpath 'hdfs://192.168.10.211:9000/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest_3d205d05ae6047eea05a27ed6089d0c1' into table user_info_dest

? ? ? ? 对比刚才手工执行的,很明显是多了hdfs://192.168.10.211:9000,我靠。

load data inpath "/user/hive_remote/warehouse/ntzw_dev_64.db/user_info_dest_93fddf2f22554d4aadb16ed38788664d" into table user_info_dest;

最终将代码路径改为如下,

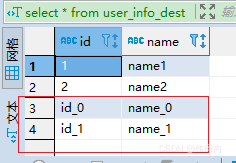

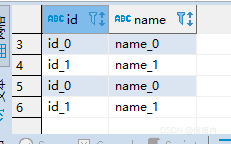

?看到这个绿色是多么令人兴奋啊,

?查看表数据也有了,

?

完整代码

package com.lw.kettle;

import lombok.extern.slf4j.Slf4j;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.junit.Before;

import org.junit.Test;

import java.io.IOException;

import java.io.OutputStream;

import java.net.URI;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.*;

/**

* @author lw

* @date 2021/8/24 0024

* @description

*/

@Slf4j

public class HiveOper {

@Before

public void init(){

System.setProperty("HADOOP_USER_NAME","hadoop");

}

/**

* 通过写hive的文件系统,将数据录入进去

*/

@Test

public void writeHiveFile() throws SQLException, IOException {

String randomId = UUID.randomUUID().toString().replace("-","");

String tableName= "user_info_dest";

String hdfsIpAndPort = "hdfs://192.168.10.211:9000";

String dbPath = "/user/hive_remote/warehouse/ntzw_dev_64.db/";

//建表语句后面的 location

String filePath = hdfsIpAndPort+dbPath+tableName+"_"+randomId;

//将数据写入Hadoop中

this.writeData2Hadoop(filePath);

//加载到hive表的语句 从hadoop文件中加载到hive库中,这样 select 语句才能看见

String loadDataSql = "load data inpath '"+dbPath+tableName+"_"+randomId+"' "+"into table "+tableName;

System.out.println(loadDataSql);

//获取连接,并执行加载语句

Connection connection = this.getHiveConnection();

Statement stmt = connection.createStatement();

stmt.execute(loadDataSql);

stmt.close();

connection.close();

//加载完成后需要删除临时文件

this.deleteHiveTmpFile(filePath);

}

/**

* 往hadoop文件系统里面写文件

* @param filePath

* @throws IOException

*/

private void writeData2Hadoop(String filePath) throws IOException {

Configuration conf = new Configuration();

conf.setBoolean("dfs.support.append", true);

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

//获取文件系统对象

FileSystem fs = FileSystem.get(URI.create(filePath),conf);

OutputStream output = null;

//文件检查

Path path = new Path(filePath);

if (!(fs.exists(path)&&fs.isFile(path))) {

log.info("不存在文件[{}],开始创建文件.",filePath);

fs.createNewFile(path);

output = fs.create(path);

} else {

log.info("文件[{}]已存在,追加内容到文件",filePath);

output = fs.append(path);

}

//往文件里面写数据

List<UserInfoDest> dataList = this.initData();

for (UserInfoDest data : dataList) {

//目标表只有两列 id,name

StringBuilder sb = new StringBuilder();

sb.append(data.id);//表的id

//hive的表的默认分割符,\t,如果指定了其他列分割符,那么此处也要改变

sb.append("\001");

sb.append(data.name);//表的name

sb.append("\n");//一行内容结束后换行

output.write(sb.toString().getBytes());

}

output.flush();

output.close();

fs.close();

}

/**

* 删除Hive临时文件

* @param filePath

*/

private void deleteHiveTmpFile(String filePath){

Configuration conf = new Configuration();

conf.setBoolean("dfs.support.append", true);

conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

FileSystem fs = null;

Path path = new Path(filePath);

try{

fs = FileSystem.get(URI.create(filePath), conf);

if ((fs.exists(path)&&fs.isFile(path))) {

fs.delete(path,false);

}

}catch (Exception e){

e.printStackTrace();

}

}

/**

* 获取hive的连接

* @return

*/

private Connection getHiveConnection(){

String driver = "org.apache.hive.jdbc.HiveDriver";

String url = "jdbc:hive2://192.168.10.212:10000/ntzw_dev_64";

try {

Class.forName(driver);

Connection conn = DriverManager.getConnection(url,"hadoop","hadoop");

return conn;

} catch (ClassNotFoundException e) {

e.printStackTrace();

} catch (SQLException e) {

e.printStackTrace();

}

return null;

}

/**

* 初始化数据

* @return

*/

private List<UserInfoDest> initData(){

String[] cols = new String[]{"id","name"};

List<UserInfoDest> retList = new ArrayList<UserInfoDest>();

//初始化两条数据

for (int i = 0; i < 2; i++) {

UserInfoDest dest = new UserInfoDest();

dest.id = cols[0]+"_"+i;

dest.name = cols[1]+"_"+i;

retList.add(dest);

}

return retList;

}

}

/**

* hive的表对象

*/

class UserInfoDest{

public String id;

public String name;

}

? 当然,此处依旧是一个简单模型,在项目中真正用的时候比这个复杂很多,不过简单模型能帮助自己看懂和扩展。