Spark Environment Setup

For the setup procedure, see: https://blog.csdn.net/llwy1428/article/details/111569392

For configuration, refer to the official documentation:

- https://spark.apache.org/docs/latest/configuration.html#yarn

- https://spark.apache.org/docs/latest/spark-standalone.html#cluster-launch-scripts

Download Spark

URL: https://www.apache.org/dyn/closer.lua/spark/spark-3.1.2/spark-3.1.2-bin-hadoop3.2.tgz

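After downloading, place the archive in /home/hadoop (the path used by the commands and logs below).

The extraction step is omitted here.

Configuration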

[hadoop@node1 ~]$ cd $SPARK_HOME/conf

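# Configure the environment variables; see the environment variable setup in the Hadoop installation above

# Copy the template and edit the list of worker nodes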

[hadoop@node1 conf]$ cp workers.template workers

[hadoop@node1 conf]$ vim workers

#localhost

node2

node3

node4

[hadoop@node1 conf]$ cp spark-env.sh.template spark-env.sh

[hadoop@node1 conf]$ vim spark-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.302.b08-0.el7_9.x86_64/jre

#export SCALA_HOME=/usr/share/scala-2.11

export HADOOP_HOME=/home/hadoop/hadoop-3.3.1

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export SPARK_MASTER_HOST=node1

export SPARK_LOCAL_DIRS=/data/spark/local/data

export SPARK_DRIVER_MEMORY=4g # driver memory

export SPARK_WORKER_CORES=8 # number of CPU cores each worker offers

export SPARK_WORKER_MEMORY=28g # total memory a worker may use for Spark

export SPARK_EXECUTOR_MEMORY=2g

export SPARK_MASTER_WEBUI_PORT=9080

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_WEBUI_PORT=9090

export SPARK_WORKER_DIR=/data/spark/worker/data

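export SPARK_LOCAL_IP=192.168.111.49 # Important: this must be the node's own IP address, reachable from the other nodes. Many online guides use 0.0.0.0 or 127.0.0.1, which only works because their network cards share one segment or the setup is pseudo-distributed.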
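
As a rough sizing check for the values above, the following sketch estimates how many executors one standalone worker could host. It only divides the worker's memory and cores by the per-executor request. `cores_per_executor` is an assumption (spark-env.sh above does not set it), and real placement also depends on how many applications share the worker.

```python
def max_executors_per_worker(worker_memory_gb, worker_cores,
                             executor_memory_gb, cores_per_executor):
    """Upper bound on executors a single worker can host.

    The worker runs out of whichever resource is exhausted first,
    so the bound is the smaller of the memory and core quotients.
    """
    by_memory = worker_memory_gb // executor_memory_gb
    by_cores = worker_cores // cores_per_executor
    return min(by_memory, by_cores)


# Values from spark-env.sh above: 28g worker memory, 8 cores, 2g per executor.
# cores_per_executor is a hypothetical choice, not taken from these notes.
print(max_executors_per_worker(28, 8, 2, cores_per_executor=1))  # 8
print(max_executors_per_worker(28, 8, 2, cores_per_executor=2))  # 4
```

With 2g executors the 8 cores run out before the 28g of memory does, so cores, not memory, are the limiting resource in this configuration.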

# Rename the script so it is easier to recognize (Hadoop ships a start-all.sh of its own)

[hadoop@node1 conf]$ mv $SPARK_HOME/sbin/start-all.sh $SPARK_HOME/sbin/start-spark.sh

Edit spark-config.sh

[hadoop@node1 conf]$ cd $SPARK_HOME/sbin

[hadoop@node1 sbin]$ vim spark-config.sh

# Add at the top of the file

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.302.b08-0.el7_9.x86_64/jre

Copy Spark to each node

[hadoop@node1 ~]$ scp -r spark-3.1.2-bin-hadoop3.2/ node2:/home/hadoop/

[hadoop@node1 ~]$ scp -r spark-3.1.2-bin-hadoop3.2/ node3:/home/hadoop/

[hadoop@node1 ~]$ scp -r spark-3.1.2-bin-hadoop3.2/ node4:/home/hadoop/

# On each node, edit spark-env.sh

export SPARK_LOCAL_IP=192.168.111.50 # that node's own IP address
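
Because every node needs its own SPARK_LOCAL_IP, the per-node edit is easy to get wrong by hand. The sketch below shows one way to rewrite that single line in the text of a spark-env.sh file. The function name and the host-to-IP map are hypothetical: these notes only show 192.168.111.49 for node1 and 192.168.111.50 as an example, so the other addresses are placeholders.

```python
import re

# Hypothetical host-to-IP map; replace with the real addresses of your nodes.
NODE_IPS = {
    "node2": "192.168.111.50",
    "node3": "192.168.111.51",
    "node4": "192.168.111.52",
}

_LOCAL_IP_LINE = re.compile(r"^export SPARK_LOCAL_IP=.*$", re.MULTILINE)


def set_local_ip(spark_env_text, ip):
    """Return spark-env.sh content with SPARK_LOCAL_IP set to `ip`."""
    line = f"export SPARK_LOCAL_IP={ip}"
    if _LOCAL_IP_LINE.search(spark_env_text):
        # Replace the whole existing line, including any trailing comment.
        return _LOCAL_IP_LINE.sub(lambda _match: line, spark_env_text)
    # No existing entry: append one and keep a trailing newline.
    return spark_env_text.rstrip("\n") + "\n" + line + "\n"


template = "export SPARK_MASTER_HOST=node1\nexport SPARK_LOCAL_IP=192.168.111.49\n"
for host, ip in NODE_IPS.items():
    print(f"--- {host} ---")
    print(set_local_ip(template, ip), end="")
```

The function only transforms text; writing the result to each node (for example over scp) is left out on purpose.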

Start Spark

[hadoop@node1 sbin]$ ./start-spark.sh

starting org.apache.spark.deploy.master.Master, logging to /home/hadoop/spark-3.1.2-bin-hadoop3.2/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-node1.out

node4: starting org.apache.spark.deploy.worker.Worker, logging to /home/hadoop/spark-3.1.2-bin-hadoop3.2/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-node4.out

node2: starting org.apache.spark.deploy.worker.Worker, logging to /home/hadoop/spark-3.1.2-bin-hadoop3.2/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-node2.out

node3: starting org.apache.spark.deploy.worker.Worker, logging to /home/hadoop/spark-3.1.2-bin-hadoop3.2/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-node3.out

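Starting the Timeline Server

Reference: https://blog.csdn.net/llwy1428/article/details/112417384

Before Hadoop 2.4, job monitoring existed only for MapReduce, through the Job History Server, which lets users query information about completed jobs. As more computing frameworks such as Spark and Tez were integrated on YARN, they needed comparable job monitoring as well, so the Hadoop developers built a more general history server: the YARN Timeline Server.

Add the following configuration to yarn-site.xml: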

<!-- Timeline service configuration starts here -->

<property>

<name>yarn.timeline-service.enabled</name>

<value>true</value>

<description>Indicate to clients whether Timeline service is enabled or not. If enabled, the TimelineClient

library used by end-users will post entities and events to the Timeline server.

</description>

</property>

<property>

<name>yarn.timeline-service.hostname</name>

<value>node1</value>

<description>The hostname of the Timeline service web application.</description>

</property>

<property>

<name>yarn.timeline-service.address</name>

<value>node1:10200</value>

<description>Address for the Timeline server to start the RPC server.</description>

</property>

<property>

<name>yarn.timeline-service.webapp.address</name>

<value>node1:8188</value>

<description>The http address of the Timeline service web application.</description>

</property>

<property>

<name>yarn.timeline-service.webapp.https.address</name>

<value>node1:8190</value>

<description>The https address of the Timeline service web application.</description>

</property>

<property>

<name>yarn.timeline-service.handler-thread-count</name>

<value>10</value>

<description>Handler thread count to serve the client RPC requests.</description>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.enabled</name>

<value>false</value>

<description>Enables cross-origin support (CORS) for web services where cross-origin web response headers are

needed. For example, javascript making a web services request to the timeline server. Controls whether cross-origin request headers are supported.

</description>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.allowed-origins</name>

<value>*</value>

<description>Comma separated list of origins that are allowed for web services needing cross-origin (CORS) support. Wildcards (*) and patterns allowed.

</description>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.allowed-methods</name>

<value>GET,POST,HEAD</value>

<description>Comma separated list of methods that are allowed for web services needing cross-origin (CORS) support.

</description>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.allowed-headers</name>

<value>X-Requested-With,Content-Type,Accept,Origin</value>

<description>Comma separated list of headers that are allowed for web services needing cross-origin (CORS) support.

</description>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.max-age</name>

<value>1800</value>

<description>The number of seconds a pre-flighted request can be cached for web services needing cross-origin (CORS) support.

</description>

</property>

<property>

<name>yarn.timeline-service.generic-application-history.enabled</name>

<value>true</value>

<description>Indicate to clients whether to query generic application data from timeline history-service or not. If not enabled then application data is queried only from Resource Manager.

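It also indicates to the ResourceManager and to clients whether the history service is enabled. If enabled, the ResourceManager starts recording historical data that the history service can use.

Likewise, when this is enabled, clients can turn to the history service once an application finishes.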

</description>

</property>

<property>

<name>yarn.timeline-service.generic-application-history.store-class</name>

<value>org.apache.hadoop.yarn.server.applicationhistoryservice.FileSystemApplicationHistoryStore</value>

<description>Store class name for history store, defaulting to file system store</description>

</property>

<property>

<description>Store class name for timeline store.</description>

<name>yarn.timeline-service.store-class</name>

<value>org.apache.hadoop.yarn.server.timeline.LeveldbTimelineStore</value>

</property>

<property>

<description>Enable age off of timeline store data.</description>

<name>yarn.timeline-service.ttl-enable</name>

<value>true</value>

</property>

<property>

<description>Time to live for timeline store data in milliseconds.</description>

<name>yarn.timeline-service.ttl-ms</name>

<value>6048000000</value>

</property>

<property>

<name>hadoop.zk.address</name>

<value>node2:2181,node3:2181,node4:2181</value>

</property>

<property>

<name>yarn.resourcemanager.system-metrics-publisher.enabled</name>

<value>true</value>

<description>The setting that controls whether yarn system metrics is published on the timeline server or not by RM.

</description>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

<description>Fixes the problem of logs not being viewable</description>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/data/hadoop/tmp/logs</value>

</property>
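
The TTL value above is given in milliseconds, which is hard to read at a glance. As a small worked check, the sketch below parses a property fragment with Python's standard XML parser and converts yarn.timeline-service.ttl-ms to days. The fragment is a cut-down copy of the configuration above wrapped in a `<configuration>` root; the helper name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Cut-down copy of the yarn-site.xml properties shown above.
YARN_SITE_FRAGMENT = """
<configuration>
  <property>
    <name>yarn.timeline-service.ttl-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.timeline-service.ttl-ms</name>
    <value>6048000000</value>
  </property>
</configuration>
"""

MS_PER_DAY = 24 * 60 * 60 * 1000


def read_properties(xml_text):
    """Collect <name>/<value> pairs from a Hadoop-style configuration."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


props = read_properties(YARN_SITE_FRAGMENT)
ttl_days = int(props["yarn.timeline-service.ttl-ms"]) / MS_PER_DAY
print(ttl_days)  # 70.0
```

So the configured value keeps timeline data for 70 days. Hadoop's documented default for this property is 604800000 (7 days); these notes do not say whether the tenfold longer retention is intentional.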

Restart the YARN services

Follow the ResourceManager and NodeManager restart procedure described earlier.

Start the timelineserver service

[hadoop@node1 sbin]$ yarn --daemon start timelineserver

Verify

Open: http://node1:8188/applicationhistory

spark-shell

Reference: https://blog.csdn.net/llwy1428/article/details/112502667

Spark on Hive configuration

See: https://www.cnblogs.com/yangxusun9/p/12890770.html

Connecting with spark-sql

- Copy hive-site.xml into $SPARK_HOME/conf/

- Comment out the execution engine setting in hive-site.xml

<!-- <property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

-->

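- Copy the MySQL driver into $SPARK_HOME/jars

- Copy core-site.xml and hdfs-site.xml into $SPARK_HOME/conf (optional)

- If Hive tables use a compression format such as LZO or Snappy, configure spark-defaults.conf accordingly

- In scripts (or on the command line), use spark-sql --master yarn instead of hive to speed up execution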

[hadoop@node3 conf]$ spark-sql --master yarn

2021-08-24 11:02:19,112 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

2021-08-24 11:02:21,129 WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

2021-08-24 11:02:21,794 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

2021-08-24 11:02:38,502 WARN conf.HiveConf: HiveConf of name hive.stats.jdbc.timeout does not exist

2021-08-24 11:02:38,503 WARN conf.HiveConf: HiveConf of name hive.stats.retries.wait does not exist

Spark master: yarn, Application Id: application_1629688359089_0008

spark-sql (default)> show databases;

namespace

caoyong_test

default

Time taken: 2.195 seconds, Fetched 2 row(s)

spark-sql (default)> use caoyong_test;

2021-08-24 11:03:02,369 WARN metastore.ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

Response code

Time taken: 0.056 seconds

spark-sql (default)> show tables;

database tableName isTemporary

caoyong_test student false

Time taken: 0.235 seconds, Fetched 1 row(s)

spark-sql (default)> select * from student;

2021-08-24 11:03:19,703 WARN session.SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

id name

1005 zhangsan

1003 node3

1002 node2

101 beeline by spark

102 beeline by spark

1000 aoaoao

100 spark-sql

103 beeline by spark aoaoao

Time taken: 3.672 seconds, Fetched 8 row(s)

spark-sql (default)> insert into student values(111,"spark-sql --master yarn");

2021-08-24 11:04:09,037 WARN conf.HiveConf: HiveConf of name hive.internal.ss.authz.settings.applied.marker does not exist

2021-08-24 11:04:09,037 WARN conf.HiveConf: HiveConf of name hive.stats.jdbc.timeout does not exist

2021-08-24 11:04:09,038 WARN conf.HiveConf: HiveConf of name hive.stats.retries.wait does not exist

Response code

Time taken: 2.724 seconds

spark-sql (default)> select * from student;

id name

1005 zhangsan

1003 node3

1002 node2

101 beeline by spark

102 beeline by spark

1000 aoaoao

111 spark-sql --master yarn

100 spark-sql

103 beeline by spark aoaoao

Time taken: 0.304 seconds, Fetched 9 row(s)

spark-sql (default)>

Using the Thrift server and connecting with beeline

- In $SPARK_HOME/conf/hive-site.xml, configure:

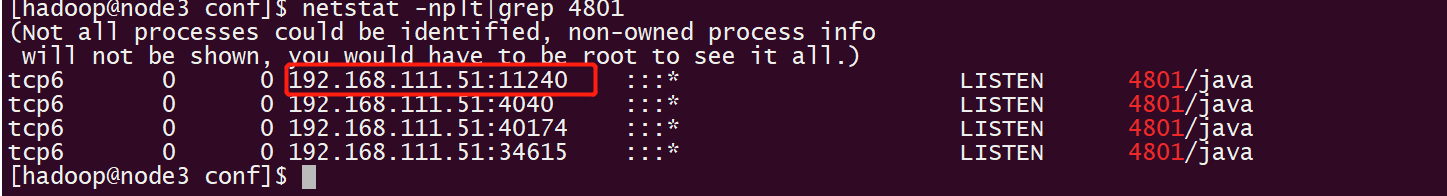

<property>

<name>hive.server2.thrift.port</name>

<value>11240</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>node3</value>

</property>

<!-- In fact, both of these settings were already configured earlier -->

- Then run the following commands:

[hadoop@node3 ~]$ start-thriftserver.sh --master yarn

[hadoop@node3 ~]$ jps

6288 JournalNode

27985 Worker

4801 SparkSubmit

6856 NodeManager

4954 ExecutorLauncher

14203 Jps

24956 QuorumPeerMain

6605 DataNode

- Log in with beeline

[hadoop@node2 conf]$ beeline

Beeline version 2.3.7 by Apache Hive

beeline> !connect jdbc:hive2://node2:11240

Connecting to jdbc:hive2://node2:11240

Enter username for jdbc:hive2://node2:11240: hadoop

Enter password for jdbc:hive2://node2:11240: **********

2021-08-24 11:56:42,404 INFO jdbc.Utils: Supplied authorities: node2:11240

2021-08-24 11:56:42,405 INFO jdbc.Utils: Resolved authority: node2:11240

Connected to: Spark SQL (version 3.1.2)

Driver: Hive JDBC (version 2.3.7)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://node2:11240> show databases;

+---------------+

| namespace     |

+---------------+

| caoyong_test  |

| default       |

+---------------+

2 rows selected (0.871 seconds)

0: jdbc:hive2://node2:11240> use caoyong_test;

+---------+

| Result  |

+---------+

+---------+

No rows selected (0.08 seconds)

0: jdbc:hive2://node2:11240> select * from student;

+-------+--------------------------+

| id    | name                     |

+-------+--------------------------+

| 1005  | zhangsan                 |

| 1003  | node3                    |

| 1002  | node2                    |

| 101   | beeline by spark         |

| 102   | beeline by spark         |

| 1000  | aoaoao                   |

| 111   | spark-sql --master yarn  |

| 100   | spark-sql                |

| 103   | beeline by spark aoaoao  |

+-------+--------------------------+

9 rows selected (3.956 seconds)

0: jdbc:hive2://node2:11240> insert into student values(2222,"node2");

+---------+

| Result  |

+---------+

+---------+

No rows selected (2.088 seconds)

0: jdbc:hive2://node2:11240> select * from student;

+-------+--------------------------+

| id    | name                     |

+-------+--------------------------+

| 1005  | zhangsan                 |

| 1003  | node3                    |

| 1002  | node2                    |

| 101   | beeline by spark         |

| 102   | beeline by spark         |

| 1000  | aoaoao                   |

| 111   | spark-sql --master yarn  |

| 100   | spark-sql                |

| 2222  | node2                    |

| 103   | beeline by spark aoaoao  |

+-------+--------------------------+

10 rows selected (0.366 seconds)

0: jdbc:hive2://node2:11240>

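Note: Spark's Thrift server has no built-in HA. If you need HA, you have to modify the Spark source code so that the server registers itself with ZooKeeper.

Programmatic access (omitted for now)

Configuring Spark dynamic resource allocation

When SQL is run through the Thrift server above, http://node1:8088/cluster shows that executors are not released properly: after a query finishes, the YarnCoarseGrainedExecutorBackend processes stay alive and keep holding resources they no longer need. To fix this, enable Spark dynamic resource allocation so that the YarnCoarseGrainedExecutorBackend processes are released once their jobs are done.

See: https://blog.csdn.net/WindyQCF/article/details/109127569

Note: resources are a major factor in how efficiently a Spark application runs. If a long-running service holds several executors but has no tasks to run while other applications are short of resources, those resources are wasted and scheduling becomes unbalanced.

Dynamic resource allocation addresses exactly this case: it adds and removes executors according to the application's current task load, so resources are allocated on demand and the Spark cluster as a whole stays healthier.

Configuration steps

Modify the YARN configuration

First, configure YARN so that it supports Spark's shuffle service.

Edit yarn-site.xml on every node in the cluster: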

<!-- modify -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle,spark_shuffle</value>

</property>

<!-- add -->

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

<property>

<name>spark.shuffle.service.port</name>

<value>7337</value>

</property>

Copy $SPARK_HOME/yarn/spark-3.1.2-yarn-shuffle.jar to $HADOOP_HOME/share/hadoop/yarn/lib/, then restart YARN:

[hadoop@node1 ~]$ yarn --workers --daemon stop nodemanager

[hadoop@node1 ~]$ yarn --daemon stop resourcemanager

[hadoop@node2 ~]$ yarn --daemon stop resourcemanager

[hadoop@node1 ~]$ yarn --daemon start resourcemanager

[hadoop@node2 ~]$ yarn --daemon start resourcemanager

[hadoop@node1 ~]$ yarn --workers --daemon start nodemanager

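Add the Spark configuration

Edit $SPARK_HOME/conf/spark-defaults.conf and add the following parameters. Comments go on their own lines starting with #; the file does not support trailing comments, so anything written after the key becomes part of the value:

# Enable the external shuffle service
spark.shuffle.service.enabled true

# Shuffle service port (7337 is the default); must match the value in yarn-site.xml
spark.shuffle.service.port 7337

# Enable dynamic resource allocation
spark.dynamicAllocation.enabled true

# Minimum number of executors per application
spark.dynamicAllocation.minExecutors 1

# Maximum number of executors an application may hold at once
spark.dynamicAllocation.maxExecutors 30

spark.dynamicAllocation.schedulerBacklogTimeout 1s

spark.dynamicAllocation.sustainedSchedulerBacklogTimeout 5s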
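
To see how the two backlog timeouts interact, here is a simplified model of the ramp-up that the Spark documentation describes: the first request fires once tasks have been pending for schedulerBacklogTimeout, later rounds fire every sustainedSchedulerBacklogTimeout, and each round asks for twice as many executors as the previous one (1, 2, 4, ...). The model assumes the backlog never clears and ignores that Spark also limits requests by the number of pending tasks. The function name is hypothetical.

```python
def ramp_up(min_executors, max_executors, backlog_timeout_s, sustained_timeout_s):
    """Return [(seconds_since_backlog_began, total_executors_requested), ...]."""
    total = min_executors
    to_add = 1                      # executors requested in the first round
    elapsed = backlog_timeout_s     # the first round waits for the backlog timeout
    schedule = []
    while total < max_executors:
        total = min(total + to_add, max_executors)
        schedule.append((elapsed, total))
        to_add *= 2                 # each round doubles the request
        elapsed += sustained_timeout_s
    return schedule


# Values from spark-defaults.conf above: min 1, max 30, timeouts 1s and 5s.
for elapsed, total in ramp_up(1, 30, 1, 5):
    print(f"t={elapsed:>2}s  executors requested so far: {total}")
```

Under these assumptions the application reaches its 30-executor ceiling about 21 seconds after tasks start queuing, which is why a short schedulerBacklogTimeout makes the cluster react quickly to bursts.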

Start the Thrift server

# --master yarn is essential: without it the application and its task progress are not visible from the command line or the web UI.

# If resources allow, raise --executor-memory and --executor-cores to increase task parallelism.

# spark.dynamicAllocation.initialExecutors=1 starts the server with one executor.

$SPARK_HOME/sbin/start-thriftserver.sh \
--master yarn \
--executor-memory 6g \
--executor-cores 6 \
--driver-memory 3g \
--driver-cores 2 \
--conf spark.dynamicAllocation.enabled=true \
--conf spark.shuffle.service.enabled=true \
--conf spark.dynamicAllocation.initialExecutors=1 \
--conf spark.dynamicAllocation.minExecutors=1 \
--conf spark.dynamicAllocation.maxExecutors=4 \
--conf spark.dynamicAllocation.executorIdleTimeout=30s \
--conf spark.dynamicAllocation.schedulerBacklogTimeout=10s
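
When choosing --executor-memory it helps to know how much YARN actually reserves per executor, since the container is larger than the heap. The sketch below is a back-of-the-envelope estimate under stated assumptions: Spark's default memory overhead of 10% of executor memory with a 384 MiB floor, and YARN rounding each request up to a multiple of yarn.scheduler.minimum-allocation-mb, taken here as 1024. Neither value is shown in these notes, and the rounding rule depends on the scheduler in use, so treat the numbers as an illustration.

```python
import math


def executor_container_mib(executor_memory_mib,
                           overhead_factor=0.10,      # assumed Spark default
                           overhead_min_mib=384,      # assumed Spark default
                           yarn_min_alloc_mib=1024):  # assumed YARN setting
    """Estimate the YARN container size for one executor, in MiB."""
    overhead = max(int(executor_memory_mib * overhead_factor), overhead_min_mib)
    requested = executor_memory_mib + overhead
    # Round the request up to the next multiple of the minimum allocation.
    return math.ceil(requested / yarn_min_alloc_mib) * yarn_min_alloc_mib


per_executor = executor_container_mib(6 * 1024)  # --executor-memory 6g
print(per_executor)                               # 7168
print(4 * per_executor)                           # 28672 for maxExecutors=4
```

Under these assumptions each 6g executor occupies a 7 GiB container, and the four executors allowed by maxExecutors=4 would take about 28 GiB of YARN memory in total.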

After startup, three additional services appear on the machine.

From the command line you can see that YARN now has one long-running application:

[hadoop@node4 yarn]$ yarn application -list

2021-08-27 09:24:41,846 INFO client.AHSProxy: Connecting to Application History server at node1/192.168.111.49:10200

Total number of applications (application-types: [], states: [SUBMITTED, ACCEPTED, RUNNING] and tags: []):1

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

application_1629977232578_0003 Thrift JDBC/ODBC Server SPARK hadoop default RUNNING UNDEFINED 10% http://node4:4040

The new application also appears on the Applications page:

The AM (ApplicationMaster) detail page shows the one executor that was started:

Running a job

Log in through beeline and run a job, as follows:

[hadoop@node2 conf]$ beeline

Beeline version 2.3.7 by Apache Hive

beeline> use caoyong_test;

No current connection

beeline> !connect jdbc:hive2://node4:11240

Connecting to jdbc:hive2://node4:11240

Enter username for jdbc:hive2://node4:11240: hadoop

Enter password for jdbc:hive2://node4:11240: **********

2021-08-27 09:21:44,517 INFO jdbc.Utils: Supplied authorities: node4:11240

2021-08-27 09:21:44,517 INFO jdbc.Utils: Resolved authority: node4:11240

Connected to: Spark SQL (version 3.1.2)

Driver: Hive JDBC (version 2.3.7)

Transaction isolation: TRANSACTION_REPEATABLE_READ

1: jdbc:hive2://node4:11240> SELECT min(auto_index) min_index,max(auto_index) max_index,count(*) count_,count(distinct mtm) mtm_count from caoyong_test.ship_ib;

+------------+------------+------------+------------+

| min_index | max_index | count_ | mtm_count |

+------------+------------+------------+------------+

| 1 | 588578233 | 184826995 | 4735 |

+------------+------------+------------+------------+

1 row selected (31.119 seconds)

1: jdbc:hive2://node4:11240>

The query scans about 180 million rows (184,826,995) in 31.119 s, faster than a single SQL Server instance, which took 42.725 s for the same query.
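
To put the two timings in proportion, the short calculation below derives the speedup and the scan rate from the figures reported above. It only restates the measured numbers; it says nothing about how the two systems were configured.

```python
rows = 184_826_995           # count(*) returned by the query above
spark_seconds = 31.119       # Spark Thrift server timing
sqlserver_seconds = 42.725   # single SQL Server timing quoted above

speedup = sqlserver_seconds / spark_seconds
time_saved_fraction = 1 - spark_seconds / sqlserver_seconds
rows_per_second = rows / spark_seconds

print(f"speedup: {speedup:.2f}x")
print(f"time saved: {time_saved_fraction:.1%}")
print(f"scan rate: {rows_per_second / 1e6:.2f} million rows/s")
```

The Spark run is roughly 1.37 times as fast, which corresponds to about 27% less wall-clock time.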

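The AM detail page shows that three executors were added dynamically.

The job detail page shows the DAG stages, the total number of tasks, and so on.

After 30 s of idle time, the dynamically added executors are released, as shown in the figure below:

Other frequently used commands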

nohup hive --service hiveserver2 > /home/hadoop/hive-3.1.2/logs/hive.log 2>&1

nohup hiveserver2 > $HIVE_HOME/logs/hive.log 2>&1 &

!connect jdbc:hive2://node3:11240

!connect jdbc:hive2://node2:2181,node3:2181,node4:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hive_zk

!connect jdbc:hive2://$aa

spark-submit --master yarn --deploy-mode cluster --driver-memory 1g --executor-memory 512m --class com.lenovo.ai.bigdata.spark.WordCount bigdata-0.0.1-SNAPSHOT.jar /demo/input/ /demo/output-spark/

spark-submit --master local[2] --class com.lenovo.ai.bigdata.spark.WordCount bigdata-0.0.1-SNAPSHOT.jar ./ ./output

spark-submit --master yarn --deploy-mode cluster --driver-memory 1g --executor-memory 512m --class com.lenovo.ai.bigdata.spark.hive.HiveOnSparkTest bigdata-0.0.1-SNAPSHOT.jar

mapred --daemon start historyserver

yarn --daemon start timelineserver

create table student(id int,name string);

insert into student values(1005,"zhangsan");

nohup hiveserver2 > $HIVE_HOME/logs/hive.log 2>&1

spark-sql --master yarn --deploy-mode cluster

hdfs:///demo/input/hive/kv1.txt