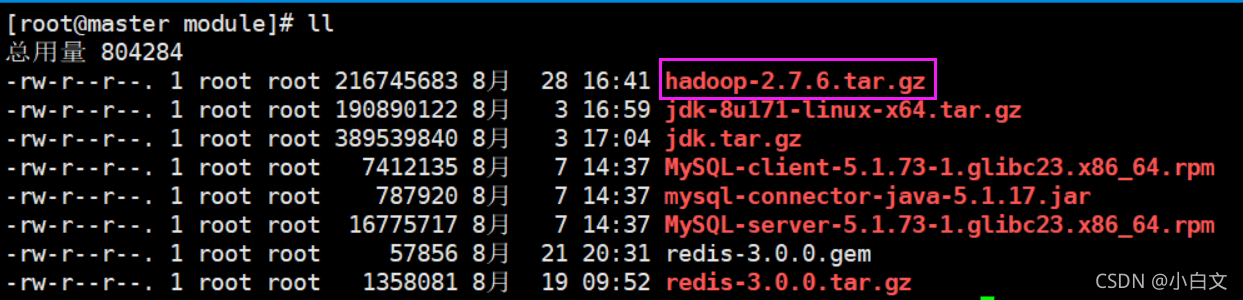

1. Upload and extract the installation package

#Upload the archive to /usr/local/soft/module on master with Xftp

cd /usr/local/soft/module

#Extract into /usr/local/soft/

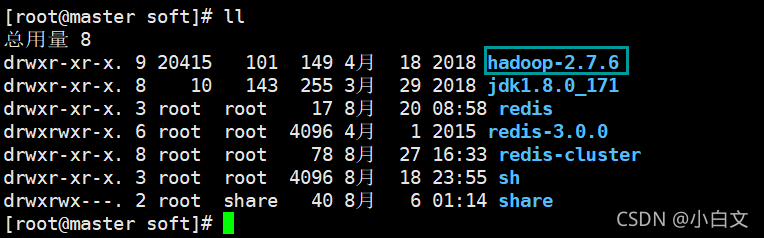

tar -zxvf hadoop-2.7.6.tar.gz -C /usr/local/soft/
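Before HADOOP_HOME is set in the next step, you can confirm that the archive extracted to the expected directory. This is a minimal sketch that assumes the Hadoop 2.x binary tarball layout (launchers in `bin/`, cluster scripts in `sbin/`, configs in `etc/hadoop/`). `check_hadoop_dir` is a hypothetical helper and is not part of Hadoop:

```shell
# Hypothetical helper: succeed only if $1 looks like an extracted Hadoop 2.x tree.
# Prints the first missing file and returns 1 otherwise.
check_hadoop_dir() {
  dir=$1
  for f in bin/hadoop bin/hdfs sbin/start-dfs.sh sbin/start-yarn.sh etc/hadoop/core-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      return 1
    fi
  done
  echo "ok: $dir"
}
```

For example, `check_hadoop_dir /usr/local/soft/hadoop-2.7.6` should print `ok: /usr/local/soft/hadoop-2.7.6`.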

2. Configure environment variables

vim /etc/profile

#jdk

export JAVA_HOME=/usr/local/soft/jdk1.8.0_171

#redis (optional, not required by Hadoop)

export REDIS_HOME=/usr/local/soft/redis/

#hadoop

export HADOOP_HOME=/usr/local/soft/hadoop-2.7.6

export PATH=$JAVA_HOME/bin:$REDIS_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

#Reload the environment variables in the current shell

source /etc/profile
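To confirm that `source /etc/profile` took effect, you can check `PATH` in the same shell. This is a sketch. `path_has` and `check_hadoop_path` are hypothetical helper names, and it assumes JAVA_HOME and HADOOP_HOME are already exported as above:

```shell
# Succeed if directory $1 is exactly one of the ':'-separated entries in $PATH.
path_has() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Print every JDK/Hadoop directory that is still missing from PATH.
check_hadoop_path() {
  for d in "$JAVA_HOME/bin" "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    path_has "$d" || echo "missing from PATH: $d"
  done
}
```

If everything is in place, `check_hadoop_path` prints nothing, and `hadoop version` reports the installed release.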

3. Edit the Hadoop configuration files

cd /usr/local/soft/hadoop-2.7.6/etc/hadoop/

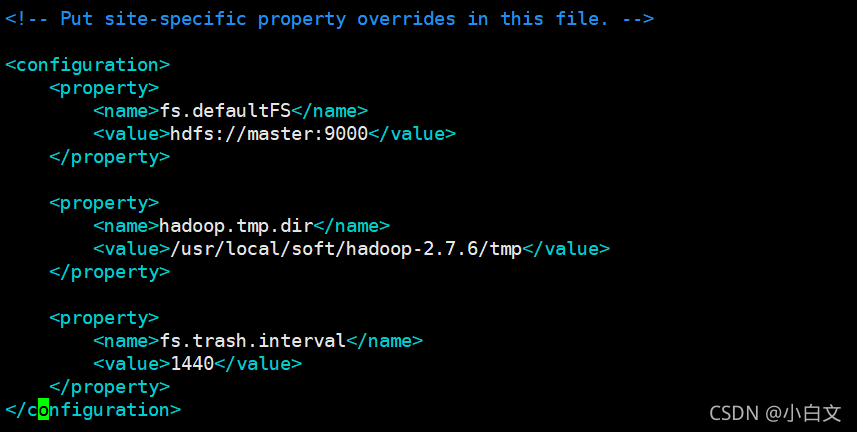

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/soft/hadoop-2.7.6/tmp</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

</configuration>

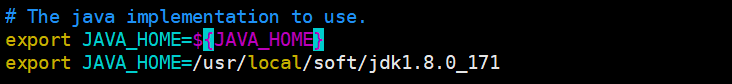
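You can also write the three core-site.xml properties non-interactively, which helps when the setup has to be repeated. This is a minimal sketch. `hadoop_property` and `write_core_site` are hypothetical helpers, and the values are copied from this section. `fs.trash.interval` is measured in minutes, so 1440 keeps deleted files in the HDFS trash for 24 hours:

```shell
# Print one <property> element in the layout used by Hadoop *-site.xml files.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Overwrite file $1 with the core-site.xml settings from this guide.
write_core_site() {
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    hadoop_property fs.defaultFS hdfs://master:9000
    hadoop_property hadoop.tmp.dir /usr/local/soft/hadoop-2.7.6/tmp
    # Trash retention in minutes: 24 h * 60 min = 1440
    hadoop_property fs.trash.interval $((24 * 60))
    echo '</configuration>'
  } > "$1"
}
```

Running `write_core_site /usr/local/soft/hadoop-2.7.6/etc/hadoop/core-site.xml` replaces the whole file, including the license header of the stock copy.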

hadoop-env.sh

export JAVA_HOME=/usr/local/soft/jdk1.8.0_171

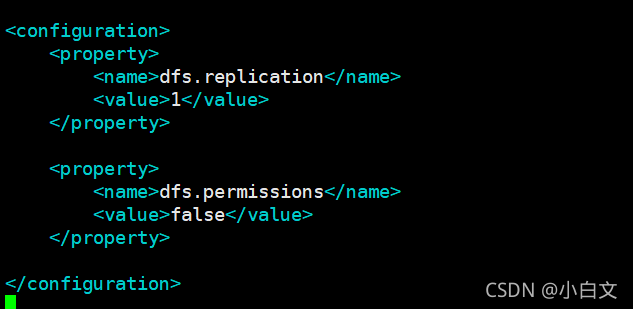
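Hadoop's start scripts launch daemons over SSH in non-login shells, where /etc/profile may not have been read. In that case the stock `export JAVA_HOME=${JAVA_HOME}` line can expand to nothing, and the daemons fail with a "JAVA_HOME is not set" error. Hard-coding the path, as above, avoids this. Here is a sketch for making the edit without an editor. `set_java_home` is a hypothetical helper, and it assumes the file still contains an uncommented `export JAVA_HOME=` line:

```shell
# Rewrite the "export JAVA_HOME=..." line of hadoop-env.sh ($1) to point at JDK dir $2.
# sed keeps a backup of the original file as $1.bak.
set_java_home() {
  env_file=$1
  jdk=$2
  sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$env_file"
}
```

For example: `set_java_home /usr/local/soft/hadoop-2.7.6/etc/hadoop/hadoop-env.sh /usr/local/soft/jdk1.8.0_171`.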

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

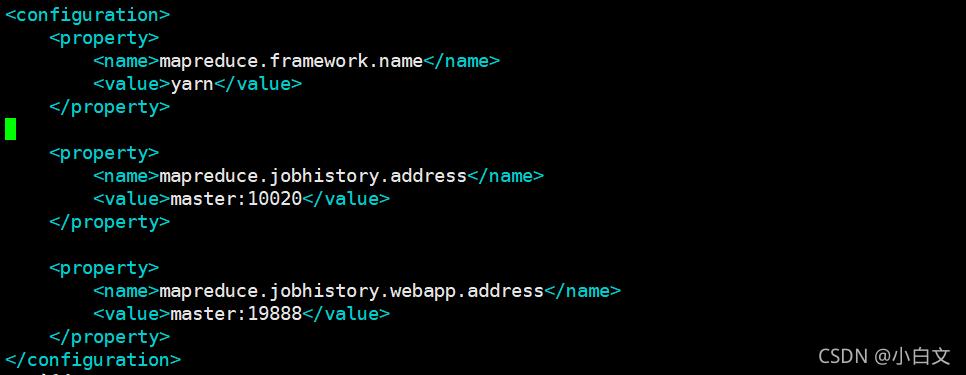
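`dfs.replication` is the number of copies HDFS keeps of each block. With the value 1, every block lives on a single DataNode, so there is no redundancy. A value larger than the number of DataNodes leaves blocks under-replicated. The sketch below compares the setting with the worker list in the `slaves` file from this step. `check_replication` is a hypothetical helper, and it skips blank lines and `#` comment lines, much as Hadoop's `slaves.sh` ignores them:

```shell
# Compare a dfs.replication value ($1) with the DataNodes listed in a slaves file ($2).
check_replication() {
  replication=$1
  slaves_file=$2
  # Count lines that are neither blank nor comment-only.
  datanodes=$(grep -cv -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves_file")
  if [ "$replication" -gt "$datanodes" ]; then
    echo "dfs.replication=$replication exceeds $datanodes DataNode(s)"
    return 1
  fi
  echo "ok: replication $replication with $datanodes DataNode(s)"
}
```

For example: `check_replication 1 /usr/local/soft/hadoop-2.7.6/etc/hadoop/slaves`.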

mapred-site.xml.template

#1. Create mapred-site.xml by copying the template (the template itself stays in place)

cp mapred-site.xml.template mapred-site.xml

#2. Edit mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>
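`cp` overwrites silently, so re-running the copy after mapred-site.xml has been edited would wipe the changes. This is a minimal sketch that creates the file only when it is missing. `ensure_mapred_site` is a hypothetical helper name:

```shell
# Create mapred-site.xml from the bundled template in config dir $1,
# but leave an existing (possibly edited) mapred-site.xml untouched.
ensure_mapred_site() {
  conf_dir=$1
  if [ -f "$conf_dir/mapred-site.xml" ]; then
    echo "kept existing $conf_dir/mapred-site.xml"
  else
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
    echo "created $conf_dir/mapred-site.xml"
  fi
}
```

The explicit `-f` test is used instead of `cp -n` because `-n` is not a POSIX option.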

slaves

node1

node2

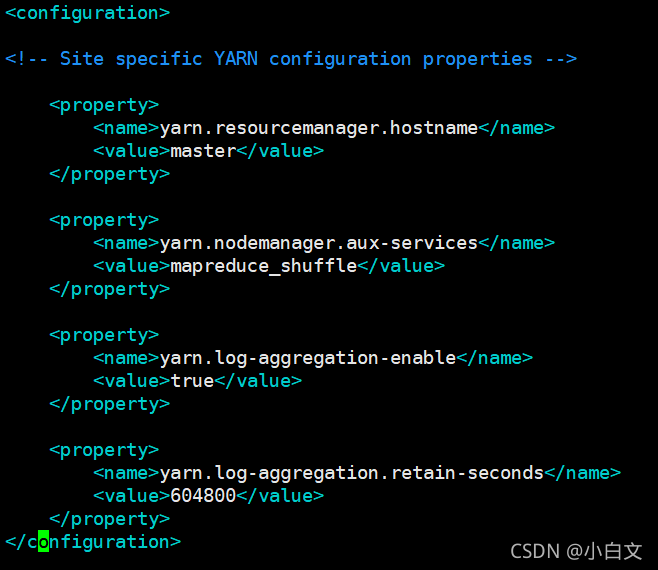
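`start-dfs.sh` and `start-yarn.sh` read this file to decide where to start DataNodes and NodeManagers. Because master is not listed, it runs neither, which matches the `jps` output later in this guide. The stock file contains `localhost`, which has to be replaced. Here is a sketch for writing the file from the command line. `write_slaves` is a hypothetical helper, and it assumes the hostnames resolve from master (for example through /etc/hosts) and that passwordless SSH is already set up:

```shell
# Overwrite slaves file $1 with one worker hostname per line (remaining args).
write_slaves() {
  slaves_file=$1
  shift
  printf '%s\n' "$@" > "$slaves_file"
}
```

For example: `write_slaves /usr/local/soft/hadoop-2.7.6/etc/hadoop/slaves node1 node2`.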

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>
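With `yarn.log-aggregation-enable` set to true, NodeManagers upload container logs to HDFS when an application finishes. `yarn.log-aggregation.retain-seconds` controls how long those logs are kept. The value is in seconds, so 604800 corresponds to 7 days. A small helper (hypothetical name `days_to_seconds`) makes the conversion explicit:

```shell
# Convert a retention period in whole days to seconds for
# yarn.log-aggregation.retain-seconds (1 day = 24 * 60 * 60 s).
days_to_seconds() {
  echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7   # prints 604800
```

You can then read the aggregated logs of a finished job with `yarn logs -applicationId <application ID>`.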

4. Distribute Hadoop to node1 and node2

cd /usr/local/soft/

scp -r hadoop-2.7.6/ node1:`pwd`

scp -r hadoop-2.7.6/ node2:`pwd`

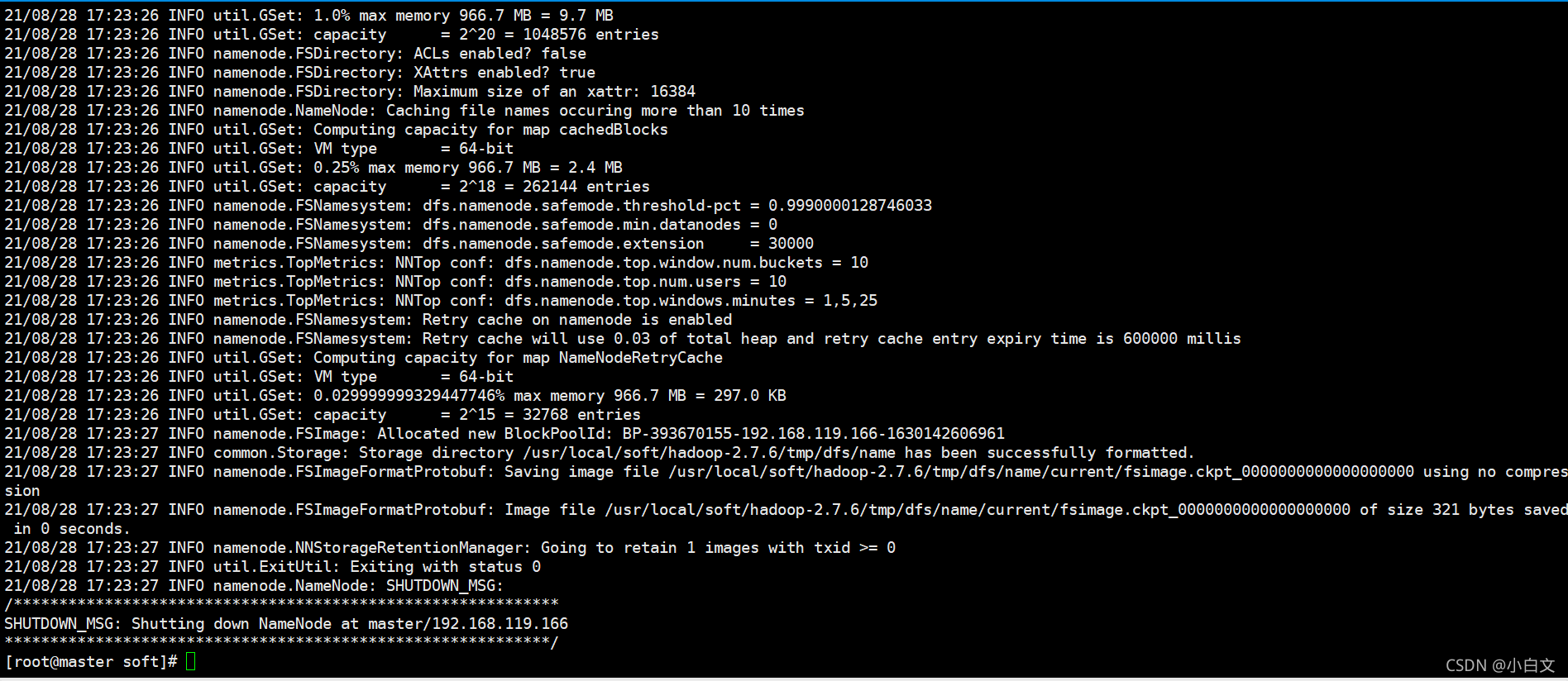
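The two `scp` lines differ only in the hostname, so you can also drive the copy from the `slaves` file and keep the worker list in one place. This is a sketch that assumes passwordless SSH from master to each worker. `distribute` is a hypothetical helper, and setting `DRY_RUN=1` prints the commands instead of running them:

```shell
# Copy directory $1 to the current working directory on every host in slaves file $2.
# With DRY_RUN=1 the scp commands are printed instead of executed.
distribute() {
  src=$1
  slaves_file=$2
  dest=$(pwd)
  # Drop comments and blank lines, then read one hostname per line.
  sed -e 's/#.*$//' -e '/^[[:space:]]*$/d' "$slaves_file" |
    while read -r host; do
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "scp -r $src $host:$dest"
      else
        # </dev/null keeps scp/ssh from consuming the host list on stdin
        scp -r "$src" "$host:$dest" < /dev/null
      fi
    done
}
```

For example: `cd /usr/local/soft && distribute hadoop-2.7.6/ hadoop-2.7.6/etc/hadoop/slaves`. This copies only the Hadoop directory. The /etc/profile changes from step 2 have to be made separately on node1 and node2 if you want to run Hadoop commands there.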

5. Format the NameNode on master (only required before the first start)

hdfs namenode -format
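Formatting again later creates a new clusterID for the NameNode. DataNodes that stored the old ID then refuse to register, and the DataNode log reports incompatible clusterIDs. The guard sketch below skips the format when metadata already exists. It assumes `dfs.namenode.name.dir` is left at its default `file://${hadoop.tmp.dir}/dfs/name`, which with the core-site.xml above is /usr/local/soft/hadoop-2.7.6/tmp/dfs/name. `namenode_formatted` and `format_once` are hypothetical helpers:

```shell
# Succeed if the NameNode metadata under hadoop.tmp.dir ($1) has been formatted;
# a successful format writes dfs/name/current/VERSION.
namenode_formatted() {
  [ -f "$1/dfs/name/current/VERSION" ]
}

# Run "hdfs namenode -format" only when no formatted metadata exists yet.
format_once() {
  tmp_dir=$1
  if namenode_formatted "$tmp_dir"; then
    echo "already formatted: $tmp_dir/dfs/name, skipping"
  else
    hdfs namenode -format
  fi
}
```

On master: `format_once /usr/local/soft/hadoop-2.7.6/tmp`.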

6. Start the Hadoop cluster

start-all.sh
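In Hadoop 2.x, `start-all.sh` is marked deprecated: it prints a notice and then calls `start-dfs.sh` and `start-yarn.sh`. It does not start the MapReduce JobHistory server whose addresses are set in mapred-site.xml; that server is started with `mr-jobhistory-daemon.sh`. Here is a sketch of the explicit sequence. `start_cluster` is a hypothetical helper, and `DRY_RUN=1` prints the commands without running them:

```shell
# Start HDFS, then YARN, then the JobHistory server, stopping at the first failure.
# With DRY_RUN=1 the commands are printed instead of executed.
start_cluster() {
  for cmd in "start-dfs.sh" "start-yarn.sh" "mr-jobhistory-daemon.sh start historyserver"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "$cmd"
    else
      # $cmd is intentionally unquoted so "start historyserver" is split into arguments.
      $cmd || return 1
    fi
  done
}
```

Run it on master. With the history server running, `jps` on master also lists `JobHistoryServer`, and its web UI is at http://master:19888.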

7. Check the processes on master, node1 and node2 with jps

master:

[root@master soft]# jps

8565 Jps

8153 SecondaryNameNode

8298 ResourceManager

7967 NameNode

node1:

[root@node1 ~]# jps

3267 NodeManager

3160 DataNode

3385 Jps

node2:

[root@node2 ~]# jps

3300 NodeManager

3416 Jps

3193 DataNode

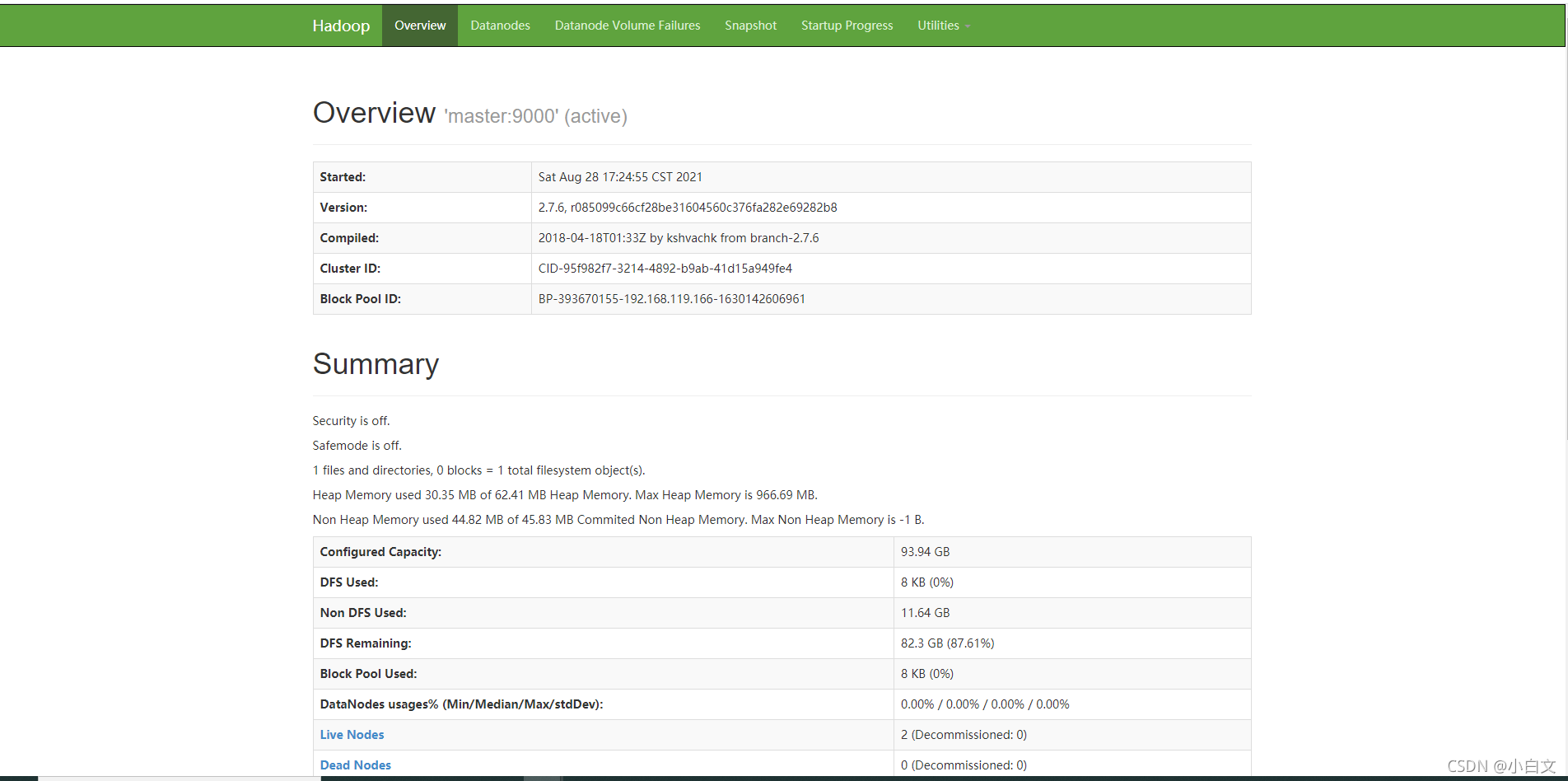
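Instead of reading the `jps` listings by eye, you can check the expected daemon names in a script. This is a sketch. `check_daemons` is a hypothetical helper that reads `jps` output on stdin and reports every expected name that is absent:

```shell
# Check that every daemon named in the arguments appears in jps output on stdin.
# Prints "missing: <name>" for each absent daemon and returns 1 if any are missing.
check_daemons() {
  output=$(cat)
  missing=0
  for daemon in "$@"; do
    # jps prints "<pid> <name>"; match the name column exactly.
    if ! printf '%s\n' "$output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return $missing
}
```

On master, run `jps | check_daemons NameNode SecondaryNameNode ResourceManager`. For a worker, run `ssh node1 jps | check_daemons DataNode NodeManager`. If `jps` is not on the PATH of the non-login SSH shell, call it by its full path, /usr/local/soft/jdk1.8.0_171/bin/jps.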

8. Open the HDFS web UI (NameNode)

http://master:50070

9. Open the YARN web UI (ResourceManager)

http://master:8088