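I. Spark Run Modes

First, how Spark defines the Driver and the Executor:

- Driver: the process that runs the Application's main() function and creates the SparkContext (the entry point of the application). It submits jobs to the Cluster Manager and communicates with the executors in the cluster.

- Executor: a process launched on a worker node that runs tasks in a thread pool. Each Application has its own independent set of Executors.

Spark supports several run modes: local, standalone, YARN, Mesos, and Kubernetes (k8s):

1. local mode

- local[N] mode

This is the single-machine local[N] mode, which uses multiple threads on one machine to simulate Spark's distributed computation. It is typically used to check that the logic of an application under development is correct. N is the number of threads to use, each thread providing one core. If N is omitted (plain local), a single thread with one core is used.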

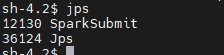

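Submitting a Spark program in this mode creates only one SparkSubmit process. That single process is at once the client that submits the job, the Spark driver, and the Executor that runs the Spark tasks.

The Spark web UI confirms this: in local mode no separate executor process is started, and every task runs inside the driver.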
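To make the meaning of the master string concrete, here is a minimal Scala sketch of how a local or local[N] master could be mapped to a thread count. The names `LocalMasterSketch` and `localThreads` and the regular expression are illustrative assumptions, not Spark's own parsing code; the sketch also assumes that `local[*]` means one thread per available core.

```scala
object LocalMasterSketch {
  // Hypothetical pattern for "local[N]" and "local[*]"; written for illustration only.
  private val LocalN = """local\[([0-9]+|\*)\]""".r

  /** Returns the number of worker threads implied by a local master string, if it is one. */
  def localThreads(master: String): Option[Int] = master match {
    case "local"     => Some(1) // no N given: a single thread with one core
    case LocalN("*") => Some(Runtime.getRuntime.availableProcessors())
    case LocalN(n)   => Some(n.toInt)
    case _           => None // not a local[N]-style master
  }
}
```

For example, `LocalMasterSketch.localThreads("local[4]")` yields `Some(4)`, while a non-local master such as `yarn` yields `None`.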

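- local-cluster mode

Besides local[N], a single machine can also run local-cluster mode, which simulates a pseudo-distributed cluster on one host. It is likewise used mostly to verify application logic, or to use Spark's computing framework when few resources are available.

To use it, pass local-cluster[x,y,z] as the master when submitting the application: x is the number of executors to start, and y and z are the number of cores and the amount of memory for each executor. For example: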

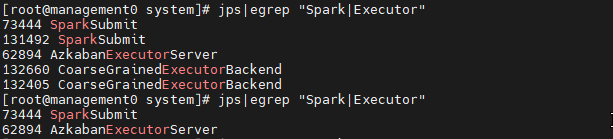

spark-submit --master local-cluster[2,2,1024] --conf spark.dynamicAllocation.enabled=false --class org.apache.spark.examples.SparkPi /usr/hdp/3.0.1.0-187/spark2/examples/jars/spark-examples_2.11-2.4.0-cdh6.2.0.jar

This produces the following processes:

SparkSubmit acts as both the client and the driver, while CoarseGrainedExecutorBackend is the Executor process that gets started. The Spark job UI likewise shows one Driver and two Executors.
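The three numbers in local-cluster[x,y,z] can be pulled apart with a small parser. The sketch below is only an illustration: `LocalClusterSketch`, `LocalClusterSpec`, and the regular expression are hypothetical names, and treating the third value as megabytes is an assumption based on the 1024 used in the example command.

```scala
object LocalClusterSketch {
  /** Parsed form of local-cluster[x,y,z]; memory is assumed to be in MB. */
  final case class LocalClusterSpec(executors: Int, coresPerExecutor: Int, memoryPerExecutorMb: Int)

  // Hypothetical pattern that tolerates spaces around the commas.
  private val LocalCluster =
    """local-cluster\[\s*([0-9]+)\s*,\s*([0-9]+)\s*,\s*([0-9]+)\s*\]""".r

  /** Returns the parsed spec, or None when the string is not a local-cluster master. */
  def parse(master: String): Option[LocalClusterSpec] = master match {
    case LocalCluster(x, y, z) => Some(LocalClusterSpec(x.toInt, y.toInt, z.toInt))
    case _                     => None
  }
}
```

With the command above, `LocalClusterSketch.parse("local-cluster[2,2,1024]")` describes two executors with two cores and 1024 units of memory each, which matches the two Executors seen in the UI.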

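2. standalone mode

This is Spark's built-in distributed cluster mode. It does not depend on YARN, Mesos, or k8s for scheduling; Spark manages resources and schedules tasks itself. Using this mode requires starting Spark's Master and Worker processes first. Start commands:

start-master.sh    // starts the Spark master process, which handles resource allocation, status monitoring, and so on

start-slave.sh -h hostname url:master    // starts a worker process, which launches executors and runs the actual tasks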

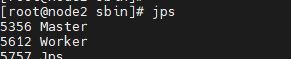

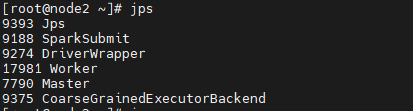

Once the services are up, the corresponding process names are Master and Worker:

After the Master starts, it serves a UI page where submitted jobs can be inspected:

Submit a job with:

spark-submit --master spark://node2:7077 --conf spark.eventLog.enabled=false --class org.apache.spark.examples.SparkPi /usr/hdp/3.0.1.0-187/Spark2/spark2/examples/jars/spark-examples_2.11-2.4.0-cdh6.2.0.jar

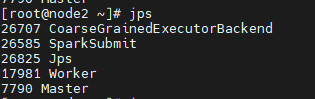

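No deploy mode is specified in this submission, so it defaults to client mode; cluster mode can be selected with --deploy-mode cluster. In client mode the SparkSubmit and CoarseGrainedExecutorBackend processes are started:

- The Master process acts as the cluster manager and manages the resources requested by the application;

- SparkSubmit acts as the client and runs the driver program;

- CoarseGrainedExecutorBackend runs the application's tasks concurrently;

When a job is submitted in cluster mode, a separate DriverWrapper process is started on a worker node to run the Driver:

- SparkSubmit acts only as the client;

- DriverWrapper is the Driver process and initializes the SparkSession, SparkContext, and so on;

- CoarseGrainedExecutorBackend runs the application's tasks concurrently;

3. YARN mode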

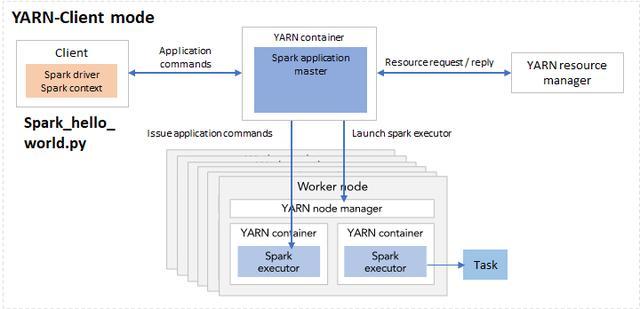

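YARN mode is currently the most widely used. It integrates well with the Hadoop ecosystem and shares one resource scheduler with other frameworks such as MapReduce and Flink. Job submission again supports both the client and cluster deploy modes; the two diagrams below show the difference.

The difference between yarn-cluster and yarn-client mode comes down to what the Application Master process does:

- In yarn-cluster mode the driver runs inside the AM (Application Master), which requests resources from YARN and supervises the job. Once the job has been submitted, the client can be closed and the job keeps running on YARN. For that reason yarn-cluster mode is not suitable for interactive jobs.

- In yarn-client mode the Application Master only requests executors from YARN; the client communicates with the allocated containers to schedule their work, which means the client cannot go away.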

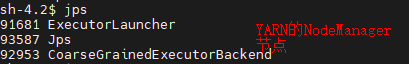

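After a job is submitted in client mode, the following processes are started:

- SparkSubmit acts as the client and runs the driver program;

- ContainerLocalizer is the thread/process that actually performs resource localization when YARN starts a container, for example for the ExecutorLauncher and ApplicationMaster processes;

- ExecutorLauncher (the Application Master in client mode) is the process that starts the containers running CoarseGrainedExecutorBackend;

- CoarseGrainedExecutorBackend is the process that actually runs a Spark Executor in YARN mode.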

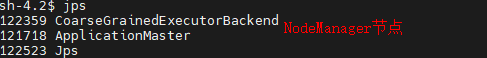

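After a job is submitted in cluster mode, the following processes are started:

- SparkSubmit acts as the client;

- ContainerLocalizer is the thread/process that actually performs resource localization when YARN starts a container, for example for the ExecutorLauncher and ApplicationMaster processes;

- ApplicationMaster (1) creates a Driver thread that runs the user's main class (during which the SparkContext and so on are initialized); (2) registers with the cluster manager, the ResourceManager, and requests resources; (3) once resources are granted, sends launch commands to one or more NodeManagers to start CoarseGrainedExecutorBackend processes inside containers;

- CoarseGrainedExecutorBackend is the process that actually runs a Spark Executor in YARN mode.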
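The process lists for standalone and YARN can be condensed into a small lookup. This is only a summary sketch of the lists above; `ProcessLayoutSketch` and its members are hypothetical names, and the returned strings are the process names as they appear in the lists.

```scala
object ProcessLayoutSketch {
  sealed trait Manager
  case object Standalone extends Manager
  case object Yarn extends Manager

  sealed trait DeployMode
  case object Client extends DeployMode
  case object Cluster extends DeployMode

  /** Name of the JVM process that hosts the driver. */
  def driverProcess(manager: Manager, mode: DeployMode): String = (manager, mode) match {
    case (_, Client)           => "SparkSubmit"       // driver runs inside the submitting client
    case (Standalone, Cluster) => "DriverWrapper"     // started on a worker node
    case (Yarn, Cluster)       => "ApplicationMaster" // driver is a thread inside the AM
  }

  /** Name of the YARN Application Master process; None for standalone. */
  def applicationMasterProcess(manager: Manager, mode: DeployMode): Option[String] =
    (manager, mode) match {
      case (Yarn, Client)  => Some("ExecutorLauncher")
      case (Yarn, Cluster) => Some("ApplicationMaster")
      case (Standalone, _) => None
    }

  /** Executors run in the same kind of process in every combination covered here. */
  val executorProcess: String = "CoarseGrainedExecutorBackend"
}
```

Reading it back: only in client mode does the driver share a process with SparkSubmit, which is why the client has to stay alive there.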


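4. Mesos mode

Spark on Mesos has two run modes, coarse-grained and fine-grained; the fine-grained mode has been deprecated since Spark 2.0.

1: Coarse-grained mode: each application's runtime environment consists of one Driver and several Executors. Each Executor holds a certain amount of resources and can run multiple Tasks internally (one per "slot"). All resources for the runtime environment must be acquired before the application's tasks start, and they are held for the entire run even when idle; they are released only after the program finishes.

2: Fine-grained mode: because coarse-grained mode can waste a lot of resources, Spark on Mesos also offers a fine-grained scheduling mode. Like cloud computing today, it is built on the idea of allocating on demand.

Fine-grained mode was the default before Spark 2.0; since 2.0 coarse-grained is the default. Fine-grained mode supports resource preemption: Spark and other frameworks share the same cluster at a very fine granularity, and each application can dynamically acquire more or fewer resources while it runs, depending on how its tasks are going (Mesos dynamic resource allocation). The cost is extra overhead every time a task starts, so this mode is a poor fit for low-latency scenarios such as interactive queries or serving web requests.

Spark on Mesos is rarely used in production, so it is not covered further here.

5. K8s mode

Spark on k8s also supports several modes:

- Standalone: start a long-running cluster on K8s and submit every job to it with spark-submit. This mode runs a Spark standalone cluster inside k8s pods, so it cannot benefit from server-side resource elasticity. It has little advantage over deploying a Spark cluster directly and is rarely used in production, so its usage is not covered here.

- Kubernetes Native: spark-submit submits directly to the K8s API Server; once resources are granted, Pods are started as the Driver and Executors to run the job. See http://spark.apache.org/docs/2.4.6/running-on-kubernetes.html. This approach is the k8s client implementation that the Spark community added in order to support k8s as a resource manager.

- Spark Operator: an operator developed by the k8s community to support Spark. Install Spark Operator first, define spark-app.yaml, then run kubectl apply -f spark-app.yaml. This declarative API and invocation style is the typical way of using K8s. See https://github.com/GoogleCloudPlatform/spark-on-k8s-operator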

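| Aspect | Native | Operator |
| --- | --- | --- |
| Submission | Submitted with spark-submit; Spark runtime parameters and k8s parameters are set through --conf. The drawback is that applications are inconvenient to manage. | Submitted through a yaml configuration; all settings live in the yaml file, one file per Spark app, and commands such as list, kill, restart, and reschedule are provided, which makes management easy. |
| Installation | Installed by following the official site; requires create, list, edit, and delete permissions on k8s pods, and the image must be built by compiling the source yourself, which is a tedious process. | A k8s admin must install incubator/sparkoperator; requires pod create, list, edit, and delete permissions. |
| Implementation | For spark-submit, whether submitting in client or cluster mode, the entry point is an implementation of SparkApplication. In client mode the implementation is JavaMainApplication, which runs the user class by reflection; for a k8s job the clusterManager is KubernetesClusterManager, and this path is no different from submitting to YARN. In cluster mode the entry point for a k8s job is KubernetesClientApplication. The client creates a service whose clusterIP is None, and executors make RPC calls to that service, for example for task-submission interactions. It also creates a ConfigMap with the suffix driver-conf-map, which is mounted as a volume when the Spark driver pod is created; the file's contents are finally passed to the Spark driver via --properties-file when the driver submits the job, which is how settings such as spark.driver.host reach the driver. A ConfigMap with the suffix -hadoop-config is created as well. How, then, does the k8s image tell whether it is running an executor or a driver? It is all in the Dockerfile (configured differently at build time depending on the Hadoop and Kerberos environment) and the entrypoint, whose shell script distinguishes driver from executor. | Uses the k8s CRD controller mechanism: a custom CRD is defined and, using the operator SDK, the controller watches the corresponding create, delete, update, and read events. When it sees a creation event for the CRD, it creates pods according to the settings in the yaml file and submits the Spark job. For implementation details see the spark on k8s operator design document. Submission in cluster and client mode works the same way as in Spark on k8s, because the image reuses the official Spark image. |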

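Spark on k8s still has many rough edges. The known problems are:

Scheduler differences

Resource schedulers can be roughly divided into centralized schedulers and two-level schedulers. A two-level scheduler has a central scheduler that handles coarse-grained resource scheduling, while scheduling for an individual application is done by a partition-level scheduler beneath it. Two-level schedulers tend to support the management and scheduling of large-scale applications well, for example in terms of performance; the obvious drawback is implementation complexity. The same design idea appears in many places, such as the tcmalloc algorithm in memory management and the memory management implementation of Go. The big-data resource schedulers Mesos and YARN can both, to some degree, be classified as two-level schedulers.

A centralized scheduler responds to and decides on every resource request, which inevitably becomes a single-point bottleneck once the cluster grows large. The Kubernetes scheduler differs in one respect, however: it is an upgraded variant, a centralized scheduler based on shared state. Kubernetes caches the resources of the whole cluster locally in the scheduler and, when scheduling, makes an "optimistic" allocation (assume + commit) based on the cached state, which is how the scheduler achieves high performance.

The default Kubernetes scheduler does not match Spark's job-scheduling needs very well. One workable approach is to supply a custom scheduler or rewrite the scheduler outright. For example, Palantir, a big-data company and one of the contributors to the Spark on Kubernetes Native approach, open-sourced its custom scheduler; GitHub repo: https://github.com/palantir/k8s-spark-scheduler.

Shuffle handling

Because the shuffle data of an Executor Pod on Kubernetes is stored in a PV, a failed job has to mount a new PV and recompute from scratch. To address this, Facebook proposed a Remote Shuffle Service, which in short writes shuffle data to a remote location. Intuitively, writing remotely can hardly be faster than writing locally; the benefit of writing remotely is that nothing has to be recomputed on failover, which is useful when a job's data volume is exceptionally large.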

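Cluster size

It is fairly well established that Kubernetes hits a bottleneck when a cluster reaches about five thousand nodes, whereas very early on, when the Spark paper was published, Spark Standalone mode was claimed to support ten thousand nodes. The Kubernetes bottleneck is mainly in the master, for example in etcd, the Raft-based metadata store, and in the apiserver. At the recent KubeCon Shanghai 2019, Alibaba gave a session on improving master performance, "Understanding Scalability and Performance in the Kubernetes Master"; interested readers can look it up.

Pod eviction

In Kubernetes, resources are divided into compressible resources (such as CPU) and incompressible resources (such as memory). When an incompressible resource runs short, some Pods are evicted from the node. One large Chinese company running Spark on Kubernetes saw Spark jobs fail because of insufficient disk I/O, which in turn meant that an entire test set produced no results. How can Spark job Pods (Driver/Executor) be protected from eviction? That is a question of priority, which is supported from 1.10 onward. Priority raises an unavoidable question, though: how should the priority of our applications be set? Conventionally, online or long-running applications get higher priority than batch jobs, but that is clearly not a good fit for Spark jobs.

Job logs

With Spark on YARN, logs can be aggregated and then viewed; on Kubernetes, for now, they can only be viewed through Pod logs. To integrate with the Kubernetes ecosystem, consider using fluentd or filebeat to collect the Driver and Executor Pod logs into ELK.

Prometheus ecosystem

Prometheus, the second project to graduate from CNCF, is essentially the standard for Kubernetes monitoring, but Spark does not currently provide a Prometheus sink. Prometheus also reads data by pulling, which does not suit Spark batch jobs, so the Prometheus pushgateway may be needed.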

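II. Job Submission in Each Mode

This part returns to the source code to look at how a job is submitted and initialized in each mode, considering only the spark-submit path. The second article, on how SparkSubmit submits a job, explained that before submitting, SparkSubmit calls prepareSubmitEnvironment to prepare the environment. Depending on the mode, this method returns the corresponding childArgs, childClasspath, sparkConf, and childMainClass: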

private[deploy] def prepareSubmitEnvironment(

args: SparkSubmitArguments,

conf: Option[HadoopConfiguration] = None)

: (Seq[String], Seq[String], SparkConf, String) = {

// Return values

val childArgs = new ArrayBuffer[String]()

val childClasspath = new ArrayBuffer[String]()

val sparkConf = new SparkConf()

var childMainClass = ""

// Cluster manager for each mode: YARN = 1, STANDALONE = 2, MESOS = 4, LOCAL = 8, KUBERNETES = 16

val clusterManager: Int = args.master match {

case "yarn" => YARN

case "yarn-client" | "yarn-cluster" =>

logWarning(s"Master ${args.master} is deprecated since 2.0." +

" Please use master \"yarn\" with specified deploy mode instead.")

YARN

case m if m.startsWith("spark") => STANDALONE

case m if m.startsWith("mesos") => MESOS

case m if m.startsWith("k8s") => KUBERNETES

case m if m.startsWith("local") => LOCAL

case _ =>

error("Master must either be yarn or start with spark, mesos, k8s, or local")

-1

}

// Set the deploy mode; the default is client. Since Spark 2.0 the yarn-client and yarn-cluster masters are deprecated in favor of --deploy-mode

var deployMode: Int = args.deployMode match {

case "client" | null => CLIENT

case "cluster" => CLUSTER

case _ =>

error("Deploy mode must be either client or cluster")

-1

}

// Normalize the legacy yarn-cluster and yarn-client masters so the code below can treat them uniformly

if (clusterManager == YARN) {

(args.master, args.deployMode) match {

case ("yarn-cluster", null) =>

deployMode = CLUSTER

args.master = "yarn"

case ("yarn-cluster", "client") =>

error("Client deploy mode is not compatible with master \"yarn-cluster\"")

case ("yarn-client", "cluster") =>

error("Cluster deploy mode is not compatible with master \"yarn-client\"")

case (_, mode) =>

args.master = "yarn"

}

// Make sure this Spark build includes the YARN module

if (!Utils.classIsLoadable(YARN_CLUSTER_SUBMIT_CLASS) && !Utils.isTesting) {

error(

"Could not load YARN classes. " +

"This copy of Spark may not have been compiled with YARN support.")

}

}

if (clusterManager == KUBERNETES) {

// Validate the given Kubernetes master URL and return the resolved URL. The "k8s://" prefix is kept on the resolved URL because KubernetesClusterManager uses it in canCreate to decide whether it should handle this master.

args.master = Utils.checkAndGetK8sMasterUrl(args.master)

// Make sure KUBERNETES is included in our build if we're trying to use it

if (!Utils.classIsLoadable(KUBERNETES_CLUSTER_SUBMIT_CLASS) && !Utils.isTesting) {

error(

"Could not load KUBERNETES classes. " +

"This copy of Spark may not have been compiled with KUBERNETES support.")

}

}

// The following combinations are unsupported: standalone cluster mode for Python, standalone cluster mode for R, cluster deploy mode with a local master, and cluster mode for spark-shell, spark-sql, and the Spark Thrift server

(clusterManager, deployMode) match {

case (STANDALONE, CLUSTER) if args.isPython =>

error("Cluster deploy mode is currently not supported for python " +

"applications on standalone clusters.")

case (STANDALONE, CLUSTER) if args.isR =>

error("Cluster deploy mode is currently not supported for R " +

"applications on standalone clusters.")

case (LOCAL, CLUSTER) =>

error("Cluster deploy mode is not compatible with master \"local\"")

case (_, CLUSTER) if isShell(args.primaryResource) =>

error("Cluster deploy mode is not applicable to Spark shells.")

case (_, CLUSTER) if isSqlShell(args.mainClass) =>

error("Cluster deploy mode is not applicable to Spark SQL shell.")

case (_, CLUSTER) if isThriftServer(args.mainClass) =>

error("Cluster deploy mode is not applicable to Spark Thrift server.")

case _ =>

}

// Fill in args.deployMode if it was null, then derive the isYarnCluster, isMesosCluster, isKubernetesCluster, and related flags

(args.deployMode, deployMode) match {

case (null, CLIENT) => args.deployMode = "client"

case (null, CLUSTER) => args.deployMode = "cluster"

case _ =>

}

val isYarnCluster = clusterManager == YARN && deployMode == CLUSTER

val isMesosCluster = clusterManager == MESOS && deployMode == CLUSTER

val isStandAloneCluster = clusterManager == STANDALONE && deployMode == CLUSTER

val isKubernetesCluster = clusterManager == KUBERNETES && deployMode == CLUSTER

val isMesosClient = clusterManager == MESOS && deployMode == CLIENT

if (!isMesosCluster && !isStandAloneCluster) {

// Resolve Maven dependencies, if there are any, and add them to the jars.

// Add them to py-files too, for packages that include Python code

val resolvedMavenCoordinates = DependencyUtils.resolveMavenDependencies(

args.packagesExclusions, args.packages, args.repositories, args.ivyRepoPath,

args.ivySettingsPath)

if (!StringUtils.isBlank(resolvedMavenCoordinates)) {

args.jars = mergeFileLists(args.jars, resolvedMavenCoordinates)

if (args.isPython || isInternal(args.primaryResource)) {

args.pyFiles = mergeFileLists(args.pyFiles, resolvedMavenCoordinates)

}

}

// Install any R packages that may have been passed through --jars or --packages. Spark packages may contain R source code inside the jar.

if (args.isR && !StringUtils.isBlank(args.jars)) {

RPackageUtils.checkAndBuildRPackage(args.jars, printStream, args.verbose)

}

}

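// Build hadoopConf from the --conf settings, including the S3 access key and secret key. Some Spark settings are translated to their Hadoop equivalents, e.g. spark.buffer.size becomes io.file.buffer.size (default 65536)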

args.sparkProperties.foreach { case (k, v) => sparkConf.set(k, v) }

val hadoopConf = conf.getOrElse(SparkHadoopUtil.newConfiguration(sparkConf))

val targetDir = Utils.createTempDir()

// Kerberos is not supported in standalone mode or Mesos cluster mode

if (clusterManager != STANDALONE

&& !isMesosCluster

&& args.principal != null

&& args.keytab != null) {

// In client mode, make sure the keytab is a local path.

if (deployMode == CLIENT && Utils.isLocalUri(args.keytab)) {

args.keytab = new URI(args.keytab).getPath()

}

if (!Utils.isLocalUri(args.keytab)) {

require(new File(args.keytab).exists(), s"Keytab file: ${args.keytab} does not exist")

UserGroupInformation.loginUserFromKeytab(args.principal, args.keytab)

}

}

// Resolve glob path for different resources.

args.jars = Option(args.jars).map(resolveGlobPaths(_, hadoopConf)).orNull

args.files = Option(args.files).map(resolveGlobPaths(_, hadoopConf)).orNull

args.pyFiles = Option(args.pyFiles).map(resolveGlobPaths(_, hadoopConf)).orNull

args.archives = Option(args.archives).map(resolveGlobPaths(_, hadoopConf)).orNull

lazy val secMgr = new SecurityManager(sparkConf)

// In client mode, download remote files.

var localPrimaryResource: String = null

var localJars: String = null

var localPyFiles: String = null

if (deployMode == CLIENT) {

localPrimaryResource = Option(args.primaryResource).map {

// Download the file from the remote location to a local temporary directory. If the input path is already local, it is returned unchanged.

downloadFile(_, targetDir, sparkConf, hadoopConf, secMgr)

}.orNull

localJars = Option(args.jars).map {

downloadFileList(_, targetDir, sparkConf, hadoopConf, secMgr)

}.orNull

localPyFiles = Option(args.pyFiles).map {

downloadFileList(_, targetDir, sparkConf, hadoopConf, secMgr)

}.orNull

}

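// When running on YARN, some remote resources with a scheme need special handling: 1. the Hadoop FileSystem does not support them; 2. the user explicitly bypasses the Hadoop FileSystem with "spark.yarn.dist.forceDownloadSchemes".

// Such resources are downloaded to local disk before being added to YARN's distributed cache. In YARN client mode they have already been downloaded by the code above, so we only need to find the local path and substitute it for the remote one.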

if (clusterManager == YARN) {

val forceDownloadSchemes = sparkConf.get(FORCE_DOWNLOAD_SCHEMES)

def shouldDownload(scheme: String): Boolean = {

forceDownloadSchemes.contains("*") || forceDownloadSchemes.contains(scheme) ||

Try { FileSystem.getFileSystemClass(scheme, hadoopConf) }.isFailure

}

def downloadResource(resource: String): String = {

val uri = Utils.resolveURI(resource)

uri.getScheme match {

case "local" | "file" => resource

case e if shouldDownload(e) =>

val file = new File(targetDir, new Path(uri).getName)

if (file.exists()) {

file.toURI.toString

} else {

downloadFile(resource, targetDir, sparkConf, hadoopConf, secMgr)

}

case _ => uri.toString

}

}

args.primaryResource = Option(args.primaryResource).map { downloadResource }.orNull

args.files = Option(args.files).map { files =>

Utils.stringToSeq(files).map(downloadResource).mkString(",")

}.orNull

args.pyFiles = Option(args.pyFiles).map { pyFiles =>

Utils.stringToSeq(pyFiles).map(downloadResource).mkString(",")

}.orNull

args.jars = Option(args.jars).map { jars =>

Utils.stringToSeq(jars).map(downloadResource).mkString(",")

}.orNull

args.archives = Option(args.archives).map { archives =>

Utils.stringToSeq(archives).map(downloadResource).mkString(",")

}.orNull

}

// For a Python app, set the main class to the appropriate Python runner

if (args.isPython && deployMode == CLIENT) {

if (args.primaryResource == PYSPARK_SHELL) {

args.mainClass = "org.apache.spark.api.python.PythonGatewayServer"

} else {

// If a python file is provided, add it to the child arguments and list of files to deploy.

// Usage: PythonAppRunner <main python file> <extra python files> [app arguments]

args.mainClass = "org.apache.spark.deploy.PythonRunner"

args.childArgs = ArrayBuffer(localPrimaryResource, localPyFiles) ++ args.childArgs

}

if (clusterManager != YARN) {

// The YARN backend handles python files differently, so don't merge the lists.

args.files = mergeFileLists(args.files, args.pyFiles)

}

}

if (localPyFiles != null) {

sparkConf.set("spark.submit.pyFiles", localPyFiles)

}

// For an R app on YARN, add the SparkR package archive and the archive of all built R libraries to archives so that they are distributed with the job

if (args.isR && clusterManager == YARN) {

val sparkRPackagePath = RUtils.localSparkRPackagePath

if (sparkRPackagePath.isEmpty) {

error("SPARK_HOME does not exist for R application in YARN mode.")

}

val sparkRPackageFile = new File(sparkRPackagePath.get, SPARKR_PACKAGE_ARCHIVE)

if (!sparkRPackageFile.exists()) {

error(s"$SPARKR_PACKAGE_ARCHIVE does not exist for R application in YARN mode.")

}

val sparkRPackageURI = Utils.resolveURI(sparkRPackageFile.getAbsolutePath).toString

// Distribute the SparkR package.

// Assigns a symbol link name "sparkr" to the shipped package.

args.archives = mergeFileLists(args.archives, sparkRPackageURI + "#sparkr")

// Distribute the R package archive containing all the built R packages.

if (!RUtils.rPackages.isEmpty) {

val rPackageFile =

RPackageUtils.zipRLibraries(new File(RUtils.rPackages.get), R_PACKAGE_ARCHIVE)

if (!rPackageFile.exists()) {

error("Failed to zip all the built R packages.")

}

val rPackageURI = Utils.resolveURI(rPackageFile.getAbsolutePath).toString

// Assigns a symbol link name "rpkg" to the shipped package.

args.archives = mergeFileLists(args.archives, rPackageURI + "#rpkg")

}

}

// TODO: Support distributing R packages with standalone cluster

if (args.isR && clusterManager == STANDALONE && !RUtils.rPackages.isEmpty) {

error("Distributing R packages with standalone cluster is not supported.")

}

// TODO: Support distributing R packages with mesos cluster

if (args.isR && clusterManager == MESOS && !RUtils.rPackages.isEmpty) {

error("Distributing R packages with mesos cluster is not supported.")

}

// For an R app, set the main class to the R runner

if (args.isR && deployMode == CLIENT) {

if (args.primaryResource == SPARKR_SHELL) {

args.mainClass = "org.apache.spark.api.r.RBackend"

} else {

// If an R file is provided, add it to the child arguments and list of files to deploy.

// Usage: RRunner <main R file> [app arguments]

args.mainClass = "org.apache.spark.deploy.RRunner"

args.childArgs = ArrayBuffer(localPrimaryResource) ++ args.childArgs

args.files = mergeFileLists(args.files, args.primaryResource)

}

}

if (isYarnCluster && args.isR) {

// In yarn-cluster mode for an R app, add primary resource to files

// that can be distributed with the job

args.files = mergeFileLists(args.files, args.primaryResource)

}

// Special flag to avoid deprecation warnings at the client

sys.props("SPARK_SUBMIT") = "true"

// A list of rules mapping each argument to a system property or command-line option in each deploy mode

val options = List[OptionAssigner](

// All cluster managers

OptionAssigner(args.master, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES, confKey = "spark.master"),

OptionAssigner(args.deployMode, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.submit.deployMode"),

OptionAssigner(args.name, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES, confKey = "spark.app.name"),

OptionAssigner(args.ivyRepoPath, ALL_CLUSTER_MGRS, CLIENT, confKey = "spark.jars.ivy"),

OptionAssigner(args.driverMemory, ALL_CLUSTER_MGRS, CLIENT,

confKey = "spark.driver.memory"),

OptionAssigner(args.driverExtraClassPath, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.driver.extraClassPath"),

OptionAssigner(args.driverExtraJavaOptions, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.driver.extraJavaOptions"),

OptionAssigner(args.driverExtraLibraryPath, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.driver.extraLibraryPath"),

// Propagate attributes for dependency resolution at the driver side

OptionAssigner(args.packages, STANDALONE | MESOS, CLUSTER, confKey = "spark.jars.packages"),

OptionAssigner(args.repositories, STANDALONE | MESOS, CLUSTER,

confKey = "spark.jars.repositories"),

OptionAssigner(args.ivyRepoPath, STANDALONE | MESOS, CLUSTER, confKey = "spark.jars.ivy"),

OptionAssigner(args.packagesExclusions, STANDALONE | MESOS,

CLUSTER, confKey = "spark.jars.excludes"),

// Yarn only

OptionAssigner(args.queue, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.queue"),

OptionAssigner(args.numExecutors, YARN, ALL_DEPLOY_MODES,

confKey = "spark.executor.instances"),

OptionAssigner(args.pyFiles, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.pyFiles"),

OptionAssigner(args.jars, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.jars"),

OptionAssigner(args.files, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.files"),

OptionAssigner(args.archives, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.archives"),

OptionAssigner(args.principal, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.principal"),

OptionAssigner(args.keytab, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.keytab"),

// Other options

OptionAssigner(args.executorCores, STANDALONE | YARN | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.executor.cores"),

OptionAssigner(args.executorMemory, STANDALONE | MESOS | YARN | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.executor.memory"),

OptionAssigner(args.totalExecutorCores, STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.cores.max"),

OptionAssigner(args.files, LOCAL | STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.files"),

OptionAssigner(args.jars, LOCAL, CLIENT, confKey = "spark.jars"),

OptionAssigner(args.jars, STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.jars"),

OptionAssigner(args.driverMemory, STANDALONE | MESOS | YARN | KUBERNETES, CLUSTER,

confKey = "spark.driver.memory"),

OptionAssigner(args.driverCores, STANDALONE | MESOS | YARN | KUBERNETES, CLUSTER,

confKey = "spark.driver.cores"),

OptionAssigner(args.supervise.toString, STANDALONE | MESOS, CLUSTER,

confKey = "spark.driver.supervise"),

OptionAssigner(args.ivyRepoPath, STANDALONE, CLUSTER, confKey = "spark.jars.ivy"),

// An internal option used only for spark-shell to add user jars to repl's classloader,

// previously it uses "spark.jars" or "spark.yarn.dist.jars" which now may be pointed to

// remote jars, so adding a new option to only specify local jars for spark-shell internally.

OptionAssigner(localJars, ALL_CLUSTER_MGRS, CLIENT, confKey = "spark.repl.local.jars")

)

// In client mode, launch the application main class directly, and add the main application jar and any added jars (if any) to the classpath

if (deployMode == CLIENT) {

childMainClass = args.mainClass

if (localPrimaryResource != null && isUserJar(localPrimaryResource)) {

childClasspath += localPrimaryResource

}

if (localJars != null) { childClasspath ++= localJars.split(",") }

}

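// Add the main application jar and any added jars to the classpath in case the YARN client needs them. This assumes primaryResource and the user jars are either local or have already been downloaded locally via "spark.yarn.dist.forceDownloadSchemes"; otherwise they are not added to the YARN client's classpath.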

if (isYarnCluster) {

if (isUserJar(args.primaryResource)) {

childClasspath += args.primaryResource

}

if (args.jars != null) { childClasspath ++= args.jars.split(",") }

}

if (deployMode == CLIENT) {

if (args.childArgs != null) { childArgs ++= args.childArgs }

}

// Map all arguments to command-line options or system properties for our chosen mode

for (opt <- options) {

if (opt.value != null &&

(deployMode & opt.deployMode) != 0 &&

(clusterManager & opt.clusterManager) != 0) {

if (opt.clOption != null) { childArgs += (opt.clOption, opt.value) }

if (opt.confKey != null) { sparkConf.set(opt.confKey, opt.value) }

}

}

// For shells, spark.ui.showConsoleProgress can be true by default or set to true by the user.

if (isShell(args.primaryResource) && !sparkConf.contains(UI_SHOW_CONSOLE_PROGRESS)) {

sparkConf.set(UI_SHOW_CONSOLE_PROGRESS, true)

}

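// Add the application jar automatically so the user doesn't have to call sc.addJar. In YARN cluster mode the jar is already distributed on each node as "app.jar". For Python and R files, the primary resource is already distributed as a regular file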

if (!isYarnCluster && !args.isPython && !args.isR) {

var jars = sparkConf.getOption("spark.jars").map(x => x.split(",").toSeq).getOrElse(Seq.empty)

if (isUserJar(args.primaryResource)) {

jars = jars ++ Seq(args.primaryResource)

}

sparkConf.set("spark.jars", jars.mkString(","))

}

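// In standalone cluster mode, use the REST client to submit the application (Spark 1.3+). All Spark parameters are expected to be passed to the client through system properties. If useRest is true, RestSubmissionClientApp is used; otherwise ClientApp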

if (args.isStandaloneCluster) {

if (args.useRest) {

childMainClass = REST_CLUSTER_SUBMIT_CLASS

childArgs += (args.primaryResource, args.mainClass)

} else {

// In legacy standalone cluster mode, use Client as a wrapper around the user class

childMainClass = STANDALONE_CLUSTER_SUBMIT_CLASS

if (args.supervise) { childArgs += "--supervise" }

Option(args.driverMemory).foreach { m => childArgs += ("--memory", m) }

Option(args.driverCores).foreach { c => childArgs += ("--cores", c) }

childArgs += "launch"

childArgs += (args.master, args.primaryResource, args.mainClass)

}

if (args.childArgs != null) {

childArgs ++= args.childArgs

}

}

// Set spark.yarn.isPython to true to mark a PySpark application.

if (clusterManager == YARN) {

if (args.isPython) {

sparkConf.set("spark.yarn.isPython", "true")

}

}

if ((clusterManager == MESOS || clusterManager == KUBERNETES)

&& UserGroupInformation.isSecurityEnabled) {

setRMPrincipal(sparkConf)

}

// In YARN cluster mode, childMainClass is YarnClusterApplication

if (isYarnCluster) {

childMainClass = YARN_CLUSTER_SUBMIT_CLASS

if (args.isPython) {

childArgs += ("--primary-py-file", args.primaryResource)

childArgs += ("--class", "org.apache.spark.deploy.PythonRunner")

} else if (args.isR) {

val mainFile = new Path(args.primaryResource).getName

childArgs += ("--primary-r-file", mainFile)

childArgs += ("--class", "org.apache.spark.deploy.RRunner")

} else {

if (args.primaryResource != SparkLauncher.NO_RESOURCE) {

childArgs += ("--jar", args.primaryResource)

}

childArgs += ("--class", args.mainClass)

}

if (args.childArgs != null) {

args.childArgs.foreach { arg => childArgs += ("--arg", arg) }

}

}

// In Mesos cluster mode, childMainClass is RestSubmissionClientApp

if (isMesosCluster) {

assert(args.useRest, "Mesos cluster mode is only supported through the REST submission API")

childMainClass = REST_CLUSTER_SUBMIT_CLASS

if (args.isPython) {

// Second argument is main class

childArgs += (args.primaryResource, "")

if (args.pyFiles != null) {

sparkConf.set("spark.submit.pyFiles", args.pyFiles)

}

} else if (args.isR) {

// Second argument is main class

childArgs += (args.primaryResource, "")

} else {

childArgs += (args.primaryResource, args.mainClass)

}

if (args.childArgs != null) {

childArgs ++= args.childArgs

}

}

// In k8s cluster mode, childMainClass is KubernetesClientApplication

if (isKubernetesCluster) {

childMainClass = KUBERNETES_CLUSTER_SUBMIT_CLASS

if (args.primaryResource != SparkLauncher.NO_RESOURCE) {

if (args.isPython) {

childArgs ++= Array("--primary-py-file", args.primaryResource)

childArgs ++= Array("--main-class", "org.apache.spark.deploy.PythonRunner")

if (args.pyFiles != null) {

childArgs ++= Array("--other-py-files", args.pyFiles)

}

} else if (args.isR) {

childArgs ++= Array("--primary-r-file", args.primaryResource)

childArgs ++= Array("--main-class", "org.apache.spark.deploy.RRunner")

}

else {

childArgs ++= Array("--primary-java-resource", args.primaryResource)

childArgs ++= Array("--main-class", args.mainClass)

}

} else {

childArgs ++= Array("--main-class", args.mainClass)

}

if (args.childArgs != null) {

args.childArgs.foreach { arg =>

childArgs += ("--arg", arg)

}

}

}

// Load any properties specified through --conf and the default properties file

for ((k, v) <- args.sparkProperties) {

sparkConf.setIfMissing(k, v)

}

// Ignore invalid spark.driver.host in cluster modes.

if (deployMode == CLUSTER) {

sparkConf.remove("spark.driver.host")

}

// Resolve paths in certain spark properties

val pathConfigs = Seq(

"spark.jars",

"spark.files",

"spark.yarn.dist.files",

"spark.yarn.dist.archives",

"spark.yarn.dist.jars")

pathConfigs.foreach { config =>

// Replace old URIs with resolved URIs, if they exist

sparkConf.getOption(config).foreach { oldValue =>

sparkConf.set(config, Utils.resolveURIs(oldValue))

}

}

// Resolve and format python file paths properly before adding them to the PYTHONPATH.

// The resolving part is redundant in the case of --py-files, but necessary if the user

// explicitly sets `spark.submit.pyFiles` in his/her default properties file.

sparkConf.getOption("spark.submit.pyFiles").foreach { pyFiles =>

val resolvedPyFiles = Utils.resolveURIs(pyFiles)

val formattedPyFiles = if (!isYarnCluster && !isMesosCluster) {

PythonRunner.formatPaths(resolvedPyFiles).mkString(",")

} else {

// Ignoring formatting python path in yarn and mesos cluster mode, these two modes

// support dealing with remote python files, they could distribute and add python files

// locally.

resolvedPyFiles

}

sparkConf.set("spark.submit.pyFiles", formattedPyFiles)

}

(childArgs, childClasspath, sparkConf, childMainClass)

}

childMainClass is the class that is invoked by reflection when the job is actually submitted. In summary, the main class for each mode is:

| Mode | childMainClass |
| --- | --- |
| client (any cluster manager) | 1. pyspark-shell: org.apache.spark.api.python.PythonGatewayServer; 2. other PySpark submissions: org.apache.spark.deploy.PythonRunner; 3. sparkr-shell: org.apache.spark.api.r.RBackend; 4. other SparkR submissions: org.apache.spark.deploy.RRunner. In all other cases it is the class given with --class at submission, which is finally wrapped in JavaMainApplication and invoked by reflection |
| Standalone cluster mode | useRest set to true: RestSubmissionClientApp; useRest set to false: ClientApp |
| YARN cluster mode | org.apache.spark.deploy.yarn.YarnClusterApplication |
| Mesos cluster mode | RestSubmissionClientApp |
| Kubernetes cluster mode | org.apache.spark.deploy.k8s.submit.KubernetesClientApplication |
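The decision logic of prepareSubmitEnvironment can be boiled down to three steps: map the master to a cluster-manager flag, map the deploy mode to a flag, then use those flags both to filter option rules (a bitmask test) and to pick childMainClass. The sketch below is a simplified model of that flow, not the Spark source: `SubmitModeSketch` and its function names are hypothetical, the deploy-mode values 1 and 2 are an assumption, the Python/R client cases are left out, and the REST and legacy client classes are given by the short names used in the table.

```scala
object SubmitModeSketch {
  // Cluster manager flags, using the values quoted in the listing's comment.
  val YARN = 1
  val STANDALONE = 2
  val MESOS = 4
  val LOCAL = 8
  val KUBERNETES = 16

  // Deploy mode flags; the concrete values are an assumption of this sketch.
  val CLIENT = 1
  val CLUSTER = 2

  /** Maps a --master value to a cluster manager flag. */
  def clusterManager(master: String): Int = master match {
    case "yarn" | "yarn-client" | "yarn-cluster" => YARN
    case m if m.startsWith("spark") => STANDALONE
    case m if m.startsWith("mesos") => MESOS
    case m if m.startsWith("k8s")   => KUBERNETES
    case m if m.startsWith("local") => LOCAL
    case other => throw new IllegalArgumentException(s"Unsupported master: $other")
  }

  /** Maps --deploy-mode to a flag; a missing value (null) means client. */
  def deployMode(mode: String): Int = mode match {
    case "client" | null => CLIENT
    case "cluster"       => CLUSTER
    case other => throw new IllegalArgumentException(s"Unsupported deploy mode: $other")
  }

  /** An option rule applies only if both of its bitmasks include the chosen mode. */
  def applies(ruleManagers: Int, ruleModes: Int, manager: Int, mode: Int): Boolean =
    (ruleManagers & manager) != 0 && (ruleModes & mode) != 0

  /** Picks the class to launch, following the summary table. */
  def childMainClass(manager: Int, mode: Int, userMainClass: String, useRest: Boolean): String =
    (manager, mode) match {
      case (LOCAL, CLUSTER)      => throw new IllegalArgumentException("local has no cluster mode")
      case (_, CLIENT)           => userMainClass
      case (YARN, CLUSTER)       => "org.apache.spark.deploy.yarn.YarnClusterApplication"
      case (KUBERNETES, CLUSTER) => "org.apache.spark.deploy.k8s.submit.KubernetesClientApplication"
      case (STANDALONE, CLUSTER) => if (useRest) "RestSubmissionClientApp" else "ClientApp"
      case (MESOS, CLUSTER)      => "RestSubmissionClientApp"
      case _ => throw new IllegalArgumentException(s"Unknown combination: ($manager, $mode)")
    }
}
```

Using powers of two for the flags is what lets one rule cover several managers at once: a rule declared for `STANDALONE | MESOS` passes the `applies` test for either manager and fails for YARN.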

Taking YARN cluster mode as an example, the submit command is:

spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi /usr/hdp/3.0.1.0-187/spark2/examples/jars/spark-examples_2.11-2.4.0-cdh6.2.0.jar

When submitting, SparkSubmit checks whether childMainClass is a subclass of SparkApplication and, if so, calls its start method directly:

// YarnClusterApplication is a subclass of SparkApplication; its start method calls Client.run()

private[spark] class YarnClusterApplication extends SparkApplication {

override def start(args: Array[String], conf: SparkConf): Unit = {

// SparkSubmit would use yarn cache to distribute files & jars in yarn mode,

// so remove them from sparkConf here for yarn mode.

conf.remove("spark.jars")

conf.remove("spark.files")

new Client(new ClientArguments(args), conf).run()

}

}
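The "is it a SparkApplication?" check is an ordinary reflection test on the loaded class. The sketch below illustrates the idea with stand-in types: `SubmitApp`, `YarnLikeApp`, and `plan` are hypothetical names invented for this example and are not Spark classes; the non-matching branch corresponds to wrapping a plain main() class, as the summary table describes for JavaMainApplication.

```scala
object DispatchSketch {
  /** Hypothetical stand-in for the SparkApplication interface. */
  trait SubmitApp {
    def start(args: Seq[String]): Unit
  }

  /** Hypothetical stand-in for a submit class such as YarnClusterApplication. */
  final class YarnLikeApp extends SubmitApp {
    override def start(args: Seq[String]): Unit = ()
  }

  sealed trait Launch
  case object StartDirectly extends Launch  // class implements the interface: call start()
  case object WrapMainMethod extends Launch // plain class: wrap it and invoke main() by reflection

  /** Decides how a loaded class should be launched. */
  def plan(klass: Class[_]): Launch =
    if (classOf[SubmitApp].isAssignableFrom(klass)) StartDirectly else WrapMainMethod
}
```

Here `DispatchSketch.plan(classOf[DispatchSketch.YarnLikeApp])` selects `StartDirectly`, while any class that does not implement the interface falls through to `WrapMainMethod`.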

// Client.scala

def run(): Unit = {

// Submit the application; this is the key step

this.appId = submitApplication()

if (!launcherBackend.isConnected() && fireAndForget) {

val report = getApplicationReport(appId)

val state = report.getYarnApplicationState

logInfo(s"Application report for $appId (state: $state)")

logInfo(formatReportDetails(report))

if (state == YarnApplicationState.FAILED || state == YarnApplicationState.KILLED) {

throw new SparkException(s"Application $appId finished with status: $state")

}

} else {

val YarnAppReport(appState, finalState, diags) = monitorApplication(appId)

if (appState == YarnApplicationState.FAILED || finalState == FinalApplicationStatus.FAILED) {

diags.foreach { err =>

logError(s"Application diagnostics message: $err")

}

throw new SparkException(s"Application $appId finished with failed status")

}

if (appState == YarnApplicationState.KILLED || finalState == FinalApplicationStatus.KILLED) {

throw new SparkException(s"Application $appId is killed")

}

if (finalState == FinalApplicationStatus.UNDEFINED) {

throw new SparkException(s"The final status of application $appId is undefined")

}

}

}

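// Submit an application running the ApplicationMaster to the ResourceManager. The YARN API provides a convenience method (YarnClient#createApplication) for creating applications and setting up the application submission context.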

def submitApplication(): ApplicationId = {

ResourceRequestHelper.validateResources(sparkConf)

var appId: ApplicationId = null

try {

launcherBackend.connect()

// Initialize and start the YARN client

yarnClient.init(hadoopConf)

yarnClient.start()

logInfo("Requesting a new application from cluster with %d NodeManagers"

.format(yarnClient.getYarnClusterMetrics.getNumNodeManagers))

// Create a new YARN application

val newApp = yarnClient.createApplication()

val newAppResponse = newApp.getNewApplicationResponse()

appId = newAppResponse.getApplicationId()

new CallerContext("CLIENT", sparkConf.get(APP_CALLER_CONTEXT),

Option(appId.toString)).setCurrentContext()

// Verify whether the cluster has enough resources for our AM

verifyClusterResources(newAppResponse)

// Set up the context for launching the AM; createContainerLaunchContext prepares the launch environment, the Java options, and the command that starts the AM.

val containerContext = createContainerLaunchContext(newAppResponse)

val appContext = createApplicationSubmissionContext(newApp, containerContext)

// Finally, submit and monitor the application

logInfo(s"Submitting application $appId to ResourceManager")

yarnClient.submitApplication(appContext)

launcherBackend.setAppId(appId.toString)

reportLauncherState(SparkAppHandle.State.SUBMITTED)

appId

} catch {

case e: Throwable =>

if (appId != null) {

cleanupStagingDir(appId)

}

throw e

}

}

private def createContainerLaunchContext(newAppResponse: GetNewApplicationResponse)

: ContainerLaunchContext = {

logInfo("Setting up container launch context for our AM")

val appId = newAppResponse.getApplicationId

...

// Set up environment variables, dependencies, and so on

val launchEnv = setupLaunchEnv(appStagingDirPath, pySparkArchives)

...

val javaOpts = ListBuffer[String]()

// Set the environment variable through a command prefix

// to append to the existing value of the variable

var prefixEnv: Option[String] = None

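// Set the AM heap size: in cluster mode the AM hosts the driver, so this is the driver memory (spark.driver.memory); in client mode it comes from spark.yarn.am.memory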

javaOpts += "-Xmx" + amMemory + "m"

// Point java.io.tmpdir at the container's temporary directory

val tmpDir = new Path(Environment.PWD.$$(), YarnConfiguration.DEFAULT_CONTAINER_TEMP_DIR)

javaOpts += "-Djava.io.tmpdir=" + tmpDir

// TODO: Remove once cpuset version is pushed out.

// The context is, default gc for server class machines ends up using all cores to do gc -

// hence if there are multiple containers in same node, Spark GC affects all other containers'

// performance (which can be that of other Spark containers)

// Instead of using this, rely on cpusets by YARN to enforce "proper" Spark behavior in

// multi-tenant environments. Not sure how default Java GC behaves if it is limited to subset

// of cores on a node.

val useConcurrentAndIncrementalGC = launchEnv.get("SPARK_USE_CONC_INCR_GC").exists(_.toBoolean)

if (useConcurrentAndIncrementalGC) {

// In our expts, using (default) throughput collector has severe perf ramifications in

// multi-tenant machines

javaOpts += "-XX:+UseConcMarkSweepGC"

javaOpts += "-XX:MaxTenuringThreshold=31"

javaOpts += "-XX:SurvivorRatio=8"

javaOpts += "-XX:+CMSIncrementalMode"

javaOpts += "-XX:+CMSIncrementalPacing"

javaOpts += "-XX:CMSIncrementalDutyCycleMin=0"

javaOpts += "-XX:CMSIncrementalDutyCycle=10"

}

// Include driver-specific java options if we are launching a driver

if (isClusterMode) {

// Driver JVM options are taken from spark.driver.extraJavaOptions

sparkConf.get(DRIVER_JAVA_OPTIONS).foreach { opts =>

javaOpts ++= Utils.splitCommandString(opts)

.map(Utils.substituteAppId(_, appId.toString))

.map(YarnSparkHadoopUtil.escapeForShell)

}

val libraryPaths = Seq(sparkConf.get(DRIVER_LIBRARY_PATH),

sys.props.get("spark.driver.libraryPath")).flatten

if (libraryPaths.nonEmpty) {

prefixEnv = Some(createLibraryPathPrefix(libraryPaths.mkString(File.pathSeparator),

sparkConf))

}

if (sparkConf.get(AM_JAVA_OPTIONS).isDefined) {

logWarning(s"${AM_JAVA_OPTIONS.key} will not take effect in cluster mode")

}

} else {

// spark.yarn.am.extraJavaOptions only takes effect in yarn-client mode.

sparkConf.get(AM_JAVA_OPTIONS).foreach { opts =>

if (opts.contains("-Dspark")) {

val msg = s"${AM_JAVA_OPTIONS.key} is not allowed to set Spark options (was '$opts')."

throw new SparkException(msg)

}

if (opts.contains("-Xmx")) {

val msg = s"${AM_JAVA_OPTIONS.key} is not allowed to specify max heap memory settings " +

s"(was '$opts'). Use spark.yarn.am.memory instead."

throw new SparkException(msg)

}

javaOpts ++= Utils.splitCommandString(opts)

.map(Utils.substituteAppId(_, appId.toString))

.map(YarnSparkHadoopUtil.escapeForShell)

}

sparkConf.get(AM_LIBRARY_PATH).foreach { paths =>

prefixEnv = Some(createLibraryPathPrefix(paths, sparkConf))

}

}

// For log4j configuration to reference

javaOpts += ("-Dspark.yarn.app.container.log.dir=" + ApplicationConstants.LOG_DIR_EXPANSION_VAR)

// Resolve the user application's main class

val userClass =

if (isClusterMode) {

Seq("--class", YarnSparkHadoopUtil.escapeForShell(args.userClass))

} else {

Nil

}

val userJar =

if (args.userJar != null) {

Seq("--jar", args.userJar)

} else {

Nil

}

val primaryPyFile =

if (isClusterMode && args.primaryPyFile != null) {

Seq("--primary-py-file", new Path(args.primaryPyFile).getName())

} else {

Nil

}

val primaryRFile =

if (args.primaryRFile != null) {

Seq("--primary-r-file", args.primaryRFile)

} else {

Nil

}

// The AM class is ApplicationMaster in cluster mode and ExecutorLauncher in client mode

val amClass =

if (isClusterMode) {

Utils.classForName("org.apache.spark.deploy.yarn.ApplicationMaster").getName

} else {

Utils.classForName("org.apache.spark.deploy.yarn.ExecutorLauncher").getName

}

if (args.primaryRFile != null && args.primaryRFile.endsWith(".R")) {

args.userArgs = ArrayBuffer(args.primaryRFile) ++ args.userArgs

}

val userArgs = args.userArgs.flatMap { arg =>

Seq("--arg", YarnSparkHadoopUtil.escapeForShell(arg))

}

val amArgs =

Seq(amClass) ++ userClass ++ userJar ++ primaryPyFile ++ primaryRFile ++ userArgs ++

Seq("--properties-file",

buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, SPARK_CONF_FILE)) ++

Seq("--dist-cache-conf",

buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, DIST_CACHE_CONF_FILE))

// Command for the ApplicationMaster

val commands = prefixEnv ++

Seq(Environment.JAVA_HOME.$$() + "/bin/java", "-server") ++

javaOpts ++ amArgs ++

Seq(

"1>", ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/stdout",

"2>", ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/stderr")

// TODO: it would be nicer to just make sure there are no null commands here

val printableCommands = commands.map(s => if (s == null) "null" else s).toList

amContainer.setCommands(printableCommands.asJava)

logDebug("===============================================================================")

logDebug("YARN AM launch context:")

logDebug(s" user class: ${Option(args.userClass).getOrElse("N/A")}")

logDebug(" env:")

if (log.isDebugEnabled) {

Utils.redact(sparkConf, launchEnv.toSeq).foreach { case (k, v) =>

logDebug(s" $k -> $v")

}

}

logDebug(" resources:")

localResources.foreach { case (k, v) => logDebug(s" $k -> $v")}

logDebug(" command:")

logDebug(s" ${printableCommands.mkString(" ")}")

logDebug("===============================================================================")

// send the acl settings into YARN to control who has access via YARN interfaces

val securityManager = new SecurityManager(sparkConf)

amContainer.setApplicationACLs(

YarnSparkHadoopUtil.getApplicationAclsForYarn(securityManager).asJava)

setupSecurityToken(amContainer)

amContainer

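}

The key point in this code is that the Application is created through YarnClient, and that createContainerLaunchContext builds the java command used to launch the AppMaster. The main class wrapped in that command depends on the deploy mode: in cluster mode it is org.apache.spark.deploy.yarn.ApplicationMaster, and in client mode it is org.apache.spark.deploy.yarn.ExecutorLauncher. This example uses cluster mode, and the resulting java command is: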

LD_LIBRARY_PATH=\"/usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64:$LD_LIBRARY_PATH\" {{JAVA_HOME}}/bin/java -server -Xmx1024m -Djava.io.tmpdir={{PWD}}/tmp '-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=5055' -Dspark.yarn.app.container.log.dir=<LOG_DIR> org.apache.spark.deploy.yarn.ApplicationMaster --class 'org.apache.spark.examples.SparkPi' --jar file:/usr/hdp/3.0.1.0-187/spark2/examples/jars/spark-examples_2.11-2.4.0-cdh6.2.0.jar --properties-file {{PWD}}/__spark_conf__/__spark_conf__.properties --dist-cache-conf {{PWD}}/__spark_conf__/__spark_dist_cache__.properties 1> <LOG_DIR>/stdout 2> <LOG_DIR>/stderr
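To make the shape of that command easier to see, here is a minimal sketch of how the pieces are concatenated. It is a simplification under stated assumptions, not Spark's implementation: AmCommandSketch, amClass and buildCommand are hypothetical names, only the heap and temp-directory options are modelled, and the {{JAVA_HOME}}, {{PWD}} and <LOG_DIR> placeholders are copied literally from the command above rather than expanded. The two class names are the ones that appear in the excerpt.

```scala
object AmCommandSketch {
  // Class names come from the excerpt; the selection mirrors the amClass branch.
  def amClass(isClusterMode: Boolean): String =
    if (isClusterMode) "org.apache.spark.deploy.yarn.ApplicationMaster"
    else "org.apache.spark.deploy.yarn.ExecutorLauncher"

  // Hypothetical helper: assembles java + JVM options + AM class + AM arguments + log redirects.
  def buildCommand(
      isClusterMode: Boolean,
      amMemoryMb: Int,
      userClass: String,
      userJar: Option[String]): Seq[String] = {
    // Only two of the JVM options from the excerpt are modelled here.
    val javaOpts = Seq(s"-Xmx${amMemoryMb}m", "-Djava.io.tmpdir={{PWD}}/tmp")
    // --class is only passed in cluster mode, where the AM runs the user code itself.
    val userClassArgs = if (isClusterMode) Seq("--class", userClass) else Nil
    val userJarArgs = userJar.toSeq.flatMap(jar => Seq("--jar", jar))

    Seq("{{JAVA_HOME}}/bin/java", "-server") ++
      javaOpts ++
      Seq(amClass(isClusterMode)) ++ userClassArgs ++ userJarArgs ++
      Seq("1>", "<LOG_DIR>/stdout", "2>", "<LOG_DIR>/stderr")
  }
}
```

Calling AmCommandSketch.buildCommand(isClusterMode = true, 1024, "org.apache.spark.examples.SparkPi", Some("app.jar")).mkString(" ") gives a line with the same overall layout as the real command, while passing isClusterMode = false swaps in ExecutorLauncher and drops the --class argument.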

Looking at the ApplicationMaster code, the main function first constructs an ApplicationMaster object and then calls its run method:

def main(args: Array[String]): Unit = {

SignalUtils.registerLogger(log)

val amArgs = new ApplicationMasterArguments(args)

// Construct the ApplicationMaster

master = new ApplicationMaster(amArgs)

// Handle the Kerberos case: if a principal is configured, log in from the keytab

val ugi = master.sparkConf.get(PRINCIPAL) match {

case Some(principal) =>

val originalCreds = UserGroupInformation.getCurrentUser().getCredentials()

SparkHadoopUtil.get.loginUserFromKeytab(principal, master.sparkConf.get(KEYTAB).orNull)

val newUGI = UserGroupInformation.getCurrentUser()

// Transfer the original user's tokens to the new user, since it may contain needed tokens

// (such as those user to connect to YARN).

newUGI.addCredentials(originalCreds)

newUGI

case _ =>

SparkHadoopUtil.get.createSparkUser()

}

// Call the run method

ugi.doAs(new PrivilegedExceptionAction[Unit]() {

override def run(): Unit = System.exit(master.run())

})

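}

The run method also distinguishes between client mode and cluster mode: cluster mode goes through runDriver, while client mode goes through runExecutorLauncher. The difference between the two is that runDriver has an extra step, startUserApplication, which launches the user application by creating the Driver thread and invoking the user's main class through reflection: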

final def run(): Int = {

try {

// Set cluster-mode-specific properties

...

if (isClusterMode) {

// runDriver additionally calls startUserApplication to launch the main class, then calls createAllocator to request resources

runDriver()

} else {

runExecutorLauncher()

}

...

}
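The cluster-mode branch relies on a handshake between two threads: the AM starts the user code on a separate thread and then blocks, with a timeout, until that thread has published its context. The sketch below imitates only that handshake with the Scala standard library. FakeContext and DriverHandshakeSketch are hypothetical names, the host and port values are made up, and the user thread completes the promise immediately instead of running a real main class.

```scala
import java.util.concurrent.TimeUnit
import scala.concurrent.{Await, Promise}
import scala.concurrent.duration.Duration

// Hypothetical stand-in for the context the user code publishes; not a Spark class.
final case class FakeContext(host: String, port: Int)

object DriverHandshakeSketch {
  // Starts a "user" thread and waits up to waitMillis for it to publish a context.
  def startAndAwait(waitMillis: Long): FakeContext = {
    val contextPromise = Promise[FakeContext]()

    // Stand-in for the thread that would run the user's main class.
    val userThread = new Thread(new Runnable {
      override def run(): Unit = {
        // Real user code would build its context here; this sketch publishes a fixed value.
        contextPromise.success(FakeContext("driver-host", 35000))
      }
    })
    userThread.setName("Driver")
    userThread.start()

    // Blocks until the promise is completed; throws a TimeoutException if it is not in time.
    val ctx = Await.result(contextPromise.future, Duration(waitMillis, TimeUnit.MILLISECONDS))
    userThread.join()
    ctx
  }
}
```

Using a promise keeps the waiting side free of polling: the AM thread wakes up as soon as the user thread publishes its value, and the timeout bounds how long a misbehaving user class can stall startup.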

private def runDriver(): Unit = {

setupRmProxy(None)

// Look up the class named by --class and start the Driver thread that invokes it through reflection

userClassThread = startUserApplication()

// This a bit hacky, but we need to wait until the spark.driver.port property has

// been set by the Thread executing the user class.

logInfo("Waiting for spark context initialization...")

val totalWaitTime = sparkConf.get(AM_MAX_WAIT_TIME)

try {

val sc = ThreadUtils.awaitResult(sparkContextPromise.future,

Duration(totalWaitTime, TimeUnit.MILLISECONDS))

if (sc != null) {

rpcEnv = sc.env.rpcEnv

val userConf = sc.getConf

val host = userConf.get("spark.driver.host")

val port = userConf.get("spark.driver.port").toInt

// Register the AM; YarnRMClient#registerApplicationMaster registers the actual AppMaster

registerAM(host, port, userConf, sc.ui.map(_.webUrl))

val driverRef = rpcEnv.setupEndpointRef(

RpcAddress(host, port),

YarnSchedulerBackend.ENDPOINT_NAME)

// Request executor resources through YarnAllocator; this is covered in detail in the next section

createAllocator(driverRef, userConf)

...

}