I. Connecting to the Network

1. Set the host IP address

vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static # change the original mode to static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=3c40f6ea-1fbb-4c93-aab5-2c1d7951dcf8

DEVICE=ens33

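ONBOOT=yes # change no to yes

In VMware, open Edit > Virtual Network Editor > VMnet8 to look up the IP address range, subnet mask, and gateway.

IPADDR=192.168.255.128 # see DHCP Settings; any address in the 128~254 range works

NETMASK=255.255.255.0 # see DHCP Settings

GATEWAY=192.168.255.2 # see NAT Settings

On the Windows host, press Win+R and run cmd.

In the command window, run ipconfig /all to find the DNS server addresses (mine are from the wireless LAN adapter).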

DNS1=10.100.0.6

DNS2=10.40.235.2

After all of the above is applied, check whether the DNS addresses were added to /etc/resolv.conf; if not, add them manually.
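
The static-IP settings above are easy to get subtly wrong, so a quick check before restarting the network can help. The sketch below is not part of the original steps: `check_static_ifcfg` is a hypothetical helper name, and the list of required keys is an assumption based on the settings shown in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original notes): report which of the
# static-IP settings are missing or wrong in an ifcfg file.
check_static_ifcfg() {
    local file=$1 status=0 key
    # BOOTPROTO must be static and ONBOOT must be yes (a trailing comment is allowed).
    grep -Eq '^BOOTPROTO="?static"?([[:space:]]|$)' "$file" \
        || { echo "BOOTPROTO is not static"; status=1; }
    grep -Eq '^ONBOOT="?yes"?([[:space:]]|$)' "$file" \
        || { echo "ONBOOT is not yes"; status=1; }
    # The address settings only need to be present with a non-empty value.
    for key in IPADDR NETMASK GATEWAY DNS1; do
        grep -Eq "^${key}=[^[:space:]#]+" "$file" \
            || { echo "${key} is missing"; status=1; }
    done
    return "$status"
}
```

For example, `check_static_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens33` prints nothing and returns 0 when every setting is in place; otherwise it prints one line per problem.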

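2. Map the hostname to the IP address

(1). vi /etc/hosts

Add: 192.168.255.128 hadoop # your own IP address, followed by the hostname set in step (2)

Note: add a regular (non-root) user when creating the virtual machine.

(2). hostnamectl set-hostname hadoop (replace hadoop with the hostname you want)

3. Stop the firewall and restart the network service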

systemctl stop firewalld.service

systemctl disable firewalld.service

service network restart

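4. Ping the IP address and the gateway

Both only need to respond.

5. Connect with Xshell

II. Hadoop Installation and Deployment

1. Preparation

On the virtual machine: mkdir -p /root/hadoop

Connect with Xftp and upload the JDK, Hadoop, MySQL, Hive, and Sqoop packages to /root/hadoop

Upload the MySQL database data and the mysql-connector-java-5.1.28-bin package to /root/hadoop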

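2. Set up the JDK environment

2.1. Check for a preinstalled JDK and remove it if present

rpm -qa | grep jdk

yum -y remove '*jdk*'

2.2. Extract the JDK under /usr/local and rename it to jdk

[root@hadoop ~]# cd /usr/local

[root@hadoop local]# tar -xf /root/hadoop/jdk-8u221-linux-x64.tar.gz

[root@hadoop local]# mv jdk1.8.0_221/ jdk

2.3. Configure the environment variables

[root@hadoop local]# vim /etc/profile

# Java Environment (append at the end of the file)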

export JAVA_HOME=/usr/local/jdk

export PATH=$PATH:$JAVA_HOME/bin

2.4. Reload the environment variables

[root@hadoop local]# source /etc/profile

2.5. Verify the Java installation

[root@hadoop local]# java -version

[root@hadoop local]# javac

III. Hadoop Installation

3.1. Extract and rename

[root@hadoop local]# tar -xf /root/hadoop/hadoop-2.7.6.tar.gz

[root@hadoop local]# mv hadoop-2.7.6/ hadoop

3.2. Configure the Hadoop environment variables

[root@hadoop local]# vim /etc/profile

# Hadoop Environment

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

3.3. Reload the environment variables

[root@hadoop local]# source /etc/profile

3.4. Verify

[root@hadoop local]# hadoop version
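
Because /etc/profile is edited by hand several times in this guide, repeating a step can leave duplicate export lines behind. As a minimal sketch, assuming you would rather script those edits, the hypothetical helper `append_once` below (not from the original notes) appends a line only when the file does not already contain exactly that line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE unless the exact line is already there.
append_once() {
    local line=$1 file=$2
    # -F: fixed string, -x: whole-line match, -q: quiet.
    # A missing file counts as "not found", so the line is appended.
    grep -Fxq -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root), mirroring the Hadoop variables in this section:
# append_once 'export HADOOP_HOME=/usr/local/hadoop' /etc/profile
# append_once 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' /etc/profile
```

The single quotes in the usage lines matter: they keep `$PATH` and `$HADOOP_HOME` unexpanded, so the text written to the file is the same as what you would type in the editor.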

IV. Pseudo-Distributed Cluster Setup

4.1. Confirm the firewall is off

[root@hadoop local]# systemctl stop firewalld

[root@hadoop local]# systemctl disable firewalld.service

[root@hadoop local]# systemctl stop NetworkManager

[root@hadoop local]# systemctl disable NetworkManager

# Disabling SELinux is also recommended (the change takes effect after a reboot)

[root@hadoop local]# vi /etc/selinux/config

SELINUX=disabled

Note: systemctl is-enabled SERVICE reports whether a service is enabled at boot, for example:

[root@hadoop local]# systemctl is-enabled firewalld.service

disabled

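4.2. Passwordless SSH login

# Generate an RSA public/private key pair; press Enter at every prompt

[root@hadoop local]# ssh-keygen -t rsa

[root@hadoop local]# ssh-copy-id root@localhost

# Verify: the login should succeed without asking for a password

[root@hadoop local]# ssh localhost

4.3. Pseudo-distributed configuration files

4.3.1. Configure core-site.xml

[root@hadoop local]# cd /usr/local/hadoop/etc/hadoop/

[root@hadoop hadoop]# vi core-site.xml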

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:8020/</value>

</property>

</configuration>

4.3.2. Configure hdfs-site.xml

[root@hadoop hadoop]# vi hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

4.3.3. Configure hadoop-env.sh

[root@hadoop hadoop]# vi hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/usr/local/jdk

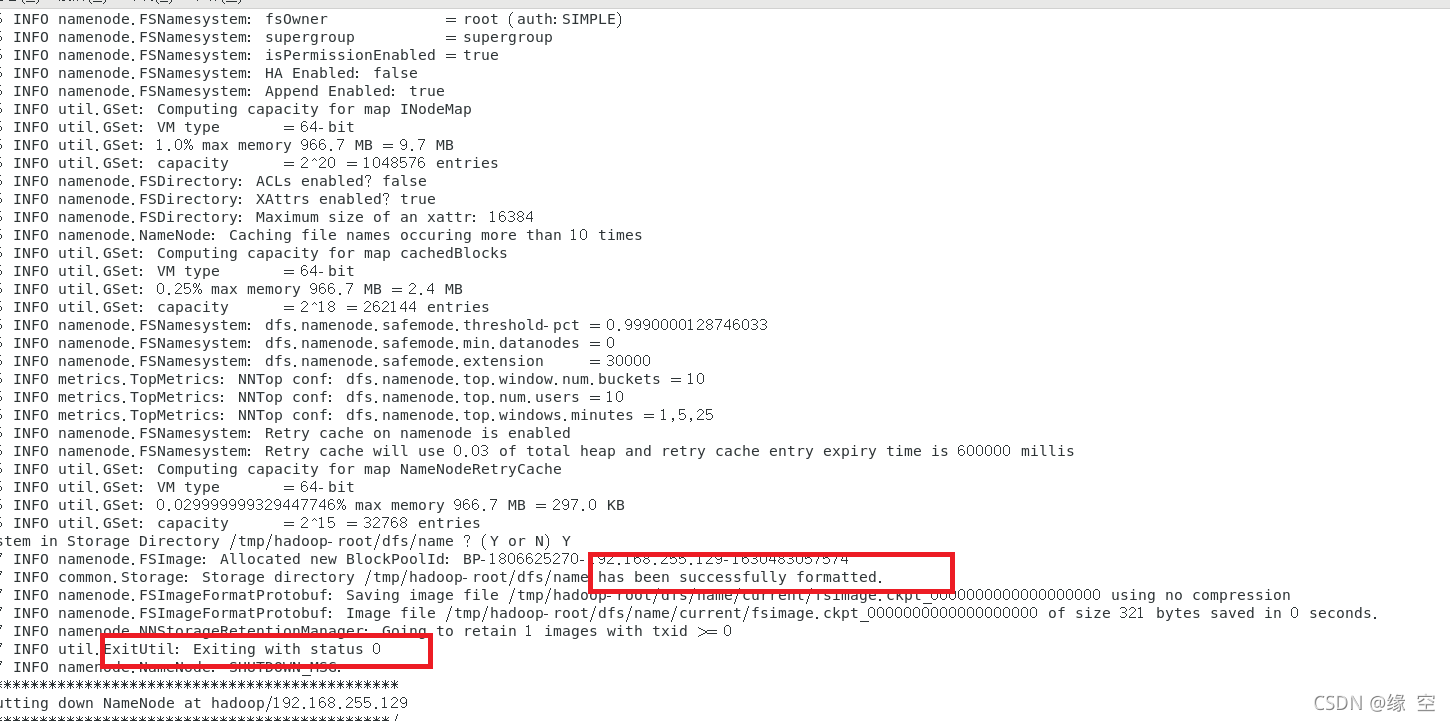

4.3.4. Format the NameNode

1. Format command

[root@hadoop hadoop]# hdfs namenode -format

2. Reading the format log

4.3.5. Start HDFS

1. Start the pseudo-distributed cluster

[root@hadoop hadoop]# start-all.sh

Check the running processes

[root@hadoop hadoop]# jps

57505 Jps

56818 DataNode

57239 NodeManager

56697 NameNode

56972 SecondaryNameNode

57117 ResourceManager
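
Checking the jps output by eye works, but it can also be scripted. The following sketch assumes the five daemons listed above are the ones expected on a pseudo-distributed node; `missing_daemons` is a hypothetical helper name, not a Hadoop command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon that is not listed. Prints nothing when all five are present.
missing_daemons() {
    local output daemon
    output=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -w matches whole words only, so "NameNode" does not match
        # inside "SecondaryNameNode".
        printf '%s\n' "$output" | grep -qw -- "$daemon" || echo "$daemon"
    done
}

# Usage on the Hadoop node:
# jps | missing_daemons
```

If the command prints a daemon name, the matching log file under $HADOOP_HOME/logs is usually the first place to look.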

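V. Installing and Configuring MySQL (Offline Installation)

Note: for the online installation, see 5.7.

5.1. Find and remove the MariaDB packages that ship with the system

rpm -qa | grep mariadb

rpm -e --nodeps PACKAGE_NAME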

# example

[root@hadoop hadoop]# rpm -qa | grep mariadb

mariadb-libs-5.5.68-1.el7.x86_64

[root@hadoop hadoop]# rpm -e --nodeps mariadb-libs-5.5.68-1.el7.x86_64

[root@hadoop hadoop]# rpm -qa | grep mariadb

[root@hadoop hadoop]#

5.2. Find and remove any older MySQL packages on the system

rpm -qa | grep mysql

rpm -e --nodeps PACKAGE_NAME

5.3. Install the libaio dependency

[root@hadoop hadoop]# yum -y install libaio

5.4.1. Extract

[root@hadoop hadoop]# mkdir /usr/local/mysql

[root@hadoop hadoop]# cd /usr/local/mysql/

[root@hadoop mysql]# tar -xf /root/hadoop/mysql-5.7.28-1.el7.x86_64.rpm-bundle.tar

5.4.2. Install the RPM packages (in this order)

[root@hadoop mysql]# rpm -ivh mysql-community-common-5.7.28-1.el7.x86_64.rpm

[root@hadoop mysql]# rpm -ivh mysql-community-libs-5.7.28-1.el7.x86_64.rpm

[root@hadoop mysql]# rpm -ivh mysql-community-devel-5.7.28-1.el7.x86_64.rpm

[root@hadoop mysql]# rpm -ivh mysql-community-libs-compat-5.7.28-1.el7.x86_64.rpm

[root@hadoop mysql]# rpm -ivh mysql-community-client-5.7.28-1.el7.x86_64.rpm

[root@hadoop mysql]# rpm -ivh mysql-community-server-5.7.28-1.el7.x86_64.rpm
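
Since the packages have to go in one at a time and in a fixed order, the sequence above can be expressed as a loop. This is only a sketch: `mysql_rpm_order` is a hypothetical helper name, and it assumes the RPM files follow the `mysql-community-NAME-VERSION.rpm` naming seen in the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the RPM file names for VERSION in the install
# order used above (common, libs, devel, libs-compat, client, server).
mysql_rpm_order() {
    local version=$1 pkg
    for pkg in common libs devel libs-compat client server; do
        echo "mysql-community-${pkg}-${version}.rpm"
    done
}

# Usage (as root, inside /usr/local/mysql); stops at the first failure:
# for rpm_file in $(mysql_rpm_order 5.7.28-1.el7.x86_64); do
#     rpm -ivh "$rpm_file" || break
# done
```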

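5.5. Start the MySQL service

5.5.1. Check whether the mysqld service is running

service mysqld status

5.5.2. Start the service:

systemctl start mysqld

5.5.3. Enable start on boot

[root@hadoop mysql]# systemctl enable mysqld

5.6. Set the MySQL password

5.6.1. Find the generated temporary password

[root@hadoop mysql]# grep 'password' /var/log/mysqld.log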

2021-07-12T02:58:00.615225Z 1 [Note] A temporary password is generated for root@localhost: ,gfDy1o.qEg:
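
The temporary password is the last field of that log line, including any punctuation, which is easy to miscopy. As a small sketch, assuming the log line has the format shown above and the password contains no whitespace, the hypothetical helper `mysql_temp_password` prints just the password.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the most recent temporary root password found
# in a mysqld log (defaults to /var/log/mysqld.log).
mysql_temp_password() {
    local log=${1:-/var/log/mysqld.log}
    # Take the last matching line and print its last whitespace-separated field.
    grep 'temporary password' "$log" | tail -n 1 | awk '{print $NF}'
}

# Usage:
# mysql_temp_password
```

For the sample log line above, the function prints `,gfDy1o.qEg:`; the leading comma and trailing colon are part of the password.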

5.6.2. Log in

[root@hadoop mysql]# mysql -uroot -p

5.6.3. Change the password

mysql> set global validate_password_policy=low;

mysql> set global validate_password_length=6;

mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY '123456';

Note: to connect to MySQL from another machine, grant remote access first (and make sure the firewall is off!)

mysql> grant all privileges on *.* to root@"%" identified by '123456' with grant option;

*5.7. Install MySQL (online installation)

5.7.1. Install

[root@hadoop ~]# wget https://dev.mysql.com/get/mysql57-community-release-el7-11.noarch.rpm

[root@hadoop ~]# rpm -ivh mysql57-community-release-el7-11.noarch.rpm

[root@hadoop ~]# yum repolist all | grep mysql

[root@hadoop ~]# yum install -y mysql-community-server

5.7.2. Then repeat the steps from 5.5 onward

VI. Configuring the Hive Environment

6.1. Extract and rename

[root@hadoop ~]# cd /usr/local

[root@hadoop local]# tar -xf /root/hadoop/apache-hive-2.1.1-bin.tar.gz

[root@hadoop local]# mv apache-hive-2.1.1-bin/ hive

6.2. Configure the Hive environment variables

[root@hadoop local]# vi /etc/profile

export HIVE_HOME=/usr/local/hive

export PATH=$HIVE_HOME/bin:$PATH

6.3. Reload the environment variables

[root@hadoop local]# source /etc/profile

6.4.1. Configure hive-env.sh

[root@hadoop local]# cd /usr/local/hive/conf/

[root@hadoop conf]# cp hive-env.sh.template hive-env.sh

[root@hadoop conf]# vim hive-env.sh

# Append at the end of the file

export HIVE_CONF_DIR=/usr/local/hive/conf

export JAVA_HOME=/usr/local/jdk

export HADOOP_HOME=/usr/local/hadoop

export HIVE_AUX_JARS_PATH=/usr/local/hive/lib

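6.4.2. Configure hive-site.xml

- Notes

Hive 2.1.1 ships without a hive-site.xml; copy hive-default.xml.template to hive-site.xml and edit the copy.

In hive-site.xml, replace every occurrence of ${system:java.io.tmpdir} with /usr/local/hive/iotmp

If no system user name is specified, replace ${system:user.name} with the current user name, root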
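
The template contains many occurrences of these two placeholders, so doing the replacement by hand is tedious. A minimal sketch with sed follows; `fill_hive_placeholders` is a hypothetical helper name, and the default values are simply the ones used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print FILE with the two placeholders replaced.
# Arguments: FILE [TMPDIR] [USER]; defaults are the values used in this guide.
fill_hive_placeholders() {
    local file=$1 tmpdir=${2:-/usr/local/hive/iotmp} user=${3:-root}
    # '#' is the sed delimiter because the replacement path contains '/'.
    # \$ and \. make sed treat the dollar sign and the dots literally.
    sed -e 's#\${system:java\.io\.tmpdir}#'"$tmpdir"'#g' \
        -e 's#\${system:user\.name}#'"$user"'#g' "$file"
}

# Usage (inside /usr/local/hive/conf):
# fill_hive_placeholders hive-default.xml.template > hive-site.xml
```

The function writes to standard output instead of editing in place, so the template stays untouched and the result can be reviewed before it becomes hive-site.xml.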

# Set the following four properties to the values shown

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

Note: the hive database that holds the Hive metastore in MySQL is best created with the latin1 character set

mysql> show variables like 'character%';

6.5. Copy the MySQL driver jar into $HIVE_HOME/lib

[root@hadoop lib]# pwd

/usr/local/hive/lib

[root@hadoop lib]# cp /root/hadoop/mysql-connector-java-5.1.28-bin.jar ./

6.6. Initialize the Hive metastore database and start Hive

# Initialize

[root@hadoop bin]# ./schematool -initSchema -dbType mysql

# Start Hive

[root@hadoop bin]# ./hive

*6.7. Remote mode

6.7.1. Modify hive-site.xml

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=latin1</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/usr/local/hive/iotmp/root</value>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/usr/local/hive/iotmp/root/operation_logs</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/usr/local/hive/iotmp/root</value>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/usr/local/hive/iotmp/${hive.session.id}_resources</value>

</property>

Note: remote mode requires adding the following properties to Hadoop's core-site.xml

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

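VII. Sqoop Installation and Configuration

7.1. Installation: extract to the target path and it is ready to use.

[root@hadoop ~]# cd /usr/local

[root@hadoop local]# tar -xf /root/hadoop/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@hadoop local]# mv sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop

7.2. Configure $SQOOP_HOME/conf/

[root@hadoop local]# cd /usr/local/sqoop/conf/

[root@hadoop conf]# cp sqoop-env-template.sh sqoop-env.sh

[root@hadoop conf]# vim sqoop-env.sh

In sqoop-env.sh, add the Hadoop and Hive environment settings: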

export HADOOP_COMMON_HOME=/usr/local/hadoop

export HADOOP_MAPRED_HOME=/usr/local/hadoop

export HIVE_HOME=/usr/local/hive

7.3. Put the MySQL JDBC driver jar into $SQOOP_HOME/lib

[root@hadoop lib]# pwd

/usr/local/sqoop/lib

[root@hadoop lib]# cp /root/hadoop/mysql-connector-java-5.1.28-bin.jar ./

7.4. Copy hive-exec-2.1.1.jar from Hive's lib directory into $SQOOP_HOME/lib

[root@hadoop lib]# cp /usr/local/hive/lib/hive-exec-2.1.1.jar ./

7.5. Configure the environment variables

[root@hadoop hadoop]# vim /etc/profile

export SQOOP_HOME=/usr/local/sqoop

export PATH=$SQOOP_HOME/bin:$PATH

7.6. Reload the environment variables

[root@hadoop hadoop]# source /etc/profile

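VIII. Miscellaneous

If the network used to work and then stops working, the virtual machine configuration is the likely cause. The network setup here uses NAT mode throughout, together with a static IP on the virtual machine.

- Check that the adapter is in NAT mode

- Check the virtual machine's IP address

- Check the gateway and DNS settings

- Check that the firewall is off

- Check that NetworkManager is off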
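
One item on the checklist above can be checked mechanically: the VM's static IP and the gateway have to sit in the same NAT subnet. The sketch below assumes the 255.255.255.0 netmask used earlier in this guide, so comparing the first three octets is enough; `same_subnet24` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when two IPv4 addresses share their first
# three octets, i.e. the same subnet under a 255.255.255.0 netmask.
same_subnet24() {
    # ${var%.*} strips the last dot and everything after it.
    [ "${1%.*}" = "${2%.*}" ]
}

# Usage with the addresses from this guide:
# same_subnet24 192.168.255.128 192.168.255.2 && echo "same subnet"
```

If the subnet IP was changed in the Virtual Network Editor, IPADDR and GATEWAY in ifcfg-ens33 need the same first three octets as the new subnet.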

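On my own Windows 10 machine:

1. In VMware, open Edit > Virtual Network Editor, change the subnet IP under NAT mode, and apply. Then switch back to the previous IP address and restart the virtual machine.

2. Restart the service: service network restart

PS: the virtual machine can ping its own address and the gateway, but it cannot ping the Windows machine and has no internet access. From the Windows machine, the virtual machine can be pinged.

PS: this does not restore internet access, but the basic operations still work. Another option is to disable and then re-enable the VMnet8 network adapter.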


Note: most of this was found online and then summarized; if anything infringes your rights, contact me and I will remove it.