Setting up single-node Hadoop and Hive with Docker

1. Setting up single-node Hadoop with Docker

1.1 Building the base container

Reference: https://www.runoob.com/w3cnote/hadoop-setup.html

docker pull centos:8

docker run -d --name=java_ssh_proto --privileged centos:8 /usr/sbin/init

docker exec -it java_ssh_proto bash

sed -e 's|^mirrorlist=|#mirrorlist=|g' \
    -e 's|^#baseurl=http://mirror.centos.org/$contentdir|baseurl=https://mirrors.ustc.edu.cn/centos|g' \
    -i.bak \
    /etc/yum.repos.d/CentOS-Linux-AppStream.repo \
    /etc/yum.repos.d/CentOS-Linux-BaseOS.repo \
    /etc/yum.repos.d/CentOS-Linux-Extras.repo \
    /etc/yum.repos.d/CentOS-Linux-PowerTools.repo \
    /etc/yum.repos.d/CentOS-Linux-Plus.repo

yum makecache

yum install -y java-1.8.0-openjdk-devel openssh-clients openssh-server

systemctl enable sshd && systemctl start sshd

docker stop java_ssh_proto

docker commit java_ssh_proto java_ssh
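
To preview what the sed command in this section changes before it touches the real repo files, the same two expressions can be wrapped in a filter and run on sample lines. This is only a minimal sketch: the function name `rewrite_repo` and the sample input are illustrative assumptions, not part of the original setup.

```shell
# Hypothetical helper: applies the same two substitutions as the sed
# command in this section, but reads stdin and writes stdout instead of
# editing files in place.
rewrite_repo() {
  sed -e 's|^mirrorlist=|#mirrorlist=|g' \
      -e 's|^#baseurl=http://mirror.centos.org/$contentdir|baseurl=https://mirrors.ustc.edu.cn/centos|g'
}

# Example: feed it two lines shaped like those in a CentOS 8 repo file.
# printf '%s\n' \
#   'mirrorlist=http://mirrorlist.centos.org/?release=$releasever&repo=BaseOS' \
#   '#baseurl=http://mirror.centos.org/$contentdir/$releasever/BaseOS/$basearch/os/' \
#   | rewrite_repo
```

Because the expressions are single-quoted, the shell leaves `$contentdir` alone, and sed treats a `$` in the middle of a pattern as literal text, so the placeholder in the repo file is matched as written.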

1.2 Installing Hadoop

Hadoop releases: https://hadoop.apache.org/releases.html

This mirror downloads faster: https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.2/

docker run -d --name=hadoop_single --privileged java_ssh /usr/sbin/init

docker cp <path to your Hadoop tarball> hadoop_single:/root/

docker exec -it hadoop_single bash

cd /root

tar -zxf hadoop-3.2.2.tar.gz

mv hadoop-3.2.2 /usr/local/hadoop

echo 'export HADOOP_HOME=/usr/local/hadoop' >> /etc/bashrc

echo 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' >> /etc/bashrc

. /etc/bashrc

echo "export JAVA_HOME=/usr" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "export HADOOP_HOME=/usr/local/hadoop" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

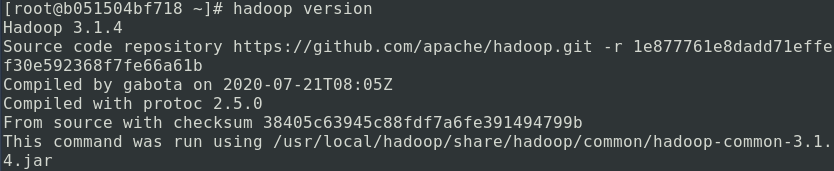

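These two steps set the environment variables that Hadoop reads from its own hadoop-env.sh. Then run the following command to check that everything works: hadoop version

If it prints the version information, your single-node Hadoop is configured successfully.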
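
The `echo ... >>` lines in this section append unconditionally, so running them a second time leaves duplicate exports in the file. A small guard avoids that. This is a sketch under stated assumptions: `append_once` is a hypothetical helper name, and the paths in the example are the ones used in this section.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so re-running the setup does not duplicate it.
append_once() {
  line=$1
  file=$2
  # -x: match the whole line, -F: treat the line as a fixed string.
  # Errors (for example, the file not existing yet) are silenced; in
  # that case the line is simply written and the file is created.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example (paths as used in this section):
# append_once 'export HADOOP_HOME=/usr/local/hadoop' /etc/bashrc
# append_once 'export JAVA_HOME=/usr' "$HADOOP_HOME/etc/hadoop/hadoop-env.sh"
```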

1.3 Configuring HDFS

hadoop version

adduser hadoop

yum install -y passwd sudo

passwd hadoop

# You will be asked to enter the password twice; be sure to remember it. Here the password is set to hadoop.

chown -R hadoop /usr/local/hadoop

# Then edit `/etc/sudoers` with a text editor and add the following line after `root ALL=(ALL) ALL`:

hadoop ALL=(ALL) ALL

docker stop hadoop_single

docker commit hadoop_single hadoop_proto
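
Instead of editing `/etc/sudoers` by hand, the same rule can be written to a drop-in file. The sketch below assumes that the image's `/etc/sudoers` still contains the stock `#includedir /etc/sudoers.d` line, which is worth checking in your container; `write_sudoers_rule` is a hypothetical helper name.

```shell
# Hypothetical helper: write "<user> ALL=(ALL) ALL" to a drop-in file.
# The second argument (target directory) defaults to /etc/sudoers.d.
write_sudoers_rule() {
  user=$1
  dir=${2:-/etc/sudoers.d}
  target="$dir/$user"
  printf '%s ALL=(ALL) ALL\n' "$user" > "$target"
  # sudo expects these files to be read-only.
  chmod 0440 "$target"
}

# Example, run as root inside the container:
# write_sudoers_rule hadoop
# visudo -cf /etc/sudoers.d/hadoop   # syntax check of the new file
```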

1.4 Starting HDFS

docker run -d --name=hdfs_single --privileged hadoop_proto /usr/sbin/init

docker exec -it hdfs_single su hadoop

ssh-keygen -t rsa

# Keep pressing Enter here until key generation finishes.

ssh-copy-id hadoop@<your container IP>

# To find the container's IP address: ip addr | grep 172
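
Grepping for 172 only works while Docker hands out addresses in that range. A slightly more general sketch is to take the first non-loopback IPv4 address from the `ip` output. The helper name `first_container_ip` is an assumption made for illustration.

```shell
# Hypothetical helper: print the first non-loopback IPv4 address found
# in `ip -4 addr` style output read from stdin.
first_container_ip() {
  awk '$1 == "inet" && $2 !~ /^127\./ {
         sub(/\/.*/, "", $2)   # drop the /prefix-length suffix
         print $2
         exit
       }'
}

# Example, inside the container:
# ip -4 addr | first_container_ip
```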

cd $HADOOP_HOME/etc/hadoop

# Here we modify two files: core-site.xml and hdfs-site.xml

core-site.xml

[hadoop@9843628f09c8 hadoop]$ cat core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.17.0.3:9000</value>

</property>

</configuration>

[hadoop@9843628f09c8 hadoop]$

hdfs-site.xml

[hadoop@9843628f09c8 hadoop]$ cat hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

[hadoop@9843628f09c8 hadoop]$
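
Since the container IP can change between runs, it may be convenient to generate the two files rather than edit them by hand. The following is a minimal sketch, not the author's method: the function names are hypothetical, and the generated files omit the Apache license comment shown in the listings.

```shell
# Hypothetical helper: print a core-site.xml that points fs.defaultFS
# at the given host; the port defaults to 9000 as in the listing.
render_core_site() {
  host=$1
  port=${2:-9000}
  cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:${port}</value>
  </property>
</configuration>
EOF
}

# Hypothetical helper: print an hdfs-site.xml with the given replication
# factor; it defaults to 1, which suits a single DataNode.
render_hdfs_site() {
  replication=${1:-1}
  cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>${replication}</value>
  </property>
</configuration>
EOF
}

# Example:
# render_core_site 172.17.0.3 > "$HADOOP_HOME/etc/hadoop/core-site.xml"
# render_hdfs_site 1 > "$HADOOP_HOME/etc/hadoop/hdfs-site.xml"
```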

# 格式化文件结构:

hdfs namenode -format

#然后启动 HDFS:

start-dfs.sh

#启动分三个步骤,分别启动 NameNode、DataNode 和 Secondary NameNode。

#

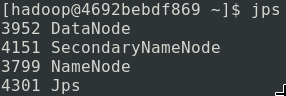

我们可以运行 jps 来查看 Java 进程:
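
Rather than reading the jps list by eye, the check can be scripted. The sketch below reads jps output from stdin and prints any of the three HDFS daemons that is missing; `missing_hdfs_daemons` is a hypothetical name chosen for this example.

```shell
# Hypothetical helper: read `jps` output ("<pid> <name>" per line) from
# stdin and print each expected HDFS daemon that does not appear in it.
missing_hdfs_daemons() {
  jps_output=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode; do
    # Compare the second field exactly, so "NameNode" is not satisfied
    # by a "SecondaryNameNode" line.
    if ! printf '%s\n' "$jps_output" |
         awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      printf '%s\n' "$daemon"
    fi
  done
}

# Example, as the hadoop user after start-dfs.sh:
# jps | missing_hdfs_daemons
```

Empty output means all three daemons are running.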

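1.5 Using HDFS

# List the files and subdirectories under the root directory / (absolute path)

hadoop fs -ls /

# Create a directory (absolute path)

hadoop fs -mkdir /hello

# Upload a file

hadoop fs -put hello.txt /hello/

# Download a file

hadoop fs -get /hello/hello.txt

# Print the contents of a file

hadoop fs -cat /hello/hello.txt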

2. Installing Hive

Reference: https://blog.csdn.net/boling_cavalry/article/details/102310449

2.1 Installing and configuring MySQL (version 5.7.27)

docker run --name mysql -p 3306:3306 -e MYSQL_ROOT_PASSWORD=888888 -idt mysql:5.7.27

docker exec -it mysql /bin/bash

mysql -h127.0.0.1 -uroot -p

CREATE USER 'hive' IDENTIFIED BY '888888';

GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' WITH GRANT OPTION;

flush privileges;

# Run the following command in a terminal on the host to restart the MySQL service:

docker exec mysql service mysql restart

# Enter the mysql container again and log in to MySQL as the hive user:

mysql -uhive -p

CREATE DATABASE hive;

2.2 Installing Hive

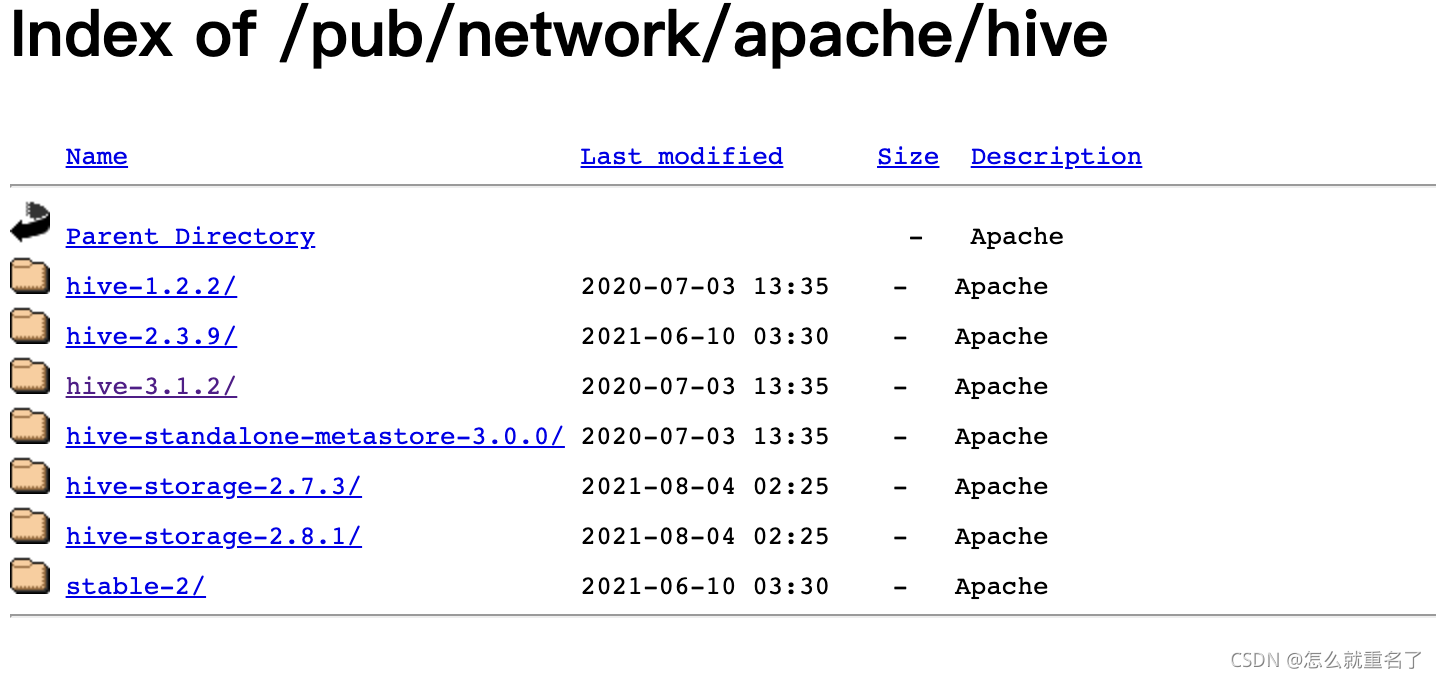

https://ftp.yz.yamagata-u.ac.jp/pub/network/apache/hive/hive-3.1.2/

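- Note: all of the following steps are run as the hadoop user, not as root.

- Unpack the downloaded apache-hive archive in the hadoop user's home directory.

- Edit the hadoop user's .bashrc file and add the environment variable HIVE_HOME: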

[hadoop@9843628f09c8 ~]$ cat .bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# User specific environment

if ! [[ "$PATH" =~ "$HOME/.local/bin:$HOME/bin:" ]]

then

PATH="$HOME/.local/bin:$HOME/bin:$PATH"

fi

export PATH

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

export HIVE_HOME=/home/hadoop/apache-hive-3.1.2-bin

# User specific aliases and functions

[hadoop@9843628f09c8 ~]$

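- After editing, open a new SSH connection or run source ~/.bashrc so that the environment variable takes effect immediately.

- Go to the conf/ directory and copy the template into a configuration file:

cp hive-default.xml.template hive-default.xml

- In the same directory, create a file named hive-site.xml with the following content: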

[hadoop@9843628f09c8 conf]$ cat hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://172.17.0.4:3306/hive?serverTimezone=GMT&amp;useUnicode=true&amp;characterEncoding=UTF-8&amp;autoReconnect=true&amp;zeroDateTimeBehavior=round</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>888888</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

</configuration>

[hadoop@9843628f09c8 ~]$
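
One detail of hive-site.xml is easy to get wrong: the JDBC URL contains `&` separators, and inside an XML file each of them has to be written as `&amp;`, otherwise the file does not parse. A small filter can do the escaping. This is a sketch; `xml_escape` is a hypothetical helper name.

```shell
# Hypothetical helper: escape the characters that are special in XML
# text (&, <, >) in whatever is read from stdin.
xml_escape() {
  # & must be replaced first so the entities added by the later
  # expressions are not escaped a second time.
  sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Example:
# printf '%s\n' 'jdbc:mysql://172.17.0.4:3306/hive?serverTimezone=GMT&useUnicode=true' | xml_escape
```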

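- Put the MySQL JDBC driver jar in the lib/ directory; I used mysql-connector-java-8.0.23.jar.

2.3 Initializing and starting Hive

- Go to the bin/ directory and run the following command to initialize the schema: ./schematool -initSchema -dbType mysql

If it fails, search for the error message online. In my case the fix was to copy the guava jar from Hadoop's lib directory over the one in Hive's lib directory: the two versions differ, and the one bundled with Hive is older.

- Check in MySQL: a number of tables have been created in the hive database.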

mysql> show tables;

+---------------------------+

| Tables_in_hive |

+---------------------------+

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| COMPACTION_QUEUE |

| COMPLETED_TXN_COMPONENTS |

| DATABASE_PARAMS |

| DBS |

| DB_PRIVS |

| DELEGATION_TOKENS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| HIVE_LOCKS |

| IDXS |

| INDEX_PARAMS |

| MASTER_KEYS |

| NEXT_COMPACTION_QUEUE_ID |

| NEXT_LOCK_ID |

| NEXT_TXN_ID |

| NOTIFICATION_LOG |

| NOTIFICATION_SEQUENCE |

| NUCLEUS_TABLES |

| PARTITIONS |

| PARTITION_EVENTS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_PRIVS |

| PART_COL_STATS |

| PART_PRIVS |

| ROLES |

| ROLE_MAP |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| TBL_COL_PRIVS |

| TBL_PRIVS |

| TXNS |

| TXN_COMPONENTS |

| TYPES |

| TYPE_FIELDS |

| VERSION |

+---------------------------+

53 rows in set (0.00 sec)

- Run ./hive in the bin directory to start Hive.
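
The guava workaround mentioned for schematool can be sketched as a small function. It is an illustration rather than the exact commands the author ran: `replace_guava` is a hypothetical name, and the directories in the example are assumed from the paths that appear elsewhere in this post.

```shell
# Hypothetical helper: replace the guava jar(s) in Hive's lib directory
# with the one(s) found in Hadoop's lib directory.
replace_guava() {
  hadoop_lib=$1
  hive_lib=$2
  # Stop without touching Hive if Hadoop has no guava jar to copy.
  ls "$hadoop_lib"/guava-*.jar >/dev/null 2>&1 || return 1
  rm -f "$hive_lib"/guava-*.jar
  cp "$hadoop_lib"/guava-*.jar "$hive_lib"/
}

# Example (as the hadoop user):
# replace_guava "$HADOOP_HOME/share/hadoop/common/lib" "$HIVE_HOME/lib"
```

Removing Hive's old jar first matters, because leaving two guava versions on the classpath can reproduce the same conflict.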

Verification

# Running ./hive above drops you into the interactive prompt; enter the following commands to create a database named test001:

CREATE database test001;

use test001;

create table test_table(

id INT,

word STRING

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE;

select * from test_table;
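
The select above returns nothing because the table is still empty. To give it something to return, a tab-separated file matching the table definition (an INT id, a tab, a STRING word) can be generated and then loaded. This is a sketch under assumptions: `make_rows` and the file path /tmp/test_table.tsv are made up for this example.

```shell
# Hypothetical helper: print one "id<TAB>word" row per argument,
# numbering the rows from 1, to match FIELDS TERMINATED BY '\t'.
make_rows() {
  id=1
  for word in "$@"; do
    printf '%d\t%s\n' "$id" "$word"
    id=$((id + 1))
  done
}

# Example, in a shell inside the container:
# make_rows hello hadoop hive > /tmp/test_table.tsv
#
# Then, at the hive> prompt:
# LOAD DATA LOCAL INPATH '/tmp/test_table.tsv' INTO TABLE test_table;
# select * from test_table;
```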

Summary

docker stop hdfs_single

docker commit hdfs_single hadoop_322_hive_312

docker run -d --name=hadoop_322_hive_312 --privileged hadoop_322_hive_312 /usr/sbin/init

docker exec -it hadoop_322_hive_312 bash

[root@462b2baee20f /]# su hadoop

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$ start-dfs.sh

Starting namenodes on [462b2baee20f]

462b2baee20f: Warning: Permanently added '462b2baee20f' (ECDSA) to the list of known hosts.

Starting datanodes

Starting secondary namenodes [462b2baee20f]

2021-09-05 09:19:52,159 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$ hadoop fs -ls /

2021-09-05 09:19:59,514 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 4 items

drwxr-xr-x - hadoop supergroup 0 2021-09-05 06:20 /hello

-rw-r--r-- 1 hadoop supergroup 56 2021-09-05 06:27 /input.txt

drwx-wx-wx - hadoop supergroup 0 2021-09-05 07:36 /tmp

drwxr-xr-x - hadoop supergroup 0 2021-09-05 07:37 /user

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$ jps

486 NameNode

839 SecondaryNameNode

1022 Jps

639 DataNode

[hadoop@462b2baee20f /]$ cd home/hadoop/apache-hive-3.1.2-bin/bin

[hadoop@462b2baee20f bin]$

[hadoop@462b2baee20f bin]$ ./hive

./hive: line 289: which: command not found

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = df9b9750-333c-433e-b0d3-b86e370814dc

Logging initialized using configuration in jar:file:/home/hadoop/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Hive Session ID = 1739ae3c-932f-4e08-aa56-064b0544f177

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

>

> show tables;

OK

How to use this image:

docker pull xiaolixi/hadoop_322_hive_312

docker run -d --name=hadoop_322_hive_312 --privileged xiaolixi/hadoop_322_hive_312 /usr/sbin/init

docker exec -it hadoop_322_hive_312 bash

[root@462b2baee20f /]# su hadoop

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$

[hadoop@462b2baee20f /]$ start-dfs.sh

[hadoop@462b2baee20f /]$ cd home/hadoop/apache-hive-3.1.2-bin/bin

[hadoop@462b2baee20f bin]$

[hadoop@462b2baee20f bin]$ ./hive