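If pride had never been coldly slapped down by the sea of reality, how would we ever learn how hard we must work to reach faraway places!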

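1. Introduction

In a distributed environment, if you drop Spring Cloud in favor of another distributed framework, how do you implement a service registry, centralized configuration management, cluster management, distributed locks, distributed tasks, and queue management on your own?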

2. Introduction to ZooKeeper

ZooKeeper started out as a component of the Hadoop ecosystem. Because of its powerful features, it is also used frequently in Java distributed architectures↓

| ZooKeeper author Doug |
|---|

For all the lofty descriptions, ZooKeeper is simply a file system plus a watch/notification mechanism.

3. Installing ZooKeeper

docker-compose.yml

version: "3.1"
services:
  zk:
    image: daocloud.io/daocloud/zookeeper:latest
    restart: always
    container_name: zk
    ports:
      - 2181:2181

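Create a folder, put a yml file with the content above in it, start the container, and then enter the container↓

Inside the zk container, go to the bin directory and run the zk client command shown below↓

If the following prompt appears after running the client command, ZooKeeper was installed successfully↓

The complete session is as follows↓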

[root@localhost ~]# cd /opt/docker_zk/

[root@localhost docker_zk]# ls

docker-compose.yml

[root@localhost docker_zk]# docker-compose up -d

[root@localhost docker_zk]# docker ps

b5e3889b3e91 daocloud.io/daocloud/zookeeper:latest "/opt/run.sh" 8 seconds ago Up 6 seconds 2888/tcp, 0.0.0.0:2181->2181/tcp, :::2181->2181/tcp, 3888/tcp

[root@localhost docker_zk]# docker exec -it b5 bash

root@b5e3889b3e91:/opt/zookeeper# ls

CHANGES.txt NOTICE.txt README_packaging.txt build.xml contrib docs ivysettings.xml recipes zookeeper-3.4.6.jar zookeeper-3.4.6.jar.md5

LICENSE.txt README.txt bin conf dist-maven ivy.xml lib src zookeeper-3.4.6.jar.asc zookeeper-3.4.6.jar.sha1

root@b5e3889b3e91:/opt/zookeeper# cd bin/

root@b5e3889b3e91:/opt/zookeeper/bin# ls

README.txt zkCleanup.sh zkCli.cmd zkCli.sh zkEnv.cmd zkEnv.sh zkServer.cmd zkServer.sh

root@b5e3889b3e91:/opt/zookeeper/bin# ./zkCli.sh

Connecting to localhost:2181

...

[zk: localhost:2181(CONNECTED) 0]

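4. ZooKeeper Architecture [Key Topic]

4.1 ZooKeeper architecture diagram

Every node is called a znode.

Every znode can store data.

Under the same parent node, child names must be unique.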

| ZooKeeper architecture diagram |
|---|
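The three rules above can be pictured with a toy in-memory model. This is only a sketch for illustration: `ZnodeTree` and its methods are hypothetical names, not part of the ZooKeeper API, and the model ignores sessions, ACLs, and versions.

```java
import java.util.Map;
import java.util.TreeMap;

/** Toy in-memory model of the znode tree (hypothetical, not the ZooKeeper API). */
class ZnodeTree {
    // Full path -> data stored in that znode
    private final Map<String, String> nodes = new TreeMap<>();

    ZnodeTree() {
        nodes.put("/", ""); // the root znode always exists
    }

    private static String parentOf(String path) {
        int idx = path.lastIndexOf('/');
        return idx == 0 ? "/" : path.substring(0, idx);
    }

    /** Creates a znode; fails if the parent is missing or the name is already taken. */
    void create(String path, String data) {
        if (!nodes.containsKey(parentOf(path))) {
            throw new IllegalStateException("Parent does not exist: " + parentOf(path));
        }
        if (nodes.containsKey(path)) {
            throw new IllegalStateException("Node already exists: " + path);
        }
        nodes.put(path, data);
    }

    /** Returns the data stored in the znode, or null if it does not exist. */
    String get(String path) {
        return nodes.get(path);
    }
}
```

Because the full path is the map key, two children with the same name under one parent cannot coexist, which is the uniqueness rule stated above.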

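4.2 Znode types

There are four kinds of znode:

- Persistent node: stored permanently in ZooKeeper.

- Persistent sequential node: stored permanently in ZooKeeper, and ZooKeeper appends an increasing sequence number to the name, /xx -> /xx0000000001

- Ephemeral node: deleted automatically when the client that created it disconnects from the ZooKeeper service (that is, when its session ends).

- Ephemeral sequential node: deleted automatically when the client that created it disconnects from the ZooKeeper service, and ZooKeeper appends an increasing sequence number to the name, /xx -> /xx0000000001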
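The naming of sequential nodes can be sketched as follows. This is a simplified model, not ZooKeeper code: `SequenceDemo` is a hypothetical class, and it assumes the counter belongs to the parent znode and advances each time a child is created under it, which matches the command-line session in section 5 (/zd1, then /zd20000000001, then /zd3, then /zd40000000003).

```java
/** Hypothetical sketch of how sequential znode names are formed under one parent. */
class SequenceDemo {
    // Assumption: one counter per parent, bumped on every child creation
    private int childrenCreated = 0;

    /** Returns the final znode name; sequential nodes get a 10-digit zero-padded suffix. */
    String create(String path, boolean sequential) {
        String name = sequential
                ? String.format("%s%010d", path, childrenCreated)
                : path;
        childrenCreated++;
        return name;
    }
}
```

The suffix is always ten digits wide, so names sort correctly as plain strings, which is what recipes such as distributed locks rely on.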

4.3 ZooKeeper's watch/notification mechanism

A client can set a watch on a znode in ZooKeeper.

When the znode changes, ZooKeeper notifies the clients watching it.

| Watch/notification mechanism |
|---|
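A minimal sketch of the idea, using a plain in-memory map instead of a real server. `WatchDemo` is a hypothetical class. It models the classic ZooKeeper behavior in which a watch is a one-time trigger: once it fires, the client has to register it again to hear about the next change.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical in-memory model of one-shot watches on znodes. */
class WatchDemo {
    private final Map<String, String> data = new HashMap<>();
    private final Map<String, List<Consumer<String>>> watchers = new HashMap<>();

    /** Reads a znode; if watcher is non-null, leaves a one-time watch on the path. */
    String get(String path, Consumer<String> watcher) {
        if (watcher != null) {
            watchers.computeIfAbsent(path, p -> new ArrayList<>()).add(watcher);
        }
        return data.get(path);
    }

    /** Writes a znode, then fires and discards every watch registered on it. */
    void set(String path, String value) {
        data.put(path, value);
        List<Consumer<String>> fired = watchers.remove(path);
        if (fired != null) {
            fired.forEach(w -> w.accept(path));
        }
    }
}
```

Watches are removed before they are invoked, so a callback can safely re-register itself by calling `get` again.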

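5. Common ZooKeeper Commands

Common ZooKeeper commands for create, read, update, and delete

# List all children of a node

ls <znode path>

# Example: ls /

# Read the data stored in a node

get <znode path>

# Example: get /zookeeper

# Create a node

create [-s] [-e] <znode path> <znode data>

# -s: sequence, a sequential node

# -e: ephemeral, an ephemeral node

# Update a node's value

set <znode path> <new data>

# Delete a node

delete <znode path> # delete a znode that has no children

rmr <znode path> # delete the node and all of its children (deprecated since ZooKeeper 3.5, where deleteall replaces it)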

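The complete session is as follows. Use xterm to enter the zk leader container, go to the bin directory, and run the client command ./zkCli.sh to execute the commands below↓

[root@localhost docker_zk]# docker exec -it <zk container id, e.g. 02f> bash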

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin# ls /

bin conf datalog docker-entrypoint.sh home lib64 media opt root sbin sys usr

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin# cd bin

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin/bin# ls

zkServer.sh zkCli.sh

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin/bin# ./zkCli.sh

Connecting to localhost:2181

# List all children of a node↓

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

# Read the data stored in a node↓

[zk: localhost:2181(CONNECTED) 1] get /zookeeper

cZxid = 0x0

ctime = Thu Jan 01 00:00:00 UTC 1970

mZxid = 0x0

mtime = Thu Jan 01 00:00:00 UTC 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

# Create a node with the given data↓

[zk: localhost:2181(CONNECTED) 2] create /zd1 zd1sj

Created /zd1

[zk: localhost:2181(CONNECTED) 3] get /zd1

zd1sj

cZxid = 0x2

ctime = Sun Jul 18 07:40:02 UTC 2021

mZxid = 0x2

mtime = Sun Jul 18 07:40:02 UTC 2021

pZxid = 0x2

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 0

# Create a sequential node and read it; note that the generated sequence number must be included when reading↓

[zk: localhost:2181(CONNECTED) 4] create -s /zd2 zd2sj

Created /zd20000000001

[zk: localhost:2181(CONNECTED) 5] get /zd2

Node does not exist: /zd2

[zk: localhost:2181(CONNECTED) 8] get /zd20000000001

zd2sj

cZxid = 0x3

ctime = Sun Jul 18 07:44:06 UTC 2021

mZxid = 0x3

mtime = Sun Jul 18 07:44:06 UTC 2021

pZxid = 0x3

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 0

# Create ephemeral nodes and list them↓

[zk: localhost:2181(CONNECTED) 9] create -e /zd3 zd3sj

Created /zd3

[zk: localhost:2181(CONNECTED) 10] ls /

[zd20000000001, zd1, zd3, zookeeper]

[zk: localhost:2181(CONNECTED) 11] create -s -e /zd4 zd4sj

Created /zd40000000003

[zk: localhost:2181(CONNECTED) 12] ls /

[zd20000000001, zd1, zd3, zd40000000003, zookeeper]

# Quit and reconnect: the ephemeral nodes created above are gone↓

[zk: localhost:2181(CONNECTED) 14] quit

root@b5e3889b3e91:/opt/zookeeper/bin# ./zkCli.sh

[zk: localhost:2181(CONNECTED) 0] ls /

[zd20000000001, zd1, zookeeper]

# Set a node's data and read it back↓

[zk: localhost:2181(CONNECTED) 1] set /zd1 zd1sjgx

[zk: localhost:2181(CONNECTED) 2] get /zd1

zd1sjgx

cZxid = 0x2

ctime = Sun Jul 18 07:40:02 UTC 2021

mZxid = 0x8

mtime = Sun Jul 18 07:47:06 UTC 2021

pZxid = 0x2

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 0

# When creating a nested node, the parent nodes must already exist, otherwise ZooKeeper reports that the node does not exist↓

[zk: localhost:2181(CONNECTED) 7] ls /

[zd20000000001, zookeeper]

[zk: localhost:2181(CONNECTED) 8] create /zd/zd2 888

Node does not exist: /zd/zd2

[zk: localhost:2181(CONNECTED) 9] create /zd 1

Created /zd

[zk: localhost:2181(CONNECTED) 10] create /zd/zd2 888

Created /zd/zd2

[zk: localhost:2181(CONNECTED) 11] ls /

[zd20000000001, zd, zookeeper]

# delete can only remove a node that has no children; to remove a node together with its children, use rmr

[zk: localhost:2181(CONNECTED) 13] delete /zd

Node not empty: /zd

[zk: localhost:2181(CONNECTED) 17] rmr /zd

[zk: localhost:2181(CONNECTED) 18] ls /

[zd20000000001, zookeeper]

[zk: localhost:2181(CONNECTED) 19] delete /zd20000000001

[zk: localhost:2181(CONNECTED) 20] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 21]

| Summary |
|---|

6. ZooKeeper Cluster [Key Topic]

6.1 ZooKeeper cluster architecture diagram

| Cluster architecture diagram |
|---|

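6.2 Node roles in a ZooKeeper cluster

- Leader: the master node

- Follower (the default kind of non-leader node): takes part in electing a new Leader

- Observer: a non-leader node that does not vote

- Looking: a transitional state in which the node is still searching for a Leader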

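6.3 ZooKeeper voting strategy

Each ZooKeeper server is assigned a myid, a number that is unique within the cluster.

When ZooKeeper performs writes, every node keeps its own FIFO queue, which guarantees that writes are applied in order. ZooKeeper also assigns every write a globally unique zxid; the newer the data, the larger the zxid.

Electing the Leader:

- The node with the largest zxid is elected Leader

- Among nodes with the same zxid, the one with the largest myid is elected Leader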
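The two rules above amount to comparing candidates by zxid first and by myid second. The sketch below shows only that comparison. It is a simplification under stated assumptions: `LeaderElectionDemo` and `Vote` are hypothetical names, and the real election protocol also takes the election epoch into account and requires a majority of servers to agree.

```java
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch of the "largest zxid, then largest myid" rule. */
class LeaderElectionDemo {
    /** One candidate: its myid and the zxid of the last write it has seen. */
    static final class Vote {
        final long myid;
        final long zxid;

        Vote(long myid, long zxid) {
            this.myid = myid;
            this.zxid = zxid;
        }
    }

    /** Returns the myid of the winning candidate. */
    static long elect(List<Vote> candidates) {
        return candidates.stream()
                .max(Comparator.comparingLong((Vote v) -> v.zxid)
                        .thenComparingLong(v -> v.myid))
                .orElseThrow(() -> new IllegalArgumentException("no candidates"))
                .myid;
    }
}
```

With three fresh servers that all have the same zxid, the server with myid 3 wins; if only one server has seen the latest write, it wins regardless of its myid.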

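6.4 Building a ZooKeeper cluster

The docker-compose.yml configuration file is shown below. Writing just zookeeper as the image name pulls the latest image by default↓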

version: "3.1"
services:
  zk1:
    image: zookeeper
    restart: always
    container_name: zk1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=zk3:2888:3888;2181
  zk2:
    image: zookeeper
    restart: always
    container_name: zk2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=zk3:2888:3888;2181
  zk3:
    image: zookeeper
    restart: always
    container_name: zk3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=zk3:2888:3888;2181
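Each entry in ZOO_SERVERS packs several fields into one string. The sketch below splits an entry apart to show what each part means. `ServerSpec` is a hypothetical helper, not something ZooKeeper ships, and it assumes the simple `server.<myid>=<host>:<port>:<port>;<clientPort>` shape used in this file: the first port is used by followers to talk to the leader, the second for leader election, and the port after the semicolon for client connections.

```java
/** Hypothetical parser for one ZOO_SERVERS entry such as server.1=zk1:2888:3888;2181 */
class ServerSpec {
    final int myid;          // matches ZOO_MY_ID of that server
    final String host;       // container name, resolvable on the compose network
    final int quorumPort;    // follower-to-leader communication
    final int electionPort;  // leader election
    final int clientPort;    // client connections

    ServerSpec(int myid, String host, int quorumPort, int electionPort, int clientPort) {
        this.myid = myid;
        this.host = host;
        this.quorumPort = quorumPort;
        this.electionPort = electionPort;
        this.clientPort = clientPort;
    }

    static ServerSpec parse(String entry) {
        String[] keyValue = entry.split("=", 2);               // "server.1" and "zk1:2888:3888;2181"
        int myid = Integer.parseInt(keyValue[0].substring("server.".length()));
        String[] addressAndClient = keyValue[1].split(";", 2);  // "zk1:2888:3888" and "2181"
        String[] address = addressAndClient[0].split(":");
        return new ServerSpec(myid, address[0],
                Integer.parseInt(address[1]),
                Integer.parseInt(address[2]),
                Integer.parseInt(addressAndClient[1]));
    }
}
```

Note that every server lists client port 2181 here because that is the port inside each container; the distinct host ports 2181, 2182, and 2183 come from the `ports` mappings.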

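To avoid port conflicts, take the blunt approach: stop and remove the standalone zk, change the yml file to the cluster configuration, and then start the containers again↓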

[zk: localhost:2181(CONNECTED) 22] quit

Quitting...

2021-07-18 11:06:48,855 [myid:] - INFO [main:ZooKeeper@684] - Session: 0x17ab86a76100001 closed

2021-07-18 11:06:48,856 [myid:] - INFO [main-EventThread:ClientCnxn$EventThread@512] - EventThread shut down

root@b5e3889b3e91:/opt/zookeeper/bin# exit

exit

[root@localhost docker_zk]# ls

docker-compose.yml

[root@localhost docker_zk]# docker-compose down

Stopping zk ... done

Removing zk ... done

Removing network docker_zk_default

# Replace the content of the yml file with the cluster configuration above, then start the containers

[root@localhost docker_zk]# ls

docker-compose.yml

[root@localhost docker_zk]# docker-compose up -d

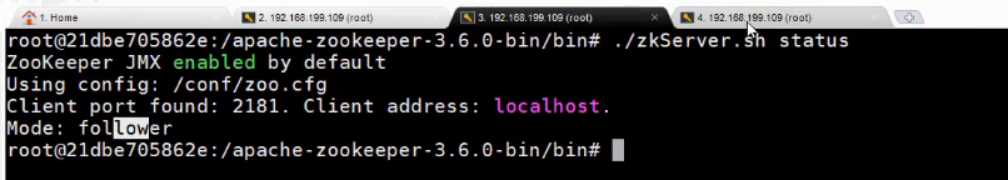

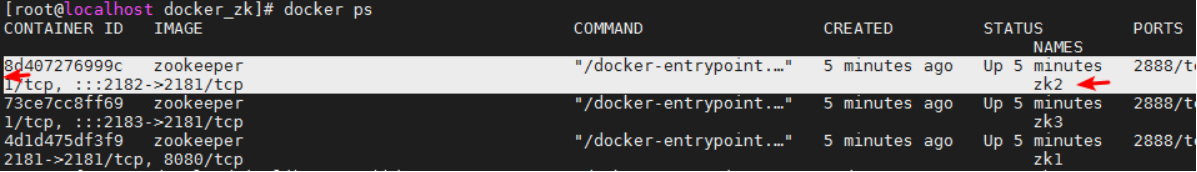

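Open three terminal windows, enter each container, and check its status to see which one is the leader↓

The steps are as follows, using zk1 as the example; the other two containers are entered the same way↓

[root@localhost docker_zk]# docker exec -it <zk1 container id, e.g. 4d in the screenshot above> bash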

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin# ls

LICENSE.txt NOTICE.txt README.md README_packaging.md bin conf docs lib

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin# cd bin

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin# ls

README.txt zkCli.cmd zkEnv.cmd zkServer-initialize.sh zkServer.sh

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin# ./zkServer.sh status

Mode: follower

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin#

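Leave the zk3 container with exit, then stop the zk3 container (the current leader) and check whether another node becomes the leader↓

Because zk2 has a larger myid than zk1, it becomes the leader for now↓

Run the client on the current leader, zk2, create some data, and see whether the follower (currently zk1) can read it↓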

root@8d407276999c:/apache-zookeeper-3.7.0-bin/bin# ./zkServer.sh status

Mode: leader

root@8d407276999c:/apache-zookeeper-3.7.0-bin/bin# ls

zkServer.sh zkCli.sh

root@8d407276999c:/apache-zookeeper-3.7.0-bin/bin# ./zkCli.sh

Connecting to localhost:2181

[zk: localhost:2181(CONNECTED) 0] create /qf xxx

Created /qf

[zk: localhost:2181(CONNECTED) 1] ls /

[qf, zookeeper]

Running the client on zk1 confirms that the data created on the leader zk2 is visible there↓

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin# ./zkServer.sh status

Mode: follower

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin# ls

zkServer.sh zkCli.sh

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin# ./zkCli.sh

Connecting to localhost:2181

[zk: localhost:2181(CONNECTED) 0] ls /

[qf, zookeeper]

Finally, leave the zk2 container and stop it (it is the current leader), then check whether the cluster reports errors↓

[zk: localhost:2181(CONNECTED) 2] quit

root@8d407276999c:/apache-zookeeper-3.7.0-bin/bin# exit

[root@localhost ~]# docker stop <zk2 container id, e.g. 8d above>

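At this point zk1 starts reporting errors. This is a cluster rather than a standalone server: with only one of the three nodes still running there is no majority, so no leader can be elected↓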
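The failure can be explained with a small majority calculation. This is a sketch under the assumption that all three servers are voting members (no Observers); `QuorumDemo` is a hypothetical class.

```java
/** Hypothetical helper showing why one surviving node out of three cannot serve. */
class QuorumDemo {
    /** Smallest number of voting servers that forms a majority. */
    static int majority(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    /** True if enough voting servers are alive to elect a leader. */
    static boolean hasQuorum(int ensembleSize, int alive) {
        return alive >= majority(ensembleSize);
    }
}
```

For this three-node cluster the majority is 2, so the cluster kept working after zk3 was stopped but not after zk2 was stopped as well.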

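Now start the zk3 container that was stopped earlier. Because zk1 holds the newest zxid, zk1 becomes the leader, which confirms the election strategy described above↓

[root@localhost ~]# docker start <zk3 container id, e.g. 73 above>

Wait a moment and check the status of zk1 again: zk1 has indeed become the leader↓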

root@4d1d475df3f9:/apache-zookeeper-3.7.0-bin/bin# ./zkServer.sh status

7. Operating ZooKeeper from Java

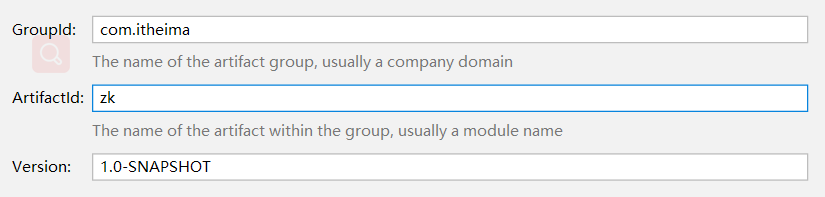

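7.1 Connecting to ZooKeeper from Java

Before testing, to rule out interference from earlier steps, stop the previous cluster and start the cluster containers again↓

[root@localhost docker_zk]# ls

docker-compose.yml

[root@localhost docker_zk]# docker-compose down

[root@localhost docker_zk]# docker-compose up -d

Then create a basic Maven project named zk.

Add the dependencies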

<dependencies>
    <dependency>
        <groupId>org.apache.zookeeper</groupId>
        <artifactId>zookeeper</artifactId>
        <version>3.6.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.curator</groupId>
        <artifactId>curator-recipes</artifactId>
        <version>4.0.1</version>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
</dependencies>

Copy in the utility class for connecting to the ZooKeeper cluster

package com.xia.utils;

import org.apache.curator.RetryPolicy;
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.retry.ExponentialBackoffRetry;

public class ZkUtil {

    public static CuratorFramework cf() {
        // Retry policy: exponential backoff starting from a 3-second base sleep, at most 2 retries
        RetryPolicy retryPolicy = new ExponentialBackoffRetry(3000, 2);
        CuratorFramework cf = CuratorFrameworkFactory.builder()
                // The three cluster nodes, reached through the host ports mapped in docker-compose.yml
                .connectString("192.168.1.129:2181,192.168.1.129:2182,192.168.1.129:2183")
                .retryPolicy(retryPolicy)
                .build();
        cf.start();
        return cf;
    }
}

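Write a test class that calls the utility class and run the unit test. If it passes without errors, the connection to zk succeeded. Because start() returns before the connection is established, the test waits for the connection explicitly.

package com.xia.test;

import com.xia.utils.ZkUtil;
import org.apache.curator.framework.CuratorFramework;
import org.junit.Test;

import java.util.concurrent.TimeUnit;

import static org.junit.Assert.assertTrue;

public class Test01 {

    @Test
    public void testConnectToZk() throws Exception {
        CuratorFramework cf = ZkUtil.cf();
        // Wait up to 10 seconds for the connection; fail the test if it never comes up
        assertTrue(cf.blockUntilConnected(10, TimeUnit.SECONDS));
        System.out.println(cf);
    }
}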

| Result |
|---|

7.2 Operating on znodes from Java

Query

public class Test02 {

    CuratorFramework cf = ZkUtil.cf();

    // List the children of a node
    @Test
    public void getChildren() throws Exception {
        List<String> strings = cf.getChildren().forPath("/");
        for (String string : strings) {
            System.out.println(string);
        }
    }

    // Read the data stored in a node
    @Test
    public void getData() throws Exception {
        byte[] bytes = cf.getData().forPath("/qf");
        System.out.println(new String(bytes, "UTF-8"));
    }
}

Create

@Test
public void create() throws Exception {
    cf.create().withMode(CreateMode.PERSISTENT).forPath("/qf2", "uuuu".getBytes());
}

Update

@Test
public void update() throws Exception {
    cf.setData().forPath("/qf2", "oooo".getBytes());
}

Delete

@Test
public void delete() throws Exception {
    cf.delete().deletingChildrenIfNeeded().forPath("/qf2");
}

Check whether a node exists

@Test
public void stat() throws Exception {
    // checkExists() returns null if the node does not exist
    Stat stat = cf.checkExists().forPath("/qf");
    System.out.println(stat);
}

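The test prints the Stat object, whose properties are as follows↓

The complete code for create, read, update, and delete is as follows↓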

package com.xia.test;

import com.xia.utils.ZkUtil;
import org.apache.curator.framework.CuratorFramework;
import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.data.Stat;
import org.junit.Test;

import java.util.List;

public class Test02 {

    CuratorFramework cf = ZkUtil.cf();

    // List the children of the parent node at the given path↓
    @Test
    public void getChildren() throws Exception {
        List<String> strings = cf.getChildren().forPath("/");
        for (String string : strings) {
            System.out.println(string); // zookeeper
        }
    }

    // Create /qf so that the read and stat tests below have a node to work on↓
    @Test
    public void create0() throws Exception {
        cf.create().withMode(CreateMode.PERSISTENT).forPath("/qf", "qfsj".getBytes());
    }

    // Read the data of the node at the given path↓
    @Test
    public void getData() throws Exception {
        byte[] bytes = cf.getData().forPath("/qf");
        System.out.println(new String(bytes, "UTF-8")); // qfsj
    }

    // Create a node of the given type, passing its path and data↓
    @Test
    public void create() throws Exception {
        cf.create().withMode(CreateMode.PERSISTENT).forPath("/qf2", "uuuu".getBytes());
    }

    // Set the data of the node at the given path↓
    @Test
    public void update() throws Exception {
        cf.setData().forPath("/qf2", "oooo".getBytes());
    }

    // Delete the node at the given path, together with any children it has↓
    @Test
    public void delete() throws Exception {
        cf.delete().deletingChildrenIfNeeded().forPath("/qf2");
    }

    // Check whether the node at the given path exists and print its Stat↓
    @Test
    public void stat() throws Exception {
        Stat stat = cf.checkExists().forPath("/qf");
        System.out.println(stat);
    }
}

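Use xterm to enter the zk leader container, go to the bin directory, and run the client command ./zkCli.sh to check the results of the Java code above↓

[root@localhost docker_zk]# docker exec -it <leader container id, e.g. 02f> bash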

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin# ls /

bin conf datalog docker-entrypoint.sh home lib64 media opt root sbin sys usr

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin# cd bin

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin/bin# ls

zkServer.sh zkCli.sh

root@02f8ebed544a:/apache-zookeeper-3.7.0-bin/bin# ./zkCli.sh

Connecting to localhost:2181

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[qf, zookeeper]

# Query after the Java create↓

[zk: localhost:2181(CONNECTED) 1] get /qf2

uuuu

# Query after the Java update↓

[zk: localhost:2181(CONNECTED) 2] get /qf2

oooo

# Query after the Java delete↓

[zk: localhost:2181(CONNECTED) 3] get /qf2

org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /qf2

[zk: localhost:2181(CONNECTED) 4] ls /

[qf, zookeeper]

[zk: localhost:2181(CONNECTED) 5] get /qf

qfsj

7.3 Watch/notification mechanism: Java code is notified as soon as data in zk changes

Implementation

package com.xia.test;

import com.xia.utils.ZkUtil;
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.recipes.cache.ChildData;
import org.apache.curator.framework.recipes.cache.NodeCache;
import org.apache.curator.framework.recipes.cache.NodeCacheListener;
import org.junit.Test;

public class Test03 {

    CuratorFramework cf = ZkUtil.cf();

    @Test
    public void listen() throws Exception {
        // 1. Create a NodeCache for the znode to watch
        final NodeCache nodeCache = new NodeCache(cf, "/qf");
        nodeCache.start();
        // 2. Add a listener
        nodeCache.getListenable().addListener(new NodeCacheListener() {
            @Override
            public void nodeChanged() throws Exception {
                // getCurrentData() returns null once the znode has been deleted
                ChildData current = nodeCache.getCurrentData();
                if (current == null) {
                    System.out.println("The watched node was deleted");
                    return;
                }
                System.out.println("Watched node: " + current.getPath());
                System.out.println("Current data: " + new String(current.getData(), "UTF-8"));
                System.out.println("Node stat: " + current.getStat());
            }
        });
        System.out.println("Listening started!");
        // 3. Block on System.in.read() so the test does not exit while listening
        System.in.read();
    }
}

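Run the listener program above, then use xterm to enter the zk leader container, run the client, and modify the data: the change is indeed picked up by the listener↓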