0.前言

最近有好几个朋友都有咨询这个问题,大概有两类:

1、为啥我用了with..as效率没有提高?

2、sql跑不动,改成with..as的写法,会不会更好些?

网上博客几乎都有结论with ... as语句会把数据放在内存:

?

一、?Hive-SQL

?在hive中有一个参数

hive.optimize.cte.materialize.threshold

?这个参数在默认情况下是-1(关闭的);当开启(大于0),比如设置为2,则如果with..as语句被引用2次及以上时,会把with..as语句生成的table物化,从而做到with..as语句只执行一次,来提高效率。

1.1 测试?

explain

with atable as (

SELECT id,source,channel

FROM test

)

select source from atable WHERE channel = '直播'

union ALL

select source from atable WHERE channel = '视频'?不设置该参数时,执行计划:

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 depends on stages: Stage-1

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: test

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (channel = '直播') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: source (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

TableScan

alias: test

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (channel = '视频') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: source (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink从执行计划上看,test表被读两次。

设置set hive.optimize.cte.materialize.threshold=1,执行计划

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-6 depends on stages: Stage-1 , consists of Stage-3, Stage-2, Stage-4

Stage-3

Stage-0 depends on stages: Stage-3, Stage-2, Stage-5

Stage-8 depends on stages: Stage-0

Stage-2

Stage-4

Stage-5 depends on stages: Stage-4

Stage-7 depends on stages: Stage-8

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: test

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: id (type: int), source (type: string), channel (type: string)

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: default.atable

Stage: Stage-6

Conditional Operator

Stage: Stage-3

Move Operator

files:

hdfs directory: true

destination: hdfs://localhost:9000/tmp/hive/bytedance/bae441cb-0ef6-4e9c-9f7a-8a5f97d0e560/_tmp_space.db/ce44793b-6eed-4299-b737-f05c66b2281b/.hive-staging_hive_2021-03-24_20-17-38_169_5695913330535939856-1/-ext-10002

Stage: Stage-0

Move Operator

files:

hdfs directory: true

destination: hdfs://localhost:9000/tmp/hive/bytedance/bae441cb-0ef6-4e9c-9f7a-8a5f97d0e560/_tmp_space.db/ce44793b-6eed-4299-b737-f05c66b2281b

Stage: Stage-8

Map Reduce

Map Operator Tree:

TableScan

alias: atable

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (channel = '直播') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: source (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

TableScan

alias: atable

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Filter Operator

predicate: (channel = '视频') (type: boolean)

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Select Operator

expressions: source (type: string)

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 0 Basic stats: PARTIAL Column stats: NONE

Union

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 2 Data size: 0 Basic stats: PARTIAL Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-2

Map Reduce

Map Operator Tree:

TableScan

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: default.atable

Stage: Stage-4

Map Reduce

Map Operator Tree:

TableScan

File Output Operator

compressed: false

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: default.atable

Stage: Stage-5

Move Operator

files:

hdfs directory: true

destination: hdfs://localhost:9000/tmp/hive/bytedance/bae441cb-0ef6-4e9c-9f7a-8a5f97d0e560/_tmp_space.db/ce44793b-6eed-4299-b737-f05c66b2281b/.hive-staging_hive_2021-03-24_20-17-38_169_5695913330535939856-1/-ext-10002

Stage: Stage-7

Fetch Operator

limit: -1

Processor Tree:

ListSink可以看到test表被物化了。

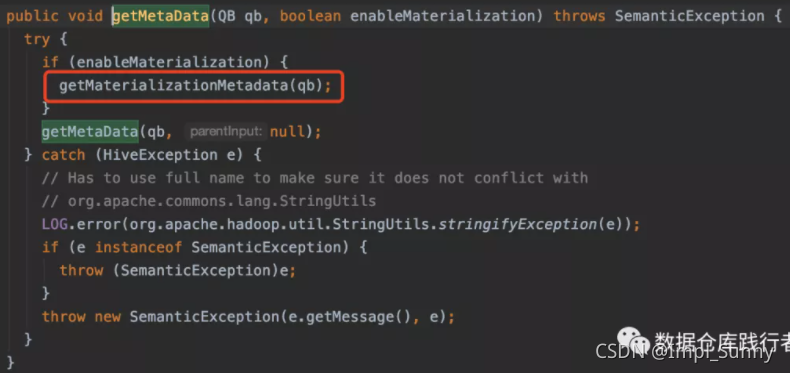

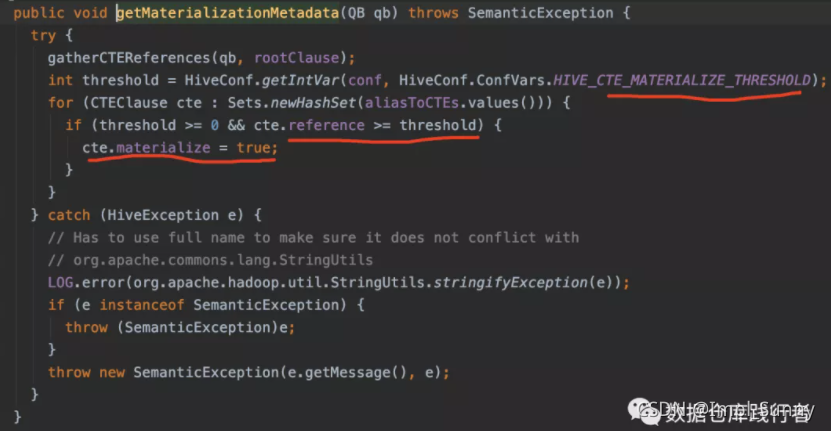

1.2 源码

?从源码看,在获取元数据时,会做参数判断,判断参数阈值及cte的引用次数

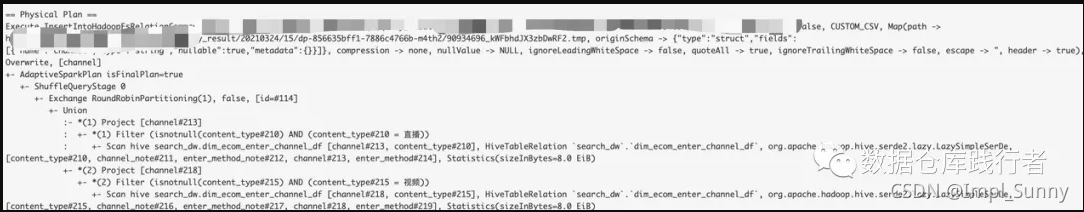

二、spark-sql

spark对cte的操作比较少,在spark侧,现在还没发现有相关的优化参数

with atable as (

SELECT content_type,

channel,

channel_note,

enter_method,

enter_method_note

FROM search_dw.dim_ecom_enter_channel_df

)

select channel from atable WHERE content_type = '直播'

union ALL

select channel from atable WHERE content_type = '视频'

?参考资料:公众号(数据仓库与Python大数据)-《with as 语句真的会把数据存内存嘛?(源码剖析)》

?参考资料:公众号(数据仓库与Python大数据)-《with as 语句真的会把数据存内存嘛?(源码剖析)》