2021SC@SDUSC


HBase Overview

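HBase is a distributed, highly reliable, high-performance, column-oriented, scalable NoSQL database. Hadoop HDFS provides HBase with highly reliable underlying storage, Hadoop MapReduce gives it high-performance computing capability, and ZooKeeper provides stable coordination services and a failover mechanism.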

HBase Cluster Installation

Hadoop Installation and Configuration

- Create the virtual machine hadoop102 and complete its basic configuration

- Install epel-release

    yum install -y epel-release

- Stop the firewall and keep it from starting at boot

    systemctl stop firewalld
    systemctl disable firewalld.service

- Give the user ycx root privileges so that root-level commands can be run later with sudo

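    vim /etc/sudoers
    # add this line after the %wheel line (when several rules match, the last one wins)
    ycx ALL=(ALL) NOPASSWD:ALL

- Create the software and module folders under /opt and change their owner and group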
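The bullet above gives no commands. A minimal sketch, assuming the base directory /opt and the user ycx used throughout this guide; `prepare_opt_dirs` is a hypothetical helper name. The usual manual equivalent is `sudo mkdir /opt/software /opt/module` followed by `sudo chown ycx:ycx /opt/software /opt/module` (assuming a group named ycx exists).

```shell
#!/bin/bash
# Hypothetical helper: create software/ and module/ under a base directory
# and hand both to a regular user (defaults: /opt and ycx, as in this guide).
# Creating directories under /opt needs root, so run it from a root shell.
prepare_opt_dirs() {
  local base="${1:-/opt}"
  local owner="${2:-ycx}"
  local group
  group="$(id -gn "$owner")" || return 1   # the owner's primary group
  mkdir -p "$base/software" "$base/module"
  chown "$owner:$group" "$base/software" "$base/module"
}
```

For example, a script containing this function plus the call `prepare_opt_dirs /opt ycx` can be run with `sudo bash`.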

- Uninstall the JDK bundled with the virtual machine

    rpm -qa | grep -i java | xargs -n1 rpm -e --nodeps

- Clone hadoop103 and hadoop104 from hadoop102

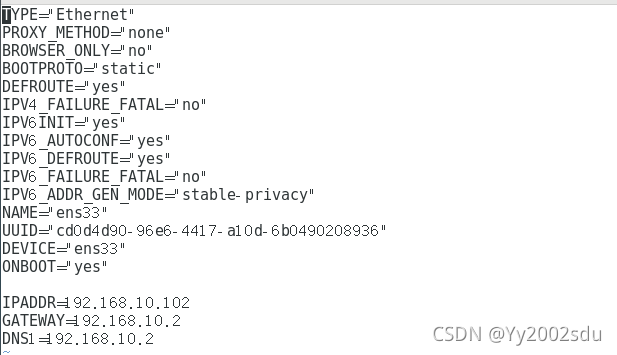

- Configure a static IP address on all three virtual machines (hadoop102 shown as the example)

    vim /etc/sysconfig/network-scripts/ifcfg-ens33

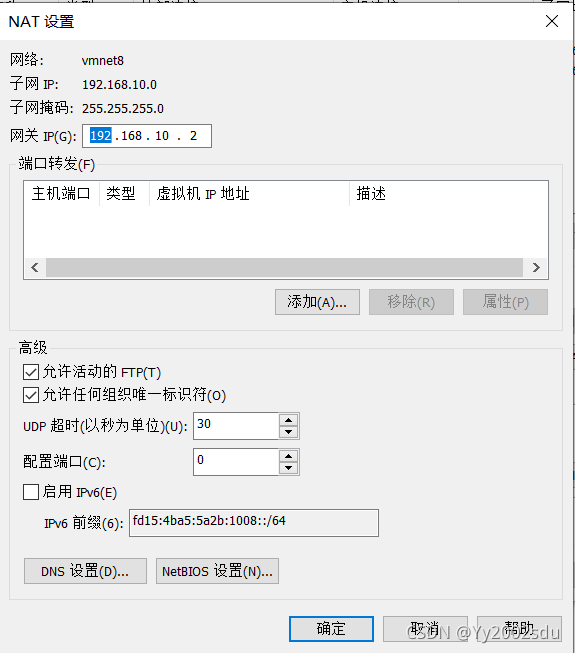

- Configure the VMware Virtual Network Editor

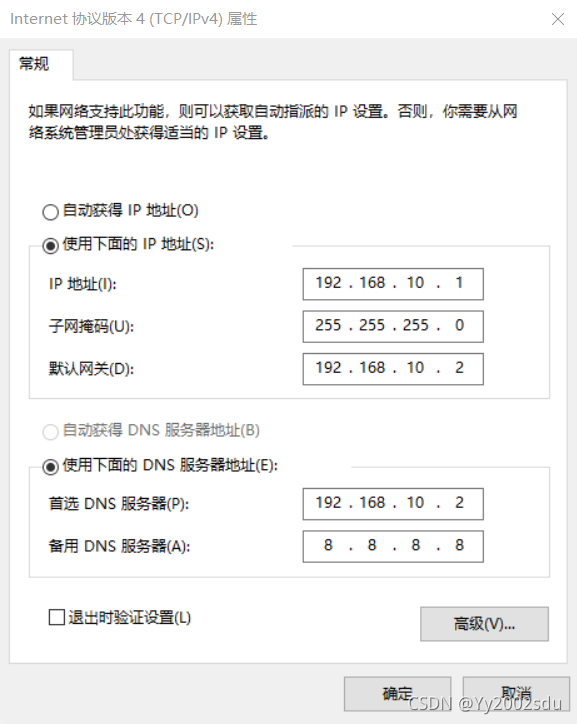

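- Change the IP address of the Windows adapter VMware Network Adapter VMnet8

- Change each machine's host name, add the host mappings to the hosts file on the Linux clones, and add the same mappings to the Windows hosts file

    192.168.10.102 hadoop102
    192.168.10.103 hadoop103
    192.168.10.104 hadoop104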
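A small sketch for producing these mapping lines, assuming the 192.168.10.x addresses listed above; `cluster_hosts_entries` is a hypothetical helper, not part of any tool. On CentOS 7 the host name itself can be set per clone with `hostnamectl set-hostname hadoop103` (and likewise for the others).

```shell
#!/bin/bash
# Hypothetical helper: print "IP hostname" lines for hadoop102..hadoop104.
# The subnet prefix defaults to the one used in the hosts entries above.
cluster_hosts_entries() {
  local prefix="${1:-192.168.10}"
  local n
  for n in 102 103 104; do
    echo "$prefix.$n hadoop$n"
  done
}
```

On each Linux VM the output can be appended with `cluster_hosts_entries | sudo tee -a /etc/hosts`; on Windows the same lines go into C:\Windows\System32\drivers\etc\hosts.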

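- Install Xshell and Xftp, connect them to the three virtual machines, and copy the JDK and Hadoop archives onto the virtual machines

- Install the JDK on hadoop102 and configure its environment variables, then write the xsync distribution script and use it to distribute the JDK to hadoop103 and hadoop104

    tar -zxvf jdk-8u212-linux-x64.tar.gz -C /opt/module/
    sudo vim /etc/profile.d/my_env.sh

Contents of my_env.sh:

    #JAVA_HOME
    export JAVA_HOME=/opt/module/jdk1.8.0_212
    export PATH=$PATH:$JAVA_HOME/bin

Reload the environment:

    source /etc/profile

xsync distribution script:

    #!/bin/bash
    # 1. Require at least one file or directory argument
    if [ $# -lt 1 ]
    then
        echo Not Enough Argument!
        exit 1
    fi
    # 2. Loop over every machine in the cluster
    for host in hadoop102 hadoop103 hadoop104
    do
        echo ==================== $host ====================
        # 3. Loop over every file/directory and send it
        for file in "$@"
        do
            if [ -e "$file" ]
            then
                # 4. Resolve the real parent directory (following symlinks) and the name
                pdir=$(cd -P "$(dirname "$file")"; pwd)
                fname=$(basename "$file")
                ssh $host "mkdir -p $pdir"
                rsync -av "$pdir/$fname" $host:"$pdir"
            else
                echo "$file does not exist!"
            fi
        done
    done

Make the script executable, then distribute the JDK and the environment file:

    chmod +x xsync
    xsync /opt/module/
    sudo ./bin/xsync /etc/profile.d/my_env.sh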

- Install Hadoop on hadoop102, configure its environment variables, and distribute it to hadoop103 and hadoop104

    tar -zxvf hadoop-3.1.3.tar.gz -C /opt/module/
    sudo vim /etc/profile.d/my_env.sh

Append to my_env.sh:

    #HADOOP_HOME
    export HADOOP_HOME=/opt/module/hadoop-3.1.3
    export PATH=$PATH:$HADOOP_HOME/bin
    export PATH=$PATH:$HADOOP_HOME/sbin

Reload the environment and distribute:

    source /etc/profile
    xsync /opt/module/
    sudo ./bin/xsync /etc/profile.d/my_env.sh

- Set up passwordless SSH login among hadoop102, hadoop103 and hadoop104

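On hadoop102:

    ssh-keygen -t rsa
    ssh-copy-id hadoop102
    ssh-copy-id hadoop103
    ssh-copy-id hadoop104

Repeat the same steps on hadoop103: the ResourceManager runs there (yarn.resourcemanager.hostname), so start-yarn.sh is launched on hadoop103 and needs passwordless SSH to every worker.

- Configure the cluster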

All of the following properties go inside the <configuration> element of the corresponding file in /opt/module/hadoop-3.1.3/etc/hadoop/.

core-site.xml:

    <!-- NameNode address -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop102:8020</value>
    </property>
    <!-- Hadoop data storage directory -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-3.1.3/data</value>
    </property>
    <!-- static user for the HDFS web UI -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>ycx</value>
    </property>

hdfs-site.xml:

    <!-- NameNode web UI address -->
    <property>
        <name>dfs.namenode.http-address</name>
        <value>hadoop102:9870</value>
    </property>
    <!-- SecondaryNameNode web UI address -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop104:9868</value>
    </property>

yarn-site.xml:

    <!-- shuffle service used by MapReduce -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- ResourceManager host -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop103</value>
    </property>
    <!-- environment variables passed through to containers -->
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>

mapred-site.xml:

    <!-- run MapReduce jobs on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>

workers:

    hadoop102
    hadoop103
    hadoop104

Distribute the configuration:

    xsync /opt/module/hadoop-3.1.3/etc/hadoop/
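To check which value a node actually loads, Hadoop's own `hdfs getconf -confKey fs.defaultFS` queries the resolved configuration. For a quick look at the raw files (for example after xsync, or on the HBase config files later), a rough text-based helper can also do; `get_xml_prop` is hypothetical and is not a real XML parser.

```shell
#!/bin/bash
# Hypothetical helper: print the <value> of a named property from a
# Hadoop/HBase-style XML file. Plain text matching, not an XML parser:
# assumes each <property> has one <name> and one <value>, values contain
# no '<', and GNU sed (for "\n" in the replacement).
get_xml_prop() {
  local file="$1" key="$2"
  # Join the file into one line, then end a line after each </property>,
  # so each property (name + value) sits on its own line.
  tr -d '\n' < "$file" \
    | sed 's#</property>#</property>\n#g' \
    | grep -F "<name>$key</name>" \
    | sed -n 's#.*<value>\([^<]*\)</value>.*#\1#p' \
    | head -n 1
}
```

For example, `get_xml_prop /opt/module/hadoop-3.1.3/etc/hadoop/yarn-site.xml yarn.resourcemanager.hostname` can be run on each node to confirm the copies agree.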

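- Start the cluster

    # first start only: format the NameNode (on hadoop102)
    hdfs namenode -format
    # on hadoop102, the NameNode host
    sbin/start-dfs.sh
    # on hadoop103, the ResourceManager host
    sbin/start-yarn.sh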
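To see which daemons came up where, a loop that runs `jps` on every node is a common check. Going by the configuration above one would expect the NameNode on hadoop102, the ResourceManager on hadoop103, the SecondaryNameNode on hadoop104, and a DataNode plus NodeManager on all three; the web UIs should be at http://hadoop102:9870 and http://hadoop103:8088 (YARN's default web port). `run_on_all` is a hypothetical helper that assumes the passwordless SSH set up earlier.

```shell
#!/bin/bash
# Hypothetical helper: run one command on every node over SSH and label the
# output per host. SSH can be overridden (e.g. with a stub); default is ssh.
CLUSTER_HOSTS=(hadoop102 hadoop103 hadoop104)

run_on_all() {
  local host
  for host in "${CLUSTER_HOSTS[@]}"; do
    echo "==================== $host ===================="
    "${SSH:-ssh}" "$host" "$@"
  done
}
```

Typical use is `run_on_all jps`; if `jps` is not found in the remote shell, `run_on_all 'source /etc/profile; jps'` loads the environment explicitly.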

ZooKeeper Installation and Configuration

- Download the ZooKeeper package and copy it to the virtual machine with Xftp

- Extract the package

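    tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz -C /opt/module/

- Configure ZooKeeper

    # in /opt/module/apache-zookeeper-3.5.7-bin/conf
    mv zoo_sample.cfg zoo.cfg
    # in /opt/module, matching the dataDir set in zoo.cfg below
    mkdir zkData
    vim zkData/myid

myid contents: 2 on hadoop102, 3 on hadoop103, 4 on hadoop104. Every node needs its own myid file, and its number must match that node's server.N entry in zoo.cfg.

    xsync apache-zookeeper-3.5.7-bin/
    vim zoo.cfg

In zoo.cfg, change dataDir to /opt/module/zkData and append the server list:

    dataDir=/opt/module/zkData
    # server.<myid>=<host>:<follower-to-leader port>:<leader election port>
    server.2=hadoop102:2888:3888
    server.3=hadoop103:2888:3888
    server.4=hadoop104:2888:3888

Then distribute it:

    xsync zoo.cfg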
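The myid values and server lines follow one convention here: hadoop10N gets id N. A sketch that encodes it, assuming every host name ends in a number of the form 10N; the function names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers for the "hadoop10N -> myid N" convention used above.
zk_id_for_host() {
  local num="${1##*[!0-9]}"        # trailing digits, e.g. hadoop102 -> 102
  [ -n "$num" ] || return 1        # host name must end in digits
  echo $(( num - 100 ))            # 102 -> 2
}

# Write this node's id into <data_dir>/myid (host defaults to this machine).
write_myid() {
  local data_dir="$1" host="${2:-$(hostname)}" id
  id="$(zk_id_for_host "$host")" || return 1
  mkdir -p "$data_dir"
  echo "$id" > "$data_dir/myid"
}

# Print the server.N lines for zoo.cfg, one per host given.
zk_server_lines() {
  local host
  for host in "$@"; do
    echo "server.$(zk_id_for_host "$host")=$host:2888:3888"
  done
}
```

On each node, `write_myid /opt/module/zkData` writes that node's id; `zk_server_lines hadoop102 hadoop103 hadoop104 >> zoo.cfg` appends the server list once before distributing zoo.cfg.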

- Start ZooKeeper (on each of the three nodes, from the ZooKeeper installation directory)

    bin/zkServer.sh start
    bin/zkCli.sh
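Because zkServer.sh has to be run on every node, a wrapper that does it over SSH saves some typing. A sketch, assuming the install path from the tar step above and passwordless SSH; `zk_all` is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical wrapper: run zkServer.sh start|stop|status on every node over SSH.
# /etc/profile is sourced in the remote command so JAVA_HOME from my_env.sh is set.
ZK_HOME="${ZK_HOME:-/opt/module/apache-zookeeper-3.5.7-bin}"
ZK_HOSTS=(hadoop102 hadoop103 hadoop104)

zk_all() {
  local action="$1" host
  case "$action" in
    start|stop|status) ;;
    *) echo "usage: zk_all start|stop|status" >&2; return 1 ;;
  esac
  for host in "${ZK_HOSTS[@]}"; do
    echo "---------- zookeeper $action: $host ----------"
    # SSH may be overridden (e.g. with a stub); defaults to ssh.
    "${SSH:-ssh}" "$host" "source /etc/profile; $ZK_HOME/bin/zkServer.sh $action"
  done
}
```

After `zk_all start`, `zk_all status` should report one leader and two followers once the quorum has formed.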

HBase Installation and Configuration

- Download the HBase package and copy it to the virtual machine with Xftp

- Extract the package

    tar -zxvf hbase-2.3.6-bin.tar.gz -C /opt/module

- Configure HBase

hbase-env.sh:

    export JAVA_HOME=/opt/module/jdk1.8.0_212
    # use the external ZooKeeper cluster instead of one managed by HBase
    export HBASE_MANAGES_ZK=false

hbase-site.xml:

    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.master.port</name>
        <value>16000</value>
    </property>
    <!-- classic filesystem WAL instead of the default asyncfs provider -->
    <property>
        <name>hbase.wal.provider</name>
        <value>filesystem</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>hadoop102,hadoop103,hadoop104</value>
    </property>
    <!-- only takes effect when HBase manages its own ZooKeeper -->
    <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/opt/module/zkData</value>
    </property>

regionservers:

    hadoop102
    hadoop103
    hadoop104

Link Hadoop's configuration into HBase's conf directory, then distribute HBase:

    ln -s /opt/module/hadoop-3.1.3/etc/hadoop/core-site.xml /opt/module/hbase-2.3.6/conf/core-site.xml
    ln -s /opt/module/hadoop-3.1.3/etc/hadoop/hdfs-site.xml /opt/module/hbase-2.3.6/conf/hdfs-site.xml
    xsync hbase-2.3.6/
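The hbase-site.xml above does not set hbase.rootdir. Its default points at a local directory under hbase.tmp.dir, so a fully distributed cluster that should keep its data in HDFS usually adds a property along these lines. This fragment is an assumption: the NameNode address is taken from fs.defaultFS in core-site.xml, and the /hbase path is only an example.

```xml
<!-- assumed: store HBase data in HDFS under /hbase (address from fs.defaultFS) -->
<property>
    <name>hbase.rootdir</name>
    <value>hdfs://hadoop102:8020/hbase</value>
</property>
```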

- Start HBase (HDFS and ZooKeeper must already be running)

    bin/start-hbase.sh

HBase Source Code Download

- Open the HBase download page: https://hbase.apache.org/downloads.html

- Download the source package of version 2.3.6

- Extract the archive and build it with Maven

    mvn clean compile package -DskipTests

- Import the project into IntelliJ IDEA and configure it

- Copy the files in the conf directory into the resources directories of hbase-server and hbase-shell
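Copying conf into the module resources presumably puts hbase-site.xml on the classpath when HBase is launched from IDEA. A sketch of the copy, assuming it is run from the extracted source root and that each module uses the standard Maven layout src/main/resources; `copy_conf_to_resources` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: copy <root>/conf/* into the Maven resources directory
# of hbase-server and hbase-shell (assumed layout: <module>/src/main/resources).
copy_conf_to_resources() {
  local root="${1:-.}" module dest
  [ -d "$root/conf" ] || { echo "no conf directory under $root" >&2; return 1; }
  for module in hbase-server hbase-shell; do
    dest="$root/$module/src/main/resources"
    mkdir -p "$dest"
    cp -r "$root"/conf/* "$dest"/
  done
}
```

For example, `copy_conf_to_resources ~/hbase-2.3.6` if the source tree was extracted there (the path is only an illustration).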

Division of Work

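I am responsible for analyzing the source code of HBase's data read and write paths; the assignment may be adjusted as the project progresses.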