After setting up a Hadoop cluster, running `hadoop fs -put` failed on one of the nodes with the following port-related error:

```
location:org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1495)
throwable:java.io.IOException: Got error, status message , ack with firstBadLink as ip:port
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:142)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1482)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1385)
    at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)
```
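The `firstBadLink` field in this exception names the first DataNode in the write pipeline that the client could not reach, as `ip:port`, so that is the node and port to investigate. A minimal sketch for pulling it out of a log line, assuming the error text is fed on stdin; the function name `first_bad_link` is hypothetical:

```shell
# Hypothetical helper: print the "ip:port" that follows "firstBadLink as"
# in a DFSOutputStream error line read from stdin.
first_bad_link() {
  # -n plus the trailing p flag: print only lines where the substitution matched
  sed -n 's/.*firstBadLink as \([^[:space:]]*\).*/\1/p'
}
```

For example, `grep firstBadLink client.log | first_bad_link`, where `client.log` is a placeholder for wherever the client output was saved.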

First, check whether the firewall is stopped on all three nodes:

```shell
firewall-cmd --state
```
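To avoid logging in to each machine separately, the check can be looped over the cluster from one node. A sketch assuming passwordless SSH to every node; the hostnames `node1`, `node2`, `node3` and the function name are hypothetical placeholders:

```shell
# Hypothetical hostnames; replace them with your cluster's three nodes.
NODES="node1 node2 node3"

# Print each node's firewalld state ("running" or "not running").
# Assumes passwordless SSH from this machine to every node.
check_firewall_all() {
  local node state
  for node in $NODES; do
    # firewall-cmd exits non-zero when firewalld is not running, so capture
    # both output streams and ignore the exit status.
    state=$(ssh "$node" firewall-cmd --state 2>&1) || true
    echo "$node: $state"
  done
}
```

Every line should read `not running` before moving on.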

Command to stop the firewall:

```shell
systemctl stop firewalld.service
```
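Note that `systemctl stop` only lasts until the next reboot; `systemctl disable` keeps firewalld from starting again at boot. A sketch that applies both on every node, assuming passwordless SSH as a user allowed to run `systemctl` (typically root); the hostnames and function name are hypothetical:

```shell
# Hypothetical hostnames; replace them with your cluster's three nodes.
NODES="node1 node2 node3"

# Stop firewalld now and prevent it from starting again at boot.
disable_firewall_all() {
  local node
  for node in $NODES; do
    echo "== $node"
    ssh "$node" 'systemctl stop firewalld.service && systemctl disable firewalld.service' \
      || echo "failed on $node" >&2
  done
}
```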

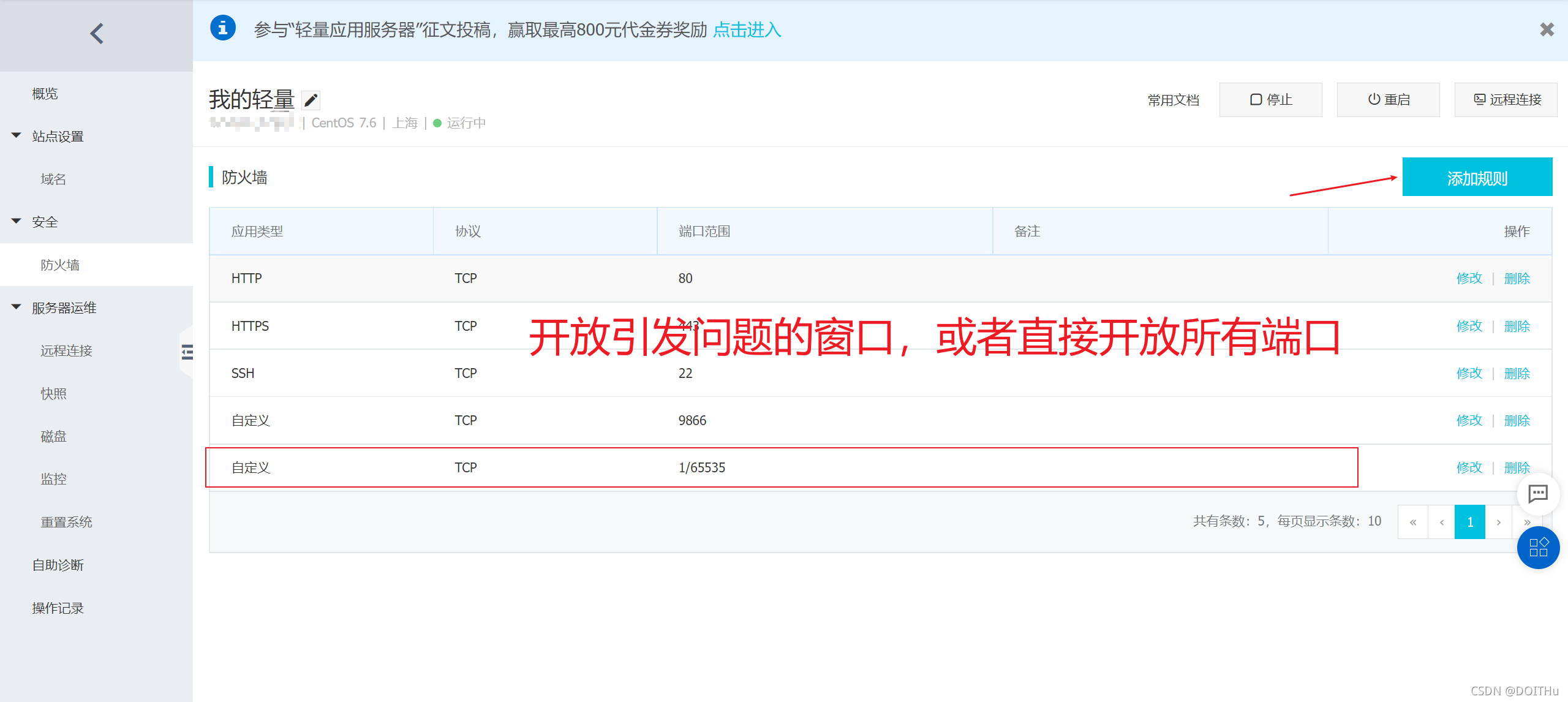

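If the error persists after the firewall has been stopped, the cloud server's port is probably not open to external traffic. Using Alibaba Cloud as an example (Tencent Cloud opens all ports by default), the port has to be allowed in the instance's security group: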

After the port is opened, run the command again and it succeeds.
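Before re-running `hadoop fs -put`, it can help to confirm from another node that the `ip:port` reported by `firstBadLink` now accepts TCP connections. A minimal sketch using bash's `/dev/tcp` pseudo-device and coreutils `timeout`; the function name `port_open` is hypothetical:

```shell
# Hypothetical helper: succeed if a TCP connection to host:port can be
# opened within 3 seconds, fail otherwise.
port_open() {
  local host=$1 port=$2
  # The inner bash opens fd 3 on /dev/tcp/host/port; timeout kills it if the
  # connection attempt hangs (e.g. packets silently dropped by a firewall).
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

For example, `port_open 192.168.1.12 50010 && echo open || echo closed`, with the address taken from the error message (the IP here is a placeholder). If the port is unknown, `hdfs getconf -confKey dfs.datanode.address` prints the configured DataNode data-transfer address; its default port is 50010 in Hadoop 2.x and 9866 in Hadoop 3.x.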