Download

https://github.com/alibaba/DataX

Deployment

Prerequisite: Python 2

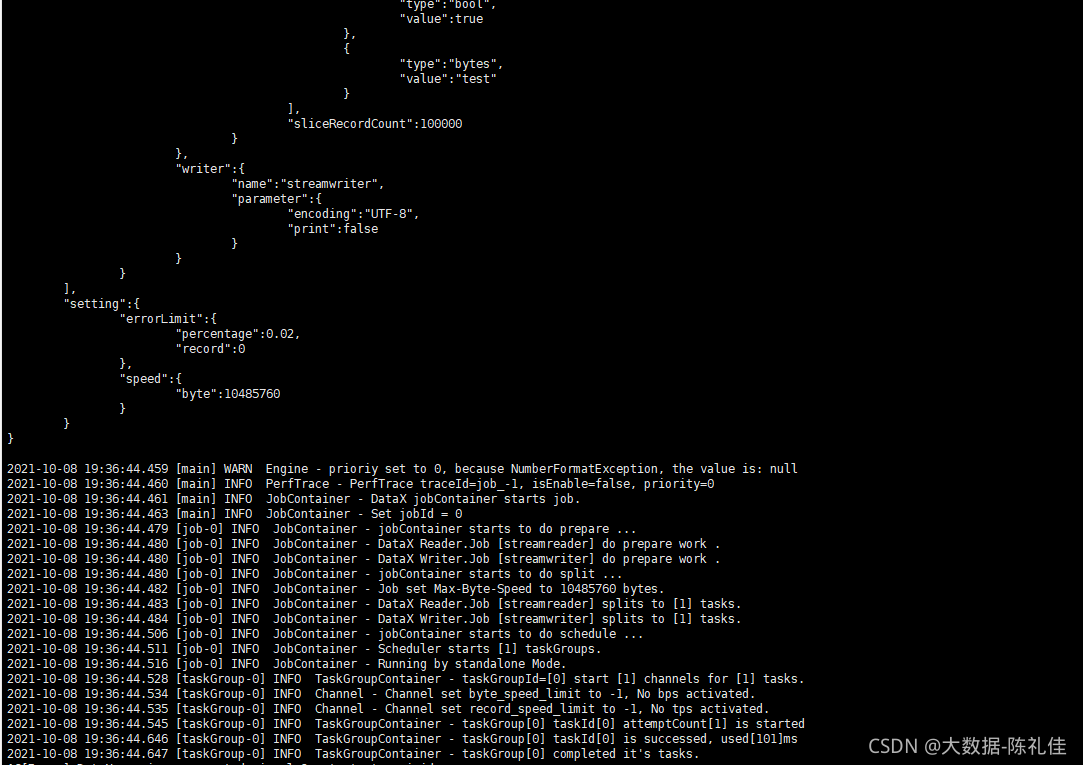

After extracting the archive, run the self-check job:

./bin/datax.py ./job/job.json

If a screen like the following appears, the installation is OK.

Usage

Importing MySQL data into HDFS

Edit the mysql2hdfs.json file:

cd datax/job

vim mysql2hdfs.json

{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "mysqlreader",
                    "parameter": {
                        "column": [
                            "id",
                            "arth_type",
                            "content"
                        ],
                        "connection": [
                            {
                                "jdbcUrl": [
                                    "jdbc:mysql://10.20.8.86:13306/test_db"
                                ],
                                "table": [
                                    "test_in"
                                ]
                            }
                        ],
                        "password": "123456",
                        "username": "clj"
                    }
                },
                "writer": {
                    "name": "hdfswriter",
                    "parameter": {
                        "column": [
                            {
                                "name": "id",
                                "type": "int"
                            },
                            {
                                "name": "arth_type",
                                "type": "string"
                            },
                            {
                                "name": "content",
                                "type": "string"
                            }
                        ],
                        "defaultFS": "hdfs://sentiment",
                        "hadoopConfig": {
                            "dfs.nameservices": "sentiment",
                            "dfs.ha.namenodes.sentiment": "namenode1,namenode2",
                            "dfs.namenode.rpc-address.sentiment.namenode1": "clj-mr-m1:8020",
                            "dfs.namenode.rpc-address.sentiment.namenode2": "clj-mr-m2:8020",
                            "dfs.client.failover.proxy.provider.sentiment": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
                        },
                        "fieldDelimiter": "\t",
                        "fileName": "keyword.txt",
                        "fileType": "text",
                        "path": "/datax/test_in",
                        "writeMode": "append"
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": "3"
            }
        }
    }
}
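DataX hands reader columns to the writer by position, so the two column lists in a job file like the one above should line up. The following is a minimal sketch of a pre-flight check, not part of DataX itself. The function names `check_columns` and `check_job_file` are hypothetical, and the name comparison is only a hint, because reader columns may legitimately be expressions or aliases.

```python
import json


def check_columns(job):
    """Return a list of problem descriptions; an empty list means nothing suspicious was found."""
    problems = []
    for index, item in enumerate(job["job"]["content"]):
        # mysqlreader lists plain column names; hdfswriter lists {"name", "type"} objects.
        reader_cols = item["reader"]["parameter"].get("column", [])
        writer_cols = [col["name"] for col in item["writer"]["parameter"].get("column", [])]
        if len(reader_cols) != len(writer_cols):
            problems.append(
                "content[{0}]: reader has {1} columns, writer has {2}".format(
                    index, len(reader_cols), len(writer_cols)
                )
            )
            continue
        # Same count: flag positions where the names differ (a hint, not necessarily an error).
        for position, (src, dst) in enumerate(zip(reader_cols, writer_cols)):
            if src != dst:
                problems.append(
                    "content[{0}] column {1}: reader '{2}' vs writer '{3}'".format(
                        index, position, src, dst
                    )
                )
    return problems


def check_job_file(path):
    """Load a DataX job file and run check_columns on it."""
    with open(path) as handle:
        return check_columns(json.load(handle))
```

Calling `check_job_file("mysql2hdfs.json")` before launching the job returns an empty list when the reader and writer columns agree.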

Notes:

If the MySQL server is a relatively new version, replace the JDBC driver JAR in the following directory:

plugin/reader/mysqlreader/libs/
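The driver swap can be scripted. The sketch below assumes that the bundled driver file matches the pattern `mysql-connector-*.jar`, which may not hold for every DataX build. The function name `replace_mysql_driver` and the backup directory are hypothetical. Old JARs are moved aside rather than deleted so the change can be undone.

```python
import glob
import os
import shutil


def replace_mysql_driver(libs_dir, new_jar, backup_dir):
    """Move existing MySQL connector JARs out of libs_dir, then copy new_jar in.

    Returns the file names that were moved to backup_dir.
    """
    if not os.path.isfile(new_jar):
        raise ValueError("new driver JAR not found: " + new_jar)
    if not os.path.isdir(backup_dir):
        os.makedirs(backup_dir)
    moved = []
    # Assumption: the bundled driver is named mysql-connector-*.jar.
    for old_jar in sorted(glob.glob(os.path.join(libs_dir, "mysql-connector-*.jar"))):
        name = os.path.basename(old_jar)
        shutil.move(old_jar, os.path.join(backup_dir, name))
        moved.append(name)
    shutil.copy(new_jar, libs_dir)
    return moved
```

Leaving two connector versions side by side in the plugin's libs directory can cause class conflicts, which is why the sketch removes the old JAR before copying the new one.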

Before running hdfswriter, create the Hive external table in advance:

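-- The field delimiter must match fieldDelimiter ("\t") in the hdfswriter config;
-- Hive's default delimiter for text files is \001, not a tab.
create external table test_in(
    `id` bigint,
    `arth_type` string,
    `content` string
)
row format delimited fields terminated by '\t'
stored as textfile
location '/datax/test_in';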
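To keep the table definition and the writer config from drifting apart, the DDL can be derived from the hdfswriter `parameter` block. This is a minimal sketch under two assumptions: the writer's type names are reused directly as Hive types, and only a tab delimiter needs escaping. `build_hive_ddl` is a hypothetical helper, not a DataX or Hive API.

```python
def build_hive_ddl(table, writer_parameter):
    """Build a create-external-table statement from an hdfswriter parameter dict."""
    columns = ",\n".join(
        "    `{0}` {1}".format(col["name"], col["type"])
        for col in writer_parameter["column"]
    )
    # Render a literal tab as the two-character escape \t that Hive expects in DDL.
    delimiter = writer_parameter["fieldDelimiter"].replace("\t", "\\t")
    return (
        "create external table {table}(\n"
        "{columns}\n"
        ")\n"
        "row format delimited fields terminated by '{delimiter}'\n"
        "stored as textfile\n"
        "location '{path}';"
    ).format(
        table=table,
        columns=columns,
        delimiter=delimiter,
        path=writer_parameter["path"],
    )
```

Passing the writer's `parameter` object from mysql2hdfs.json and the table name `test_in` produces a statement whose delimiter and location follow the job file. The `id` column then takes the writer's `int` type instead of `bigint`.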

For a high-availability (HA) HDFS cluster, pay attention to the following settings:

"defaultFS": "hdfs://sentiment",
"hadoopConfig": {
    "dfs.nameservices": "sentiment",
    "dfs.ha.namenodes.sentiment": "namenode1,namenode2",
    "dfs.namenode.rpc-address.sentiment.namenode1": "clj-mr-m1:8020",
    "dfs.namenode.rpc-address.sentiment.namenode2": "clj-mr-m2:8020",
    "dfs.client.failover.proxy.provider.sentiment": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
},
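The HA keys follow a fixed naming pattern: the nameservice ID appears in `defaultFS` and as a suffix of each `dfs.*` key, and every NameNode ID gets its own `rpc-address` entry. The sketch below generates the fragment from those inputs. `ha_hdfs_settings` is a hypothetical helper for illustration only.

```python
def ha_hdfs_settings(nameservice, namenodes):
    """Build the defaultFS and hadoopConfig entries for an HA HDFS nameservice.

    namenodes is an ordered list of (namenode_id, "host:port") pairs.
    """
    hadoop_config = {
        "dfs.nameservices": nameservice,
        "dfs.ha.namenodes." + nameservice: ",".join(nn_id for nn_id, _ in namenodes),
        "dfs.client.failover.proxy.provider." + nameservice:
            "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
    }
    # One RPC address entry per NameNode, keyed by nameservice and NameNode ID.
    for nn_id, address in namenodes:
        key = "dfs.namenode.rpc-address.{0}.{1}".format(nameservice, nn_id)
        hadoop_config[key] = address
    return {"defaultFS": "hdfs://" + nameservice, "hadoopConfig": hadoop_config}
```

For the cluster in this example, `ha_hdfs_settings("sentiment", [("namenode1", "clj-mr-m1:8020"), ("namenode2", "clj-mr-m2:8020")])` yields the same keys and values as the fragment above.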

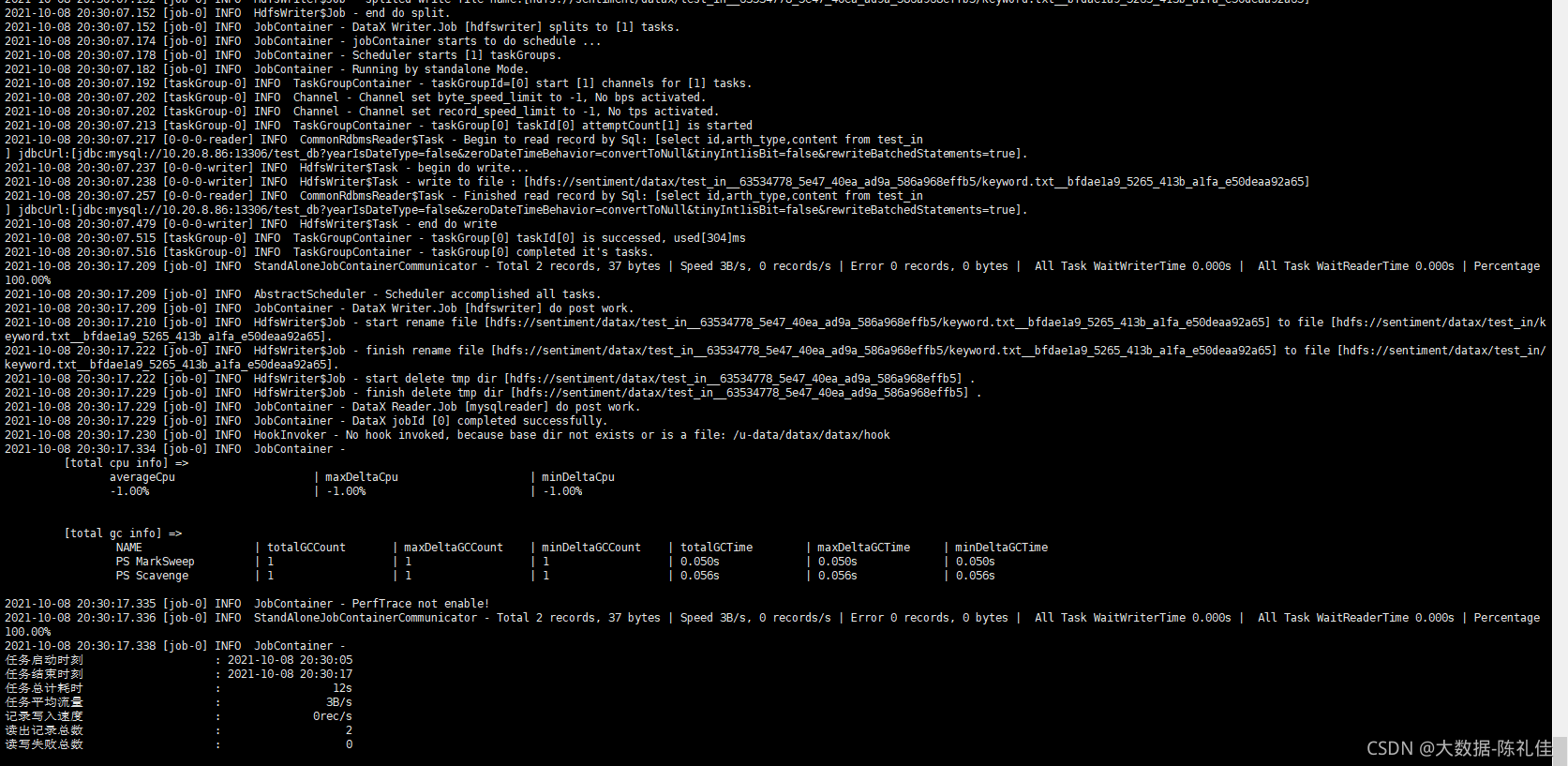

Sign that the synchronization has finished: