Reading MySQL with Spark

import org.apache.spark.SparkConf;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SparkSession;

import java.util.Properties;

public class FromMySQL {

public static void main(String[] args) {

SparkConf conf = new SparkConf().setMaster("local[2]").setAppName("spark_mysql");

// Create the SparkSession

SparkSession sparkSession = SparkSession.builder().config(conf).getOrCreate();

// Create a Properties object holding the JDBC connection settings

Properties properties = new Properties();

properties.setProperty("user", "root"); // username

properties.setProperty("password", "123456"); // password

properties.setProperty("driver", "com.mysql.cj.jdbc.Driver");

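// Set the maximum number of partitions (also caps concurrent JDBC connections).
// Note: to actually split a read, Spark also needs partitionColumn, lowerBound, and upperBound.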

properties.setProperty("numPartitions","2");

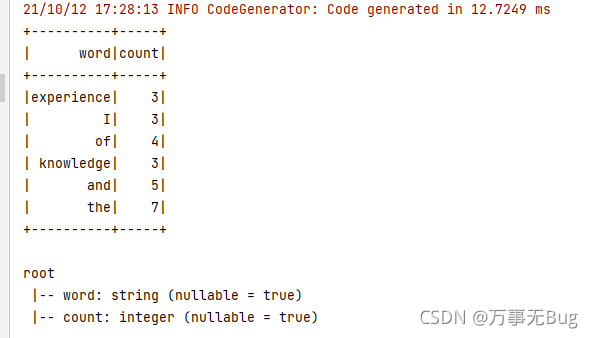

Dataset<Row> words1 = sparkSession.read().jdbc("jdbc:mysql://localhost:3306/test", "words1", properties).select("word","count").where("count>=3");

// Print the data

words1.show();

// Print the table schema

words1.printSchema();

sparkSession.stop();

}

}
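
A note on numPartitions: when only numPartitions is set, as in the listing above, Spark generally reads the table through a single partition. Splitting the read across partitions also requires a numeric partition column plus a lower and an upper bound. The sketch below is a simplified, JDK-only illustration of how such a range could be turned into one WHERE predicate per partition. It is not Spark's actual implementation, and the names PartitionSketch, predicates, and the id column are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class PartitionSketch {

    // Turns the range [lowerBound, upperBound) on a numeric column into
    // one WHERE predicate per partition. Simplified illustration only.
    static List<String> predicates(String column, long lowerBound, long upperBound, int numPartitions) {
        List<String> result = new ArrayList<>();

        // Never use more partitions than there are distinct values in the range.
        long n = Math.min((long) numPartitions, upperBound - lowerBound);
        if (n <= 1) {
            result.add("1 = 1"); // no filter: a single partition scans the whole table
            return result;
        }

        long stride = (upperBound - lowerBound) / n;
        long boundary = lowerBound;
        for (long i = 0; i < n; i++) {
            String lower = (i == 0) ? null : column + " >= " + boundary;
            boundary += stride;
            String upper = (i == n - 1) ? null : column + " < " + boundary;

            if (lower == null) {
                // First partition is open-ended below and also picks up NULLs.
                result.add(upper + " OR " + column + " IS NULL");
            } else if (upper == null) {
                // Last partition is open-ended above.
                result.add(lower);
            } else {
                result.add(lower + " AND " + upper);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical example: split ids 0..100 into 4 partitions.
        for (String p : predicates("id", 0, 100, 4)) {
            System.out.println(p);
        }
    }
}
```

The first and last predicates are open-ended, so the bounds only decide where the range is cut and do not filter out rows. In Spark itself, the corresponding read would go through the DataFrameReader overload jdbc(url, table, columnName, lowerBound, upperBound, numPartitions, connectionProperties), with a numeric column of the table chosen as columnName.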

Output of running FromMySQL: