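Prerequisites:

Hadoop 2.7.3 installed on Linux

Maven installed on Windows

IntelliJ IDEA installed on Windows

Maven configured in IDEA

A new Maven project created

Create an HDFS package and an App class

Edit pom.xml to add the Hadoop dependencies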

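Add the following inside the `<dependencies>` element, just before the `</dependencies>` line. If pom.xml has no `<dependencies>` element yet, create one: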

    <!-- Hadoop dependencies -->
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.7.3</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.7.3</version>
    </dependency>

If the Hadoop packages still cannot be imported, also add:

    <dependency>
        <groupId>jdk.tools</groupId>
        <artifactId>jdk.tools</artifactId>
        <version>1.8</version>
        <scope>system</scope>
        <!--suppress UnresolvedMavenProperty -->
        <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
    </dependency>
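The `jdk.tools` entry points Maven at `${JAVA_HOME}/lib/tools.jar`. Only JDK 8 and earlier ship that file; it was removed in JDK 9. If IDEA still reports the dependency as missing, a small check like the one below can confirm whether the file exists. This is a sketch outside the tutorial's project, and it assumes your setup relies on the `JAVA_HOME` environment variable. The class name `ToolsJarCheck` is illustrative.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class ToolsJarCheck {

    // Builds <javaHome>/lib/tools.jar, the same location the systemPath element refers to
    static Path toolsJarPath(String javaHome) {
        return Paths.get(javaHome, "lib", "tools.jar");
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        if (javaHome == null) {
            System.out.println("JAVA_HOME is not set");
            return;
        }
        Path jar = toolsJarPath(javaHome);
        // tools.jar exists in JDK 8 and earlier; JDK 9+ no longer has it
        System.out.println(jar + (Files.exists(jar) ? " exists" : " is missing"));
    }
}
```

If it reports the file as missing, point `JAVA_HOME` at a JDK 8 installation (not a JRE).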

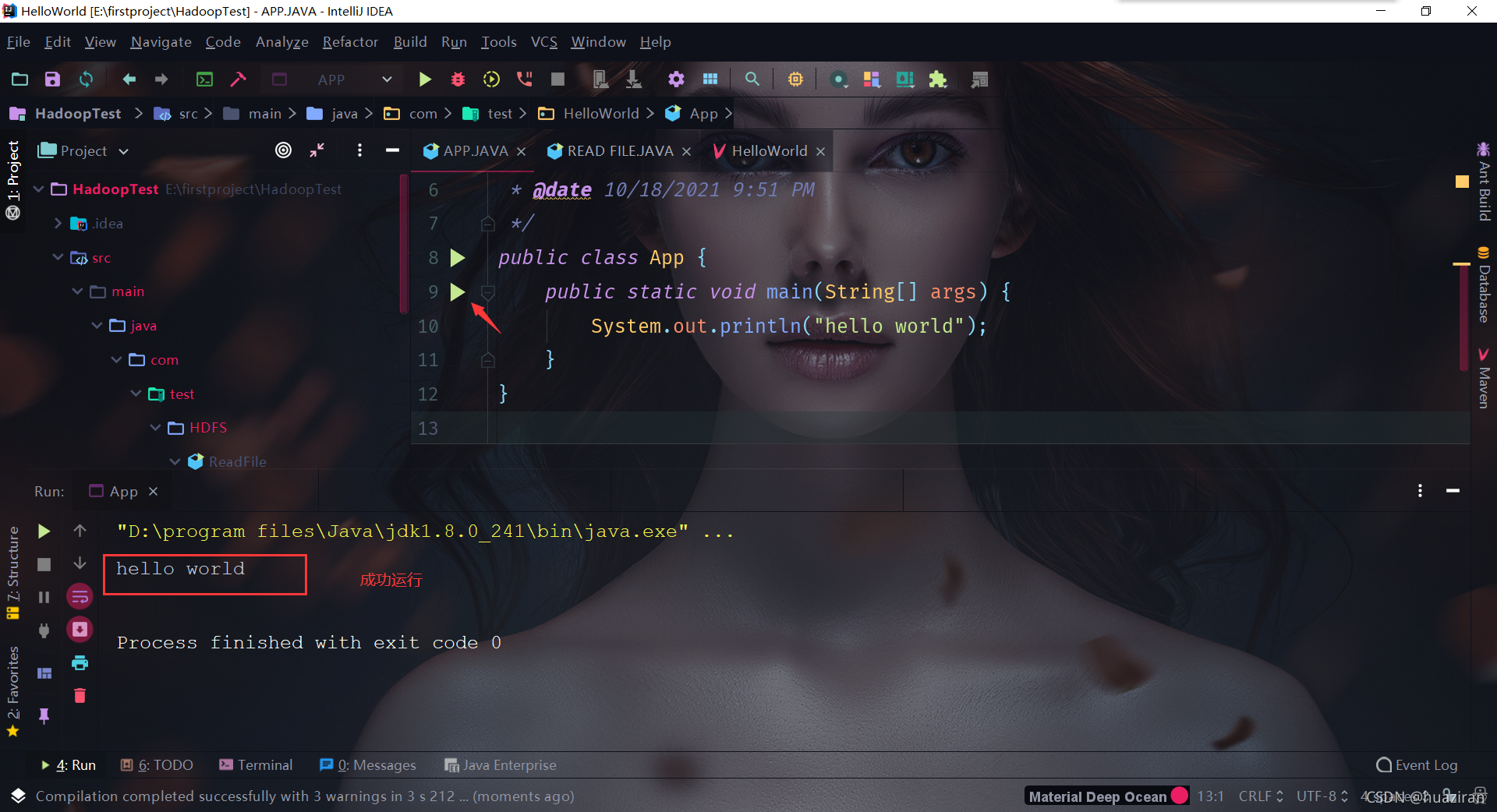

Add the following code to the App class:

    package com.test.HelloWorld;

    /**
     * @author CreazyHacking
     * @version 1.0
     * @date 10/18/2021 9:51 PM
     */
    public class App {
        public static void main(String[] args) {
            System.out.println("hello world");
        }
    }

Run the App class to check that the project builds and runs.

Now on to HDFS API programming.

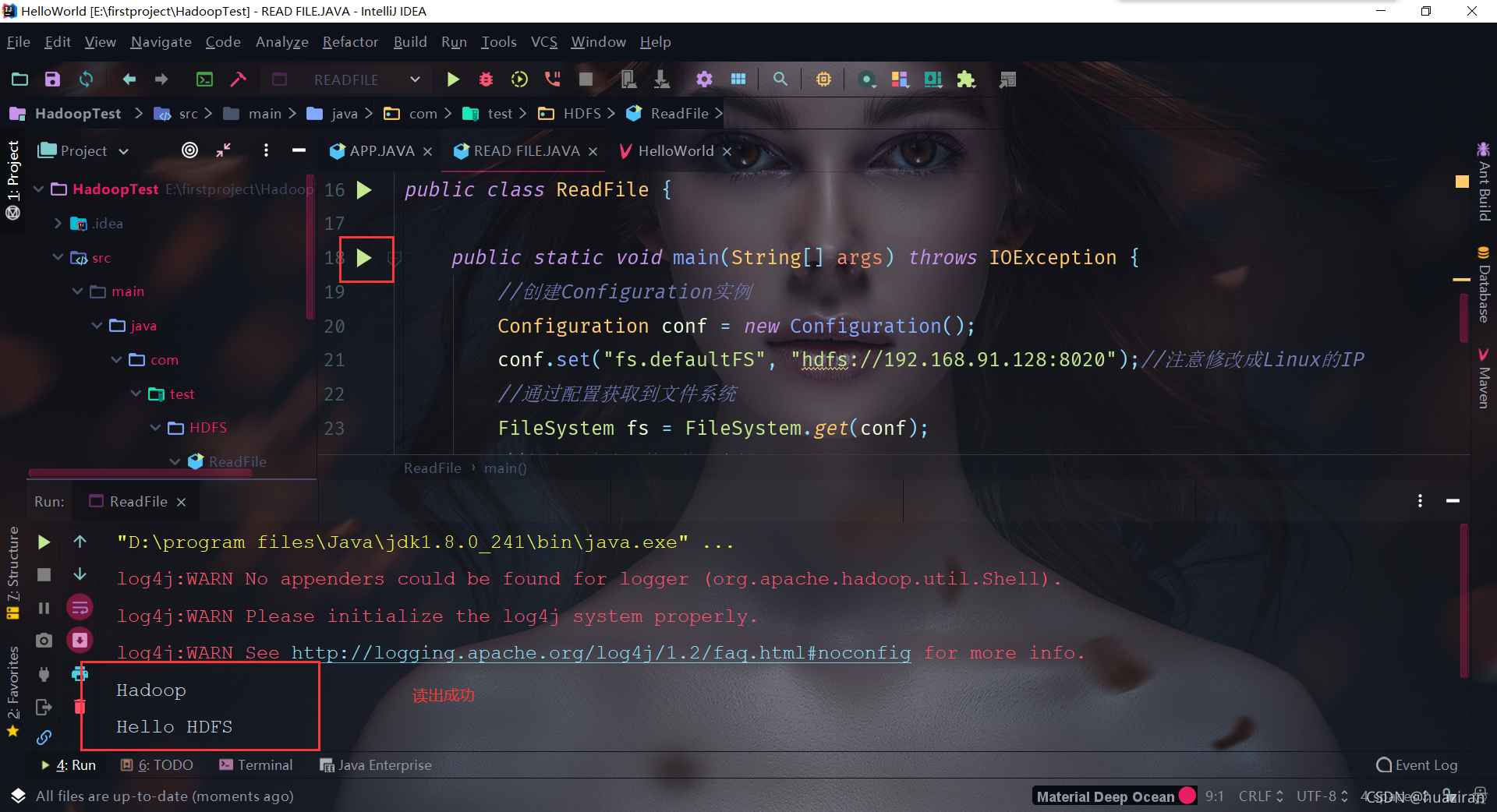

1. Read an HDFS file

Create a new class, ReadFile.java, with the following code:

    package com.test.HDFS;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    /**
     * @author CreazyHacking
     * @version 1.0
     * @date 10/18/2021 10:07 PM
     */
    public class ReadFile {
        public static void main(String[] args) throws IOException {
            // Create a Configuration instance
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.91.128:8020"); // change to your Linux machine's IP
            // Get the file system from the configuration
            FileSystem fs = FileSystem.get(conf);
            // HDFS path of the file to read
            Path src = new Path("hdfs://192.168.91.128:8020/file.txt"); // change to your Linux machine's IP
            // open() returns an input stream; try-with-resources closes it when done
            try (FSDataInputStream dis = fs.open(src)) {
                // Copy the stream to the console; close=false keeps System.out open
                IOUtils.copyBytes(dis, System.out, 4096, false);
            }
        }
    }

Note: replace 192.168.91.128 with the IP of your own Linux machine.
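Every class in this tutorial repeats the NameNode address as a string literal, so it is easy to miss one when your IP changes. One option is to build the URI in a single place. The sketch below is hypothetical: the class name `NameNodeAddress` and the `hdfs.host` system property are made up for illustration. Port 8020 is simply the port used throughout this tutorial.

```java
import java.net.URI;

public class NameNodeAddress {

    // The tutorial's example address; replace it with your own Linux machine's IP
    static final String DEFAULT_HOST = "192.168.91.128";
    // NameNode port used throughout the tutorial
    static final int DEFAULT_PORT = 8020;

    // Builds "hdfs://host:port" once instead of repeating the literal in every class
    static URI nameNodeUri(String host, int port) {
        return URI.create("hdfs://" + host + ":" + port);
    }

    public static void main(String[] args) {
        // "hdfs.host" is a made-up system property: run with -Dhdfs.host=... to override
        String host = System.getProperty("hdfs.host", DEFAULT_HOST);
        URI uri = nameNodeUri(host, DEFAULT_PORT);
        // Usable as conf.set("fs.defaultFS", uri.toString()) or FileSystem.get(uri, conf, user)
        System.out.println(uri);
    }
}
```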

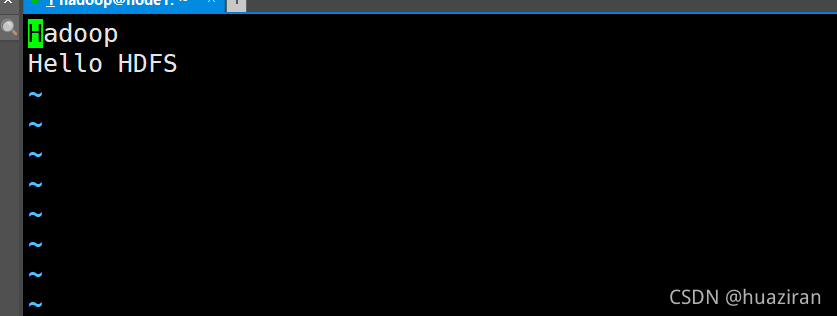

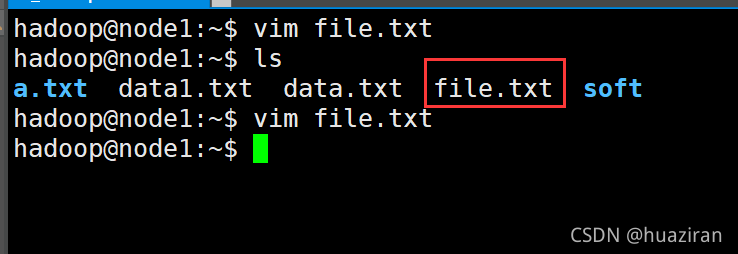

Before running the program, create the test file file.txt:

$ vim file.txt

Enter the following two lines, then save and quit with :wq.

Hello Hadoop

Hello HDFS

Upload file.txt to the HDFS root directory /:

$ hdfs dfs -put file.txt /

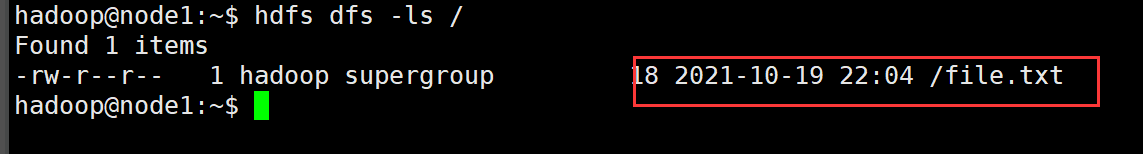

Check that the upload succeeded:

$ hdfs dfs -ls /

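Run ReadFile. The contents of file.txt are printed to the console.

The red log4j messages are only warnings. They don't affect reading the HDFS file and can be ignored for now.

The program above reads the contents of an HDFS file. Next, the API can be used to write, upload, and download files, among other things.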
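Under the hood, `IOUtils.copyBytes` is essentially a buffered read/write loop. Below is a plain-JDK sketch of that idea (not Hadoop's own source). It runs against an in-memory copy of file.txt, so it works without a cluster. Like Hadoop's `copyBytes(in, out, buffSize, false)`, it leaves both streams open. By contrast, the three-argument `copyBytes(in, out, conf)` overload closes them, including `System.out`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

public class CopyLoop {

    // Buffered read/write loop; returns the number of bytes copied.
    // Neither stream is closed here: that is left to the caller.
    static long copy(InputStream in, OutputStream out, int bufSize) throws IOException {
        byte[] buf = new byte[bufSize];
        long total = 0;
        int n;
        while ((n = in.read(buf)) != -1) {
            out.write(buf, 0, n);
            total += n;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "Hello Hadoop\nHello HDFS\n".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        // A tiny 4-byte buffer forces several iterations of the loop
        long n = copy(new ByteArrayInputStream(data), out, 4);
        System.out.print(new String(out.toByteArray(), StandardCharsets.UTF_8));
        System.out.println(n + " bytes copied");
    }
}
```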

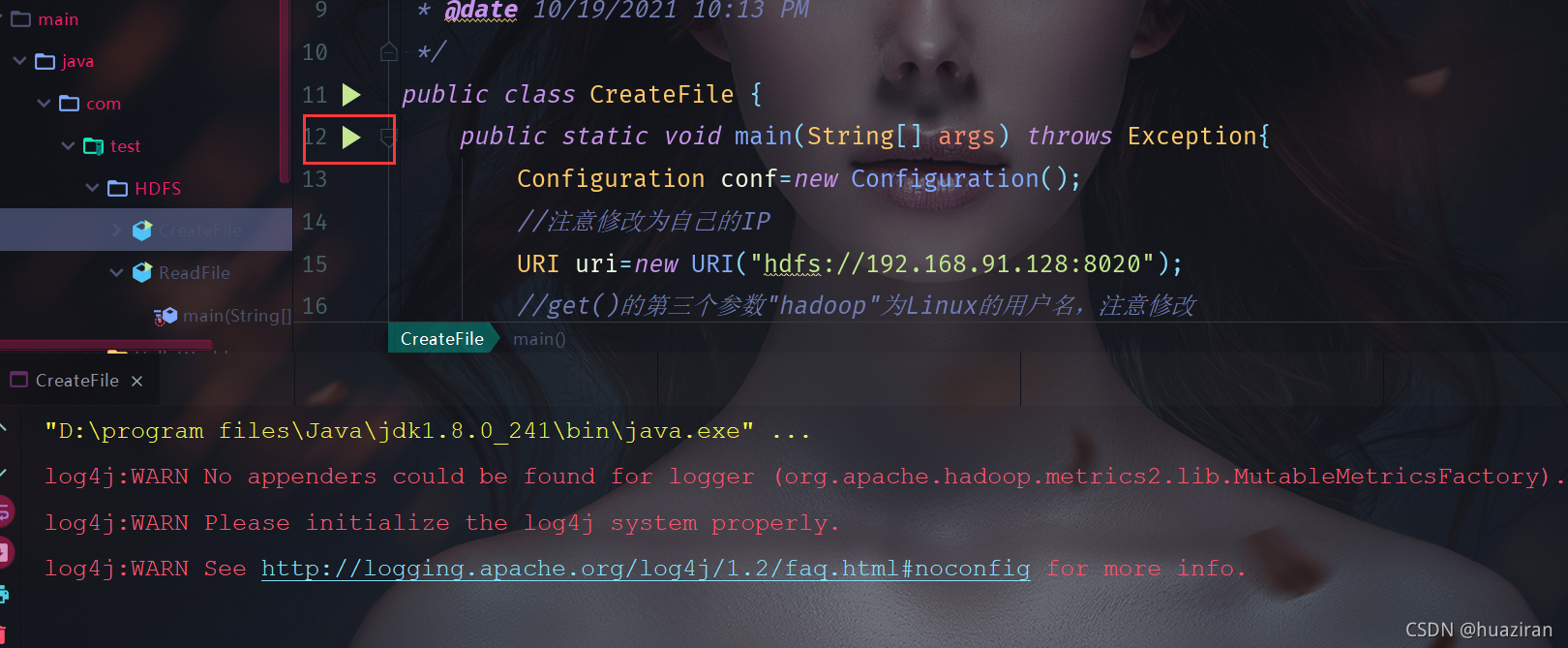

2. Create and write a file

Create a new class, CreateFile.java, with the following code:

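    package com.test.HDFS;

    import org.apache.hadoop.conf.*;
    import org.apache.hadoop.fs.*;

    import java.net.URI;
    import java.nio.charset.StandardCharsets;

    /**
     * @author CreazyHacking
     * @version 1.0
     * @date 10/19/2021 10:13 PM
     */
    public class CreateFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // change to your own IP
            URI uri = new URI("hdfs://192.168.91.128:8020");
            // the third argument of get(), "hadoop", is the Linux user name; change it to yours
            FileSystem fs = FileSystem.get(uri, conf, "hadoop");
            // path of the file to create
            Path dfs = new Path("/user/hadoop/1.txt");
            // true = overwrite the file if it already exists; the stream is closed automatically
            try (FSDataOutputStream fos = fs.create(dfs, true)) {
                // writeBytes keeps only the low byte of each char: fine for ASCII, breaks Chinese
                fos.writeBytes("hello hdfs\n");
                // encode non-ASCII text as UTF-8 (writeUTF would prepend a 2-byte length to the file)
                fos.write("你好啊!\n".getBytes(StandardCharsets.UTF_8));
            }
        }
    }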
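`FSDataOutputStream` extends `java.io.DataOutputStream`, so the encoding behaviour of its write methods can be checked locally with a `ByteArrayOutputStream`. The sketch below counts the bytes each approach produces for 你好 followed by a newline. Here is what each method does:

- `writeBytes` keeps one byte per character, so Chinese is mangled.
- `writeUTF` prepends a 2-byte length before its modified UTF-8 data, and those two bytes end up in the file.
- `getBytes(StandardCharsets.UTF_8)` produces plain UTF-8.

The class name `WriteModes` is illustrative.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class WriteModes {

    // Bytes produced by writeBytes: one per char, high 8 bits discarded
    static byte[] viaWriteBytes(String s) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        new DataOutputStream(buf).writeBytes(s);
        return buf.toByteArray();
    }

    // Bytes produced by writeUTF: 2-byte big-endian length, then modified UTF-8
    static byte[] viaWriteUTF(String s) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        new DataOutputStream(buf).writeUTF(s);
        return buf.toByteArray();
    }

    // Plain UTF-8, which a text reader such as hdfs dfs -cat displays cleanly
    static byte[] viaUtf8(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        String s = "\u4f60\u597d\n"; // the two Chinese characters for "hello", plus a newline
        System.out.println("writeBytes: " + viaWriteBytes(s).length + " bytes");
        System.out.println("writeUTF:   " + viaWriteUTF(s).length + " bytes");
        System.out.println("UTF-8:      " + viaUtf8(s).length + " bytes");
    }
}
```

For this string, the three counts are 3, 9 and 7 bytes respectively.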

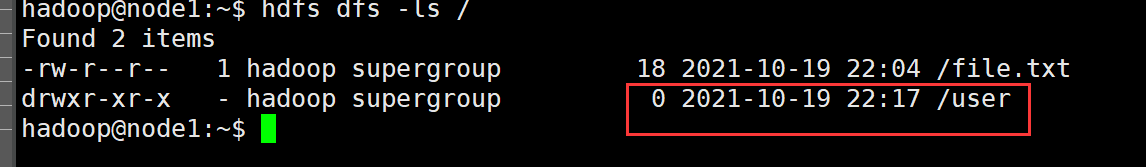

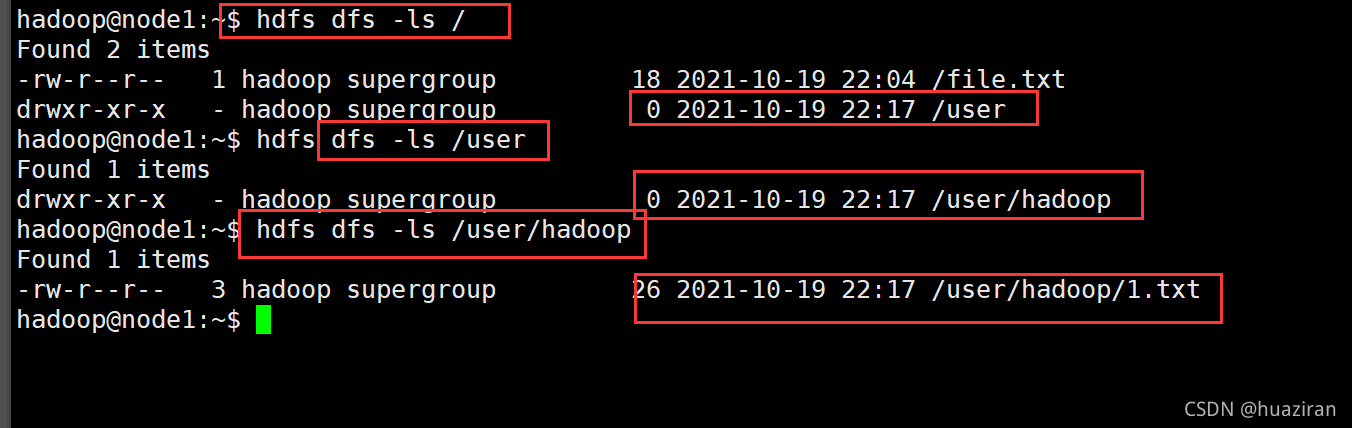

运行

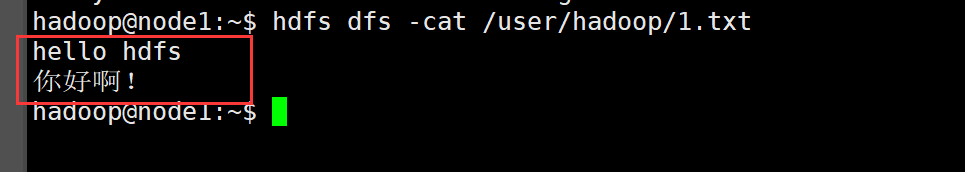

查看文件

hdfs dfs -ls /

命令查看

hdfs dfs -cat /user/hadoop/1.txt

成功创建与写入

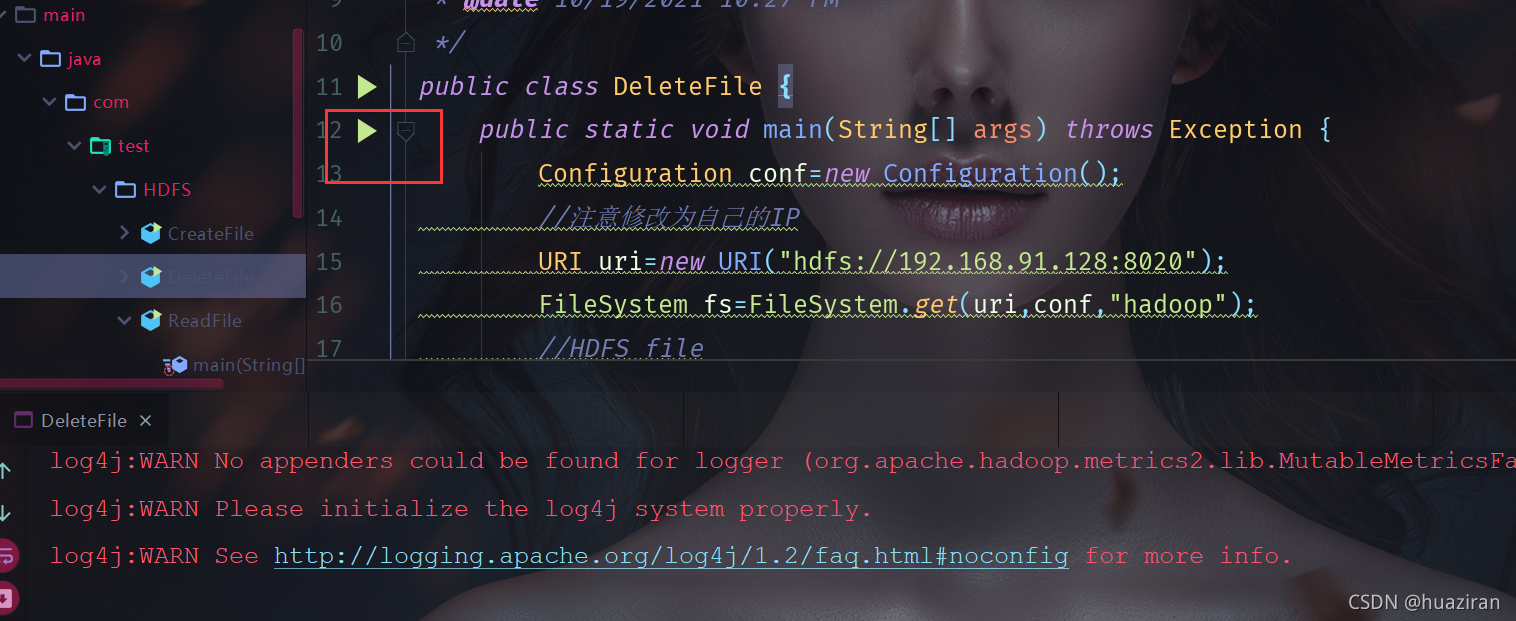

3. Delete a file

Create a new class, DeleteFile.java, with the following code:

    package com.test.HDFS;

    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    /**
     * @author CreazyHacking
     * @version 1.0
     * @date 10/19/2021 10:27 PM
     */
    public class DeleteFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // change to your own IP
            URI uri = new URI("hdfs://192.168.91.128:8020");
            FileSystem fs = FileSystem.get(uri, conf, "hadoop");
            // HDFS file to delete
            Path path = new Path("/user/hadoop/1.txt");
            // true = recursive; it only matters when the path is a directory
            boolean deleted = fs.delete(path, true);
            System.out.println("deleted: " + deleted);
        }
    }
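The second argument of `fs.delete(path, true)` requests recursive deletion. For a single file like 1.txt it makes no difference, but HDFS refuses to delete a non-empty directory without it. As a rough local-disk analogy using `java.nio.file` (not the HDFS API), recursive deletion means removing the deepest entries first. The class name is illustrative.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class LocalRecursiveDelete {

    // Local analogue of fs.delete(path, true): children are removed before their parents.
    // Returns false when nothing exists at the path, similar to delete()'s boolean result.
    static boolean deleteRecursively(Path root) throws IOException {
        if (!Files.exists(root)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(root)) {
            // A parent sorts before its children, so reverse order lists the deepest paths first
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("demo");
        Files.createDirectories(dir.resolve("a/b"));
        Files.write(dir.resolve("a/b/1.txt"), "hello hdfs\n".getBytes());
        System.out.println(deleteRecursively(dir)); // true
        System.out.println(deleteRecursively(dir)); // false: already gone
    }
}
```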

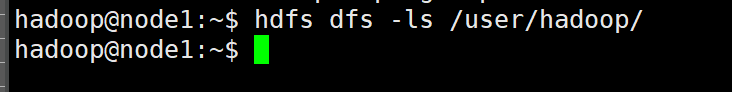

Run it, then check whether the deletion succeeded:

$ hdfs dfs -ls /user/hadoop/

1.txt no longer appears in the listing.

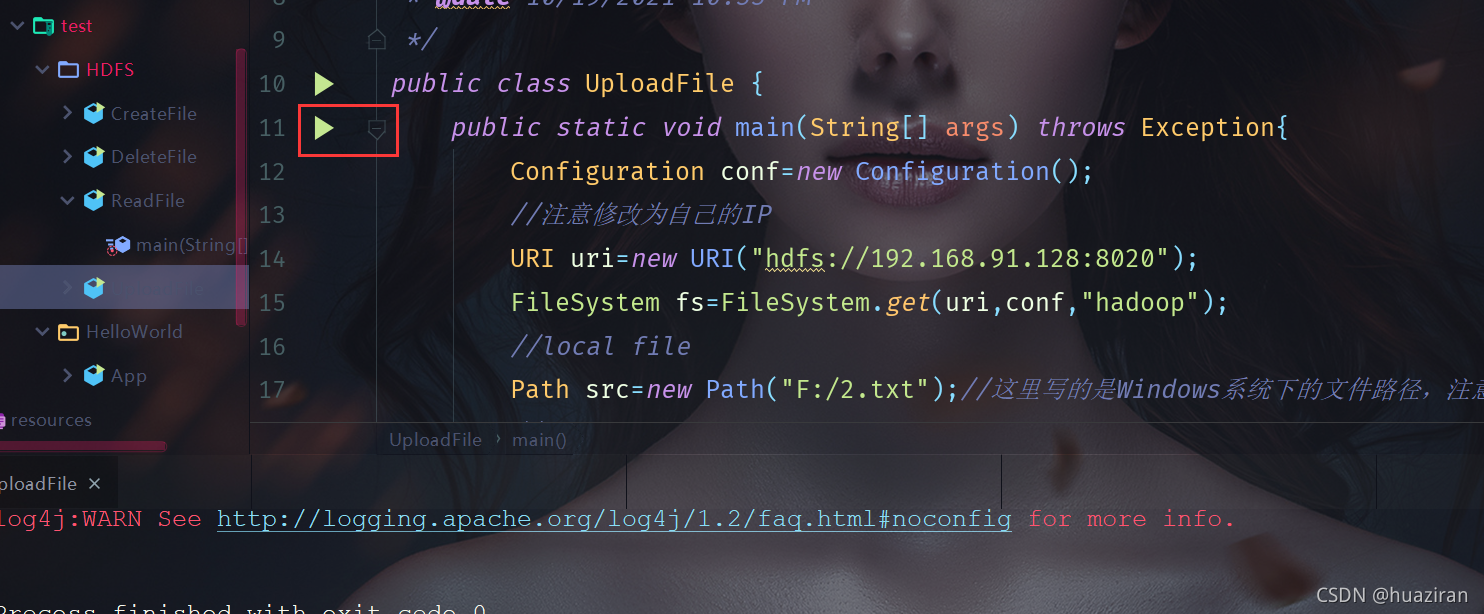

4. Upload a file

Create a new class, UploadFile.java, with the following code:

package com.test.HDFS;

import org.apache.hadoop.conf.*;

import org.apache.hadoop.fs.*;

import java.net.URI;

/**

* @author CreazyHacking

* @version 1.0

* @date 10/19/2021 10:33 PM

*/

public class UploadFile {

public static void main(String[] args) throws Exception{

Configuration conf=new Configuration();

//注意修改为自己的IP

URI uri=new URI("hdfs://192.168.91.128:8020");

FileSystem fs=FileSystem.get(uri,conf,"hadoop");

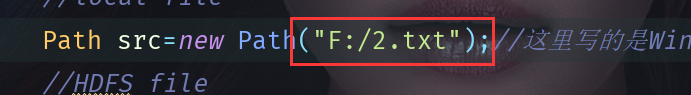

//local file

Path src=new Path("F:/2.txt");//这里写的是Windows系统下的文件路径,注意按实际修改。

//HDFS file

//上传到目的地路径,可以自定义, hdfs的/user/hadoop目录需要先用命令创建出来

Path dst=new Path("/user/hadoop");

fs.copyFromLocalFile(src,dst);

}

}
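Where the uploaded file ends up depends on the destination. When `dst` is an existing directory, Hadoop's copy logic puts the file at `dst/<source name>`. Otherwise it writes to `dst` itself, overwriting it if it is already a file. That is why `/user/hadoop` should exist beforehand. The hypothetical helper below only models that rule with strings; it does not call Hadoop.

```java
public class UploadTarget {

    // Hypothetical model of where copyFromLocalFile(src, dst) writes the data:
    // an existing directory dst receives dst/<source file name>; otherwise dst itself is the file.
    static String resolveTarget(String localSrc, String hdfsDst, boolean dstIsExistingDir) {
        if (!dstIsExistingDir) {
            return hdfsDst;
        }
        // Accept both forward-slash and backslash Windows separators
        int cut = Math.max(localSrc.lastIndexOf('/'), localSrc.lastIndexOf('\\'));
        String name = localSrc.substring(cut + 1);
        return hdfsDst.endsWith("/") ? hdfsDst + name : hdfsDst + "/" + name;
    }

    public static void main(String[] args) {
        System.out.println(resolveTarget("F:/2.txt", "/user/hadoop", true));  // /user/hadoop/2.txt
        System.out.println(resolveTarget("F:/2.txt", "/user/hadoop", false)); // /user/hadoop
    }
}
```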

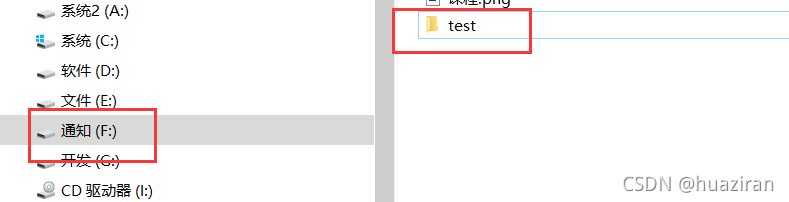

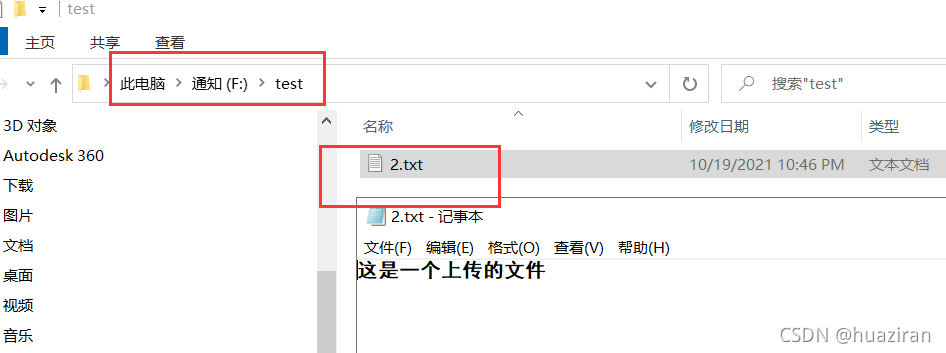

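Make sure the local file actually exists at the path used in the code. For example, F:/2.txt means a file named 2.txt in the root of drive F.

Run it.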

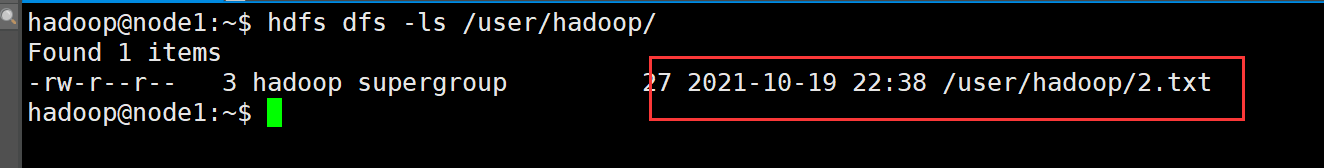

Check the result:

hdfs dfs -ls /user/hadoop/

5. Download a file

Create a new class, DownloadFile.java, with the following code:

    package com.test.HDFS;

    import org.apache.hadoop.conf.*;
    import org.apache.hadoop.fs.*;

    import java.net.URI;

    /**
     * @author CreazyHacking
     * @version 1.0
     * @date 10/19/2021 10:40 PM
     */
    public class DownloadFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // change to your own IP
            URI uri = new URI("hdfs://192.168.91.128:8020");
            FileSystem fs = FileSystem.get(uri, conf, "hadoop");
            // HDFS file to download; if it does not exist, upload a file from Linux to HDFS first
            Path src = new Path("/user/hadoop/2.txt");
            // local destination: create a folder named test on the Windows F: drive first
            Path dst = new Path("F:/test");
            // copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem):
            // false keeps the HDFS copy; true writes through the raw local file system
            fs.copyToLocalFile(false, src, dst, true);
        }
    }
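The fourth argument of `copyToLocalFile` chooses which local file system is used. With `false`, Hadoop's checksummed `LocalFileSystem` also writes a hidden `.<name>.crc` file next to the download. With `true`, as in DownloadFile, the raw local file system writes only the data. The helper below is a hypothetical model of that outcome, not a Hadoop call.

```java
import java.util.ArrayList;
import java.util.List;

public class DownloadOutputs {

    // Hypothetical model of the files copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem)
    // leaves in an existing local folder: the data file, plus a hidden ".<name>.crc"
    // checksum file when the checksummed LocalFileSystem is used (useRawLocalFileSystem == false).
    static List<String> localFiles(String fileName, boolean useRawLocalFileSystem) {
        List<String> files = new ArrayList<>();
        files.add(fileName);
        if (!useRawLocalFileSystem) {
            files.add("." + fileName + ".crc");
        }
        return files;
    }

    public static void main(String[] args) {
        System.out.println(localFiles("2.txt", true));  // [2.txt]
        System.out.println(localFiles("2.txt", false)); // [2.txt, .2.txt.crc]
    }
}
```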

Create the F:/test folder first, then run the program.

Check that F:/test now contains 2.txt, copied from /user/hadoop/.

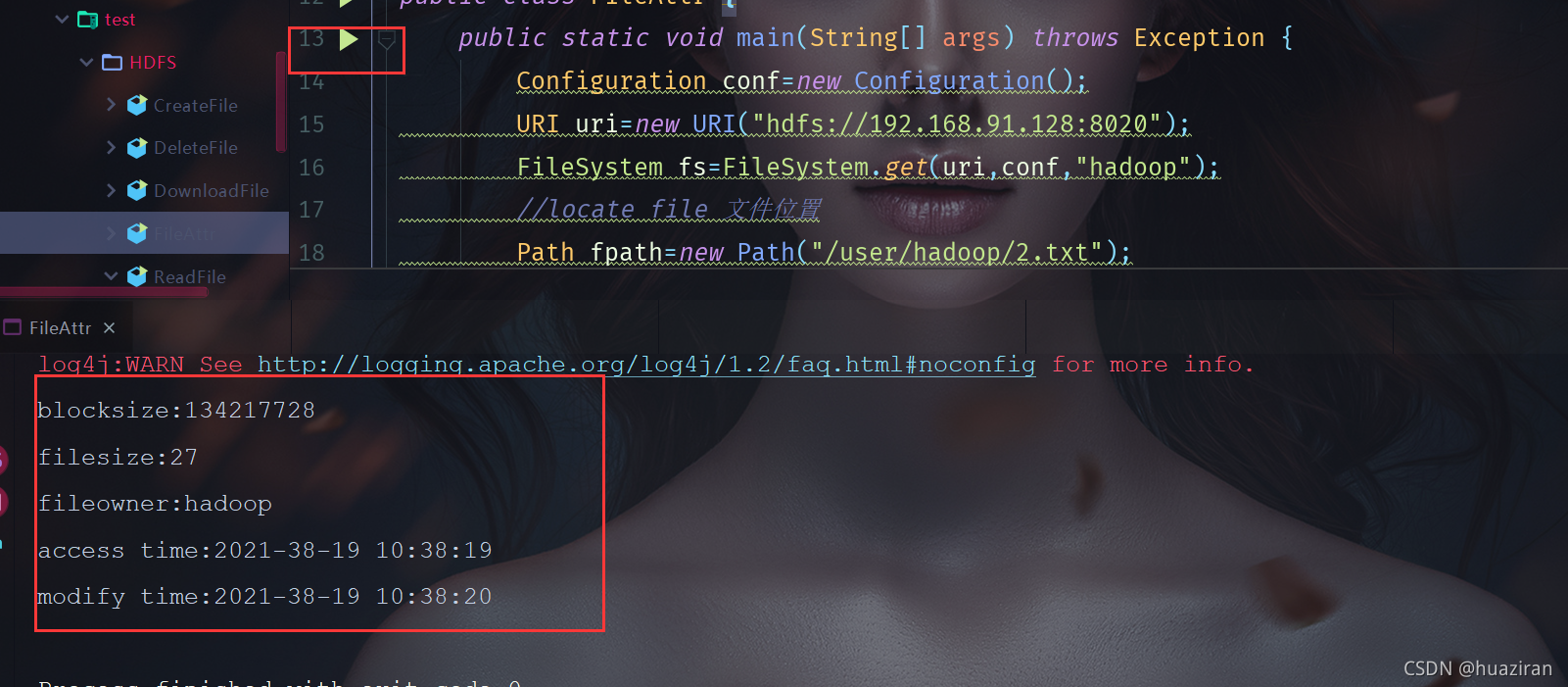

6. View file attributes

Create a new class, FileAttr.java, with the following code:

    package com.test.HDFS;

    import org.apache.hadoop.conf.*;
    import org.apache.hadoop.fs.*;

    import java.net.URI;
    import java.text.SimpleDateFormat;
    import java.util.Date;

    /**
     * @author CreazyHacking
     * @version 1.0
     * @date 10/19/2021 10:55 PM
     */
    public class FileAttr {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            URI uri = new URI("hdfs://192.168.91.128:8020");
            FileSystem fs = FileSystem.get(uri, conf, "hadoop");
            // file location
            Path fpath = new Path("/user/hadoop/2.txt");
            FileStatus filestatus = fs.getFileLinkStatus(fpath);
            // block size
            long blocksize = filestatus.getBlockSize();
            System.out.println("blocksize:" + blocksize);
            // file size in bytes
            long filesize = filestatus.getLen();
            System.out.println("filesize:" + filesize);
            // file owner
            String fileowner = filestatus.getOwner();
            System.out.println("fileowner:" + fileowner);
            // MM = month, mm = minutes, HH = 24-hour clock
            SimpleDateFormat formatter = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            // last access time
            long accessTime = filestatus.getAccessTime();
            System.out.println("access time:" + formatter.format(new Date(accessTime)));
            // last modification time
            long modifyTime = filestatus.getModificationTime();
            System.out.println("modify time:" + formatter.format(new Date(modifyTime)));
        }
    }
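Two details from FileAttr can be checked without a cluster:

- **Date patterns.** In `SimpleDateFormat` patterns, `MM` is the month and `mm` the minute, while `HH` is the 24-hour clock and `hh` the 12-hour one. So `yyyy-mm-dd hh:mm:ss` prints minutes where the month should be.
- **Block count.** `getLen()` and `getBlockSize()` together give the number of blocks a file occupies, by ceiling division.

The sketch below formats a fixed timestamp in UTC. It assumes the Hadoop 2.x default block size of 128 MB (134217728 bytes). The class name is illustrative.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

public class AttrFormatting {

    // Formats epoch millis in UTC so the result does not depend on the machine's time zone
    static String format(String pattern, long millis) {
        SimpleDateFormat f = new SimpleDateFormat(pattern, Locale.ROOT);
        f.setTimeZone(TimeZone.getTimeZone("UTC"));
        return f.format(new Date(millis));
    }

    // Blocks occupied by a file of len bytes (ceiling division); an empty file uses none
    static long blockCount(long len, long blockSize) {
        return (len + blockSize - 1) / blockSize;
    }

    public static void main(String[] args) {
        long t = 1634684100000L; // 2021-10-19 22:55:00 UTC
        System.out.println(format("yyyy-mm-dd hh:mm:ss", t)); // 2021-55-19 10:55:00
        System.out.println(format("yyyy-MM-dd HH:mm:ss", t)); // 2021-10-19 22:55:00
        System.out.println(blockCount(300L * 1024 * 1024, 134217728L)); // 3 blocks for 300 MB
    }
}
```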

Run it and check the output.

Done! Enjoy it!