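The guides previously available online are badly out of date: the arguments and locations of the mnist_spark.py and mnist_data_setup.py example scripts have changed, and tensorflow-hadoop has been updated as well (to 1.5).

I. Preparing the runtime environment

1. hadoop2.7: preferably an HA setup

2. spark on yarn: a YARN environment is required, otherwise training cannot run; the cluster must consist of multiple machines

Configure the environment variables: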

export JAVA_HOME=/usr/local/jdk8

export HADOOP_HOME=/home/hadoop/service/hadoop2.7

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export YARN_HOME=/home/hadoop/service/hadoop2.7

export YARN_CONF_DIR=${YARN_HOME}/etc/hadoop

export SPARK_HOME=/home/hadoop/service/spark

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_CONF_DIR/bin:$YARN_HOME/bin:$YARN_CONF_DIR/bin:$SPARK_HOME/bin
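A mistyped path in these exports tends to surface much later as a confusing spark-submit failure, so a quick check can help. The following is a minimal sketch, not part of the original setup: the function name check_env_dirs is made up for illustration, and it only verifies that each variable is set and points at an existing directory.

```shell
# Hypothetical helper: report whether each required variable is set
# and refers to a directory that actually exists.
check_env_dirs() {
  local rc=0 name dir
  for name in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR YARN_CONF_DIR SPARK_HOME; do
    # Indirect lookup of the variable whose name is stored in $name
    eval "dir=\${$name:-}"
    if [ -z "$dir" ]; then
      echo "unset: $name" >&2
      rc=1
    elif [ ! -d "$dir" ]; then
      echo "missing directory: $name=$dir" >&2
      rc=1
    else
      echo "ok: $name=$dir"
    fi
  done
  return $rc
}
```

Running check_env_dirs in a fresh login shell shows whether the exports above were actually picked up.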

3. scala: I use scala-2.11.12

4. Python: 3.7 is recommended

yum -y install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gdbm-devel db4-devel libpcap-devel xz-devel libffi-devel

wget https://www.python.org/ftp/python/3.7.3/Python-3.7.3.tgz

mkdir /usr/local/python3

tar -zxf Python-3.7.3.tgz

cd Python-3.7.3

./configure --prefix=/usr/local/python3

make && make install

ln -s /usr/local/python3/bin/python3.7 /usr/bin/python3

rm -rf /usr/bin/python

ln -s /usr/local/python3/bin/python3.7 /usr/bin/python

ln -s /usr/local/python3/bin/pip3.7 /usr/bin/pip3

rm -rf /usr/bin/pip

ln -s /usr/local/python3/bin/pip3.7 /usr/bin/pip

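Remember to edit /usr/bin/yum and change its first line to #!/usr/bin/python2; otherwise yum will stop working, because /usr/bin/python now points to Python 3.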
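The shebang change can also be scripted. This is a minimal sketch under assumptions: the function name pin_python2_shebang is invented here, and it rewrites the first line only when that line is already a python shebang. On CentOS 7, /usr/libexec/urlgrabber-ext-down typically carries the same shebang and may need the same change; that is an assumption about the system, not something this post verified.

```shell
# Hypothetical helper: point a script's python shebang at python2.
pin_python2_shebang() {
  local target="$1" tmp
  # Leave the file alone unless its first line is a python shebang
  if head -n 1 "$target" | grep -q '^#!.*python'; then
    tmp=$(mktemp)
    sed '1s|^#!.*$|#!/usr/bin/python2|' "$target" > "$tmp"
    # Write back through cat so the file keeps its owner and mode
    cat "$tmp" > "$target"
    rm -f "$tmp"
  fi
}

# Usage (as root): pin_python2_shebang /usr/bin/yum
```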

At this point, running pyspark should bring up its interactive shell normally.

5. Python environment: install tensorflow and tensorflowonspark with pip

pip install tensorflow -i http://pypi.doubanio.com/simple/ --trusted-host pypi.doubanio.com

pip install tensorflowonspark -i http://pypi.doubanio.com/simple/ --trusted-host pypi.doubanio.com

II. Preparing the packages

1. Package Python

# go to the python3.7 installation directory

pushd "/usr/local/python3"

zip -r Python.zip *

popd

# upload Python.zip to HDFS
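The upload itself is not spelled out in this post. One way to do it is sketched below, assuming the hdfs client is on the PATH and that the target directory is hdfs://hadoop01:9000/user/hadoop, the location the spark-submit commands later read from. The function name upload_to_hdfs and the DRY_RUN switch are illustrative additions, not part of any tool.

```shell
# Hypothetical helper: copy one local file into an HDFS directory.
# When DRY_RUN is set to a non-empty value, the hdfs commands are
# printed instead of executed.
upload_to_hdfs() {
  local src="$1" dest_dir="$2"
  # Create the target directory if it does not exist yet
  ${DRY_RUN:+echo} hdfs dfs -mkdir -p "$dest_dir"
  # -f overwrites a previous upload of the same file
  ${DRY_RUN:+echo} hdfs dfs -put -f "$src" "$dest_dir/"
}

# Usage: upload_to_hdfs /usr/local/python3/Python.zip hdfs://hadoop01:9000/user/hadoop
```

The same helper would cover the jar and the MNIST archive uploaded in the later steps.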

2. Package TensorFlowOnSpark

# download the source from https://github.com/yahoo/TensorFlowOnSpark, then build the zip archive (the commands below expect TensorFlowOnSpark.zip)

zip -r TensorFlowOnSpark.zip tensorflowonspark

3. Build tensorflow-hadoop-1.5-SNAPSHOT.jar

git clone https://github.com/tensorflow/ecosystem.git

cd ecosystem/hadoop

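# The build reports a maven-javadoc-plugin version error; fix the plugin version or simply remove the javadoc step. Also note that the resulting jar is named tensorflow-hadoop-1.5.jar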

mvn package -Dmaven.test.skip=true
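If the javadoc step is the only thing failing, skipping it on the command line may be enough and avoids editing the pom. The sketch below relies on maven.javadoc.skip, the skip property of maven-javadoc-plugin; whether it clears the specific version error mentioned above is an assumption and was not tested here. The function name build_tf_hadoop_jar and the DRY_RUN switch are illustrative.

```shell
# Hypothetical wrapper around the build command above.
# When DRY_RUN is set to a non-empty value, the mvn command is
# printed instead of executed.
build_tf_hadoop_jar() {
  # maven.test.skip skips compiling and running tests;
  # maven.javadoc.skip asks maven-javadoc-plugin to skip its goals
  ${DRY_RUN:+echo} mvn package -Dmaven.test.skip=true -Dmaven.javadoc.skip=true
}

# Usage, from ecosystem/hadoop: build_tf_hadoop_jar
```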

Upload the jar to HDFS.

4. Package the data

pushd ./mnist >/dev/null

curl -O "http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz"

curl -O "http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz"

curl -O "http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz"

curl -O "http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz"

zip -r mnist.zip *

popd >/dev/null
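Because curl -O is used without --fail, an HTTP error response from the server is saved under the .gz name as if it were the archive. Testing the downloads before uploading them catches that early. This is a minimal sketch; the function name verify_gz_files is made up for illustration, and it uses gzip -t, which checks the integrity of a compressed file without extracting it.

```shell
# Hypothetical helper: test every .gz file in a directory.
# Returns non-zero if any file is not a valid gzip archive.
verify_gz_files() {
  local dir="$1" rc=0 f
  for f in "$dir"/*.gz; do
    # The glob stays unexpanded when nothing matches
    if [ ! -e "$f" ]; then
      echo "no .gz files in $dir" >&2
      return 1
    fi
    if gzip -t "$f" 2>/dev/null; then
      echo "ok: $f"
    else
      echo "corrupt: $f" >&2
      rc=1
    fi
  done
  return $rc
}

# Usage: verify_gz_files ./mnist
```

Since mnist.zip is built with zip -r mnist.zip * inside the same directory, it makes sense to run the check before that step.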

Upload mnist.zip to HDFS.

III. Usage

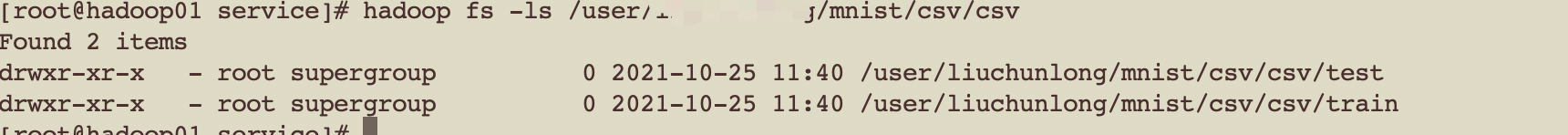

1. Convert the image files (images) and label files (labels) to CSV

./bin/spark-submit \
--deploy-mode client \
--master yarn \
--num-executors 3 \
--executor-memory 1G \
--archives hdfs://hadoop01:9000/user/hadoop/Python.zip#Python,hdfs://hadoop01:9000/user/liuchunlong/mnist/mnist.zip#mnist \
--jars hdfs://hadoop01:9000/user/hadoop/tensorflow-hadoop-1.5.0.jar \
/home/hadoop/TensorFlowOnSpark/examples/mnist/mnist_data_setup.py \
--output hdfs://hadoop01:9000/user/hadoop/mnist/csv

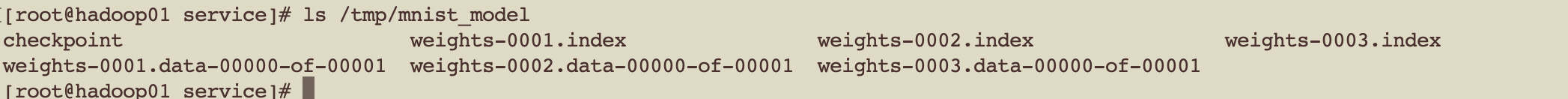

2. Training

./bin/spark-submit \
--deploy-mode client \
--master yarn \
--num-executors 3 \
--executor-memory 1G \
--py-files /home/hadoop/package/TensorFlowOnSpark.zip \
--conf spark.dynamicAllocation.enabled=false \
--conf spark.yarn.maxAppAttempts=1 \
--archives hdfs://hadoop01:9000/user/hadoop/Python.zip#Python \
/home/hadoop/TensorFlowOnSpark/examples/mnist/keras/mnist_spark.py \
--images_labels hdfs://hadoop01:9000/user/hadoop/mnist/csv/csv/train \
--model_dir /tmp/mnist_model

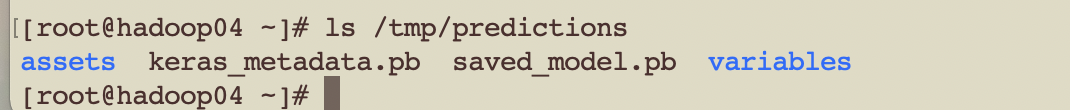

3. Prediction

./bin/spark-submit \
--master yarn \
--deploy-mode client \
--num-executors 3 \
--executor-memory 1G \
--py-files /home/hadoop/package/TensorFlowOnSpark.zip \
--conf spark.dynamicAllocation.enabled=false \
--conf spark.yarn.maxAppAttempts=1 \
--conf spark.yarn.executor.memoryOverhead=6144 \
--archives hdfs://hadoop01:9000/user/hadoop/Python.zip#Python \
--conf spark.executorEnv.LD_LIBRARY_PATH="$JAVA_HOME/jre/lib/amd64/server" \
/home/hadoop/TensorFlowOnSpark/examples/mnist/keras/mnist_spark.py \
--images_labels hdfs://hadoop01:9000/user/hadoop/mnist/csv/csv/test \
--mode inference \
--model_dir /tmp/mnist_model \
--export_dir /tmp/predictions

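If the model and the predictions should not live on the local machine, an HDFS path can be given instead of the /tmp paths.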
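As an illustration of the HDFS variant, the two arguments could be assembled like this. The namenode address is the one used throughout this post; the directories mnist_model and predictions under /user/hadoop are hypothetical and only serve as an example.

```shell
# Namenode address used by the commands in this post
NAMENODE="hdfs://hadoop01:9000"
# Hypothetical HDFS locations for the trained model and the predictions
MODEL_DIR="${NAMENODE}/user/hadoop/mnist_model"
EXPORT_DIR="${NAMENODE}/user/hadoop/predictions"

# Print the arguments to pass to mnist_spark.py in place of the /tmp paths
printf '%s\n' "--model_dir ${MODEL_DIR}" "--export_dir ${EXPORT_DIR}"
```

Using the same MODEL_DIR for the training run and the prediction run keeps the two steps pointed at the same model.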