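Introduction to canal

canal, whose name translates as waterway/pipeline/ditch, parses the incremental log (binlog) of a MySQL database and provides incremental data subscription and consumption.

Use cases built on log-based incremental subscription and consumption include:

- Database mirroring

- Real-time database backup

- Index building and real-time maintenance (split heterogeneous indexes, inverted indexes, and so on)

- Business cache refresh

- Incremental data processing that carries business logic

canal currently supports the following source MySQL versions: 5.1.x, 5.5.x, 5.6.x, 5.7.x, and 8.0.x.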

Downloading canal and canal-adapter

Official download page: https://github.com/alibaba/canal/releases

Baidu Cloud (1.1.4): https://pan.baidu.com/s/1T1ZVjmluu99NlVRUWtDnUw password: snmc

MySQL configuration

[mysqld]

log-bin=mysql-bin # adding this line enables the binlog

binlog-format=ROW # use ROW format

server_id=1 # required for MySQL replication; must not be the same as canal's slaveId

Check whether the binlog is enabled:

show variables like 'log_bin';

Check the binlog format:

show variables like 'binlog_format';

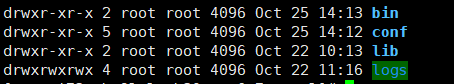
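The two checks above can also be automated. The following is a minimal sketch, assuming the values returned by the two queries (plus server_id) have already been collected into a dictionary; the helper name check_binlog_settings and the sample values are hypothetical, and fetching the values from MySQL is deliberately left out.

```python
def check_binlog_settings(variables):
    """Return a list of problems found in a dict of MySQL variable values.

    `variables` is assumed to map variable names (as shown by
    SHOW VARIABLES) to their string values.
    """
    problems = []
    # canal reads the binlog, so binary logging has to be enabled.
    if variables.get("log_bin", "").upper() != "ON":
        problems.append("log_bin is not ON: binary logging is disabled")
    # The configuration in this article selects ROW format.
    if variables.get("binlog_format", "").upper() != "ROW":
        problems.append("binlog_format is not ROW")
    # Replication needs a server_id to be defined.
    if variables.get("server_id", "0") in ("", "0"):
        problems.append("server_id is unset or 0")
    return problems


if __name__ == "__main__":
    # Hypothetical values, as if copied from the query results.
    sample = {"log_bin": "ON", "binlog_format": "MIXED", "server_id": "1"}
    for problem in check_binlog_settings(sample):
        print(problem)
```

An empty list means the three settings match the [mysqld] section shown earlier.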

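Installing and configuring canal

Installation

Copy the downloaded canal.deployer-1.1.4.tar.gz to a directory on the Linux host and extract it:

tar -zxvf canal.deployer-1.1.4.tar.gz -C /opt/module/canal.deployer

Note: the canal archive has no top-level directory, so its contents are extracted loose. Extract into a dedicated canal directory, and create that directory beforehand (for example with mkdir -p), because tar -C requires it to exist.

Configuration

This article tests real-time synchronization of MySQL data to Kafka with canal.

Edit conf/canal.properties: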

#################################################

######### common argument #############

#################################################

# tcp bind ip

canal.ip =

# register ip to zookeeper

canal.register.ip =

canal.port = 11111

canal.metrics.pull.port = 11112

# canal instance user/passwd

# canal.user = canal

# canal.passwd = E3619321C1A937C46A0D8BD1DAC39F93B27D4458

# canal admin config

#canal.admin.manager = 127.0.0.1:8089

canal.admin.port = 11110

canal.admin.user = admin

canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

canal.zkServers =

# flush data to zk

canal.zookeeper.flush.period = 1000

canal.withoutNetty = false

# tcp, kafka, RocketMQ

# the default mode is tcp; it is changed to kafka here

canal.serverMode = kafka

# flush meta cursor/parse position to file

canal.file.data.dir = ${canal.conf.dir}

canal.file.flush.period = 1000

## memory store RingBuffer size, should be Math.pow(2,n)

canal.instance.memory.buffer.size = 16384

## memory store RingBuffer used memory unit size , default 1kb

canal.instance.memory.buffer.memunit = 1024

## memory store gets mode used MEMSIZE or ITEMSIZE

canal.instance.memory.batch.mode = MEMSIZE

canal.instance.memory.rawEntry = true

## detecting config

canal.instance.detecting.enable = false

#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()

canal.instance.detecting.sql = select 1

canal.instance.detecting.interval.time = 3

canal.instance.detecting.retry.threshold = 3

canal.instance.detecting.heartbeatHaEnable = false

# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery

canal.instance.transaction.size = 1024

# mysql fallback connected to new master should fallback times

canal.instance.fallbackIntervalInSeconds = 60

# network config

canal.instance.network.receiveBufferSize = 16384

canal.instance.network.sendBufferSize = 16384

canal.instance.network.soTimeout = 30

# binlog filter config

canal.instance.filter.druid.ddl = true

canal.instance.filter.query.dcl = false

canal.instance.filter.query.dml = false

canal.instance.filter.query.ddl = false

canal.instance.filter.table.error = false

canal.instance.filter.rows = false

canal.instance.filter.transaction.entry = false

# binlog format/image check

canal.instance.binlog.format = ROW,STATEMENT,MIXED

canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

# binlog ddl isolation

canal.instance.get.ddl.isolation = false

# parallel parser config

canal.instance.parser.parallel = true

## concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()

# enable this parameter so that data is not missed

canal.instance.parser.parallelThreadSize = 16

## disruptor ringbuffer size, must be power of 2

canal.instance.parser.parallelBufferSize = 256

# table meta tsdb info

canal.instance.tsdb.enable = true

canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}

canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;

canal.instance.tsdb.dbUsername = canal

canal.instance.tsdb.dbPassword = canal

# dump snapshot interval, default 24 hour

canal.instance.tsdb.snapshot.interval = 24

# purge snapshot expire , default 360 hour(15 days)

canal.instance.tsdb.snapshot.expire = 360

# aliyun ak/sk , support rds/mq

canal.aliyun.accessKey =

canal.aliyun.secretKey =

#################################################

######### destinations #############

#################################################

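# each directory under conf (such as example) is one instance with its own configuration files. By default only the example instance exists; several instances can be listed, e.g. canal.destinations=instance1,instance2,instance3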

canal.destinations = example

# conf root dir

canal.conf.dir = ../conf

# auto scan instance dir add/remove and start/stop instance

canal.auto.scan = true

canal.auto.scan.interval = 5

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml

#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = spring

canal.instance.global.lazy = false

canal.instance.global.manager.address = ${canal.admin.manager}

#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml

canal.instance.global.spring.xml = classpath:spring/file-instance.xml

#canal.instance.global.spring.xml = classpath:spring/default-instance.xml

##################################################

######### MQ #############

##################################################

# Kafka cluster addresses

canal.mq.servers = xxx:9092,xxx:9092,xxx:9092

canal.mq.retries = 0

# in flatMessage mode this value can be increased, but it must not exceed the MQ message size limit

canal.mq.batchSize = 16384

canal.mq.maxRequestSize = 1048576

# in flatMessage mode increase this value; 50-200 is recommended

canal.mq.lingerMs = 100

canal.mq.bufferMemory = 33554432

canal.mq.canalBatchSize = 50

canal.mq.canalGetTimeout = 100

canal.mq.flatMessage = true

canal.mq.compressionType = none

canal.mq.acks = all

#canal.mq.properties. =

canal.mq.producerGroup = test

# Set this value to "cloud", if you want open message trace feature in aliyun.

canal.mq.accessChannel = local

# aliyun mq namespace

#canal.mq.namespace =

##################################################

######### Kafka Kerberos Info #############

##################################################

canal.mq.kafka.kerberos.enable = false

canal.mq.kafka.kerberos.krb5FilePath = "../conf/kerberos/krb5.conf"

canal.mq.kafka.kerberos.jaasFilePath = "../conf/kerberos/jaas.conf"

Detailed parameter reference: https://github.com/alibaba/canal/wiki/Canal-Kafka-RocketMQ-QuickStart
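With canal.mq.flatMessage = true, each Kafka message is a JSON document that describes one batch of row changes. The sketch below shows one way a consumer might read such a message. The sample payload is made up for illustration (database test, table test, a hypothetical name column), and summarize is a hypothetical helper, not part of canal.

```python
import json

# Illustrative flat message; the values are invented for this example.
SAMPLE = """
{
  "data": [{"id": "1", "name": "alice"}],
  "database": "test",
  "table": "test",
  "type": "INSERT",
  "isDdl": false,
  "pkNames": ["id"],
  "old": null,
  "es": 1600000000000,
  "ts": 1600000000123
}
"""


def summarize(raw):
    """Return one (operation, 'schema.table', row) tuple per changed row."""
    message = json.loads(raw)
    target = f"{message['database']}.{message['table']}"
    if message.get("isDdl"):
        # DDL events carry the statement text instead of row data.
        return [("DDL", target, {"sql": message.get("sql")})]
    rows = message.get("data") or []
    return [(message["type"], target, row) for row in rows]


if __name__ == "__main__":
    for operation, target, row in summarize(SAMPLE):
        print(operation, target, row)
```

Column values arrive as strings, so a consumer that needs typed values has to convert them itself.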

Edit conf/example/instance.properties:

#################################################

## mysql serverId , v1.0.26+ will autoGen

# canal.instance.mysql.slaveId=0

# enable gtid use true/false

canal.instance.gtidon=false

# position info

# address of the source MySQL database

canal.instance.master.address=127.0.0.1:3306

canal.instance.master.journal.name=

canal.instance.master.position=

canal.instance.master.timestamp=

canal.instance.master.gtid=

# rds oss binlog

canal.instance.rds.accesskey=

canal.instance.rds.secretkey=

canal.instance.rds.instanceId=

# table meta tsdb info

canal.instance.tsdb.enable=true

#canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb

#canal.instance.tsdb.dbUsername=canal

#canal.instance.tsdb.dbPassword=canal

#canal.instance.standby.address =

#canal.instance.standby.journal.name =

#canal.instance.standby.position =

#canal.instance.standby.timestamp =

#canal.instance.standby.gtid=

# username/password

# database username and password

canal.instance.dbUsername=canal

canal.instance.dbPassword=canal

canal.instance.connectionCharset = UTF-8

# enable druid Decrypt database password

canal.instance.enableDruid=false

#canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==

# table regex

# tables to synchronize; a regular expression can be used to match several tables or whole schemas

# all tables in one schema: mytest\\..*

# everything: .*\\..*

canal.instance.filter.regex=test.test

# table black regex

#canal.instance.filter.black.regex=

# table field filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)

#canal.instance.filter.field=test1.t_product:id/subject/keywords,test2.t_company:id/name/contact/ch

# table field black filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)

#canal.instance.filter.black.field=test1.t_product:subject/product_image,test2.t_company:id/name/contact/ch

# mq config

# Kafka topic name

canal.mq.topic=kf_test

# dynamic topic route by schema or table regex

#canal.mq.dynamicTopic=mytest1.user,mytest2\\..*,.*\\..*

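# by default everything is written to a single partition of the given Kafka topic, because writing to several partitions in parallel can break the binlog order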

canal.mq.partition=0

# hash partition config

# number of Kafka partitions

# to increase parallelism, first give the Kafka topic more than one partition, then set canal.mq.partitionHash

#canal.mq.partitionsNum=3

#canal.mq.partitionHash=test.table:id^name,.*\\..*

#################################################
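To get a feel for which tables a value of canal.instance.filter.regex selects, the matching can be approximated in a few lines. This is only a sketch: canal evaluates the patterns with its own Java-side filter, so Python's re module is a stand-in here, and matches_filter is a hypothetical helper. The patterns are written as they look after the properties file has turned \\. into \. .

```python
import re


def matches_filter(filter_value, schema, table):
    """Approximate check: does schema.table fully match any comma-separated pattern?"""
    name = f"{schema}.{table}"
    patterns = [p.strip() for p in filter_value.split(",") if p.strip()]
    return any(re.fullmatch(pattern, name) is not None for pattern in patterns)


if __name__ == "__main__":
    checks = [
        (r"test.test", "test", "test"),       # the single table used in this article
        (r"mytest\..*", "mytest", "user"),    # every table in schema mytest
        (r"mytest\..*", "other", "user"),     # a different schema
        (r".*\..*", "anything", "at_all"),    # every schema and table
    ]
    for filter_value, schema, table in checks:
        print(filter_value, f"{schema}.{table}", matches_filter(filter_value, schema, table))
```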

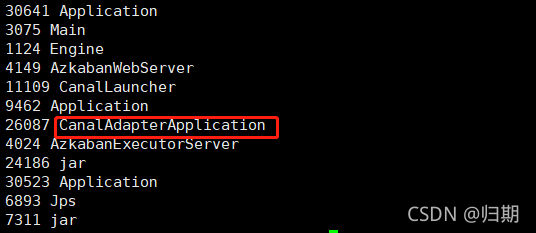

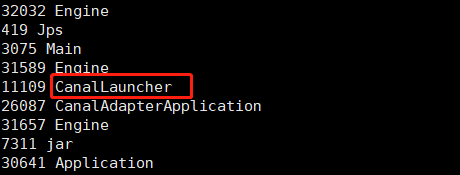
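Why hashing on a key keeps the changes of one row in order, even with several partitions, can be sketched as follows. This is a conceptual illustration only: pick_partition is a hypothetical helper, CRC32 stands in for whatever hash canal applies internally, and the partition numbers it produces will not match canal's. The key columns id and name mirror the commented example test.table:id^name.

```python
import zlib


def pick_partition(row, key_columns, partitions_num):
    """Map a row to a partition using the values of its key columns."""
    key = "^".join(str(row[column]) for column in key_columns)
    # A deterministic hash: the same key always lands in the same partition.
    return zlib.crc32(key.encode("utf-8")) % partitions_num


if __name__ == "__main__":
    insert_event = {"id": "7", "name": "alice", "age": "30"}
    update_event = {"id": "7", "name": "alice", "age": "31"}
    first = pick_partition(insert_event, ["id", "name"], 3)
    second = pick_partition(update_event, ["id", "name"], 3)
    # Both changes of the same row go to one partition, so a consumer
    # of that partition sees them in binlog order.
    print(first == second)
```

Changes of different rows may still be consumed out of order relative to each other, which is the trade-off the comments in the configuration point at.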

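Starting canal

Start canal from the bin directory of the installation (the distribution ships a startup.sh script there).

If you see a CanalLauncher process, canal has started successfully; the Kafka topic is created at the same time.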

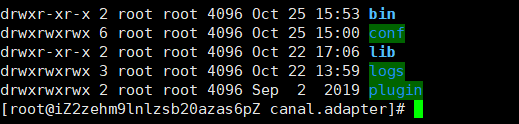

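Installing and configuring canal-adapter

Installation

Copy the downloaded canal.adapter-1.1.4.tar.gz to a directory on the Linux host and extract it (the target directory must already exist):

tar -zxvf canal.adapter-1.1.4.tar.gz -C /opt/module/canal.adapter

Configuration

canal-adapter reads the data that canal sent to Kafka above and writes it to PostgreSQL.

Edit conf/application.yml: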

server:
  port: 8081
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8
    default-property-inclusion: non_null

canal.conf:
  # default is tcp
  mode: kafka # kafka rocketMQ
#  canalServerHost: 127.0.0.1:11111
#  zookeeperHosts: slave1:2181
  # change to your own Kafka addresses
  mqServers: xxx:9092,xxx:9092,xxx:9092 #or rocketmq
  # flat (JSON) message format
#  flatMessage: true
  batchSize: 5
  syncBatchSize: 2
  retries: 0
  timeout:
  accessKey:
  secretKey:
  srcDataSources:
    defaultDS:
      # JDBC URL of the source MySQL database
      url: jdbc:mysql://127.0.0.1:3306/test?useUnicode=true
      username: canal
      password: canal
  canalAdapters:
  # Kafka topic name
  - instance: kf_test # canal instance Name or mq topic name
    groups:
    - groupId: g1
      outerAdapters:
      - name: logger
#      - name: rdb
#        key: mysql1
#        properties:
#          jdbc.driverClassName: com.mysql.jdbc.Driver
#          jdbc.url: jdbc:mysql://127.0.0.1:3306/mytest2?useUnicode=true
#          jdbc.username: root
#          jdbc.password: 121212
#      - name: rdb
#        key: oracle1
#        properties:
#          jdbc.driverClassName: oracle.jdbc.OracleDriver
#          jdbc.url: jdbc:oracle:thin:@localhost:49161:XE
#          jdbc.username: mytest
#          jdbc.password: m12121
      # synchronize with the rdb adapter type
      - name: rdb
        # unique key of this adapter; must match outerAdapterKey in the table mapping file
        key: postgres1
        properties:
          # connection settings of the PostgreSQL database
          jdbc.driverClassName: org.postgresql.Driver
          jdbc.url: jdbc:postgresql://120.0.0.1:3433/dapeng
          jdbc.username: canal
          jdbc.password: canal
          threads: 1000
          commitSize: 3000
#      - name: hbase
#        properties:
#          hbase.zookeeper.quorum: 127.0.0.1
#          hbase.zookeeper.property.clientPort: 2181
#          zookeeper.znode.parent: /hbase
#      - name: es
#        hosts: 127.0.0.1:9300 # 127.0.0.1:9200 for rest mode
#        properties:
#          mode: transport # or rest
#          # security.auth: test:123456 # only used for rest mode
#          cluster.name: elasticsearch

Edit the mytest_user.yml file under conf/rdb/:

dataSourceKey: defaultDS # must match a key under srcDataSources in application.yml
destination: kf_test # canal instance name or Kafka topic name; must match instance in application.yml
groupId: g1 # must match groupId in application.yml
outerAdapterKey: postgres1 # must match the adapter key in application.yml
concurrent: true
dbMapping:
  database: test # source MySQL database
  table: test # source MySQL table
  targetTable: public.test # target schema and table; for PostgreSQL the prefix is the schema name, not the database name
  targetPk:
    id: id # primary key mapping (target column: source column)
  mapAll: true # map all columns
#  targetColumns: # map selected columns only
#    id:
#    name:
#    role_id:
#    c_time:
#    test1:
#  etlCondition: "where c_time>={}" # simple ETL condition
  commitBatch: 3000 # batch commit size

## Mirror schema synchronize config
#dataSourceKey: defaultDS
#destination: example
#groupId: g1
#outerAdapterKey: mysql1
#concurrent: true
#dbMapping:
#  mirrorDb: true
#  database: mytest

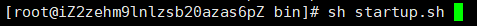
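What the mapping file expresses can be illustrated with a small sketch that turns one parsed flat message into SQL for the target table. This is not the adapter's actual code: build_statements is a hypothetical helper, columns are copied one-to-one by name (the idea behind mapAll: true), target_pk follows the targetPk layout of target column to source column, and the name column in the usage example is invented. It also ignores updates that change the primary key.

```python
def build_statements(message, target_table, target_pk):
    """Turn one parsed flat message into a list of (sql, params) pairs."""
    statements = []
    source_pk_columns = list(target_pk.values())
    # WHERE clause on the target primary key columns.
    pk_clause = " AND ".join(f"{target} = %s" for target in target_pk)
    for row in message["data"]:
        pk_values = [row[source] for source in source_pk_columns]
        if message["type"] == "INSERT":
            columns = list(row)
            placeholders = ", ".join(["%s"] * len(columns))
            sql = f"INSERT INTO {target_table} ({', '.join(columns)}) VALUES ({placeholders})"
            statements.append((sql, [row[c] for c in columns]))
        elif message["type"] == "UPDATE":
            columns = [c for c in row if c not in source_pk_columns]
            assignments = ", ".join(f"{c} = %s" for c in columns)
            sql = f"UPDATE {target_table} SET {assignments} WHERE {pk_clause}"
            statements.append((sql, [row[c] for c in columns] + pk_values))
        elif message["type"] == "DELETE":
            sql = f"DELETE FROM {target_table} WHERE {pk_clause}"
            statements.append((sql, pk_values))
    return statements


if __name__ == "__main__":
    message = {"type": "UPDATE", "data": [{"id": "1", "name": "bob"}]}
    for sql, params in build_statements(message, "public.test", {"id": "id"}):
        print(sql, params)
```

The statements use %s placeholders so that values are passed separately from the SQL text rather than concatenated into it.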

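Starting canal-adapter

Start canal-adapter from the bin directory of the installation (the distribution ships a startup.sh script there).

If you see a CanalAdapterApplication process, canal-adapter has started successfully.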