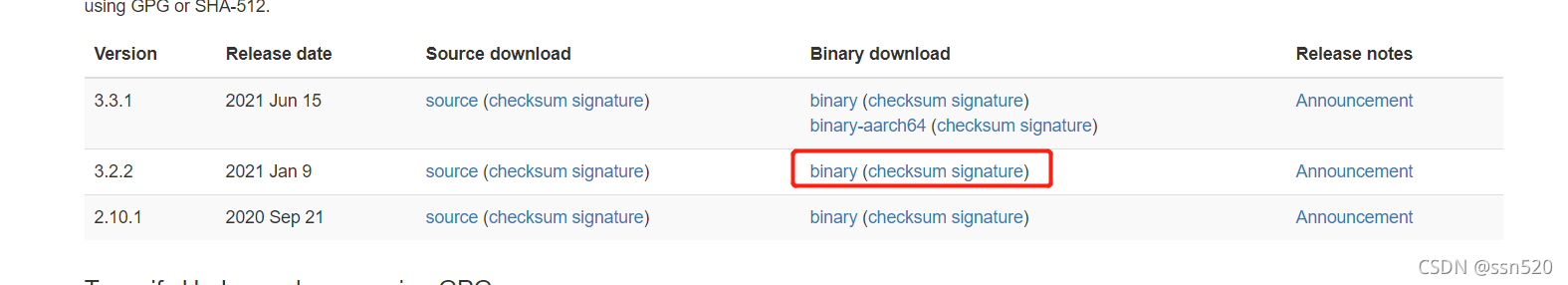

Official site: hadoop.apache.org

Download page:

Apache Hadoop: https://hadoop.apache.org/releases.html

1. Download the installation package

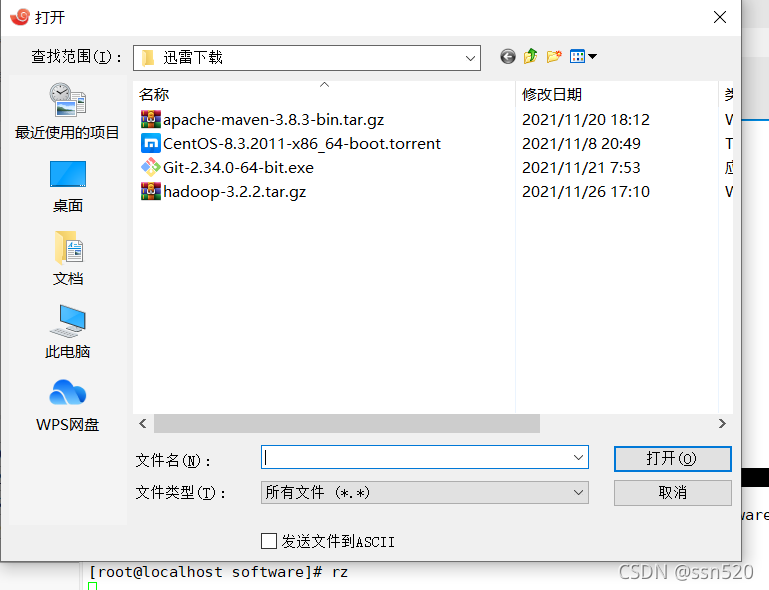

2. Upload the package to the Linux machine (the commands below put it in /root/software)

[root@localhost ~]# cd software

[root@localhost software]# rz
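Before extracting, it is worth checking the upload against the SHA-512 checksum that Apache publishes next to each release on the download page. A minimal sketch, assuming the checksum file (hypothetically named hadoop-3.2.2.tar.gz.sha512 here) contains the hash as one contiguous hexadecimal string; the function name is illustrative:

```shell
# Compare a file's SHA-512 digest with the first 128-hex-digit string found
# in a checksum file. Handles both the "HASH  file" (sha512sum) layout and the
# BSD-style "SHA512 (file) = HASH" layout; assumes the hash is not split by spaces.
verify_sha512() {
  local file="$1" sumfile="$2" expected actual
  expected=$(grep -oiE '[0-9a-f]{128}' "$sumfile" | head -n 1 | tr 'A-F' 'a-f')
  actual=$(sha512sum "$file" | awk '{ print $1 }')
  [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Usage (file names are assumptions):
#   verify_sha512 hadoop-3.2.2.tar.gz hadoop-3.2.2.tar.gz.sha512 && echo "checksum OK"
```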

3. Add a dedicated maintenance user and its directories

[root@localhost software]# useradd ssn

[root@localhost software]# id ssn

uid=1001(ssn) gid=1001(ssn) groups=1001(ssn)

[root@localhost software]# pwd

/root/software

[root@localhost software]# su - ssn

[ssn@localhost ~]$ mkdir source software app log data lib tmp

[ssn@localhost ~]$ ls

app data lib log software source tmp

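Why a separate tmp directory: the system's own /tmp directory is cleaned automatically. Files that have not been accessed for a certain period are deleted; the period depends on the OS version (30 days by default on the version used here).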
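The same layout can be created in a re-runnable way with mkdir -p, which does nothing for directories that already exist. A minimal sketch, assuming the base directory is the ssn user's home; the function name is illustrative, not part of the original steps:

```shell
# Create the per-user directory layout used in this guide under a base directory.
# mkdir -p skips directories that already exist, so running it twice is harmless.
make_user_layout() {
  local base="$1" d
  for d in source software app log data lib tmp; do
    mkdir -p "$base/$d" || return 1
  done
}

# Usage, as user ssn:
#   make_user_layout "$HOME"
```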

4. Move the installation package and set its ownership

[ssn@localhost ~]$ exit

[root@localhost software]# mv ~/software/hadoop-3.2.2.tar.gz /home/ssn/software/

[root@localhost software]# chown ssn:ssn /home/ssn/software/*

5. Extract the archive

[root@localhost software]# su - ssn

Last login: Fri Nov 26 17:43:04 CST 2021 on pts/2

[ssn@localhost ~]$ cd software

[ssn@localhost software]$ ls

hadoop-3.2.2.tar.gz

[ssn@localhost software]$ tar xzvf hadoop-3.2.2.tar.gz -C ../app/

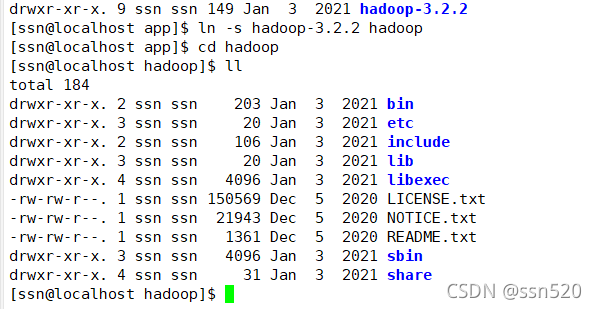

6. Create a symbolic link

[ssn@localhost software]$ cd ../app

[ssn@localhost app]$ ll

total 0

drwxr-xr-x. 9 ssn ssn 149 Jan 3 2021 hadoop-3.2.2

[ssn@localhost app]$ ln -s hadoop-3.2.2 hadoop
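One practical benefit of the hadoop symlink is that paths such as /home/ssn/app/hadoop stay the same across upgrades: installing another version only means repointing the link. A minimal sketch; the function name is illustrative and hadoop-3.3.1 in the usage line is a hypothetical later version:

```shell
# Point app/hadoop at a given version directory inside app/.
switch_hadoop_version() {
  local app_dir="$1" version_dir="$2"
  if [ ! -d "$app_dir/$version_dir" ]; then
    echo "no such directory: $app_dir/$version_dir" >&2
    return 1
  fi
  # -f replaces the existing link; -n treats an existing link to a directory as
  # a plain file, so the new link is not created inside the old version's directory.
  ln -sfn "$version_dir" "$app_dir/hadoop"
}

# Usage (hypothetical later version):
#   switch_hadoop_version /home/ssn/app hadoop-3.3.1
```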

bin: command scripts

etc: configuration files

sbin: start/stop scripts

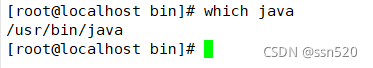

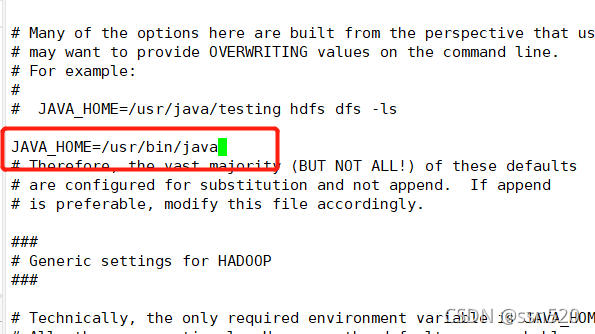

7. Configure environment variables (Hadoop is special in that JAVA_HOME must also be set in Hadoop's own configuration file)

[root@localhost ~]# cd /home/ssn/app/hadoop

[root@localhost hadoop]# ll

total 184

drwxr-xr-x. 2 ssn ssn 203 Jan 3 2021 bin

drwxr-xr-x. 3 ssn ssn 20 Jan 3 2021 etc

drwxr-xr-x. 2 ssn ssn 106 Jan 3 2021 include

drwxr-xr-x. 3 ssn ssn 20 Jan 3 2021 lib

drwxr-xr-x. 4 ssn ssn 4096 Jan 3 2021 libexec

-rw-rw-r--. 1 ssn ssn 150569 Dec 5 2020 LICENSE.txt

-rw-rw-r--. 1 ssn ssn 21943 Dec 5 2020 NOTICE.txt

-rw-rw-r--. 1 ssn ssn 1361 Dec 5 2020 README.txt

drwxr-xr-x. 3 ssn ssn 4096 Jan 3 2021 sbin

drwxr-xr-x. 4 ssn ssn 31 Jan 3 2021 share

[root@localhost hadoop]# cd etc/hadoop/

[root@localhost hadoop]# ll

total 172

-rw-r--r--. 1 ssn ssn 9213 Jan 3 2021 capacity-scheduler.xml

-rw-r--r--. 1 ssn ssn 1335 Jan 3 2021 configuration.xsl

-rw-r--r--. 1 ssn ssn 1940 Jan 3 2021 container-executor.cfg

-rw-r--r--. 1 ssn ssn 774 Jan 3 2021 core-site.xml

-rw-r--r--. 1 ssn ssn 3999 Jan 3 2021 hadoop-env.cmd

-rw-r--r--. 1 ssn ssn 16235 Jan 3 2021 hadoop-env.sh

-rw-r--r--. 1 ssn ssn 3321 Jan 3 2021 hadoop-metrics2.properties

-rw-r--r--. 1 ssn ssn 11392 Jan 3 2021 hadoop-policy.xml

-rw-r--r--. 1 ssn ssn 3414 Jan 3 2021 hadoop-user-functions.sh.example

-rw-r--r--. 1 ssn ssn 775 Jan 3 2021 hdfs-site.xml

-rw-r--r--. 1 ssn ssn 1484 Jan 3 2021 httpfs-env.sh

-rw-r--r--. 1 ssn ssn 1657 Jan 3 2021 httpfs-log4j.properties

-rw-r--r--. 1 ssn ssn 21 Jan 3 2021 httpfs-signature.secret

-rw-r--r--. 1 ssn ssn 620 Jan 3 2021 httpfs-site.xml

-rw-r--r--. 1 ssn ssn 3518 Jan 3 2021 kms-acls.xml

-rw-r--r--. 1 ssn ssn 1351 Jan 3 2021 kms-env.sh

-rw-r--r--. 1 ssn ssn 1860 Jan 3 2021 kms-log4j.properties

-rw-r--r--. 1 ssn ssn 682 Jan 3 2021 kms-site.xml

-rw-r--r--. 1 ssn ssn 14713 Jan 3 2021 log4j.properties

-rw-r--r--. 1 ssn ssn 951 Jan 3 2021 mapred-env.cmd

-rw-r--r--. 1 ssn ssn 1764 Jan 3 2021 mapred-env.sh

-rw-r--r--. 1 ssn ssn 4113 Jan 3 2021 mapred-queues.xml.template

-rw-r--r--. 1 ssn ssn 758 Jan 3 2021 mapred-site.xml

drwxr-xr-x. 2 ssn ssn 24 Jan 3 2021 shellprofile.d

-rw-r--r--. 1 ssn ssn 2316 Jan 3 2021 ssl-client.xml.example

-rw-r--r--. 1 ssn ssn 2697 Jan 3 2021 ssl-server.xml.example

-rw-r--r--. 1 ssn ssn 2642 Jan 3 2021 user_ec_policies.xml.template

-rw-r--r--. 1 ssn ssn 10 Jan 3 2021 workers

-rw-r--r--. 1 ssn ssn 2250 Jan 3 2021 yarn-env.cmd

-rw-r--r--. 1 ssn ssn 6272 Jan 3 2021 yarn-env.sh

-rw-r--r--. 1 ssn ssn 2591 Jan 3 2021 yarnservice-log4j.properties

-rw-r--r--. 1 ssn ssn 690 Jan 3 2021 yarn-site.xml

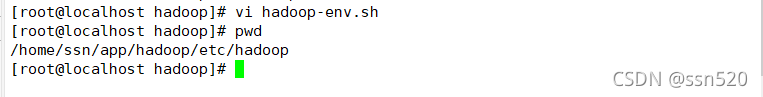

[root@localhost hadoop]# vi hadoop-env.sh

Summary: set JAVA_HOME in hadoop-env.sh, in the directory shown in the figure above.
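Instead of editing hadoop-env.sh in vi, the line can also be set non-interactively. A minimal sketch; the function name is illustrative and the JDK path in the usage line is a placeholder, not the author's actual path:

```shell
# Set JAVA_HOME in Hadoop's hadoop-env.sh, replacing any earlier uncommented
# "export JAVA_HOME=" line so that exactly one remains.
set_hadoop_java_home() {
  local env_file="$1" jdk="$2"
  [ -x "$jdk/bin/java" ] || echo "warning: $jdk/bin/java not found" >&2
  # Only active export lines are deleted; the commented template "# export JAVA_HOME=" stays.
  sed -i '/^export JAVA_HOME=/d' "$env_file" || return 1
  printf 'export JAVA_HOME=%s\n' "$jdk" >> "$env_file"
}

# Usage (placeholder JDK path):
#   set_hadoop_java_home /home/ssn/app/hadoop/etc/hadoop/hadoop-env.sh /usr/java/jdk1.8.0_xxx
```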

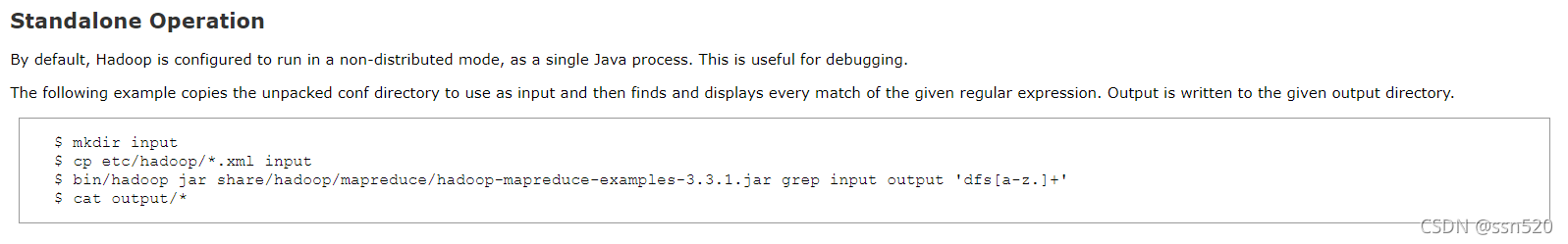

Local mode (Standalone Operation), also called single-machine mode: no daemons are started, and it is rarely used in practice.

Screenshot of the official documentation:

[root@localhost hadoop]# cd ../../

[root@localhost hadoop]# pwd

/home/ssn/app/hadoop

[root@localhost hadoop]# mkdir input

[root@localhost hadoop]# cp etc/hadoop/*.xml input

[root@localhost hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar grep input output 'dfs[a-z.]+'

[root@localhost hadoop]# cd output/

[root@localhost output]# ll

total 4

-rw-r--r--. 1 root root 11 Nov 26 19:10 part-r-00000

-rw-r--r--. 1 root root 0 Nov 26 19:10 _SUCCESS

[root@localhost output]# cat part-r-00000

1 dfsadmin

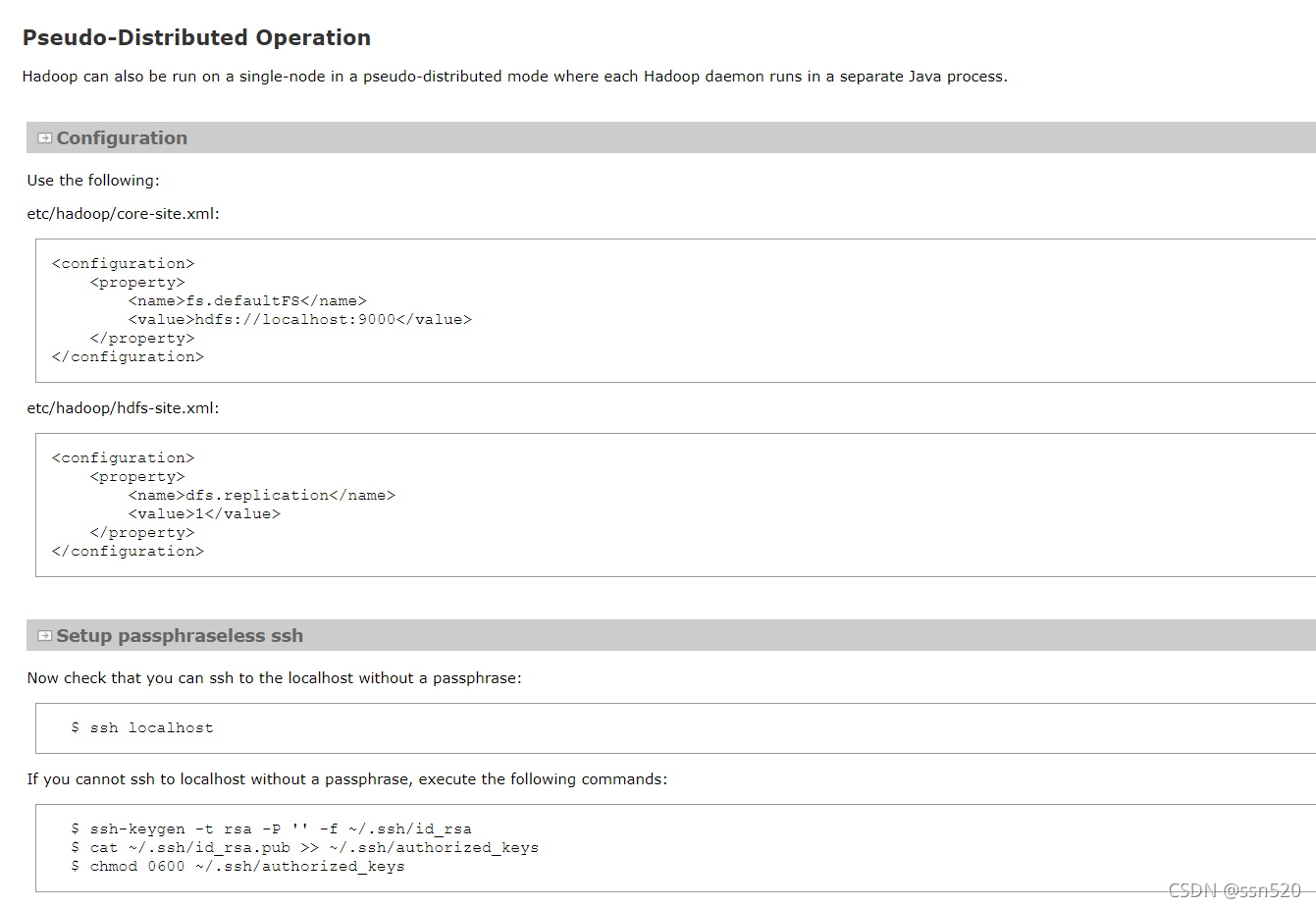
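The grep example job counts how often each string matching the regular expression dfs[a-z.]+ occurs in the input files and writes count/match pairs, most frequent first. A rough local approximation with standard tools, useful for cross-checking part-r-00000; this is a sketch, not how Hadoop computes it (Hadoop uses Java regular expressions, which match the same strings as grep -E for this simple pattern), and ties may be ordered differently:

```shell
# Count matches of dfs[a-z.]+ across *.xml files in a directory and print
# "count<TAB>match" lines, highest count first.
count_dfs_matches() {
  local dir="$1"
  grep -ohE 'dfs[a-z.]+' "$dir"/*.xml |
    sort | uniq -c |
    sort -k1,1nr -k2,2 |
    awk '{ printf "%s\t%s\n", $1, $2 }'
}

# Usage, from /home/ssn/app/hadoop:
#   count_dfs_matches input
```

Note that re-running the Hadoop job fails while the output directory already exists, so remove output first (rm -rf output) before a second run.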

Pseudo-distributed deployment (Pseudo-Distributed Operation)

Screenshot of the official documentation

a. Edit core-site.xml:

[root@localhost output]# cd /home/ssn/app/hadoop

[root@localhost hadoop]# vi etc/hadoop/core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>

localhost is this machine's hostname. The default is localhost; since I kept the default, no change is needed here. Replace it with your own machine's hostname.
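The edit can also be scripted. This sketch rewrites core-site.xml with the fs.defaultFS setting above and keeps a .bak copy of the previous file; the function name and the hostname parameter are illustrative:

```shell
# Write a minimal core-site.xml for pseudo-distributed mode.
# $1: Hadoop configuration directory, $2: NameNode hostname (default: localhost).
write_core_site() {
  local conf_dir="$1" host="${2:-localhost}" f
  f="$conf_dir/core-site.xml"
  # Keep a copy of the existing file before overwriting it.
  if [ -f "$f" ]; then cp "$f" "$f.bak" || return 1; fi
  cat > "$f" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:9000</value>
  </property>
</configuration>
EOF
}

# Usage:
#   write_core_site /home/ssn/app/hadoop/etc/hadoop
```

Once the file is in place, bin/hdfs getconf -confKey fs.defaultFS prints the value Hadoop actually picks up.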

b. Edit hdfs-site.xml:

[root@localhost hadoop]# vi etc/hadoop/hdfs-site.xml

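<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>

The user ssn was created earlier and should have been used for these edits, but everything so far was edited as root, so the ownership has to be fixed: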

[root@localhost hadoop]# cd /home/ssn/app

[root@localhost app]# chown -R ssn:ssn hadoop/*

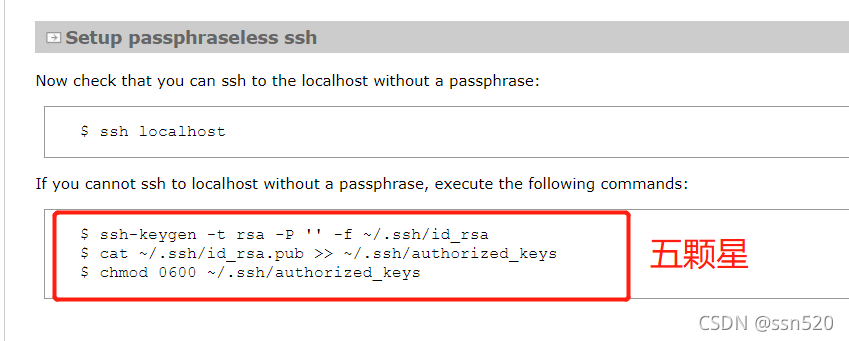
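To confirm that the chown above left nothing owned by root, list every file under the installation whose owner is not ssn; empty output means the ownership is consistent. A sketch with an illustrative function name. Note that the glob hadoop/* does not match hidden files; running chown -R ssn:ssn on hadoop-3.2.2 itself would also cover those.

```shell
# Print every path under $1 whose owner is not $2 (default: the current user).
# -H makes find follow $1 itself when it is a symlink such as app/hadoop,
# without following other symlinks found inside the tree.
find_foreign_owned() {
  local dir="$1" owner="${2:-$(id -un)}"
  find -H "$dir" ! -user "$owner" -print
}

# Usage, as root:
#   find_foreign_owned /home/ssn/app/hadoop ssn
```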

c. Switch to user ssn and set up passwordless SSH login to localhost

[ssn@localhost ~]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

[ssn@localhost ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[ssn@localhost ~]$ chmod 0600 ~/.ssh/authorized_keys
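With its default StrictModes setting, sshd ignores authorized_keys if the file, ~/.ssh or the home directory is writable by group or others, and the login falls back to a password prompt. A minimal sketch for checking the first two (function names are illustrative):

```shell
# Succeed if the path has neither the group-write nor the other-write bit set.
not_group_or_world_writable() {
  local mode
  mode=$(stat -c '%a' "$1") || return 1
  # %a prints the octal mode (e.g. 700); the leading 0 makes the shell read it as octal.
  [ $(( 0$mode & 022 )) -eq 0 ]
}

# Check ~/.ssh (or the given directory) and its authorized_keys file.
check_ssh_perms() {
  local ssh_dir="${1:-$HOME/.ssh}"
  not_group_or_world_writable "$ssh_dir" &&
    not_group_or_world_writable "$ssh_dir/authorized_keys"
}

# Usage, as user ssn:
#   check_ssh_perms && echo "permissions OK"
```

Once the host key has been accepted on the first login, ssh -o BatchMode=yes localhost true fails instead of prompting for a password if key-based login is not working.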


[ssn@localhost ~]$ ssh localhost    # logs in as the current user; equivalent to the next line

[ssn@localhost ~]$ ssh ssn@localhost

d. Format the filesystem

[ssn@localhost ~]$ cd app/hadoop

[ssn@localhost hadoop]$ bin/hdfs namenode -format

2021-11-26 19:57:23,715 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1232999028-127.0.0.1-1637927843705

2021-11-26 19:57:23,729 INFO common.Storage: Storage directory /tmp/hadoop-ssn/dfs/name has been successfully formatted.

2021-11-26 19:57:23,767 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-ssn/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2021-11-26 19:57:23,846 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-ssn/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 398 bytes saved in 0 seconds .

2021-11-26 19:57:23,855 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2021-11-26 19:57:23,860 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2021-11-26 19:57:23,861 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/

[ssn@localhost hadoop]$
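The format log reports where the NameNode metadata was written. Because hadoop.tmp.dir defaults to /tmp/hadoop-${user.name}, the path in the log above lies under /tmp, the directory that step 3 notes is cleaned automatically. A small sketch (function name illustrative) extracts that path from a saved log; Hadoop prints these INFO messages on stderr, hence the 2>&1 in the usage line:

```shell
# Read "namenode -format" output on stdin and print each storage directory
# reported as successfully formatted.
formatted_dirs() {
  sed -n 's/.*Storage directory \(.*\) has been successfully formatted\..*/\1/p'
}

# Usage:
#   bin/hdfs namenode -format 2>&1 | tee format.log
#   formatted_dirs < format.log
```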

e. Start the NameNode and DataNode daemons:

[ssn@localhost hadoop]$ sbin/start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [localhost.localdomain]

localhost.localdomain: Warning: Permanently added 'localhost.localdomain' (ECDSA) to the list of known hosts.

[ssn@localhost hadoop]$

jps shows whether the services started successfully, but it is not entirely reliable; checking with ps -ef | grep hadoop is recommended.

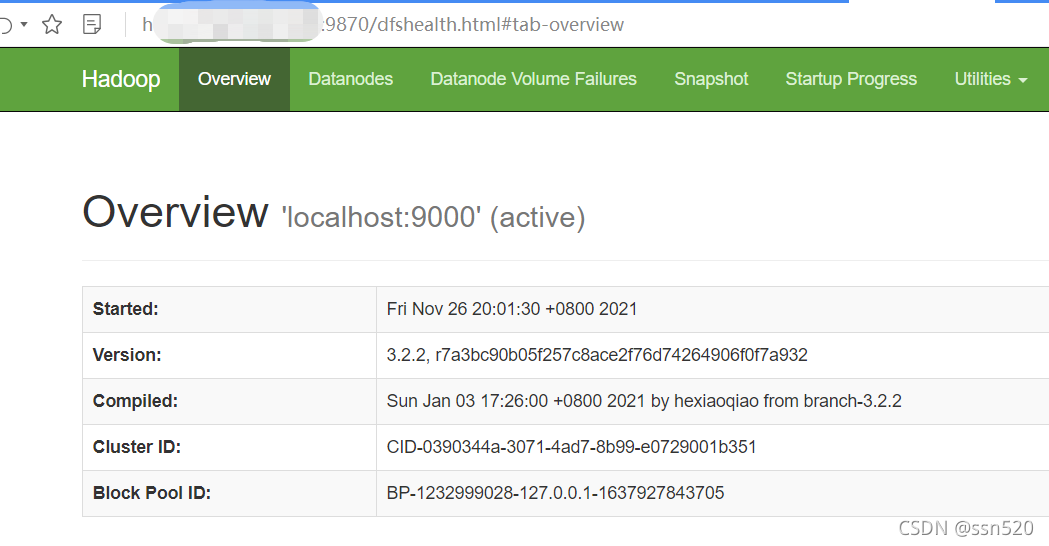
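In pseudo-distributed mode, start-dfs.sh should leave three HDFS daemons running: NameNode, DataNode and SecondaryNameNode (the startup messages above name all three). A minimal sketch that checks jps output for them; the function name is illustrative:

```shell
# Read jps output ("PID Name" per line) on stdin; fail and report on stderr
# if any of the expected HDFS daemons is missing.
check_hdfs_daemons() {
  local procs missing=0 d
  procs=$(awk '{ print $2 }')
  for d in NameNode DataNode SecondaryNameNode; do
    # -x requires a whole-line match, so "NameNode" does not match "SecondaryNameNode".
    if ! printf '%s\n' "$procs" | grep -qx "$d"; then
      echo "missing: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, as user ssn:
#   jps | check_hdfs_daemons && echo "HDFS daemons are up"
```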

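If you are using a cloud server, open the port in its security group first, then browse to your-IP-address:9870. A successful visit looks like the figure below.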

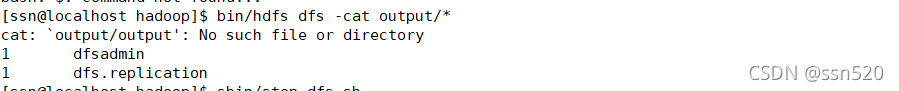

g. Run the MapReduce example on HDFS

Note:

First, as root, run

rm -rf /tmp/hadoop

Then, as user ssn, run:

[ssn@localhost hadoop]$ bin/hdfs dfs -mkdir /user

[ssn@localhost hadoop]$ bin/hdfs dfs -mkdir /user/ssn

[ssn@localhost hadoop]$ bin/hdfs dfs -mkdir input

[ssn@localhost hadoop]$ bin/hdfs dfs -put etc/hadoop/*.xml input

[ssn@localhost hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar grep input output 'dfs[a-z.]+'

[ssn@localhost hadoop]$ bin/hdfs dfs -get output output

[ssn@localhost hadoop]$ bin/hdfs dfs -cat output/*

[ssn@localhost hadoop]$ sbin/stop-dfs.sh
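Relative HDFS paths such as input and output resolve against the user's HDFS home directory, /user/ followed by the user name, which is why /user/ssn is created first. A small sketch of that resolution rule (function name illustrative; full hdfs:// URIs are not handled):

```shell
# Show where a path given to "hdfs dfs" ends up: absolute paths are used as-is,
# relative ones are placed under /user/<user> (default: the current user).
resolve_hdfs_path() {
  local path="$1" user="${2:-$(id -un)}"
  case "$path" in
    /*) printf '%s\n' "$path" ;;
    *) printf '/user/%s/%s\n' "$user" "$path" ;;
  esac
}

# Usage:
#   resolve_hdfs_path input ssn
```

So for user ssn, bin/hdfs dfs -ls input and bin/hdfs dfs -ls /user/ssn/input list the same directory, and re-running the example first requires removing the old result with bin/hdfs dfs -rm -r output.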
