DataNode fails to start with the error Incompatible clusterIDs

Environment

- Hadoop version: Hadoop 3.3.1

- OS version: CentOS 7.4

- Java version: Java SE 1.8.0_301

Error summary

java.io.IOException: Incompatible clusterIDs in /opt/module/hadoop-3.3.1/data/dfs/data: namenode clusterID = CID-aa23cfe4-9ad3-4c06-87fc-e862c8f3a722; datanode clusterID = CID-55fa9a51-7777-4ff4-87d6-4df7cf2cb8b9

Problem description

The DataNode fails to start. The log file /opt/module/hadoop-3.3.1/logs/hadoop-bordy-datanode-hadoop102.log contains the following errors:

2021-11-29 21:58:51,350 INFO org.apache.hadoop.hdfs.server.common.Storage: Using 1 threads to upgrade data directories (dfs.datanode.parallel.volumes.load.threads.num=1, dataDirs=1)

2021-11-29 21:58:51,354 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /opt/module/hadoop-3.3.1/data/dfs/data/in_use.lock acquired by nodename 13694@hadoop102

2021-11-29 21:58:51,356 WARN org.apache.hadoop.hdfs.server.common.Storage: Failed to add storage directory [DISK]file:/opt/module/hadoop-3.3.1/data/dfs/data

java.io.IOException: Incompatible clusterIDs in /opt/module/hadoop-3.3.1/data/dfs/data: namenode clusterID = CID-aa23cfe4-9ad3-4c06-87fc-e862c8f3a722; datanode clusterID = CID-55fa9a51-7777-4ff4-87d6-4df7cf2cb8b9

at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:746)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadStorageDirectory(DataStorage.java:296)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadDataStorage(DataStorage.java:409)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:389)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:561)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1753)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1689)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:394)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:295)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:854)

at java.lang.Thread.run(Thread.java:748)

2021-11-29 21:58:51,358 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid a4eeff59-0192-4402-8278-4743158fa405) service to hadoop101/192.168.2.101:8020. Exiting.

java.io.IOException: All specified directories have failed to load.

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:562)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1753)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1689)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:394)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:295)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:854)

at java.lang.Thread.run(Thread.java:748)

2021-11-29 21:58:51,359 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool <registering> (Datanode Uuid a4eeff59-0192-4402-8278-4743158fa405) service to hadoop101/192.168.2.101:8020

2021-11-29 21:58:51,363 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Removed Block pool <registering> (Datanode Uuid a4eeff59-0192-4402-8278-4743158fa405)

2021-11-29 21:58:53,364 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Exiting Datanode

2021-11-29 21:58:53,424 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DataNode at hadoop102/192.168.2.102

************************************************************/

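Root cause

Hadoop's upgrade feature requires each DataNode to store a persistent clusterID in its VERSION file. When a DataNode starts, it checks that value against the clusterID in the NameNode's VERSION file. If the two do not match, startup fails with the "Incompatible clusterIDs" exception. See the official CCR [HDFS-107].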
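The two IDs being compared can be read straight from the exception message. The following is a minimal sketch, assuming the message keeps the exact format shown in the log above; the function name `extract_cluster_ids` is a hypothetical helper, not part of Hadoop.

```python
import re

# Matches the message format seen in the DataNode log above.
# Assumption: IDs always look like "CID-" followed by hex digits and dashes.
CLUSTER_ID_PATTERN = re.compile(
    r"namenode clusterID = (?P<namenode>CID-[0-9a-fA-F-]+); "
    r"datanode clusterID = (?P<datanode>CID-[0-9a-fA-F-]+)"
)


def extract_cluster_ids(log_line):
    """Return (namenode_id, datanode_id), or None if the line does not match."""
    match = CLUSTER_ID_PATTERN.search(log_line)
    if match is None:
        return None
    return match.group("namenode"), match.group("datanode")


if __name__ == "__main__":
    line = (
        "java.io.IOException: Incompatible clusterIDs in "
        "/opt/module/hadoop-3.3.1/data/dfs/data: "
        "namenode clusterID = CID-aa23cfe4-9ad3-4c06-87fc-e862c8f3a722; "
        "datanode clusterID = CID-55fa9a51-7777-4ff4-87d6-4df7cf2cb8b9"
    )
    ids = extract_cluster_ids(line)
    if ids is not None:
        namenode_id, datanode_id = ids
        print("NameNode:", namenode_id)
        print("DataNode:", datanode_id)
        print("match:", namenode_id == datanode_id)
```

Returning `None` for non-matching lines lets the helper be applied to every line of a log file without special-casing.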

Analysis steps

-

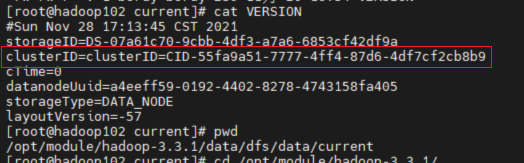

查看DataNode 目录

/opt/module/hadoop-3.3.1/data/dfs/data/current下的VERSION文件中的clusterID.

-

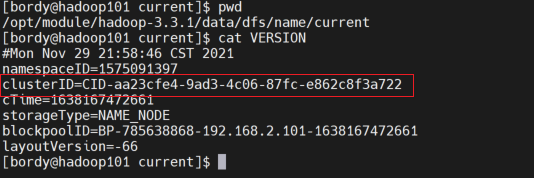

查看 NamaNode 目录

/opt/module/hadoop-3.3.1/data/dfs/name/current下的VERSION文件中的clusterID.

-

发现两个文件中的 clusterID 缺失不匹配.

-

经了解,HDFS架构中,每个DataNode 需要与 NameNode 进行通信, ClusterID 为 NameNode 的唯一标识.
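The manual comparison above can also be scripted. This is a rough sketch, assuming the VERSION file is a Java-properties-style text file with one `key=value` pair per line and `#` comment lines; `parse_version_text` and `read_cluster_id` are hypothetical helper names. Judging by the log, the NameNode (hadoop101) and the failing DataNode (hadoop102) are on different hosts, so each file is read on its own machine.

```python
import sys
from pathlib import Path


def parse_version_text(text):
    """Parse 'key=value' lines into a dict, skipping blanks and '#' comments."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def read_cluster_id(version_path):
    """Return the clusterID stored in a VERSION file, or None if it is absent."""
    return parse_version_text(Path(version_path).read_text()).get("clusterID")


if __name__ == "__main__":
    # Usage: python read_cluster_id.py <path-to-VERSION>
    # e.g. /opt/module/hadoop-3.3.1/data/dfs/data/current/VERSION on the DataNode
    print(read_cluster_id(sys.argv[1]))
```

Running it once on the NameNode host and once on the DataNode host gives the two values to compare.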

Solution

In the VERSION file of the DataNode that failed to start, change the clusterID value to the clusterID of the primary NameNode.
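The edit can be done by hand in a text editor. As an alternative, here is a minimal sketch of the same change in script form, assuming the DataNode is stopped, the VERSION file contains a line starting with `clusterID=`, and the NameNode's clusterID has already been looked up. `set_cluster_id` is a hypothetical helper, and the `.bak` backup is an extra precaution not mentioned in the original steps.

```python
import shutil
import sys
from pathlib import Path


def set_cluster_id(version_path, new_cluster_id):
    """Rewrite the clusterID line of a VERSION file, keeping a .bak copy."""
    path = Path(version_path)
    lines = path.read_text().splitlines()

    replaced = False
    for index, line in enumerate(lines):
        if line.startswith("clusterID="):
            lines[index] = "clusterID=" + new_cluster_id
            replaced = True
    if not replaced:
        raise ValueError("no clusterID entry found in " + str(path))

    # Back up the original only once we know the file will be changed.
    shutil.copy2(path, path.with_name(path.name + ".bak"))
    path.write_text("\n".join(lines) + "\n")


if __name__ == "__main__":
    # Usage: python set_cluster_id.py <path-to-DataNode-VERSION> <NameNode-clusterID>
    set_cluster_id(sys.argv[1], sys.argv[2])
```

With the values from this case, the DataNode's VERSION file would end up holding CID-aa23cfe4-9ad3-4c06-87fc-e862c8f3a722. After the change, start the DataNode again (in Hadoop 3, for example with `hdfs --daemon start datanode`) and check the log to confirm the error is gone.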