1 Creating a DF from a data source

Raw data (a.json, one JSON object per line):

{"name":"Tom","age":18}

{"name":"Alice","age":17}

Steps:

// Read the JSON file (by default spark.read.json expects one JSON object per line)

scala> val df = spark.read.json("file:///opt/module/spark/mycode/a.json")

df: org.apache.spark.sql.DataFrame = [age: bigint, name: string]

// Register the DF as a temporary view

scala> df.createOrReplaceTempView("people")

// Query the view with SQL and display the result

scala> spark.sql("select * from people").show

// Output

+---+-----+

|age| name|

+---+-----+

| 18|  Tom|

| 17|Alice|

+---+-----+
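Outside spark-shell there is no ready-made spark object, so an application has to build its own SparkSession. The steps above can be sketched as a standalone program, assuming Spark SQL (2.x or 3.x) is on the classpath and the job runs in local mode; the object name JsonPeople and the temporary file that stands in for a.json are illustrative only. The sample is written as JSON Lines, with no commas between records.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path}

import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical standalone version of section 1 (names and paths are illustrative)
object JsonPeople {
  // Writes the sample records as JSON Lines: one object per line, no separating commas
  def writeSample(): Path = {
    val path = Files.createTempFile("people", ".json")
    path.toFile.deleteOnExit()
    val lines = "{\"name\":\"Tom\",\"age\":18}\n{\"name\":\"Alice\",\"age\":17}\n"
    Files.write(path, lines.getBytes(StandardCharsets.UTF_8))
    path
  }

  // Reads the file, registers the temp view "people" and queries it with SQL
  def load(spark: SparkSession, path: Path): DataFrame = {
    val df = spark.read.json(path.toUri.toString) // inferred schema: [age: bigint, name: string]
    df.createOrReplaceTempView("people")
    spark.sql("select * from people")
  }

  def main(args: Array[String]): Unit = {
    // spark-shell predefines `spark`; an application creates its own SparkSession
    val spark = SparkSession.builder()
      .appName("JsonPeople")
      .master("local[*]") // local mode assumed; omit when submitting to a cluster
      .getOrCreate()
    try load(spark, writeSample()).show()
    finally spark.stop()
  }
}
```

The query is kept in a separate load method so the result can be collected or inspected instead of only printed.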

2 Creating a DF through reflection

Raw data (b.txt):

Tom,18

Alice,17

Steps:

// Import the implicit conversions that let an RDD be converted to a DataFrame (e.g. with toDF)

scala> import spark.implicits._

// 1. Define a case class

scala> case class People(name: String, age: Int)

// 2. Create an RDD from the text file b.txt

scala> val rdd = sc.textFile("file:///opt/module/spark/mycode/b.txt")

rdd: org.apache.spark.rdd.RDD[String] = file:///opt/module/spark/mycode/b.txt MapPartitionsRDD[1] at textFile at <console>:24

// 3. Use map to turn each line into a People object, then call toDF to get a DF

scala> val df = rdd.map(_.split(",")).map(data=>People(data(0),data(1).toInt)).toDF

df: org.apache.spark.sql.DataFrame = [name: string, age: int]

// 4. Display the DF

scala> df.show

+-----+---+

| name|age|

+-----+---+

|  Tom| 18|

|Alice| 17|

+-----+---+

Note: the spark in import spark.implicits._ refers to the SparkSession object (spark-shell creates a SparkSession named spark by default).
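In a compiled application the reflection approach usually needs two adjustments: the case class is defined at the top level rather than inside the method that calls toDF (defining it inside the method commonly fails with a missing TypeTag or encoder error), and the implicits are imported from a SparkSession value you create yourself, since import spark.implicits._ works on any stable identifier of type SparkSession. A minimal sketch, assuming Spark SQL on the classpath and local mode; ReflectPeople and the inline sample lines (used in place of b.txt) are illustrative.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Top-level case class so Spark can derive its encoder by reflection
case class People(name: String, age: Int)

object ReflectPeople {
  // Parses "name,age" lines into People objects and converts them to a DataFrame
  def toPeopleDF(spark: SparkSession, lines: Seq[String]): DataFrame = {
    import spark.implicits._ // `spark` is a stable parameter, so this import compiles
    val rdd = spark.sparkContext.parallelize(lines) // stands in for sc.textFile on b.txt
    rdd.map(_.split(","))
      .map(data => People(data(0).trim, data(1).trim.toInt))
      .toDF() // columns follow the case-class fields: [name: string, age: int]
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("ReflectPeople").master("local[*]").getOrCreate()
    try toPeopleDF(spark, Seq("Tom,18", "Alice,17")).show()
    finally spark.stop()
  }
}
```

The trim calls are an addition over the transcript; they only guard against stray spaces around the commas.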

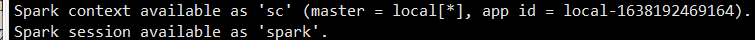

启动spark-shell时的信息:

3 Creating a DF by defining the schema programmatically

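When a case class cannot be defined in advance (for example, when the fields are only known at run time), the schema of the RDD has to be defined programmatically.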

// Import the required packages

scala> import org.apache.spark.sql.types._

import org.apache.spark.sql.types._

scala> import org.apache.spark.sql.Row

import org.apache.spark.sql.Row

// 1. Define a schema string containing the field names separated by spaces

scala> val schemaString = "name age"

schemaString: String = name age

// 2. Build the schema from the schema string (each field is a nullable StringType)

scala> val fields = schemaString.split(" ").map(fieldName=>StructField(fieldName,StringType,nullable=true))

fields: Array[org.apache.spark.sql.types.StructField] = Array(StructField(name,StringType,true), StructField(age,StringType,true))

scala> val schema = StructType(fields)

schema: org.apache.spark.sql.types.StructType = StructType(StructField(name,StringType,true), StructField(age,StringType,true))
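The schema from step 2 can also be built without splitting a string, for example field by field with StructType.add, or, in newer Spark releases, by parsing a DDL string with StructType.fromDDL. A small sketch comparing the three, assuming Spark SQL on the classpath; the object name SchemaVariants is illustrative.

```scala
import org.apache.spark.sql.types.{StringType, StructField, StructType}

object SchemaVariants {
  val schemaString = "name age"

  // 1. As in the transcript: one nullable StringType field per name in the string
  val fromString: StructType =
    StructType(schemaString.split(" ").map(fieldName => StructField(fieldName, StringType, nullable = true)))

  // 2. Built field by field; add(name, type) creates a nullable field by default
  val fromAdd: StructType = new StructType().add("name", StringType).add("age", StringType)

  // 3. Parsed from a DDL-formatted column list
  val fromDdl: StructType = StructType.fromDDL("name STRING, age STRING")

  def main(args: Array[String]): Unit = {
    fromString.printTreeString() // prints the schema as an indented tree
    println(fromString == fromAdd)
  }
}
```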

// 3. Convert each record of rdd (the RDD of b.txt lines created in section 2) into a Row

scala> val rowRDD = rdd.map(_.split(",")).map(data=>Row(data(0),data(1)))

rowRDD: org.apache.spark.rdd.RDD[org.apache.spark.sql.Row] = MapPartitionsRDD[19] at map at <console>:29

// 4. Create the DF from the Row RDD and the schema

scala> val df = spark.createDataFrame(rowRDD, schema)

df: org.apache.spark.sql.DataFrame = [name: string, age: string]

// 5. Display the DF

scala> df.show

+-----+---+

| name|age|

+-----+---+

|  Tom| 18|

|Alice| 17|

+-----+---+
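Because every field in the schema above is StringType, age ends up as a string column (compare [name: string, age: string] with the int column from section 2). If a numeric column is wanted, one option is to declare IntegerType in the StructField and convert the value while building each Row, since createDataFrame does not cast values to match the schema. A sketch under the same assumptions (Spark SQL on the classpath, local mode); TypedSchemaPeople and the inline sample lines are illustrative.

```scala
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.{DataType, IntegerType, StringType, StructField, StructType}

object TypedSchemaPeople {
  // Field names and types are plain data, so they could be supplied at run time
  val fieldTypes: Seq[(String, DataType)] = Seq("name" -> StringType, "age" -> IntegerType)
  val schema: StructType =
    StructType(fieldTypes.map { case (name, dataType) => StructField(name, dataType, nullable = true) })

  // Converts "name,age" lines into Rows whose values already match the schema types
  def toDF(spark: SparkSession, lines: Seq[String]): DataFrame = {
    val rowRDD = spark.sparkContext.parallelize(lines)
      .map(_.split(","))
      .map(data => Row(data(0).trim, data(1).trim.toInt)) // an Int is required for IntegerType
    spark.createDataFrame(rowRDD, schema)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("TypedSchemaPeople").master("local[*]").getOrCreate()
    try {
      val df = toDF(spark, Seq("Tom,18", "Alice,17"))
      df.printSchema() // age is now reported as integer
      df.show()
    } finally spark.stop()
  }
}
```

Passing the raw string "18" for an IntegerType field would instead fail at run time, which is why the conversion happens inside the map.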