Case requirements:

Filter the log file: URLs that contain tuomasi are written to tuomasi.log, and URLs that do not contain tuomasi are written to other.log.

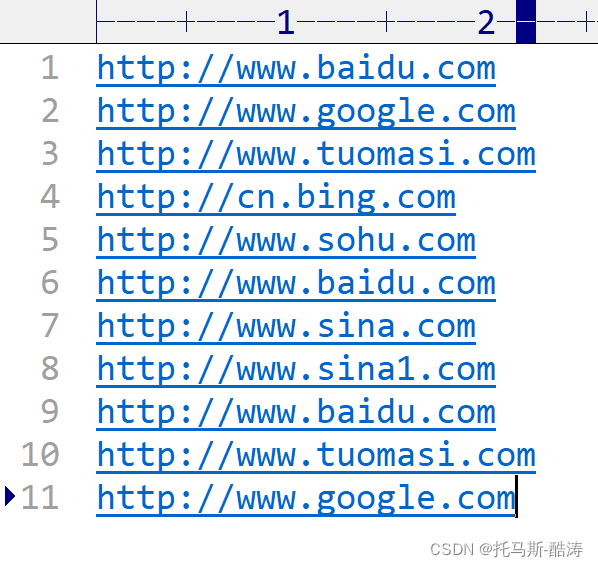

Input data:


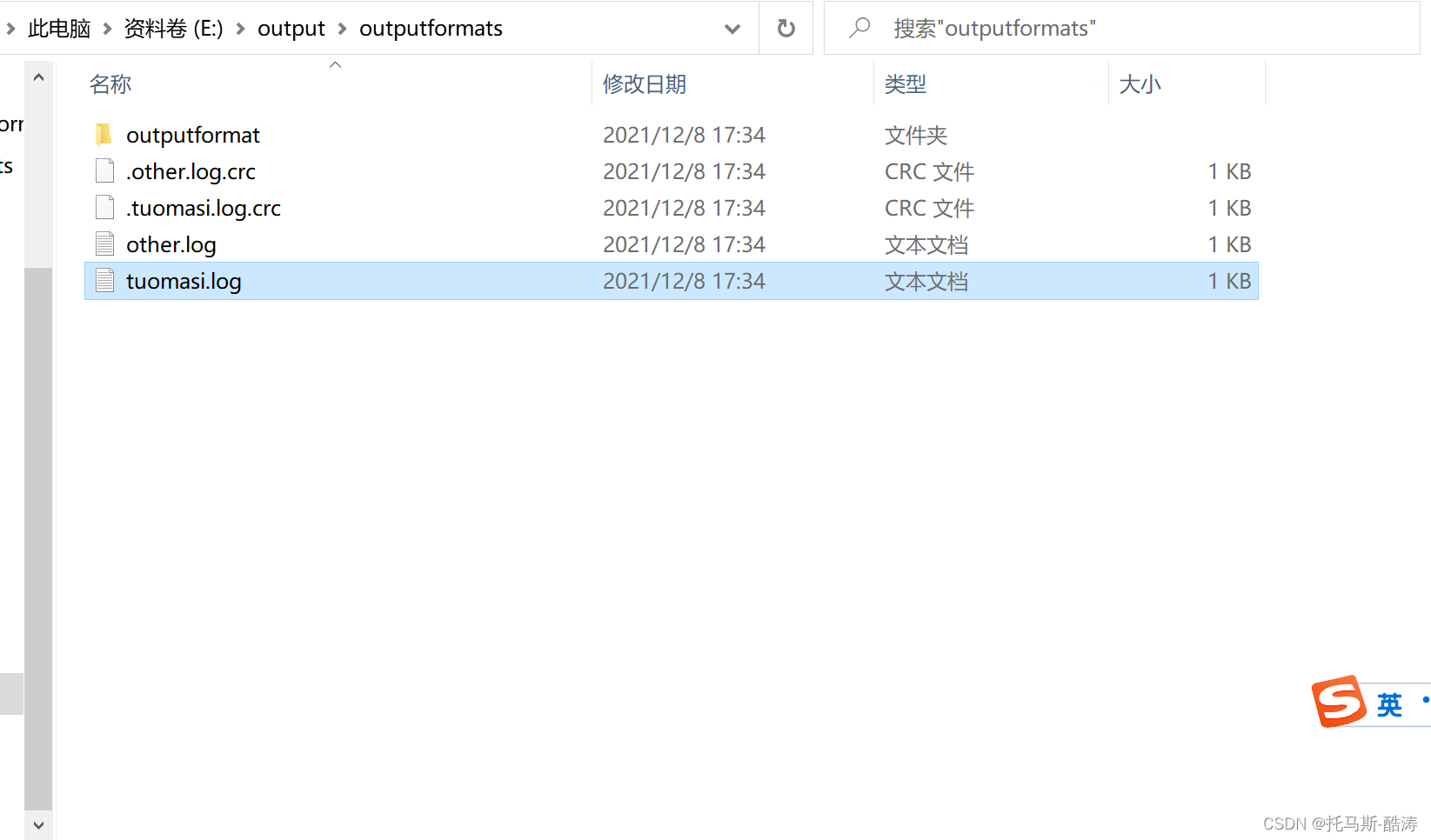

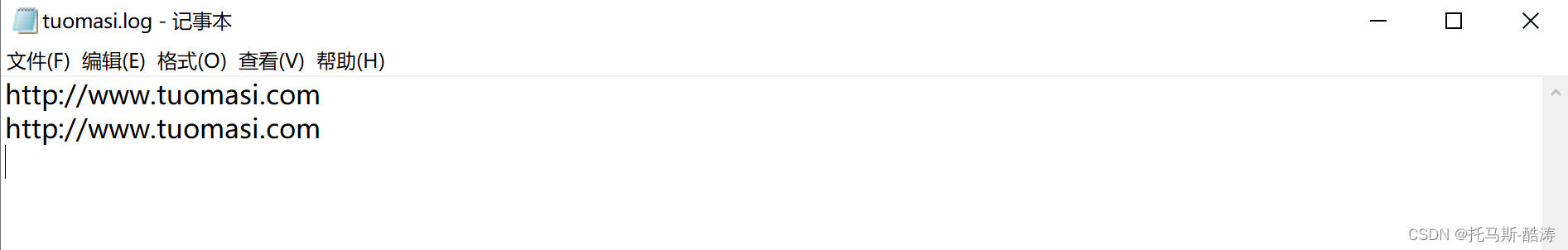

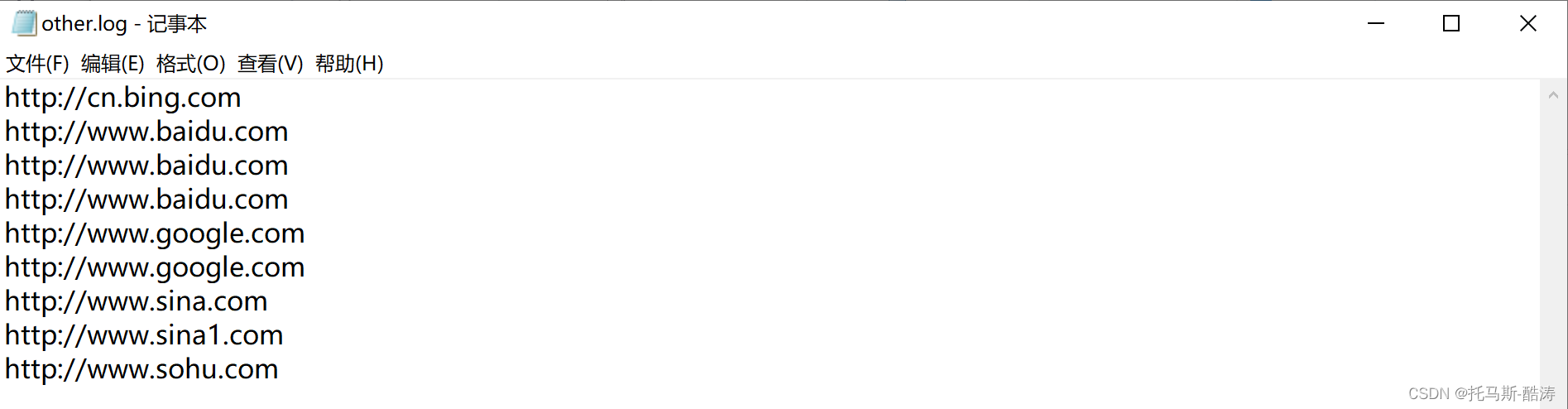

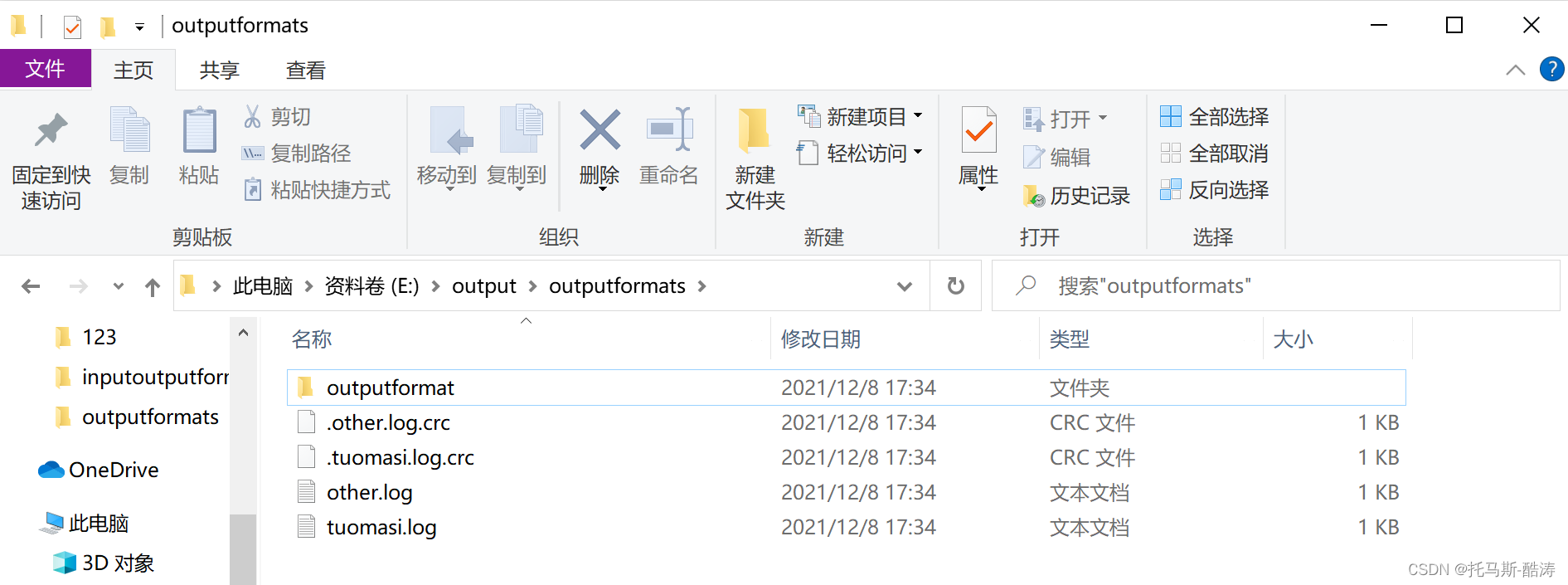

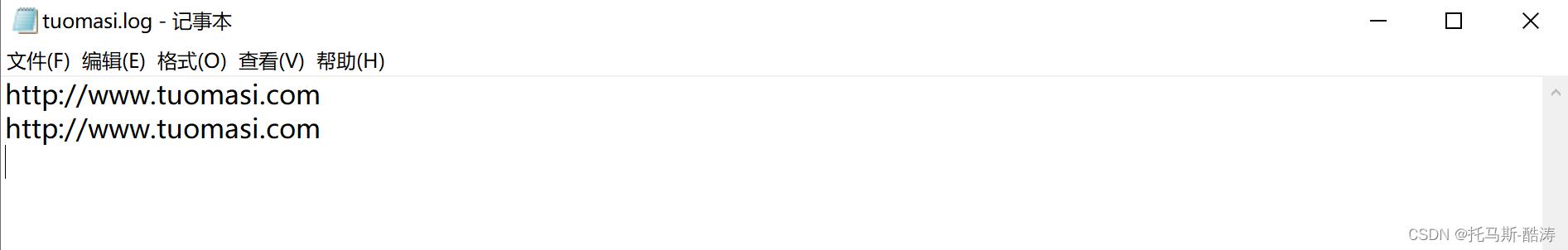

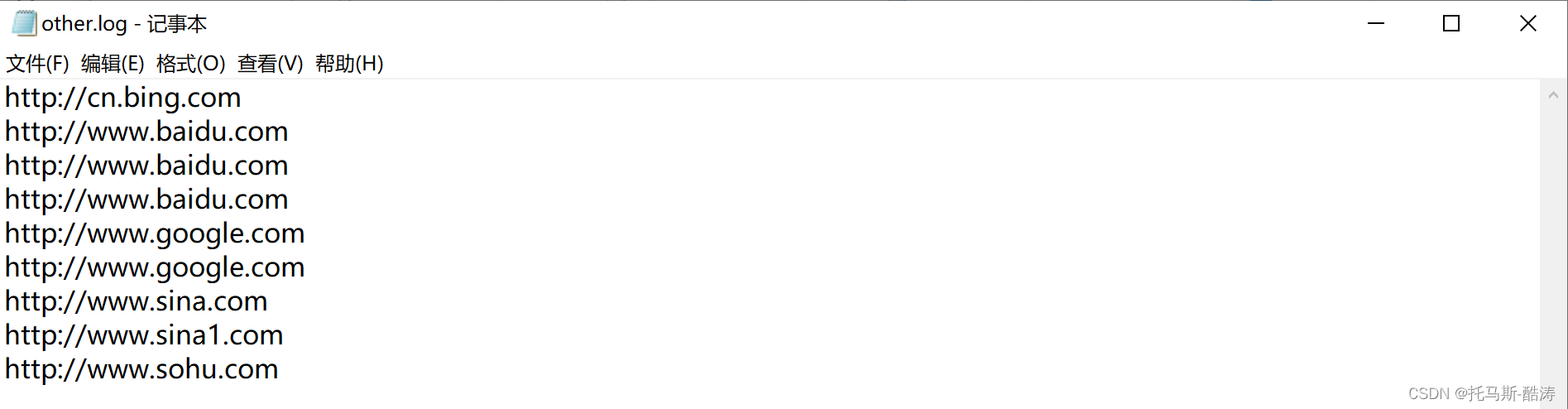

Expected output data:


Note: the output shows that URLs containing the string tuomasi have been written to tuomasi.log, and all other URLs have been written to other.log.
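The expected split can also be computed outside Hadoop, which is handy for checking the job's result. The following is a minimal plain-Java sketch of the routing rule only; the class name `LogRouter` and the sample URLs are made up for illustration and are not the contents of the input file.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class LogRouter {
    // Returns the name of the file a log line belongs in.
    static String targetFile(String line) {
        return line.contains("tuomasi") ? "tuomasi.log" : "other.log";
    }

    // Groups the lines by target file, keeping input order within each group.
    static Map<String, List<String>> route(List<String> lines) {
        return lines.stream().collect(Collectors.groupingBy(LogRouter::targetFile));
    }

    public static void main(String[] args) {
        // Hypothetical sample lines
        List<String> lines = Arrays.asList(
                "http://www.tuomasi.com",
                "http://www.example.com",
                "http://www.example.org");
        route(lines).forEach((file, urls) -> System.out.println(file + " <- " + urls));
    }
}
```

Note that `String.contains` is case-sensitive, so a line containing `Tuomasi` would go to other.log under this rule.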

Writing the program:

Overall program structure:


(1) Writing LogMapper

```java
package org.example.mapreduce.outputformat;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class LogMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Pass each input line through unchanged: the line is the output key and there is no value
        context.write(value, NullWritable.get());
    }
}
```

(2) Writing LogReducer

```java
package org.example.mapreduce.outputformat;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class LogReducer extends Reducer<Text, NullWritable, Text, NullWritable> {

    @Override
    protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        // Iterate over the values so that a URL appearing several times in the input
        // is written once per occurrence instead of being collapsed into one record
        for (NullWritable value : values) {
            context.write(key, NullWritable.get());
        }
    }
}
```
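To see why the loop in the reducer matters: identical keys are grouped before `reduce` runs, so `reduce` is called once per distinct URL and receives one value per occurrence. The plain-Java sketch below imitates that grouping with a `TreeMap` and compares the two ways of writing. It is an illustrative stand-in, not Hadoop's shuffle, and the class name and sample URLs are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class DuplicateDemo {
    // Imitates the grouping step: identical lines share one key,
    // keys are sorted, and each key stores its number of occurrences.
    static Map<String, Integer> group(List<String> lines) {
        Map<String, Integer> grouped = new TreeMap<>();
        for (String line : lines) {
            grouped.merge(line, 1, Integer::sum);
        }
        return grouped;
    }

    // Writing once per key: duplicate lines collapse into a single record.
    static List<String> writeOncePerKey(Map<String, Integer> grouped) {
        return new ArrayList<>(grouped.keySet());
    }

    // Writing once per value, as LogReducer does: every occurrence is kept.
    static List<String> writeOncePerValue(Map<String, Integer> grouped) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, Integer> e : grouped.entrySet()) {
            for (int i = 0; i < e.getValue(); i++) {
                out.add(e.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList(
                "http://www.example.com",
                "http://www.tuomasi.com",
                "http://www.example.com");
        Map<String, Integer> grouped = group(input);
        System.out.println(writeOncePerKey(grouped).size());   // 2
        System.out.println(writeOncePerValue(grouped).size()); // 3
    }
}
```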

(3) Writing LogOutputFormat

```java
package org.example.mapreduce.outputformat;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.RecordWriter;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class LogOutputFormat extends FileOutputFormat<Text, NullWritable> {

    @Override
    public RecordWriter<Text, NullWritable> getRecordWriter(TaskAttemptContext job) throws IOException, InterruptedException {
        return new LogRecordWriter(job);
    }
}
```

(4) Writing LogRecordWriter

```java
package org.example.mapreduce.outputformat;

import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.RecordWriter;
import org.apache.hadoop.mapreduce.TaskAttemptContext;

import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class LogRecordWriter extends RecordWriter<Text, NullWritable> {

    private final FSDataOutputStream tuomasiOut;
    private final FSDataOutputStream otherOut;

    public LogRecordWriter(TaskAttemptContext job) throws IOException {
        // Create one output stream per target file.
        // The IOException is propagated: swallowing it would leave the streams
        // null and only fail later, inside write()
        FileSystem fs = FileSystem.get(job.getConfiguration());
        tuomasiOut = fs.create(new Path("E:\\output\\outputformats\\tuomasi.log"));
        otherOut = fs.create(new Path("E:\\output\\outputformats\\other.log"));
    }

    @Override
    public void write(Text key, NullWritable value) throws IOException, InterruptedException {
        String log = key.toString();
        // Encode as UTF-8; writeBytes(String) keeps only the low byte of each char
        // and would corrupt non-ASCII text
        byte[] line = (log + "\n").getBytes(StandardCharsets.UTF_8);
        // Route the line by its content
        if (log.contains("tuomasi")) {
            tuomasiOut.write(line);
        } else {
            otherOut.write(line);
        }
    }

    @Override
    public void close(TaskAttemptContext context) throws IOException, InterruptedException {
        // Close both streams
        IOUtils.closeStream(tuomasiOut);
        IOUtils.closeStream(otherOut);
    }
}
```
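One detail is worth checking whenever log lines are written to an `FSDataOutputStream`, which extends `java.io.DataOutputStream`: `writeBytes(String)` discards the high byte of every character, whereas `write(byte[])` with an explicit UTF-8 encoding preserves the text. For plain ASCII URLs the two give the same bytes. The sketch below shows the difference using only the JDK's `DataOutputStream` with an in-memory buffer; the class and method names are made up for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class WriteBytesDemo {
    // Writes s with DataOutputStream.writeBytes, which keeps only the
    // low eight bits of each char.
    static byte[] viaWriteBytes(String s) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.writeBytes(s);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buf.toByteArray();
    }

    // Encodes s as UTF-8 first and writes the resulting bytes unchanged.
    static byte[] viaUtf8(String s) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.write(s.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buf.toByteArray();
    }

    public static void main(String[] args) {
        String ascii = "http://www.tuomasi.com";
        String nonAscii = "\u65e5\u5fd7"; // two CJK characters, written as escapes
        System.out.println(viaWriteBytes(ascii).length == viaUtf8(ascii).length); // true
        System.out.println(viaWriteBytes(nonAscii).length); // 2
        System.out.println(viaUtf8(nonAscii).length);       // 6
    }
}
```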

(5) Writing LogDriver

```java
package org.example.mapreduce.outputformat;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class LogDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Get the job
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // Set the jar by the driver class
        job.setJarByClass(LogDriver.class);

        // Associate the mapper and the reducer
        job.setMapperClass(LogMapper.class);
        job.setReducerClass(LogReducer.class);

        // Set the mapper's output key and value types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(NullWritable.class);

        // Set the final output key and value types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        // Use the custom OutputFormat
        job.setOutputFormatClass(LogOutputFormat.class);

        // Set the input and output paths.
        // LogOutputFormat extends FileOutputFormat, so an output directory is still
        // required even though the records go to the two .log files; the _SUCCESS
        // marker file is written there
        FileInputFormat.setInputPaths(job, new Path("E:\\input\\inputoutputformat"));
        FileOutputFormat.setOutputPath(job, new Path("E:\\output\\outputformats\\outputformat"));

        // Submit the job and wait for it to finish
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```

Result analysis:

Note: the program's output matches the expected result.

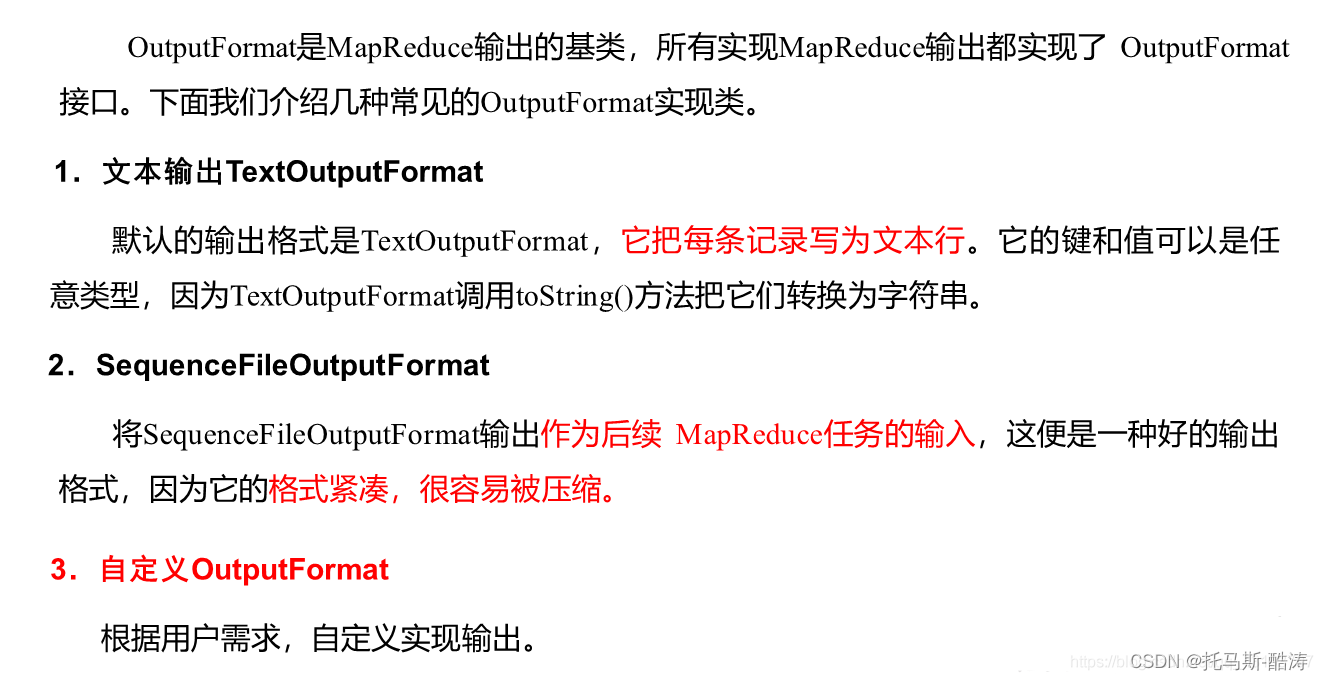

Overview

Implementations of the OutputFormat interface:


Custom OutputFormat:


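Summary: a custom OutputFormat gives direct control over the paths and format of the output files, which makes the program's output more convenient to work with.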
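The pattern behind the example is a small lifecycle: the output format hands out a writer, the framework calls `write` once per record, and `close` is called once at the end. As a rough illustration of that lifecycle without any Hadoop dependency, the sketch below uses in-memory streams in place of files. `RoutingWriterDemo`, `RoutingWriter`, and `run` are hypothetical names, not part of the Hadoop API, and the sample URLs are invented.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class RoutingWriterDemo {
    // Stand-in for a RecordWriter: one write call per record, one close at the end.
    static class RoutingWriter implements AutoCloseable {
        private final String keyword;
        private final OutputStream matchOut;
        private final OutputStream otherOut;

        RoutingWriter(String keyword, OutputStream matchOut, OutputStream otherOut) {
            this.keyword = keyword;
            this.matchOut = matchOut;
            this.otherOut = otherOut;
        }

        // Sends the record to one of the two streams depending on its content.
        void write(String record) throws IOException {
            OutputStream target = record.contains(keyword) ? matchOut : otherOut;
            target.write((record + "\n").getBytes(StandardCharsets.UTF_8));
        }

        @Override
        public void close() throws IOException {
            matchOut.close();
            otherOut.close();
        }
    }

    // Plays the framework's role: create the writer, feed it every record, close it.
    // Returns the contents of the "match" stream and the "other" stream.
    static String[] run(String keyword, String... records) {
        ByteArrayOutputStream match = new ByteArrayOutputStream();
        ByteArrayOutputStream other = new ByteArrayOutputStream();
        try (RoutingWriter writer = new RoutingWriter(keyword, match, other)) {
            for (String record : records) {
                writer.write(record);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String[] {
            new String(match.toByteArray(), StandardCharsets.UTF_8),
            new String(other.toByteArray(), StandardCharsets.UTF_8)
        };
    }

    public static void main(String[] args) {
        String[] result = run("tuomasi", "http://www.tuomasi.com", "http://www.example.com");
        System.out.print("match:\n" + result[0]);
        System.out.print("other:\n" + result[1]);
    }
}
```

Passing the streams into the writer's constructor, instead of hard-coding file paths inside it, is a design choice of this sketch that makes the routing logic easy to test in isolation.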