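This article describes how to store OPC UA historical data in a database. The database is SQLite3 and the environment is Debian 10; the same steps apply to Ubuntu.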

1 Source Code Origin

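The code is based on https://github.com/nicolasr75/open62541_sqlite . That project targets Windows and is built with Visual Studio. I reworked it substantially so that it builds on Linux. It should still work on Windows, but I have not tested that. Overall, the port took a fair amount of time.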

The modified project is on my GitHub at https://github.com/happybruce/opcua/tree/main

2 Project Source

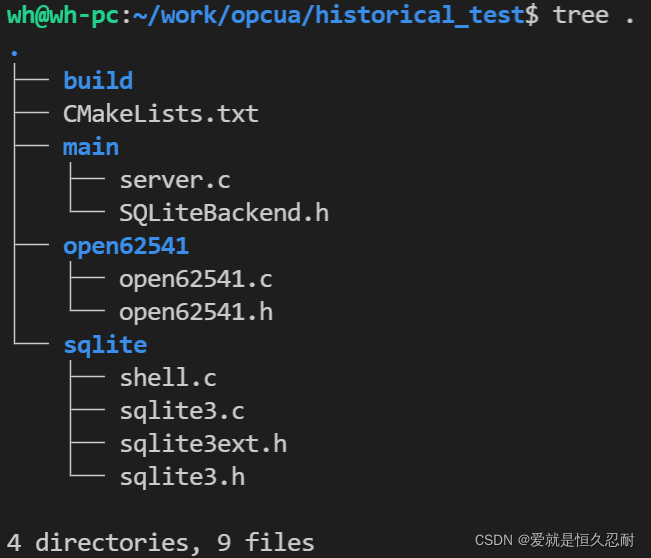

整体工程结构如下,

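open62541.c/h are generated by building open62541 with the historical data feature enabled. The sqlite directory holds the SQLite source code, downloaded and unpacked as-is.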

CMakeLists.txt reads as follows:

cmake_minimum_required(VERSION 3.5)

project(historicalSqlite3)

include_directories(open62541)

include_directories(sqlite)

set(source main/server.c open62541/open62541.c sqlite/sqlite3.c)

add_executable(server ${source})

target_link_libraries (server pthread dl)

server.c is adapted from tutorial_server_historicaldata.c, an example that ships with open62541. The integer variable is changed to a double, and the history backend is replaced with the sqlite3-based backend from this article:

#include <signal.h>

#include "open62541.h"

#include "SQLiteBackend.h"

static volatile UA_Boolean running = true; /* volatile: written from the signal handler */

static void stopHandler(int sign)

{

(void)sign;

UA_LOG_INFO(UA_Log_Stdout, UA_LOGCATEGORY_SERVER, "received ctrl-c");

running = false;

}

int main(void)

{

signal(SIGINT, stopHandler);

signal(SIGTERM, stopHandler);

UA_Server *server = UA_Server_new();

UA_ServerConfig *config = UA_Server_getConfig(server);

UA_ServerConfig_setDefault(config);

/*

* We need a gathering for the plugin to construct.

* The UA_HistoryDataGathering is responsible to collect data and store it to the database.

* We will use this gathering for one node, only. initialNodeIdStoreSize = 1

* The store will grow if you register more than one node, but this is expensive.

*/

UA_HistoryDataGathering gathering = UA_HistoryDataGathering_Default(1);

/*

* We set the responsible plugin in the configuration.

* UA_HistoryDatabase is the main plugin which handles the historical data service.

*/

config->historyDatabase = UA_HistoryDatabase_default(gathering);

/* Define the attributes of the double variable node */

UA_VariableAttributes attr = UA_VariableAttributes_default;

UA_Double myDouble = 17.2;

UA_Variant_setScalar(&attr.value, &myDouble, &UA_TYPES[UA_TYPES_DOUBLE]);

attr.description = UA_LOCALIZEDTEXT("en-US", "myDoubleValue");

attr.displayName = UA_LOCALIZEDTEXT("en-US", "myDoubleValue");

attr.dataType = UA_TYPES[UA_TYPES_DOUBLE].typeId;

/*

* We set the access level to also support history read

* This is what will be reported to clients

*/

attr.accessLevel = UA_ACCESSLEVELMASK_READ | UA_ACCESSLEVELMASK_WRITE | UA_ACCESSLEVELMASK_HISTORYREAD;

/*

* We also set this node to historizing, so the server internals also know about it.

*/

attr.historizing = true;

/* Add the variable node to the information model */

UA_NodeId doubleNodeId = UA_NODEID_STRING(1, "myDoubleValue");

UA_QualifiedName doubleName = UA_QUALIFIEDNAME(1, "myDoubleValue");

UA_NodeId parentNodeId = UA_NODEID_NUMERIC(0, UA_NS0ID_OBJECTSFOLDER);

UA_NodeId parentReferenceNodeId = UA_NODEID_NUMERIC(0, UA_NS0ID_ORGANIZES);

UA_NodeId outNodeId;

UA_NodeId_init(&outNodeId);

UA_StatusCode retval = UA_Server_addVariableNode(server,

doubleNodeId,

parentNodeId,

parentReferenceNodeId,

doubleName,

UA_NODEID_NUMERIC(0, UA_NS0ID_BASEDATAVARIABLETYPE),

attr,

NULL,

&outNodeId);

UA_LOG_INFO(UA_Log_Stdout, UA_LOGCATEGORY_SERVER, "UA_Server_addVariableNode %s", UA_StatusCode_name(retval));

/*

* Now we define the settings for our node

*/

UA_HistorizingNodeIdSettings setting;

setting.historizingBackend = UA_HistoryDataBackend_sqlite("database.sqlite");

/*

* We want the server to serve a maximum of 100 values per request.

* This value depends on the platform you are running the server on.

* A big server can serve more values, smaller ones less.

*/

setting.maxHistoryDataResponseSize = 100;

setting.historizingUpdateStrategy = UA_HISTORIZINGUPDATESTRATEGY_VALUESET;

/*

* At the end we register the node for gathering data in the database.

*/

retval = gathering.registerNodeId(server, gathering.context, &outNodeId, setting);

UA_LOG_INFO(UA_Log_Stdout, UA_LOGCATEGORY_SERVER, "registerNodeId %s", UA_StatusCode_name(retval));

retval = UA_Server_run(server, &running);

UA_LOG_INFO(UA_Log_Stdout, UA_LOGCATEGORY_SERVER, "UA_Server_run %s", UA_StatusCode_name(retval));

UA_Server_delete(server);

return (int)retval;

}

SQLiteBackend.h is shown below. The whole header exists to implement the function UA_HistoryDataBackend_sqlite():

#ifndef BACKEND_H

#define BACKEND_H

#include <time.h>

#include <stdio.h>

#include <stdint.h>

#include <string.h>

#include <stdlib.h>

#include "open62541.h"

#include "sqlite3.h"

static const size_t END_OF_DATA = SIZE_MAX;

#define QUERY_BUFFER_SIZE 500 /* a macro, so that query[] below is a fixed-size array and not a VLA */

UA_Int64 convertTimestampStringToUnixSeconds(const char* timestampString)

{

UA_DateTimeStruct dts;

memset(&dts, 0, sizeof(dts));

sscanf(timestampString, "%hu-%hu-%hu %hu:%hu:%hu",

&dts.year, &dts.month, &dts.day, &dts.hour, &dts.min, &dts.sec);

UA_DateTime dt = UA_DateTime_fromStruct(dts);

UA_Int64 t = UA_DateTime_toUnixTime(dt);

return t;

}

const char* convertUnixSecondsToTimestampString(UA_Int64 unixSeconds)

{

static char buffer[20];

UA_DateTime dt = UA_DateTime_fromUnixTime(unixSeconds);

UA_DateTimeStruct dts = UA_DateTime_toStruct(dt);

struct tm tm;

memset(&tm, 0, sizeof(tm));

tm.tm_year = dts.year - 1900;

tm.tm_mon = dts.month - 1;

tm.tm_mday = dts.day;

tm.tm_hour = dts.hour;

tm.tm_min = dts.min;

tm.tm_sec = dts.sec;

memset(buffer, 0, 20);

strftime(buffer, 20, "%Y-%m-%d %H:%M:%S", &tm);

return buffer;

}

//Context that is needed for the SQLite callback for copying data.

struct context_copyDataValues {

size_t maxValues;

size_t counter;

UA_DataValue *values;

};

typedef struct context_copyDataValues context_copyDataValues;

struct context_sqlite {

sqlite3* sqlite;

const char* measuringPointID;

};

static struct context_sqlite*

generateContext_sqlite(const char* filename)

{

sqlite3* handle;

char *errorMessage;

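int res = sqlite3_open(filename, &handle);

if (res != SQLITE_OK)

{

sqlite3_close(handle); // sqlite3_open may hand back a handle even when it fails

return NULL;

}

struct context_sqlite* ret = (struct context_sqlite*)UA_calloc(1, sizeof(struct context_sqlite));

if (ret == NULL)

{

sqlite3_close(handle); // do not leak the open database

return NULL;

}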

const char *sql = "DROP TABLE IF EXISTS PeriodicValues;"

"CREATE TABLE PeriodicValues(MeasuringPointID INT, Value DOUBLE, Timestamp DATETIME DEFAULT CURRENT_TIMESTAMP);";

res = sqlite3_exec(handle, sql, NULL, NULL, &errorMessage);

if (res != SQLITE_OK)

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

sqlite3_close(handle);

UA_free(ret); // release the context allocated above

return NULL;

}

ret->sqlite = handle;

//For this demo we have only one source measuring point which we hardcode in the context.

//A more advanced demo should determine the available measuring points from the source

//itself or maybe an external configuration file.

ret->measuringPointID = "1";

return ret;

}

static UA_StatusCode

serverSetHistoryData_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId,

UA_Boolean historizing,

const UA_DataValue *value)

{

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

size_t result;

char* errorMessage;

char query[QUERY_BUFFER_SIZE];

strncpy(query, "INSERT INTO PeriodicValues VALUES(1, ", QUERY_BUFFER_SIZE);

if (value->hasValue &&

value->status == UA_STATUSCODE_GOOD &&

value->value.type == &UA_TYPES[UA_TYPES_DOUBLE])

{

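char remaining[64]; // the previous 30-byte buffer was too small for most values

// %.17g prints a double with enough digits to read it back unchanged

snprintf(remaining, sizeof(remaining), "%.17g, CURRENT_TIMESTAMP);", *(double*)(value->value.data));

strncat(query, remaining, QUERY_BUFFER_SIZE - strlen(query) - 1);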

}

else

{

printf("%s | Error | historical value is invalid\n", __func__);

return UA_STATUSCODE_BADINTERNALERROR;

}

int res = sqlite3_exec(context->sqlite, query, NULL, NULL, &errorMessage);

if (res != SQLITE_OK)

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

return UA_STATUSCODE_BADINTERNALERROR;

}

return UA_STATUSCODE_GOOD;

}

static size_t

getEnd_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId)

{

return END_OF_DATA;

}

//This is a callback for all queries that return a single timestamp as the number of Unix seconds

static int timestamp_callback(void* result, int count, char **data, char **columns)

{

*(UA_Int64*)result = convertTimestampStringToUnixSeconds(data[0]);

return 0;

}

static int resultSize_callback(void* result, int count, char **data, char **columns)

{

*(size_t*)result = strtol(data[0], NULL, 10);

return 0;

}

static size_t

lastIndex_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId)

{

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

size_t result = END_OF_DATA; // stays END_OF_DATA when the table has no rows and the callback never runs

char* errorMessage;

char query[QUERY_BUFFER_SIZE];

strncpy(query, "SELECT Timestamp FROM PeriodicValues WHERE MeasuringPointID=", QUERY_BUFFER_SIZE);

strncat(query, context->measuringPointID, QUERY_BUFFER_SIZE);

strncat(query, " ORDER BY Timestamp DESC LIMIT 1", QUERY_BUFFER_SIZE);

int res = sqlite3_exec(context->sqlite, query, timestamp_callback, &result, &errorMessage);

if (res != SQLITE_OK)

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

return END_OF_DATA;

}

return result;

}

static size_t

firstIndex_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId)

{

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

size_t result = END_OF_DATA; // stays END_OF_DATA when the table has no rows and the callback never runs

char* errorMessage;

char query[QUERY_BUFFER_SIZE];

strncpy(query, "SELECT Timestamp FROM PeriodicValues WHERE MeasuringPointID=", QUERY_BUFFER_SIZE);

strncat(query, context->measuringPointID, QUERY_BUFFER_SIZE);

strncat(query, " ORDER BY Timestamp LIMIT 1", QUERY_BUFFER_SIZE);

int res = sqlite3_exec(context->sqlite, query, timestamp_callback, &result, &errorMessage);

if (res != SQLITE_OK)

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

return END_OF_DATA;

}

return result;

}

static UA_Boolean

search_sqlite(struct context_sqlite* context,

UA_Int64 unixSeconds, MatchStrategy strategy,

size_t *index)

{

*index = END_OF_DATA; // TODO

char* errorMessage;

char query[QUERY_BUFFER_SIZE];

strncpy(query, "SELECT Timestamp FROM PeriodicValues WHERE MeasuringPointID=", QUERY_BUFFER_SIZE);

strncat(query, context->measuringPointID, QUERY_BUFFER_SIZE);

strncat(query, " AND ", QUERY_BUFFER_SIZE);

switch (strategy)

{

case MATCH_EQUAL_OR_AFTER:

strncat(query, "Timestamp>='", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(unixSeconds), QUERY_BUFFER_SIZE);

strncat(query, "' ORDER BY Timestamp LIMIT 1", QUERY_BUFFER_SIZE);

break;

case MATCH_AFTER:

strncat(query, "Timestamp>'", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(unixSeconds), QUERY_BUFFER_SIZE);

strncat(query, "' ORDER BY Timestamp LIMIT 1", QUERY_BUFFER_SIZE);

break;

case MATCH_EQUAL_OR_BEFORE:

strncat(query, "Timestamp<='", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(unixSeconds), QUERY_BUFFER_SIZE);

strncat(query, "' ORDER BY Timestamp DESC LIMIT 1", QUERY_BUFFER_SIZE);

break;

case MATCH_BEFORE:

strncat(query, "Timestamp<'", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(unixSeconds), QUERY_BUFFER_SIZE);

strncat(query, "' ORDER BY Timestamp DESC LIMIT 1", QUERY_BUFFER_SIZE);

break;

default:

return false;

}

int res = sqlite3_exec(context->sqlite, query, timestamp_callback, index, &errorMessage);

if (res != SQLITE_OK)

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

return false;

}

else

{

return true;

}

}

static size_t

getDateTimeMatch_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId,

const UA_DateTime timestamp,

const MatchStrategy strategy)

{

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

UA_Int64 ts = UA_DateTime_toUnixTime(timestamp);

size_t result = END_OF_DATA;

UA_Boolean res = search_sqlite(context, ts, strategy, &result);

return result;

}

static size_t

resultSize_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId,

size_t startIndex,

size_t endIndex)

{

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

char* errorMessage;

size_t result = 0;

char query[QUERY_BUFFER_SIZE];

strncpy(query, "SELECT COUNT(*) FROM PeriodicValues WHERE ", QUERY_BUFFER_SIZE);

strncat(query, "(Timestamp>='", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(startIndex), QUERY_BUFFER_SIZE);

strncat(query, "') AND (Timestamp<='", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(endIndex), QUERY_BUFFER_SIZE);

strncat(query, "') AND (MeasuringPointID=", QUERY_BUFFER_SIZE);

strncat(query, context->measuringPointID, QUERY_BUFFER_SIZE);

strncat(query, ")", QUERY_BUFFER_SIZE);

int res = sqlite3_exec(context->sqlite, query, resultSize_callback, &result, &errorMessage);

if (res != SQLITE_OK)

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

return 0; // no data

}

return result;

}

static int copyDataValues_callback(void* result, int count, char **data, char **columns)

{

UA_DataValue dv;

UA_DataValue_init(&dv);

dv.status = UA_STATUSCODE_GOOD;

dv.hasStatus = true;

dv.sourceTimestamp = UA_DateTime_fromUnixTime(convertTimestampStringToUnixSeconds(data[0]));

dv.hasSourceTimestamp = true;

dv.serverTimestamp = dv.sourceTimestamp;

dv.hasServerTimestamp = true;

double value = strtod(data[1], NULL);

UA_Variant_setScalarCopy(&dv.value, &value, &UA_TYPES[UA_TYPES_DOUBLE]);

dv.hasValue = true;

context_copyDataValues* ctx = (context_copyDataValues*)result;

ctx->values[ctx->counter] = dv; // move dv into the output array; a deep copy here would leak dv.value

ctx->counter++;

if (ctx->counter == ctx->maxValues)

{

return 1;

}

else

{

return 0;

}

}

static UA_StatusCode

copyDataValues_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId,

size_t startIndex,

size_t endIndex,

UA_Boolean reverse,

size_t maxValues,

UA_NumericRange range,

UA_Boolean releaseContinuationPoints,

const UA_ByteString *continuationPoint,

UA_ByteString *outContinuationPoint,

size_t *providedValues,

UA_DataValue *values)

{

//NOTE: this demo does not support continuation points!!!

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

char* errorMessage;

const char* measuringPointID = context->measuringPointID; // same ID as the other queries use

char query[QUERY_BUFFER_SIZE];

strncpy(query, "SELECT Timestamp, Value FROM PeriodicValues WHERE ", QUERY_BUFFER_SIZE);

strncat(query, "(Timestamp>='", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(startIndex), QUERY_BUFFER_SIZE);

strncat(query, "') AND (Timestamp<='", QUERY_BUFFER_SIZE);

strncat(query, convertUnixSecondsToTimestampString(endIndex), QUERY_BUFFER_SIZE);

strncat(query, "') AND (MeasuringPointID=", QUERY_BUFFER_SIZE);

strncat(query, measuringPointID, QUERY_BUFFER_SIZE);

strncat(query, ")", QUERY_BUFFER_SIZE);

context_copyDataValues ctx;

ctx.maxValues = maxValues;

ctx.counter = 0;

ctx.values = values;

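int res = sqlite3_exec(context->sqlite, query, copyDataValues_callback, &ctx, &errorMessage);

if (providedValues != NULL)

*providedValues = ctx.counter; // report how many values were actually copied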

if (res != SQLITE_OK)

{

if (res == SQLITE_ABORT) // if reach maxValues, then request abort, so this is not error

{

sqlite3_free(errorMessage);

return UA_STATUSCODE_GOOD;

}

else

{

printf("%s | Error | %s\n", __func__, errorMessage);

sqlite3_free(errorMessage);

return UA_STATUSCODE_BADINTERNALERROR;

}

}

else

{

return UA_STATUSCODE_GOOD;

}

}

static const UA_DataValue*

getDataValue_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId,

size_t index)

{

struct context_sqlite* context = (struct context_sqlite*)hdbContext;

return NULL;

}

static UA_Boolean

boundSupported_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId)

{

return false; // We don't support returning bounds in this demo

}

static UA_Boolean

timestampsToReturnSupported_sqliteHDB(UA_Server *server,

void *hdbContext,

const UA_NodeId *sessionId,

void *sessionContext,

const UA_NodeId *nodeId,

const UA_TimestampsToReturn timestampsToReturn)

{

return true;

}

UA_HistoryDataBackend

UA_HistoryDataBackend_sqlite(const char* filename)

{

UA_HistoryDataBackend result;

memset(&result, 0, sizeof(UA_HistoryDataBackend));

result.serverSetHistoryData = &serverSetHistoryData_sqliteHDB;

result.resultSize = &resultSize_sqliteHDB;

result.getEnd = &getEnd_sqliteHDB;

result.lastIndex = &lastIndex_sqliteHDB;

result.firstIndex = &firstIndex_sqliteHDB;

result.getDateTimeMatch = &getDateTimeMatch_sqliteHDB;

result.copyDataValues = &copyDataValues_sqliteHDB;

result.getDataValue = &getDataValue_sqliteHDB;

result.boundSupported = &boundSupported_sqliteHDB;

result.timestampsToReturnSupported = &timestampsToReturnSupported_sqliteHDB;

result.deleteMembers = NULL; // We don't support deleting in this demo

result.getHistoryData = NULL; // We don't support the high level API in this demo

result.context = generateContext_sqlite(filename);

return result;

}

#endif
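The query functions in the header above assemble SQL with chains of strncat calls, each passed the full buffer size, and they format timestamps through a shared static buffer. As one possible alternative, the sketch below builds the same range query with a single bounded snprintf call and a caller-owned timestamp buffer. formatTimestamp and buildRangeQuery are hypothetical names that do not exist in the original header, and the measuring point ID is assumed to be an integer.

```c
#include <stdio.h>
#include <stdint.h>
#include <time.h>

/* Format Unix seconds as "YYYY-MM-DD HH:MM:SS" (UTC) into a caller-owned
 * buffer. Returns 0 on success, -1 if the time is invalid or the buffer is
 * too small. gmtime() is standard C but not thread-safe; on POSIX systems
 * gmtime_r() is the reentrant alternative. */
static int formatTimestamp(int64_t unixSeconds, char *out, size_t outSize)
{
    time_t t = (time_t)unixSeconds;
    const struct tm *tm = gmtime(&t);
    if (tm == NULL)
        return -1;
    return strftime(out, outSize, "%Y-%m-%d %H:%M:%S", tm) > 0 ? 0 : -1;
}

/* Build the range query used by the backend in one bounded call.
 * Returns 0 on success, -1 if the query does not fit into the buffer. */
static int buildRangeQuery(char *query, size_t querySize,
                           int measuringPointID,
                           int64_t startSeconds, int64_t endSeconds)
{
    char start[20];
    char end[20];
    if (formatTimestamp(startSeconds, start, sizeof(start)) != 0 ||
        formatTimestamp(endSeconds, end, sizeof(end)) != 0)
        return -1;

    int n = snprintf(query, querySize,
                     "SELECT Timestamp, Value FROM PeriodicValues "
                     "WHERE (Timestamp>='%s') AND (Timestamp<='%s') "
                     "AND (MeasuringPointID=%d)",
                     start, end, measuringPointID);
    /* snprintf returns the length it wanted to write; a value that reaches
     * querySize means the output was truncated. */
    return (n >= 0 && (size_t)n < querySize) ? 0 : -1;
}
```

A call such as buildRangeQuery(query, sizeof(query), 1, startIndex, endIndex) could stand in for the strncat chain in copyDataValues_sqliteHDB. If values ever come from outside the program, prepared statements with sqlite3_prepare_v2 and the sqlite3_bind_* functions are the safer choice than formatting them into the SQL text.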

3 Usage

cd into the build directory and run the following to build:

cmake .. && make

When the build finishes, run the server:

./server

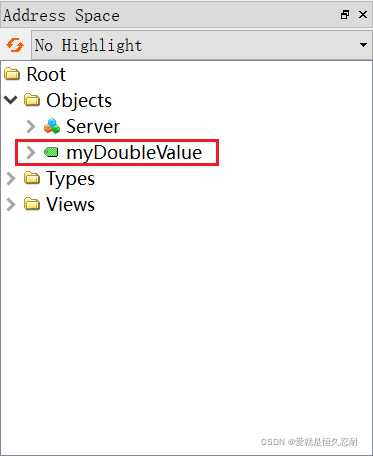

Then open UaExpert and connect to the server. After a successful connection it looks like this:

Because the backend in this article does not support bound values, UaExpert has to be configured to turn bound values off. See this article for how to do so.

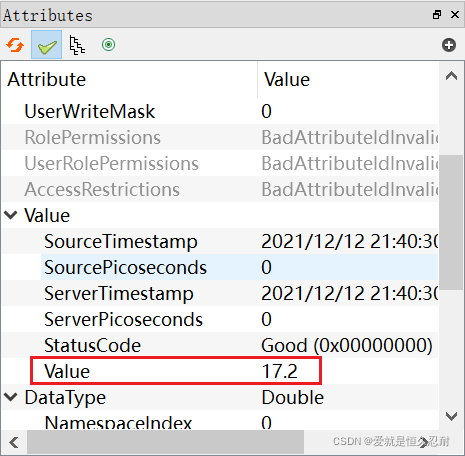

Click myDoubleValue, then edit its value in the Attributes pane on the right, changing it to 18.2, then 19.2, then 20.2, and finally 15.2:

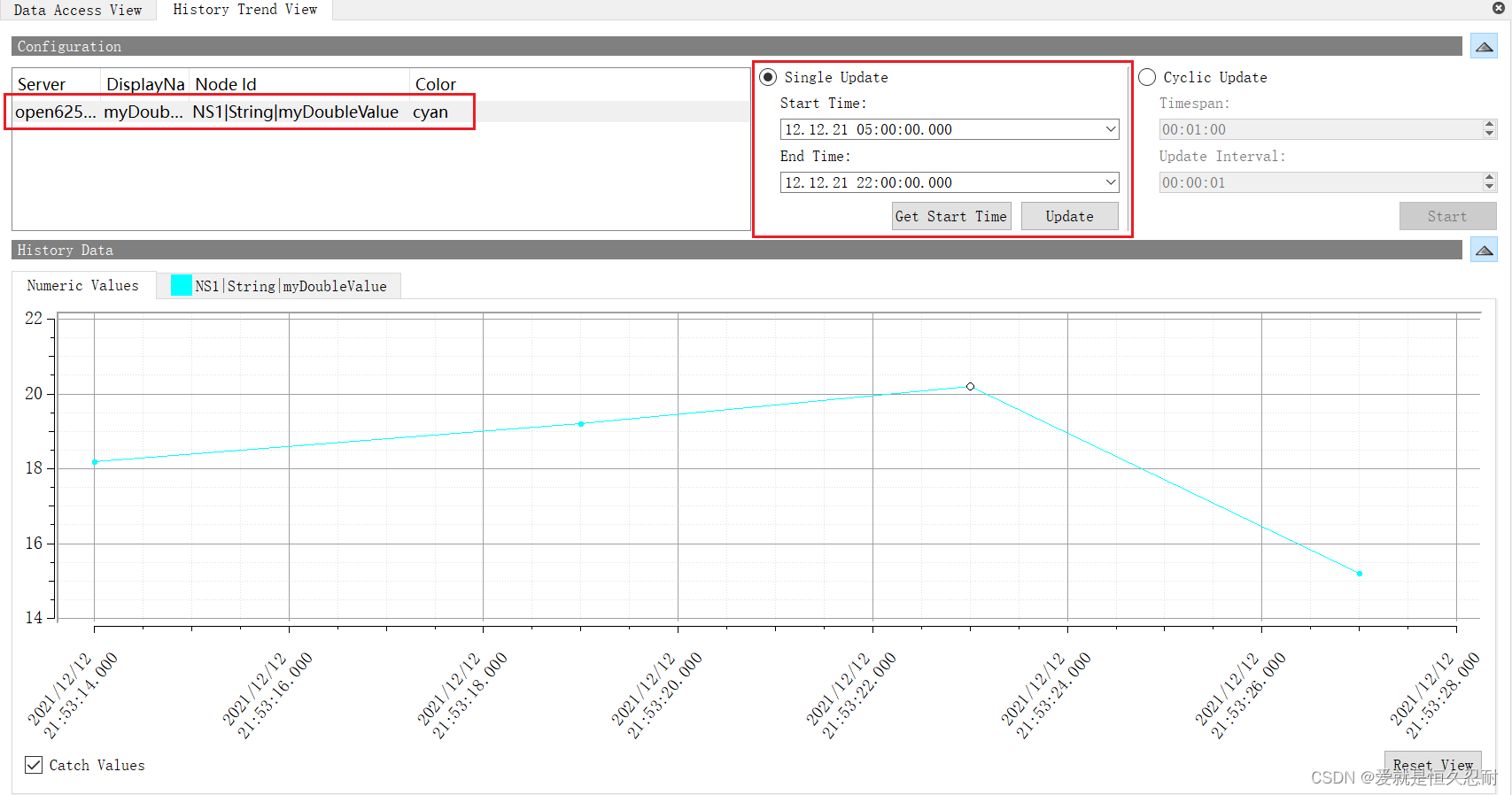

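Afterwards, open the History Trend View, drag the myDoubleValue node into it, and click Update. Pay attention to the Start Time and End Time, which must cover the moment the values were changed. The result looks like this: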

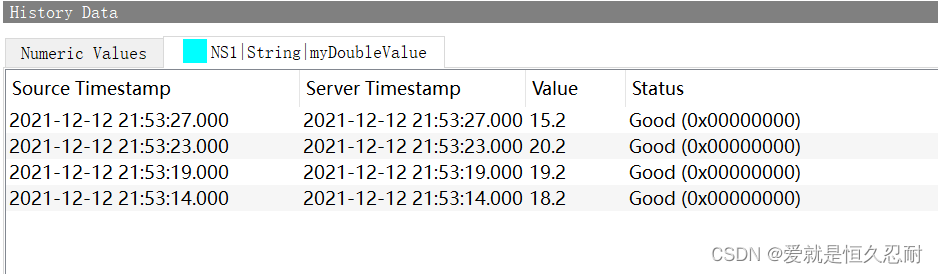

The trend shows exactly the values written earlier. Click the NS1|String|myDoubleValue tab to the right of Numeric Values to see the history data that was received:

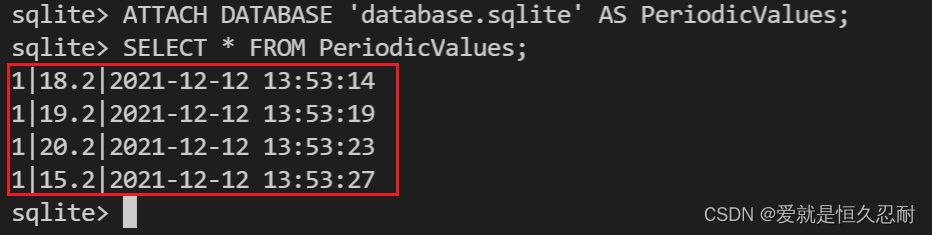

Finally, look at the data in the sqlite3 database. The database created by server.c is named database.sqlite, and the backend in this article creates the table PeriodicValues in it.

To inspect it, add the last two lines below to CMakeLists.txt so that the sqlite3 command-line shell is built as well:

cmake_minimum_required(VERSION 3.5)

project(historicalSqlite3)

include_directories(open62541)

include_directories(sqlite)

set(source main/server.c open62541/open62541.c sqlite/sqlite3.c)

add_executable(server ${source})

target_link_libraries (server pthread dl)

add_executable(sqliteShell sqlite/shell.c sqlite/sqlite3.c)

target_link_libraries (sqliteShell pthread dl)

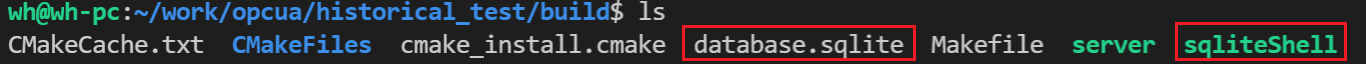

After rebuilding, sqliteShell is generated in the same directory as database.sqlite:

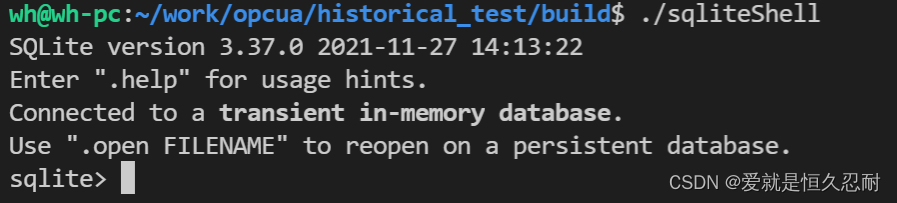

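Run sqliteShell, enter ATTACH DATABASE 'database.sqlite' AS PeriodicValues; and press Enter, then enter SELECT * FROM PeriodicValues; and press Enter. The query lists the stored rows, and the stored history matches the values written earlier.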

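Note that the code deletes the existing data every time it sets up the database, through the DROP TABLE IF EXISTS statement in generateContext_sqlite(). To keep the earlier data, change that SQL statement.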
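As a rough illustration of that change, the sketch below returns either the original schema SQL or a variant that keeps existing rows. schemaSql and its keepExistingData flag are hypothetical and not part of the original header. The returned string would be passed to sqlite3_exec in place of the hard-coded sql variable.

```c
/* Hypothetical helper: choose the schema SQL to run when the backend
 * context is created. */
static const char *schemaSql(int keepExistingData)
{
    if (keepExistingData) {
        /* No DROP TABLE, and IF NOT EXISTS makes the statement safe to run
         * on every start, so rows from earlier runs survive. */
        return "CREATE TABLE IF NOT EXISTS PeriodicValues("
               "MeasuringPointID INT, Value DOUBLE, "
               "Timestamp DATETIME DEFAULT CURRENT_TIMESTAMP);";
    }
    /* Behaviour of the original code: start from an empty table each run. */
    return "DROP TABLE IF EXISTS PeriodicValues;"
           "CREATE TABLE PeriodicValues("
           "MeasuringPointID INT, Value DOUBLE, "
           "Timestamp DATETIME DEFAULT CURRENT_TIMESTAMP);";
}
```

Keeping old rows also means that history reads can return values recorded by earlier server runs, which may or may not be what a given setup wants.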

4 Conclusion

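This article shows how to record historical data with SQLite3, and it covers a single node only. For multiple nodes, one option is to add a column to the table that stores each node's NodeId so the rows can be told apart. That is only my suggestion, and there are surely other approaches.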
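For the NodeId column suggested above, each node needs a stable text key. A minimal sketch, assuming string-identifier nodes such as the myDoubleValue node from server.c and the common ns=<namespace>;s=<identifier> notation for NodeIds. nodeKeyFromString is a hypothetical helper, not an open62541 function.

```c
#include <stdio.h>
#include <stdint.h>

/* Hypothetical helper: write a key such as "ns=1;s=myDoubleValue" for a
 * string-identifier NodeId into a caller-owned buffer.
 * Returns 0 on success, -1 if the key does not fit. */
static int nodeKeyFromString(uint16_t namespaceIndex, const char *identifier,
                             char *out, size_t outSize)
{
    int n = snprintf(out, outSize, "ns=%u;s=%s",
                     (unsigned)namespaceIndex, identifier);
    /* A return value that reaches outSize means the key was truncated. */
    return (n >= 0 && (size_t)n < outSize) ? 0 : -1;
}
```

The key could be stored in an extra TEXT column and used in the WHERE clause in place of MeasuringPointID. Since it contains arbitrary identifier text, binding it with sqlite3_bind_text is preferable to pasting it into the SQL string.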

Most of the work comes down to SQL statements. I am not very familiar with SQL myself, so going deeper means learning SQL properly.

In the same way, SQLite3 can be swapped for another database.