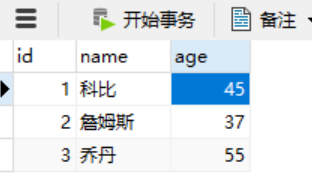

1. person table

2. score table

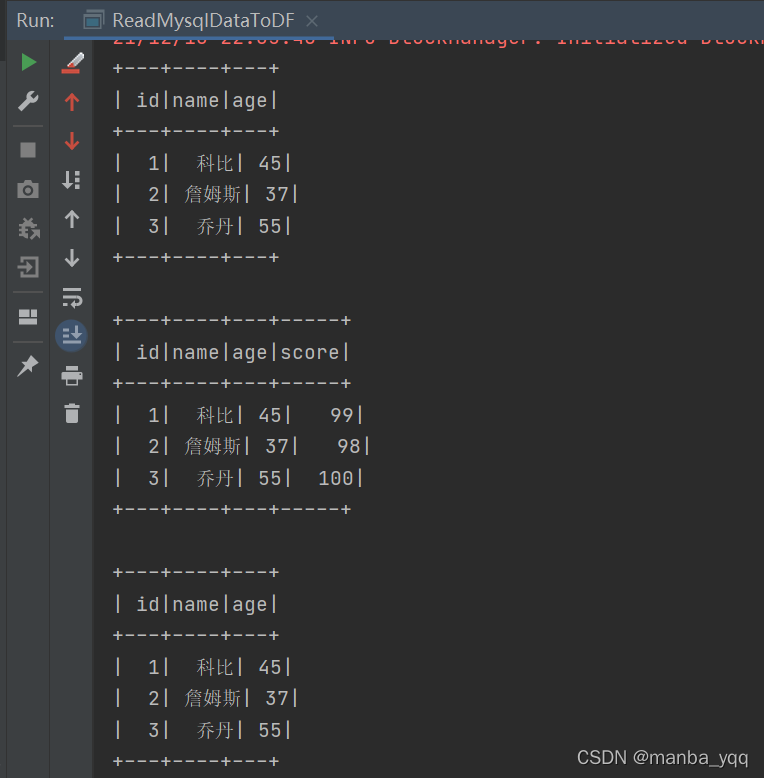

3. Scala code

package sparkSql

import org.apache.spark.sql.{DataFrame, DataFrameReader, SaveMode, SparkSession}

import java.util.Properties

/**

* @Author yqq

* @Date 2021/12/13 21:29

* @Version 1.0

*/

object ReadMysqlDataToDF {

def main(args: Array[String]): Unit = {

// spark.sql.shuffle.partitions (default 200): the number of partitions used when a shuffle occurs in the underlying Spark job

val session = SparkSession.builder().config("spark.sql.shuffle.partitions",1).master("local").appName("ttt").getOrCreate()

session.sparkContext.setLogLevel("Error")

val url = "jdbc:mysql://node1/spark?serverTimezone=GMT%2B8&useSSL=false"

/**

* Approach 1: read.jdbc(url, table, properties)

*/

val p = new Properties()

p.setProperty("user","root")

p.setProperty("password","123456")

val people: DataFrame = session.read.jdbc(url, "person", p)

//val people: DataFrame = session.read.jdbc("jdbc:mysql://node1/spark?serverTimezone=GMT%2B8&useSSL=false", "(select p.id,p.name,p.age,s.score FROM person p,score s where s.id=p.id) T", p)

people.show()

people.createTempView("peopleT")

/**

* Approach 2: format("jdbc") with an options Map

*/

val map = Map[String, String](

"user"->"root",

"password"->"123456",

"url"->url,

"driver"->"com.mysql.jdbc.Driver",

"dbtable"->"score"

)

val score: DataFrame = session.read.format("jdbc").options(map).load()

// score.show()

score.createTempView("scoreT")

// When reading a join query from MySQL, the query must be wrapped and given an alias for Spark SQL to read it

val frame: DataFrame = session.sql(

"""

|select p.id,p.name,p.age,s.score FROM peopleT p,scoreT s where s.id=p.id

|""".stripMargin)

frame.show()

// Save the data to the MySQL server

frame.write.mode(SaveMode.Append).jdbc(url,"result",p)

/**

* Approach 3: format("jdbc") with chained option calls

*/

val reader: DataFrameReader = session.read.format("jdbc")

.option("user", "root")

.option("password", "123456")

.option("url", url)

.option("driver", "com.mysql.jdbc.Driver")

.option("dbtable", "person")

val person: DataFrame = reader.load()

person.show()

}

}
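The comment about join queries and the commented-out `read.jdbc` call in the program both rely on the same behaviour. On the read path, the table argument (or the `dbtable` option) is used where a table name would appear in a FROM clause, so a full SELECT has to be wrapped in parentheses and given an alias. Below is a minimal sketch of that wrapping. The object `JdbcSubquery` and the helper `asDerivedTable` are hypothetical names introduced for illustration and are not part of Spark.

```scala
// Hypothetical helper (not part of Spark): wraps a SELECT statement as an
// aliased derived table so it can be passed where a table name is expected.
object JdbcSubquery {

  def asDerivedTable(query: String, alias: String): String = {
    // Drop surrounding whitespace and a trailing semicolon, which would be
    // invalid inside the parentheses.
    val trimmed = query.trim.stripSuffix(";").trim
    s"($trimmed) $alias"
  }

  def main(args: Array[String]): Unit = {
    val joinQuery =
      "select p.id,p.name,p.age,s.score FROM person p,score s where s.id=p.id"
    // Print the string that would be passed as the table argument.
    println(asDerivedTable(joinQuery, "T"))
  }
}
```

With the `session`, `url` and `p` values from the program above, the result could then be passed as `session.read.jdbc(url, JdbcSubquery.asDerivedTable(joinQuery, "T"), p)`. In that case MySQL performs the join, and Spark only reads the joined rows.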

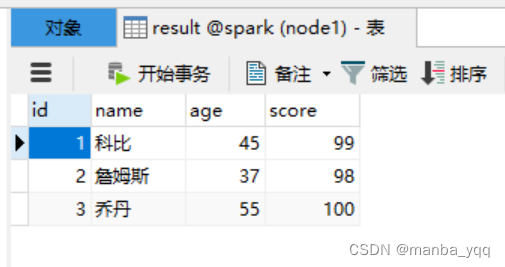

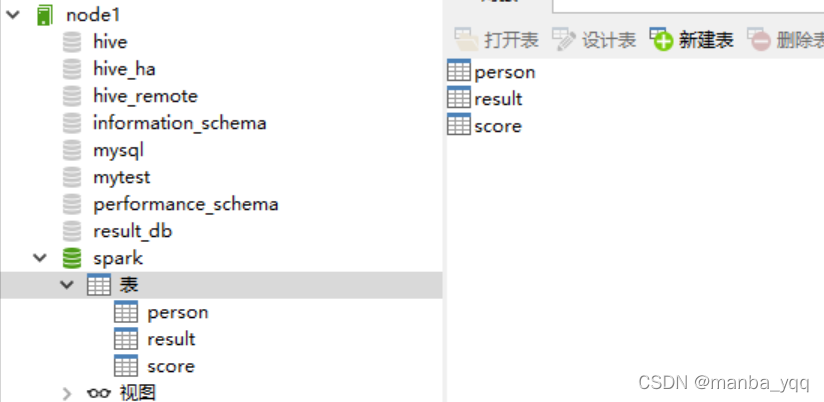

4. Data written to the result table in MySQL