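1. Install the JDK

1.1 Download the JDK (the archive also includes the Hadoop installation package; no points required)

hadoop+jdk.zip - Hadoop document resources - CSDN download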

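--1.2 Extract the JDK

[root@localhost opt]# tar zxf jdk-8u181-linux-x64.tar.gz

--1.3 Rename

[root@localhost opt]# mv jdk-8u181-linux-x64 jdk8

(If the extracted directory has a different name, such as jdk1.8.0_181, use that name in the mv command instead.)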

--1.4 Set the environment variables

[root@localhost opt]# vim /etc/profile

If vim is not installed, vi /etc/profile works as well.

--Install vim

[root@localhost opt]# yum install vim

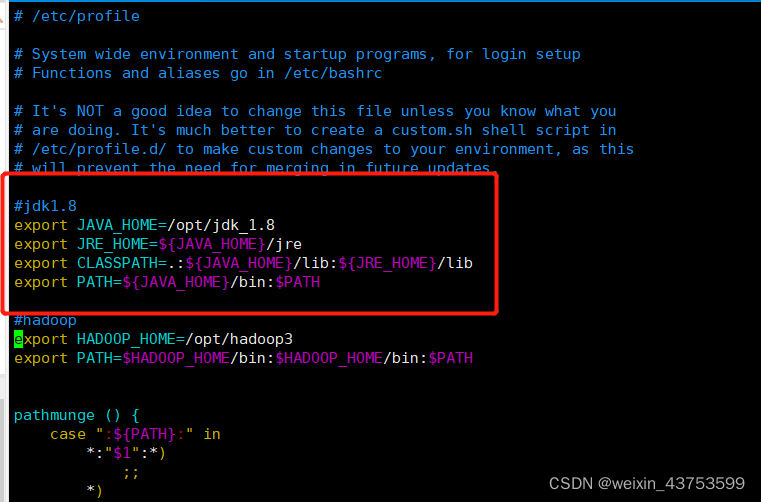

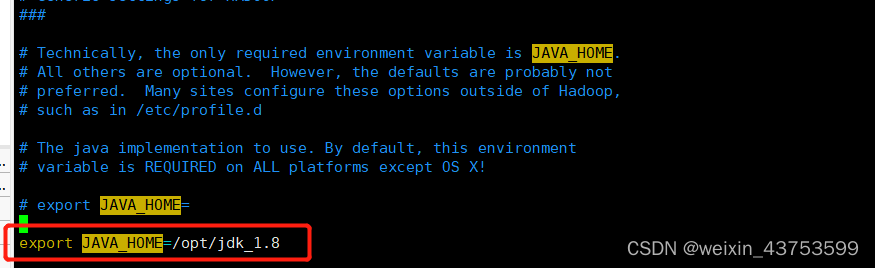

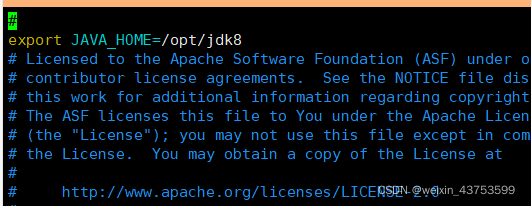

Add the following at the top of /etc/profile:

#jdk1.8

export JAVA_HOME=/opt/jdk8

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

As shown in the figure:

After pasting, press Esc, then Shift + : (to type a colon).

Then type wq (w: write, q: quit). wq! forces the write and quits.

Finally, press Enter.

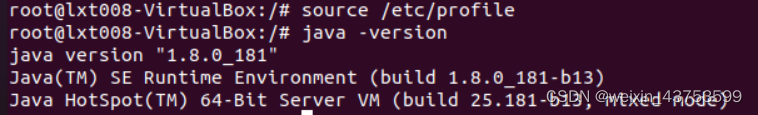
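If you would rather not edit the file by hand, the same lines can be added from the shell. This is only a minimal sketch: add_jdk_env is a hypothetical helper name, /opt/jdk8 is the directory from step 1.3, and it appends to the end of the file rather than the top, which should behave the same for plain export lines.

```shell
# Sketch: append the JDK variables to a profile file without opening an editor.
# add_jdk_env is a hypothetical helper, not part of any tool.
add_jdk_env() {
    profile="$1"
    java_home="$2"
    # Skip if a JAVA_HOME export is already there, so reruns do not duplicate it.
    if grep -q '^export JAVA_HOME=' "$profile" 2>/dev/null; then
        return 0
    fi
    # Unquoted EOF: ${java_home} is expanded now, the escaped \$ stay literal.
    cat >> "$profile" <<EOF
#jdk1.8
export JAVA_HOME=${java_home}
export JRE_HOME=\${JAVA_HOME}/jre
export CLASSPATH=.:\${JAVA_HOME}/lib:\${JRE_HOME}/lib
export PATH=\${JAVA_HOME}/bin:\$PATH
EOF
}

# Try it on a scratch file first.
scratch="$(mktemp)"
add_jdk_env "$scratch" /opt/jdk8
add_jdk_env "$scratch" /opt/jdk8   # second call changes nothing
cat "$scratch"
```

Once the scratch output looks right, the same call with /etc/profile as the first argument writes the real file (as root).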

1.5 Apply the configuration without rebooting

[root@localhost opt]# source /etc/profile

1.6 Verify that the JDK is installed

[root@localhost opt]# java -version
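If java -version reports "command not found", the usual cause is a JAVA_HOME that does not match the real directory. A small check along these lines can narrow it down; check_jdk is a hypothetical helper, and /opt/jdk8 is assumed only as a fallback when JAVA_HOME is unset.

```shell
# Sketch: confirm that the directory in JAVA_HOME really contains bin/java.
check_jdk() {
    java_home="$1"
    if [ -x "$java_home/bin/java" ]; then
        echo "ok: $java_home/bin/java is executable"
    else
        echo "missing: $java_home/bin/java" >&2
        return 1
    fi
}

# Falls back to /opt/jdk8 (the path used in this article) when JAVA_HOME is unset.
check_jdk "${JAVA_HOME:-/opt/jdk8}" || echo "JDK not found; re-check steps 1.2 to 1.5"
```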

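2. Install Hadoop

2.1 Extract hadoop.tar.gz

"z" decompresses (gzip), "x" unpacks the archive, "v" lists each file as it is extracted, and "f" names the archive file.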

[root@localhost opt]# tar -zxvf hadoop-3.1.3.tar.gz
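To see what each flag does without touching the real archives, you can build a tiny throwaway archive and unpack it. Everything below lives in a temporary directory; the demo-1.0 name is made up for illustration.

```shell
# Build a small throwaway archive, then unpack it with the same flags as above.
work="$(mktemp -d)"
mkdir -p "$work/src/demo-1.0"
echo "hello" > "$work/src/demo-1.0/README.txt"

# c = create, z = gzip, f = archive file name; -C changes directory first.
tar -czf "$work/demo-1.0.tar.gz" -C "$work/src" demo-1.0

# t = list the contents without extracting; handy for seeing the
# top-level directory name before the rename step.
tar -tzf "$work/demo-1.0.tar.gz"

# x = extract, z = gunzip, v = verbose, f = archive file name.
mkdir -p "$work/out"
tar -zxvf "$work/demo-1.0.tar.gz" -C "$work/out"
```

Running tar -tzf on the JDK or Hadoop archive in the same way shows which directory name the mv command should use.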

2.2 Rename

[root@localhost opt]# mv hadoop-3.1.3 hadoop3

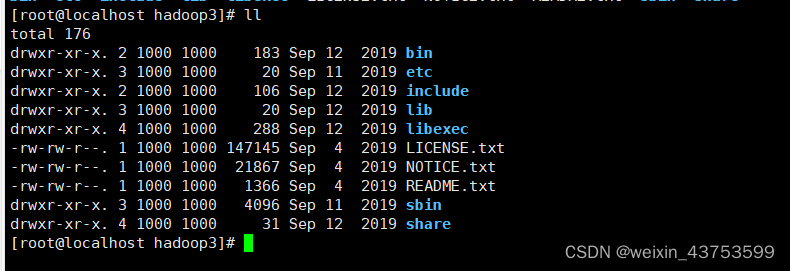

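2.3 Look at the Hadoop directory:

[root@localhost opt]# cd hadoop3

[root@localhost hadoop3]# ll

Important directories:

(1) bin: scripts for operating Hadoop services (HDFS, YARN)

(2) etc: the Hadoop configuration directory, holding Hadoop's configuration files

(3) lib: Hadoop's native libraries (used for compressing and decompressing data)

(4) sbin: scripts that start or stop Hadoop services

(5) share: Hadoop's dependency jars, documentation, and official examples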

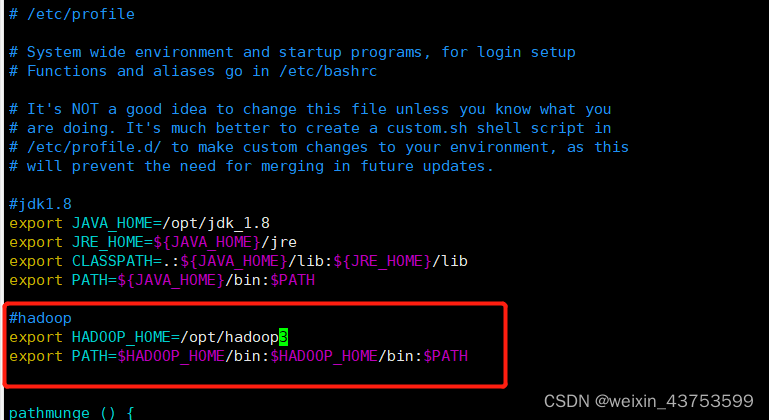

2.4 Configure the Hadoop environment variables in /etc/profile

[root@localhost hadoop3]# vim /etc/profile

Note: /opt/hadoop3 is the path where you installed Hadoop; use your own.

#hadoop

export HADOOP_HOME=/opt/hadoop3

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
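The start and stop scripts used later (start-dfs.sh, start-yarn.sh and so on) live in sbin, so both bin and sbin need to be on PATH. After running source /etc/profile, a quick check like this one can confirm it; path_contains is a hypothetical helper and /opt/hadoop3 is assumed only when HADOOP_HOME is unset.

```shell
# Sketch: report whether Hadoop's bin and sbin directories are on PATH.
path_contains() {
    # $1: directory to look for, $2: a PATH-style colon-separated list
    case ":$2:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

HADOOP_HOME="${HADOOP_HOME:-/opt/hadoop3}"
for d in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    if path_contains "$d" "$PATH"; then
        echo "on PATH: $d"
    else
        echo "not on PATH: $d"
    fi
done
```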

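2.4 Point Hadoop at the JDK

--Enter the Hadoop configuration directory

[root@localhost hadoop]# cd /opt/hadoop3/etc/hadoop/

[root@localhost hadoop]# vim hadoop-env.sh

--In hadoop-env.sh, find the line that sets JAVA_HOME

Press Esc, then type /JAVA_HOME and press Enter to search.

export JAVA_HOME=/opt/jdk8

# I run everything as root. The HDFS_* and YARN_* user variables below are there because of Error 1 further down.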

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export HDFS_SECONDARYNAMENODE_USER=root
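Before starting anything, it can help to confirm that hadoop-env.sh defines every user variable the start scripts ask for when run as root. The following is a rough sketch: missing_user_vars is a hypothetical helper, and it only looks for plain "export NAME=" lines like the ones above.

```shell
# Sketch: list the *_USER variables that an env file does not export yet.
missing_user_vars() {
    env_file="$1"
    for v in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
        grep -q "^export $v=" "$env_file" || echo "$v"
    done
}

# Demo on a scratch file that defines only two of the five variables.
scratch_env="$(mktemp)"
printf 'export HDFS_NAMENODE_USER=root\nexport HDFS_DATANODE_USER=root\n' > "$scratch_env"
missing_user_vars "$scratch_env"
```

On a real install the argument would be /opt/hadoop3/etc/hadoop/hadoop-env.sh; empty output means nothing is missing.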

Error 1 (found later, when starting the services):

[root@localhost hadoop]# start-dfs.sh

Starting namenodes on [master]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [master]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

[root@localhost hadoop]# start-yarn.sh

Starting resourcemanager

ERROR: Attempting to operate on yarn resourcemanager as root

ERROR: but there is no YARN_RESOURCEMANAGER_USER defined. Aborting operation.

Starting nodemanagers

ERROR: Attempting to operate on yarn nodemanager as root

ERROR: but there is no YARN_NODEMANAGER_USER defined. Aborting operation.

The figure below shows where I added them:

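2.5 Configure core-site.xml

[root@localhost hadoop]# vim core-site.xml

Add the following properties inside the file's existing <configuration> element:

<!-- Address of the HDFS NameNode; replace hadoop101 with your own hostname -->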

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop101:9000</value>

</property>

<!-- Directory where Hadoop stores the files it generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-3.1.3/data/tmp</value>

</property>

2.6 Configure hdfs-site.xml

[root@localhost hadoop]# vim hdfs-site.xml

<!-- Number of HDFS replicas -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

3. Start the cluster (format the NameNode first)

[root@localhost hadoop]# cd /opt/hadoop3

[root@localhost hadoop]# ls

bin  include  libexec      logs        README.txt  share    wcouput

etc  lib      LICENSE.txt  NOTICE.txt  sbin        wcinput

[root@localhost hadoop]# bin/hdfs namenode -format

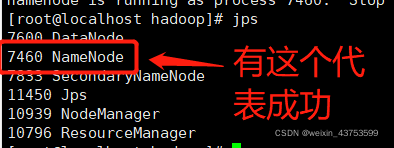

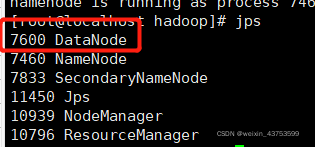
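Formatting is a one-time step. Running it again on a node that already holds data wipes the NameNode metadata and gives it a new cluster ID, which existing DataNodes will then refuse to join. A guard like the sketch below can tell you whether a format has already happened; namenode_formatted is a hypothetical helper, and it assumes the default layout where the NameNode keeps its metadata under hadoop.tmp.dir in dfs/name.

```shell
# Sketch: detect whether the NameNode directory has been formatted before.
namenode_formatted() {
    # $1: the hadoop.tmp.dir value from core-site.xml.
    # Assumes the default dfs.namenode.name.dir (${hadoop.tmp.dir}/dfs/name),
    # where formatting creates current/VERSION.
    [ -f "$1/dfs/name/current/VERSION" ]
}

tmp_dir="/opt/module/hadoop-3.1.3/data/tmp"   # value used in this article
if namenode_formatted "$tmp_dir"; then
    echo "already formatted; skip 'hdfs namenode -format'"
else
    echo "not formatted yet; run 'bin/hdfs namenode -format' once"
fi
```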

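3.1 Start the NameNode and DataNode

[root@localhost hadoop]# hdfs --daemon start namenode

[root@localhost hadoop]# hdfs --daemon start datanode

Note: jps is a JDK command, not a Linux command. It is not available unless the JDK is installed.

# Stop the firewall

--After starting, the firewall must be stopped before the web UI can be reached from an external browser

[root@localhost opt]# systemctl stop firewalld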
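jps prints one line per running Java process in the form "pid ClassName", so its output can be scanned for the daemons you expect. A minimal sketch, with missing_daemons as a hypothetical helper and a made-up sample listing standing in for real jps output:

```shell
# Sketch: print the names of expected daemons that a jps listing lacks.
missing_daemons() {
    # $1: text in jps format ("<pid> <ClassName>" per line)
    # remaining arguments: daemon names to look for
    listing="$1"
    shift
    for name in "$@"; do
        printf '%s\n' "$listing" \
            | awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }' \
            || echo "$name"
    done
}

# Made-up sample: the NameNode is up, the DataNode is not.
sample='2101 NameNode
2245 Jps'
missing_daemons "$sample" NameNode DataNode
```

On the real machine the first argument would be "$(jps)"; empty output means every listed daemon is running.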

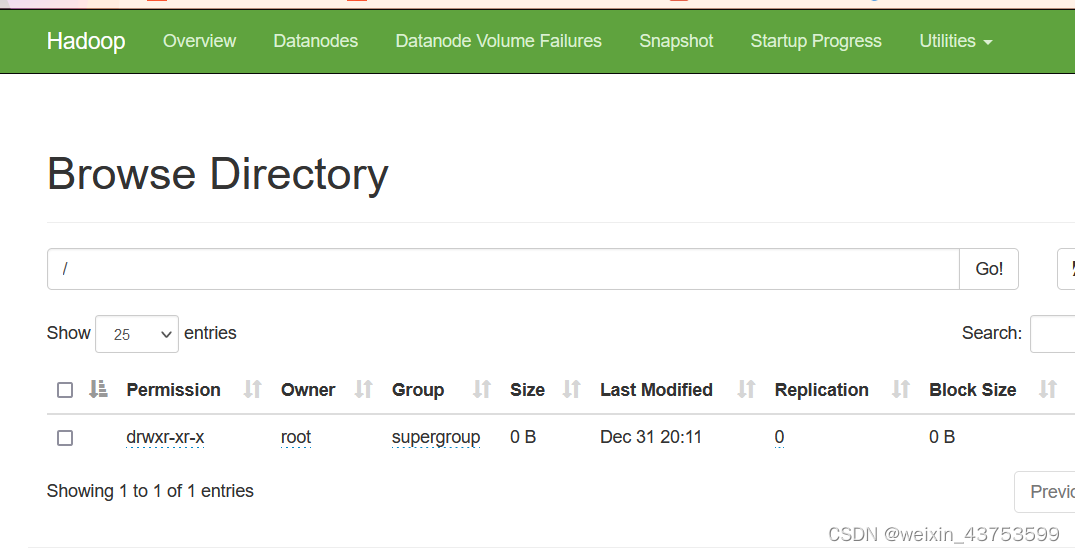

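3.2 Browse the HDFS file system in the web UI

In a browser, open: http://192.168.118.12:9870/explorer.html#/

Here 192.168.118.12 is the IP of the Linux machine (I made this IP up, so there is no point trying to visit it, haha ^^)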

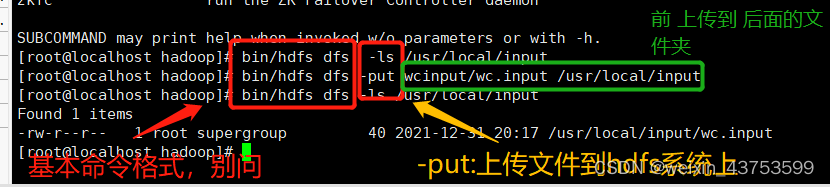

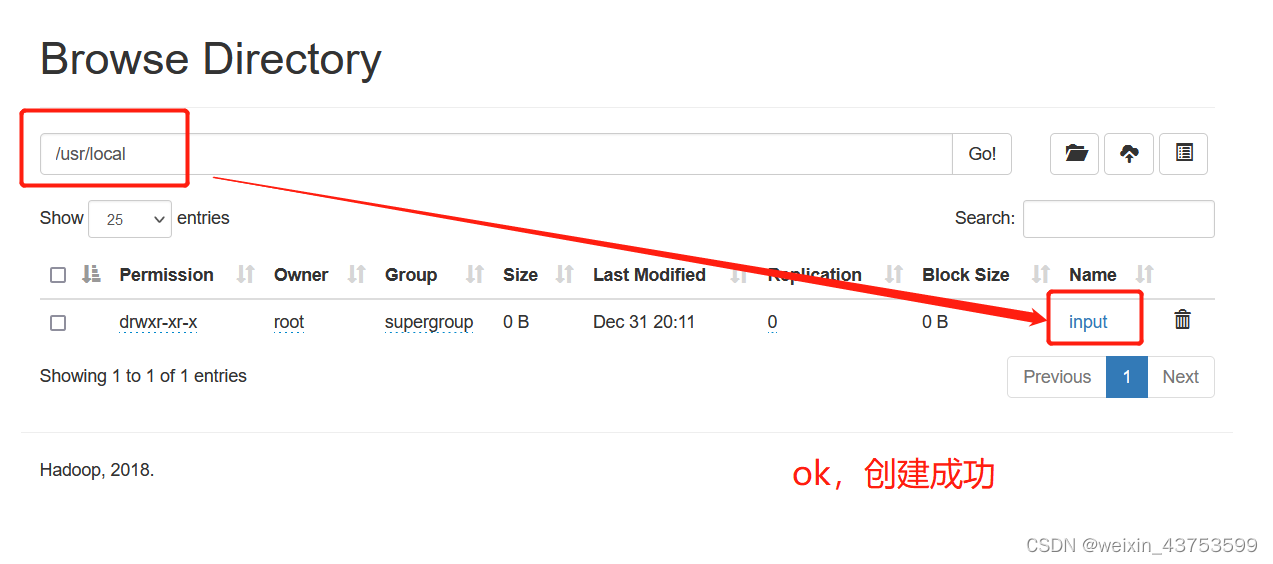

3.3 Verify (create an input directory on the HDFS file system)

[root@localhost hadoop]# bin/hdfs dfs -mkdir -p /usr/local/input

Sign of success:

Run a MapReduce program

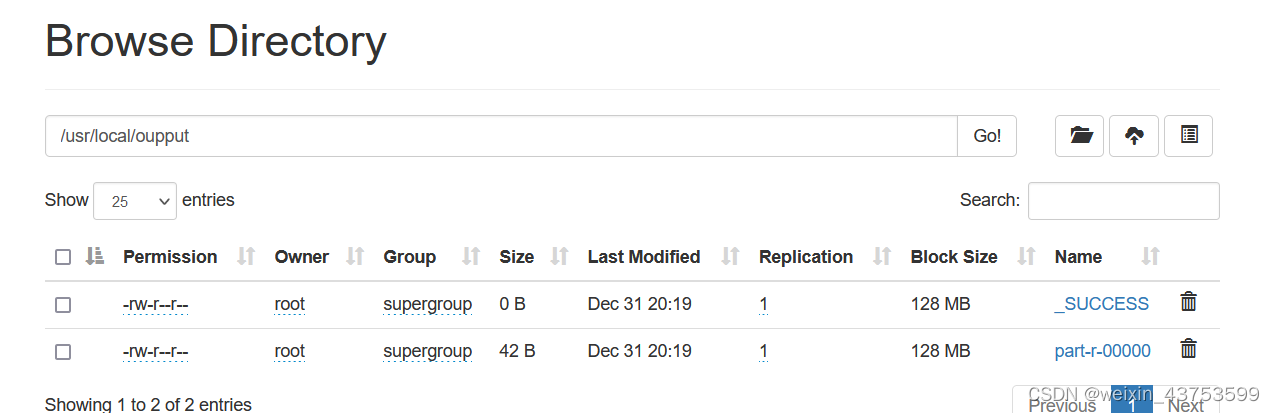

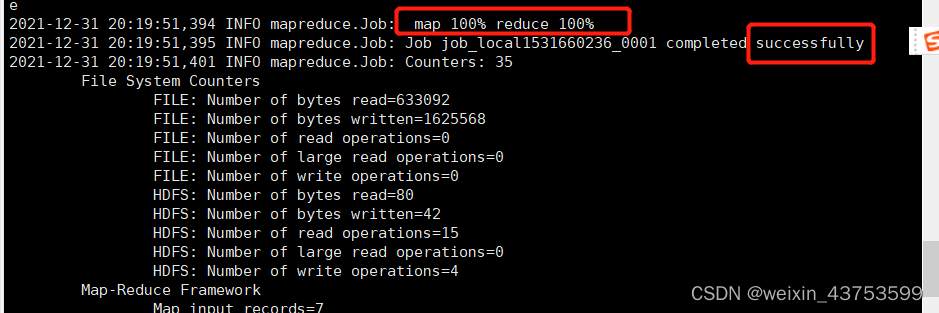

[root@localhost hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /usr/local/input /usr/local/output

The figure below indicates success:

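The wordcount example writes one "word, tab, count" line per distinct word, sorted by word. As a cross-check, the same counts can be produced locally with standard tools. This is only a rough local equivalent for small files, not how the job itself works; local_wordcount and the sample text are made up for illustration.

```shell
# Sketch: a local stand-in for the wordcount example, for comparing results.
local_wordcount() {
    # Split on whitespace, one word per line, then count identical words.
    tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | sort | uniq -c \
        | awk '{ print $2 "\t" $1 }'
}

sample_txt="$(mktemp)"
printf 'hadoop yarn\nhadoop hdfs\n' > "$sample_txt"
local_wordcount "$sample_txt"

# On the cluster (not run here), the job reads whatever was uploaded, e.g.
#   bin/hdfs dfs -put wcinput/* /usr/local/input
# and its result can be read back with
#   bin/hdfs dfs -cat /usr/local/output/part-r-00000
```

Note that the job fails if /usr/local/output already exists, so remove it (bin/hdfs dfs -rm -r /usr/local/output) before running the example a second time.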

4. Configure YARN

4.1 Configure yarn-env.sh

[root@localhost hadoop]# cd etc/hadoop/

[root@localhost hadoop]# vim yarn-env.sh

export JAVA_HOME=/opt/jdk8

4.2 Configure yarn-site.xml

[root@localhost hadoop]# vim yarn-site.xml

<configuration>

<!-- How reducers fetch data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Address of the YARN ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>localhost</value>

</property>

<!-- HTTP address of the YARN ResourceManager web UI -->

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>0.0.0.0:8088</value>

</property>

</configuration>
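After editing several XML files it is easy to lose track of which value ended up where. The sketch below reads a single property back from a config file; get_prop is a hypothetical helper, and it assumes the layout used in this article, with <name> and <value> on separate lines.

```shell
# Sketch: print the <value> that follows a given <name> in a Hadoop XML file.
get_prop() {
    file="$1"
    name="$2"
    awk -v want="$name" '
        index($0, "<name>" want "</name>") { found = 1; next }
        found && match($0, /<value>[^<]*<\/value>/) {
            # Strip the 7-character <value> and 8-character </value> tags.
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$file"
}

# Demo on a scratch copy of two of the properties above.
scratch_xml="$(mktemp)"
cat > "$scratch_xml" <<'EOF'
<configuration>
<property>
<name>yarn.nodemanager.aux-services</name>
<value>mapreduce_shuffle</value>
</property>
<property>
<name>yarn.resourcemanager.hostname</name>
<value>localhost</value>
</property>
</configuration>
EOF
get_prop "$scratch_xml" yarn.resourcemanager.hostname
```

For HDFS settings on a working install, hdfs getconf -confKey fs.defaultFS reports the value Hadoop actually loaded, which is the more reliable check.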

4.3 Configure mapred-env.sh

[root@localhost hadoop]# vim mapred-env.sh

export JAVA_HOME=/opt/jdk8

4.4 Configure mapred-site.xml

[root@localhost hadoop]# vim mapred-site.xml

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

4.5 Start the ResourceManager and NodeManager

[root@localhost hadoop]# yarn --daemon start resourcemanager

[root@localhost hadoop]# yarn --daemon start nodemanager

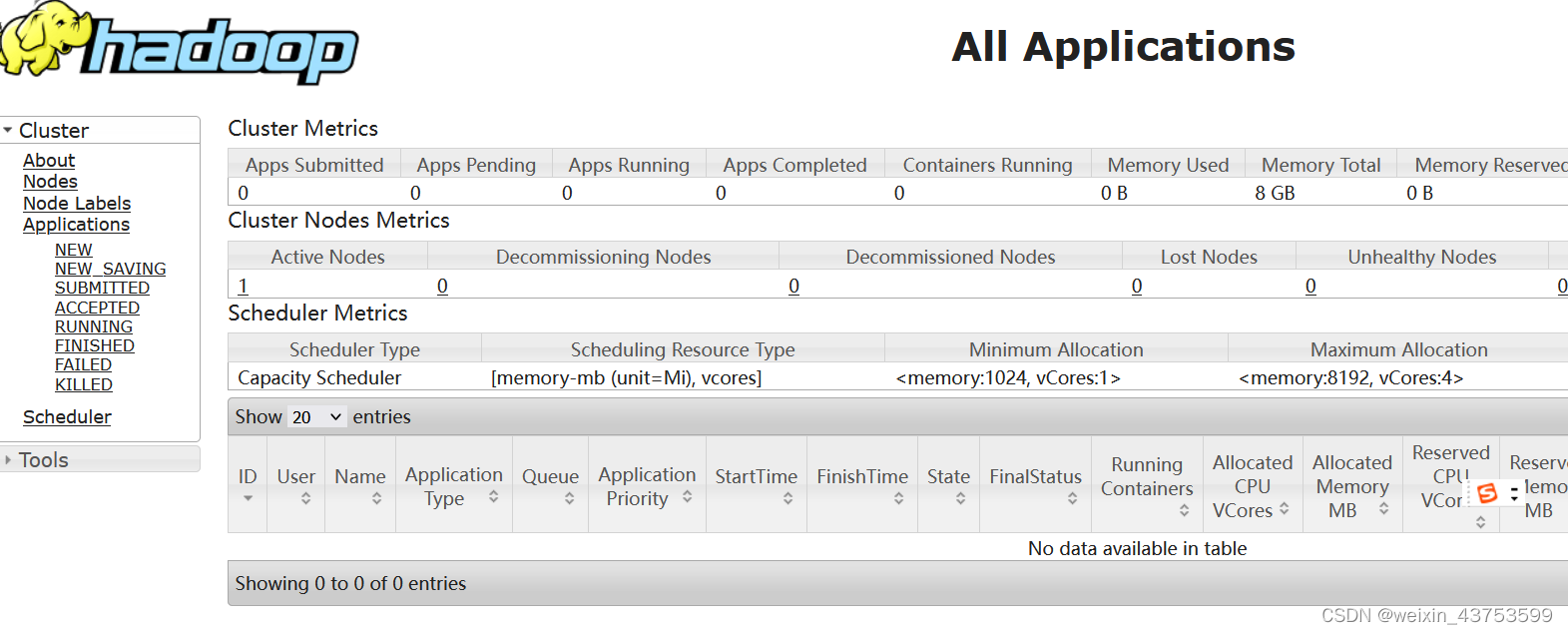

4.6 View YARN in the web UI

In a browser, open: http://192.168.118.12:8088

Here 192.168.118.12 is a made-up IP, so there is no point trying to visit it, haha ^^

Finally:

Start Hadoop  --->  start-all.sh

Stop Hadoop  --->  stop-all.sh