I. HDFS high availability

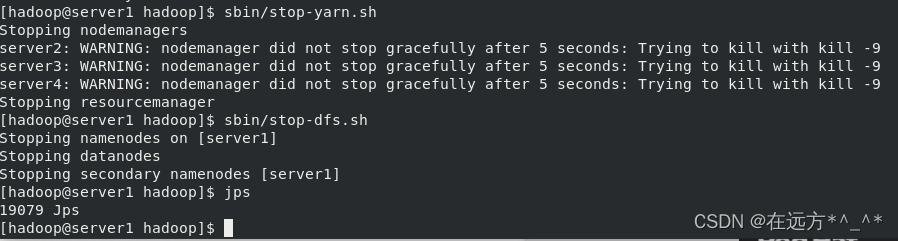

[hadoop@server1 hadoop]$ sbin/stop-yarn.sh

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

[hadoop@server1 hadoop]$ jps

19079 Jps

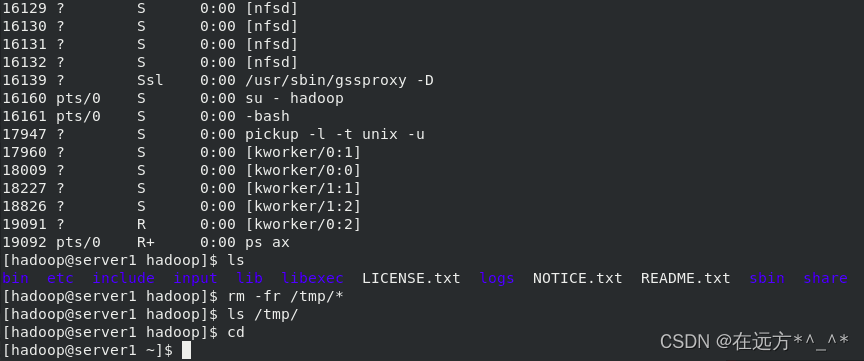

[hadoop@server1 hadoop]$ ps ax

[hadoop@server1 hadoop]$ ls

bin etc include input lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

[hadoop@server1 hadoop]$ rm -fr /tmp/*

[hadoop@server1 hadoop]$ ls /tmp/

The commands above stop the services and delete the files under /tmp/.

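Note: the files under /tmp/ must be deleted first. By default the existing NameNode and DataNode data lives under /tmp/hadoop-hadoop, and leftovers from the previous setup would clash with the freshly formatted HA cluster.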

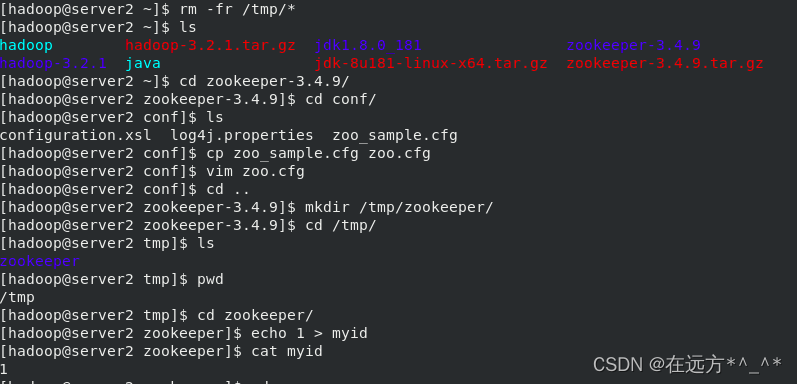

[hadoop@server2 ~]$ rm -fr /tmp/*

[hadoop@server2 ~]$ ls

hadoop hadoop-3.2.1.tar.gz jdk1.8.0_181 zookeeper-3.4.9

hadoop-3.2.1 java jdk-8u181-linux-x64.tar.gz zookeeper-3.4.9.tar.gz

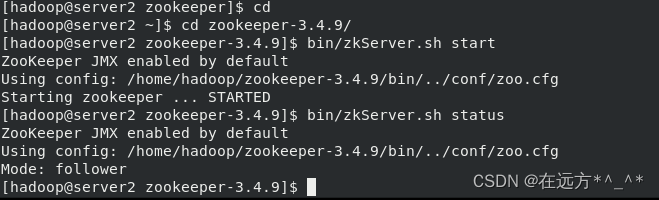

[hadoop@server2 ~]$ cd zookeeper-3.4.9/

[hadoop@server2 zookeeper-3.4.9]$ cd conf/

[hadoop@server2 conf]$ ls

configuration.xsl log4j.properties zoo_sample.cfg

[hadoop@server2 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server2 conf]$ vim zoo.cfg ## append the three server lines below; zoo_sample.cfg already sets dataDir=/tmp/zookeeper

server.1=172.25.52.2:2888:3888

server.2=172.25.52.3:2888:3888

server.3=172.25.52.4:2888:3888

[hadoop@server2 conf]$ cd ..

[hadoop@server2 zookeeper-3.4.9]$ mkdir /tmp/zookeeper/

[hadoop@server2 zookeeper-3.4.9]$ cd /tmp/

[hadoop@server2 tmp]$ ls

zookeeper

[hadoop@server2 tmp]$ pwd

/tmp

[hadoop@server2 tmp]$ cd zookeeper/

[hadoop@server2 zookeeper]$ echo 1 > myid

[hadoop@server2 zookeeper]$ cat myid
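The server.N lines in zoo.cfg and the myid file on each node have to agree: a node's myid is the N of its own server.N entry. Below is a minimal Python sketch of that mapping. It uses a hypothetical helper, zk_ensemble (not part of ZooKeeper), and the three ensemble IPs from zoo.cfg. Port 2888 is the one followers use to reach the leader, and 3888 is the leader-election port.

```python
def zk_ensemble(ips, peer_port=2888, election_port=3888):
    """Hypothetical helper: build zoo.cfg server.N lines and the myid per host.

    N starts at 1 and follows the order of `ips`; the myid file on a host
    must contain exactly the N of that host's server.N line.
    """
    cfg_lines = []
    myids = {}
    for n, ip in enumerate(ips, start=1):
        cfg_lines.append(f"server.{n}={ip}:{peer_port}:{election_port}")
        myids[ip] = n
    return cfg_lines, myids


lines, myids = zk_ensemble(["172.25.52.2", "172.25.52.3", "172.25.52.4"])
print("\n".join(lines))  # the three server.N lines appended to zoo.cfg
for ip, n in myids.items():
    # command to run on that host so its myid matches its server.N line
    print(f"{ip}: echo {n} > /tmp/zookeeper/myid")
```

Because /tmp/zookeeper is local to each machine, the myid file has to be written separately on every node.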

ZooKeeper cluster setup

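Note: deploy ZooKeeper on all three nodes (server2, server3 and server4) first, then start them together. Each node needs its own /tmp/zookeeper/myid matching its server.N line in zoo.cfg: 1 on server2, 2 on server3 and 3 on server4.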
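A ZooKeeper ensemble can elect a leader and serve clients only while a strict majority (a quorum) of its configured servers is running. A node started on its own therefore just waits for its peers. The short Python calculation below gives the quorum size and the number of failures an ensemble of n servers survives:

```python
def quorum(n):
    """Return (majority needed, failures tolerated) for an ensemble of n servers."""
    majority = n // 2 + 1
    return majority, n - majority


for n in range(1, 6):
    majority, tolerated = quorum(n)
    print(f"{n} server(s): quorum of {majority}, survives {tolerated} failure(s)")
```

With the three nodes used here, two must be up and one may fail. A fourth node would not raise the tolerance (quorum(4) is still one failure), which is why ensembles usually have an odd number of members.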

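Start the ZooKeeper ensemble on the three DataNodes (DN) one after another with bin/zkServer.sh start; bin/zkServer.sh status then shows which node is the leader and which are followers.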

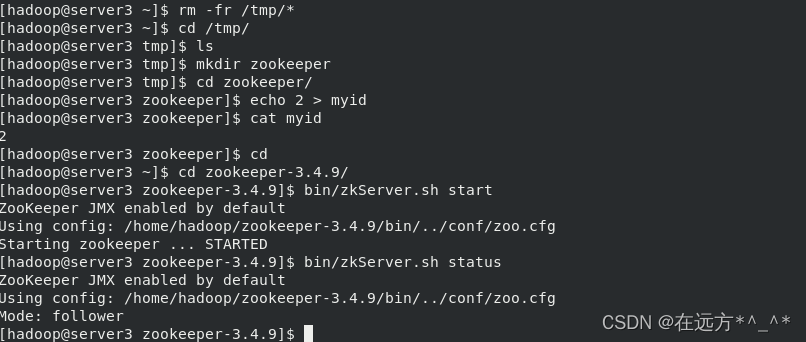

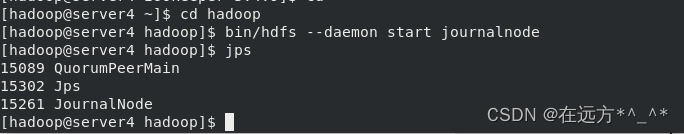

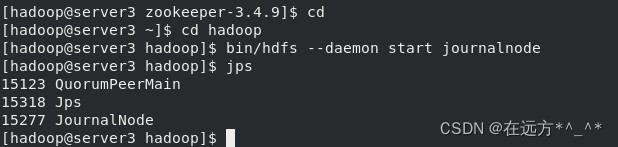

On server3:

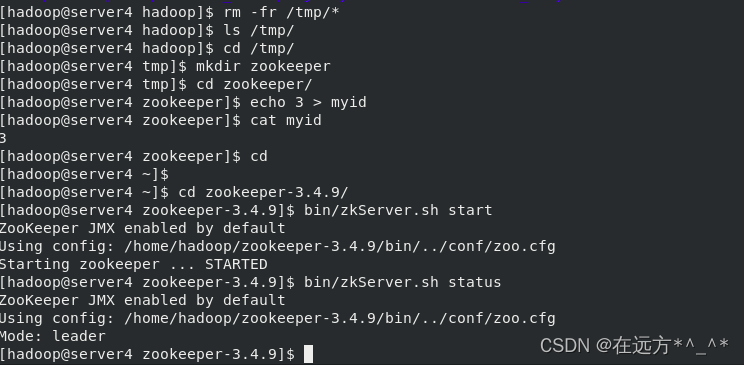

On server4:

HDFS HA setup

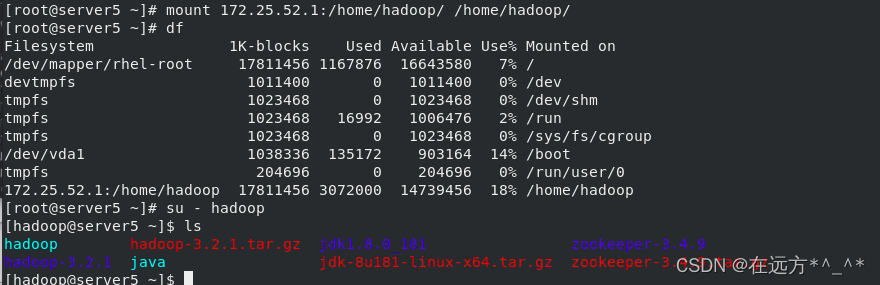

Add a new virtual machine, server5, to act as the standby NameNode (NN standby):

[root@server5 ~]# useradd hadoop

[root@server5 ~]# yum install -y nfs-utils

[root@server5 ~]# mount 172.25.52.1:/home/hadoop/ /home/hadoop/

[root@server5 ~]# df

[root@server5 ~]# su - hadoop

[hadoop@server5 ~]$ ls

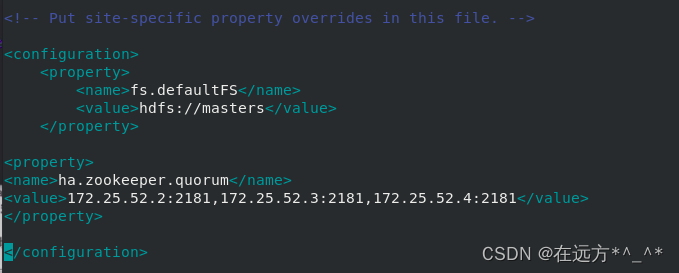

Hadoop configuration

[hadoop@server1 zookeeper-3.4.9]$ cd

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://masters</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>172.25.52.2:2181,172.25.52.3:2181,172.25.52.4:2181</value>

</property>

</configuration>

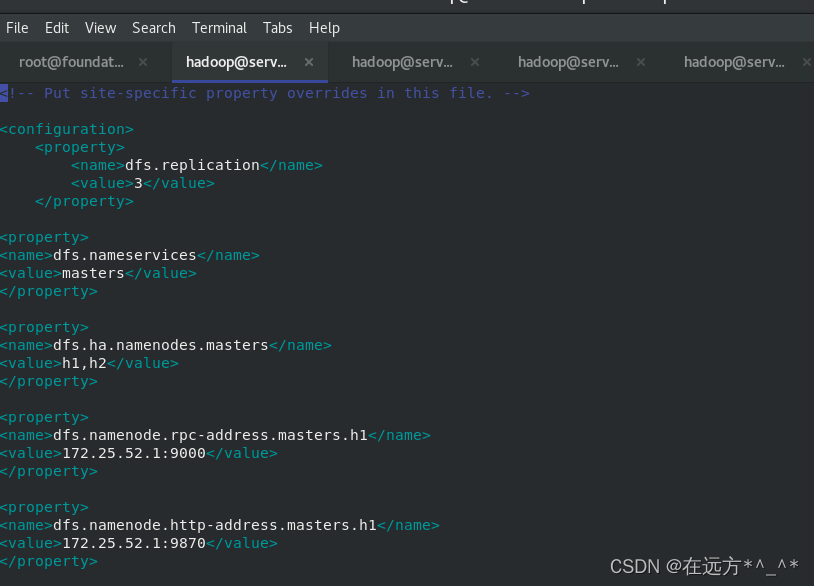
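The configuration files are typed by hand, so it can help to read them back and check the values. Below is a minimal Python sketch that uses only the standard library's xml.etree.ElementTree. load_hadoop_conf is a hypothetical helper. It ignores Hadoop features such as `<final>` and variable expansion.

```python
import xml.etree.ElementTree as ET

CORE_SITE = """
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://masters</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>172.25.52.2:2181,172.25.52.3:2181,172.25.52.4:2181</value>
  </property>
</configuration>
"""


def load_hadoop_conf(xml_text):
    """Hypothetical helper: parse a Hadoop *-site.xml text into {name: value}."""
    root = ET.fromstring(xml_text.strip())
    conf = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            conf[name] = (prop.findtext("value") or "").strip()
    return conf


conf = load_hadoop_conf(CORE_SITE)
print(conf["fs.defaultFS"])                    # hdfs://masters
print(conf["ha.zookeeper.quorum"].split(","))  # the three ZooKeeper servers
```

On a real node the text would come from reading etc/hadoop/core-site.xml. The ha.zookeeper.quorum entries should match the ensemble configured in zoo.cfg.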

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>masters</value>

</property>

<property>

<name>dfs.ha.namenodes.masters</name>

<value>h1,h2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.masters.h1</name>

<value>172.25.52.1:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.masters.h1</name>

<value>172.25.52.1:9870</value>

</property>

<property>

<name>dfs.namenode.rpc-address.masters.h2</name>

<value>172.25.52.5:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.masters.h2</name>

<value>172.25.52.5:9870</value>

</property>

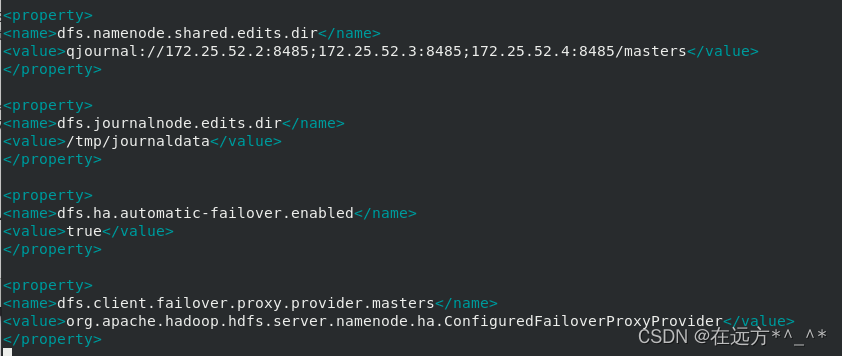

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://172.25.52.2:8485;172.25.52.3:8485;172.25.52.4:8485/masters</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/tmp/journaldata</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.masters</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

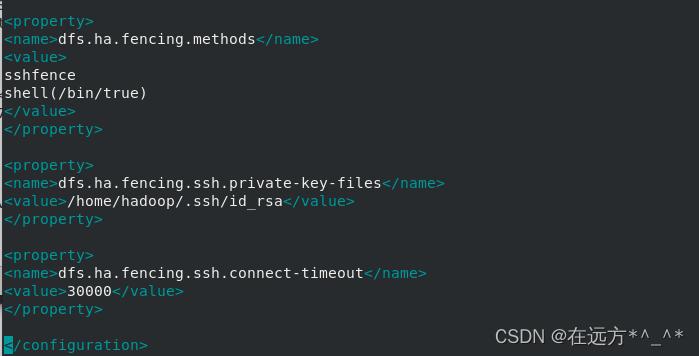

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

</configuration>

[hadoop@server1 hadoop]$

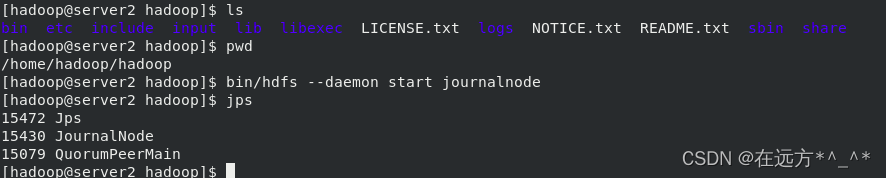

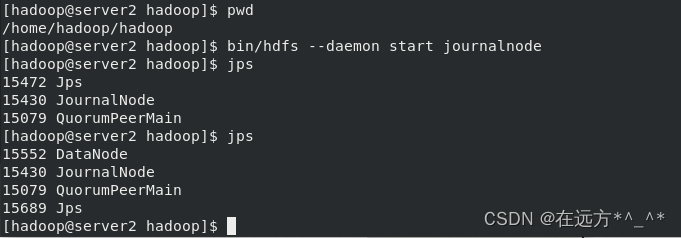
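The HA keys in hdfs-site.xml reference each other by name. The nameservice ID (masters) and the NameNode IDs (h1, h2) are embedded in other property names, so a typo in one of them silently breaks the setup. Below is a minimal Python consistency check over a {name: value} dict. check_hdfs_ha is a hypothetical helper and covers only the keys used in this article.

```python
def _ids(value):
    """Split a comma-separated ID list such as 'h1,h2'."""
    return [item.strip() for item in value.split(",") if item.strip()]


def check_hdfs_ha(conf):
    """Hypothetical helper: list missing keys in an HDFS HA configuration."""
    problems = []
    for ns in _ids(conf.get("dfs.nameservices", "")):
        nn_ids = _ids(conf.get(f"dfs.ha.namenodes.{ns}", ""))
        if len(nn_ids) < 2:
            problems.append(f"dfs.ha.namenodes.{ns} needs at least two NameNode IDs")
        # every NameNode ID needs its own RPC and HTTP address
        for nn in nn_ids:
            for kind in ("rpc-address", "http-address"):
                key = f"dfs.namenode.{kind}.{ns}.{nn}"
                if key not in conf:
                    problems.append(f"missing {key}")
        for key in (f"dfs.client.failover.proxy.provider.{ns}",
                    "dfs.namenode.shared.edits.dir"):
            if key not in conf:
                problems.append(f"missing {key}")
    return problems


HDFS_SITE = {
    "dfs.nameservices": "masters",
    "dfs.ha.namenodes.masters": "h1,h2",
    "dfs.namenode.rpc-address.masters.h1": "172.25.52.1:9000",
    "dfs.namenode.http-address.masters.h1": "172.25.52.1:9870",
    "dfs.namenode.rpc-address.masters.h2": "172.25.52.5:9000",
    "dfs.namenode.http-address.masters.h2": "172.25.52.5:9870",
    "dfs.namenode.shared.edits.dir":
        "qjournal://172.25.52.2:8485;172.25.52.3:8485;172.25.52.4:8485/masters",
    "dfs.client.failover.proxy.provider.masters":
        "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
}

print(check_hdfs_ha(HDFS_SITE))  # [] when every referenced key is present
```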

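Start the JournalNode on each of the three DNs in turn. Before HDFS is started for the first time, the JournalNodes must already be running, because formatting the NameNode also initializes the shared edits directory on them: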

[hadoop@server2 hadoop]$ ls

bin etc include input lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

[hadoop@server2 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server2 hadoop]$ bin/hdfs --daemon start journalnode

[hadoop@server2 hadoop]$ jps

15472 Jps

15430 JournalNode

15079 QuorumPeerMain

[hadoop@server2 hadoop]$
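The dfs.namenode.shared.edits.dir value is a qjournal:// URI. It holds a semicolon-separated list of JournalNode host:port pairs (8485 is the JournalNode's default RPC port) followed by a journal ID, here masters. The active NameNode treats an edit as committed only once a majority of the JournalNodes have acknowledged it, so with three JournalNodes at least two must be running. Below is a minimal Python sketch that splits the URI. parse_qjournal is a hypothetical helper that assumes every entry carries an explicit port.

```python
def parse_qjournal(uri):
    """Hypothetical helper: split a qjournal:// URI into JournalNodes and journal ID."""
    prefix = "qjournal://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a qjournal URI: {uri}")
    hosts_part, _, journal_id = uri[len(prefix):].partition("/")
    nodes = []
    for entry in hosts_part.split(";"):
        host, _, port = entry.rpartition(":")
        nodes.append((host, int(port)))
    return nodes, journal_id


nodes, journal_id = parse_qjournal(
    "qjournal://172.25.52.2:8485;172.25.52.3:8485;172.25.52.4:8485/masters")
needed = len(nodes) // 2 + 1  # majority of JournalNodes that must acknowledge writes
print(nodes, journal_id, needed)
```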

Format the HDFS cluster

By default the NameNode data is stored under /tmp, so after formatting it has to be copied to h2 (server5):
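The /tmp location comes from two stock defaults. hadoop.tmp.dir is /tmp/hadoop-${user.name} (core-default.xml), and dfs.namenode.name.dir is file://${hadoop.tmp.dir}/dfs/name (hdfs-default.xml). For the hadoop user these resolve to /tmp/hadoop-hadoop, which is why that directory is copied. Below is a simplified Python sketch of the ${...} expansion. It does not reproduce every rule of Hadoop's Configuration class, such as its handling of Java system properties.

```python
import re

# Stock defaults from core-default.xml and hdfs-default.xml
DEFAULTS = {
    "hadoop.tmp.dir": "/tmp/hadoop-${user.name}",
    "dfs.namenode.name.dir": "file://${hadoop.tmp.dir}/dfs/name",
}

_VAR = re.compile(r"\$\{([^}]+)\}")


def expand(key, props, max_depth=20):
    """Simplified ${var} expansion: substitute from props until none are left."""
    value = props[key]
    for _ in range(max_depth):
        match = _VAR.search(value)
        if match is None:
            return value
        value = value[:match.start()] + props[match.group(1)] + value[match.end():]
    raise ValueError(f"too many nested substitutions in {key}")


props = {**DEFAULTS, "user.name": "hadoop"}  # user.name: the account running HDFS
print(expand("dfs.namenode.name.dir", props))  # file:///tmp/hadoop-hadoop/dfs/name
```

As an alternative to copying the directory by hand, HDFS also provides `bin/hdfs namenode -bootstrapStandby`. It is run on the standby NameNode while the freshly formatted NameNode is running.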

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ bin/hdfs namenode -format ### format the HDFS cluster

[hadoop@server1 hadoop]$ cd /tmp/

[hadoop@server1 tmp]$ ls

hadoop-hadoop hadoop-hadoop-namenode.pid hsperfdata_hadoop

[hadoop@server1 tmp]$ scp -r /tmp/hadoop-hadoop 172.25.52.5:/tmp

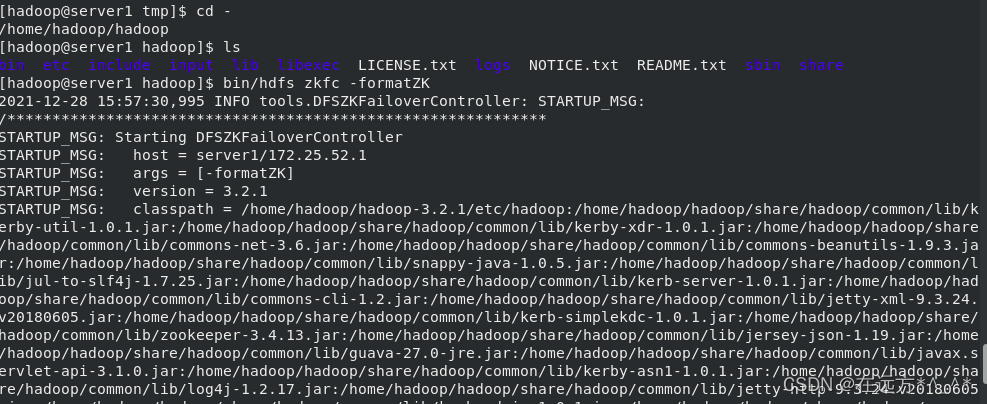

Format ZooKeeper (this only needs to be run on h1):

[hadoop@server1 tmp]$ cd -

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ ls

bin etc include input lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

[hadoop@server1 hadoop]$ bin/hdfs zkfc -formatZK

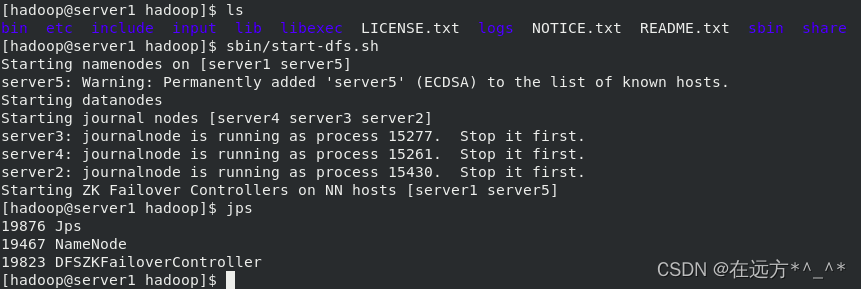

Start the HDFS cluster (this only needs to be run on h1):

[hadoop@server1 hadoop]$ ls

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

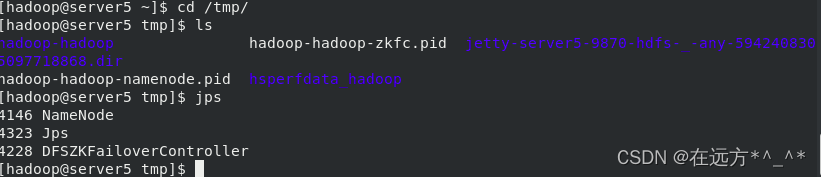

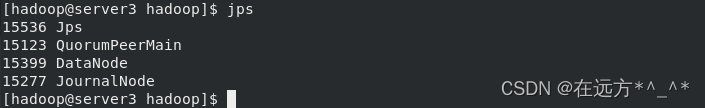

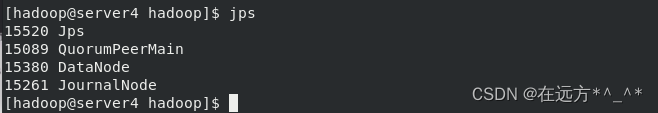

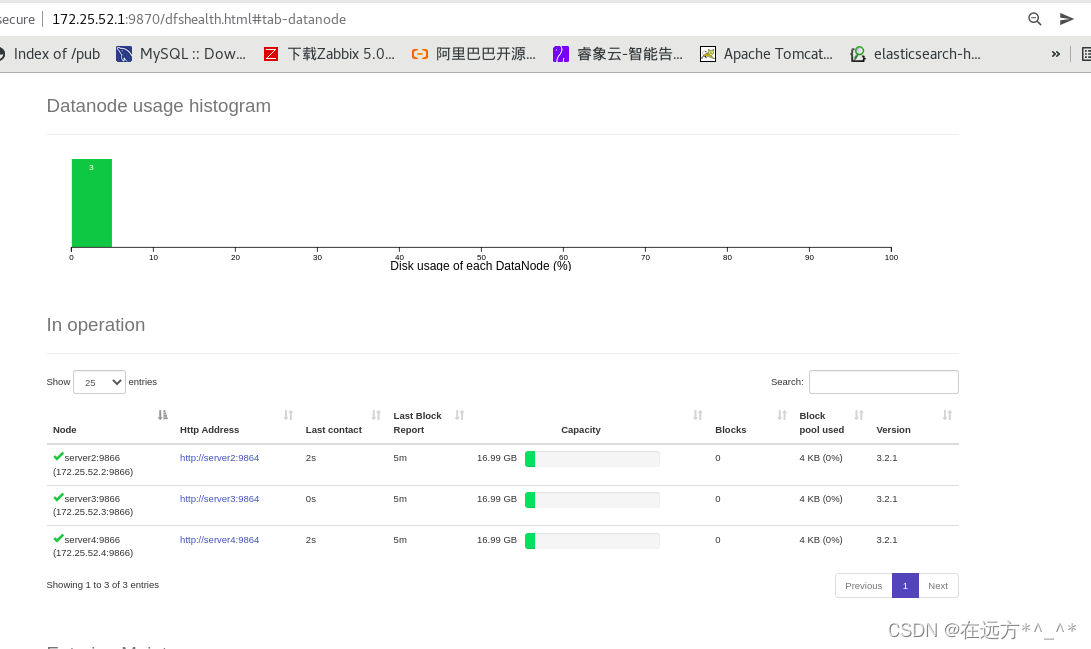

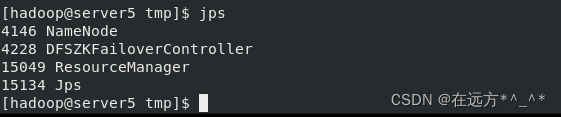

Check the processes on each node:

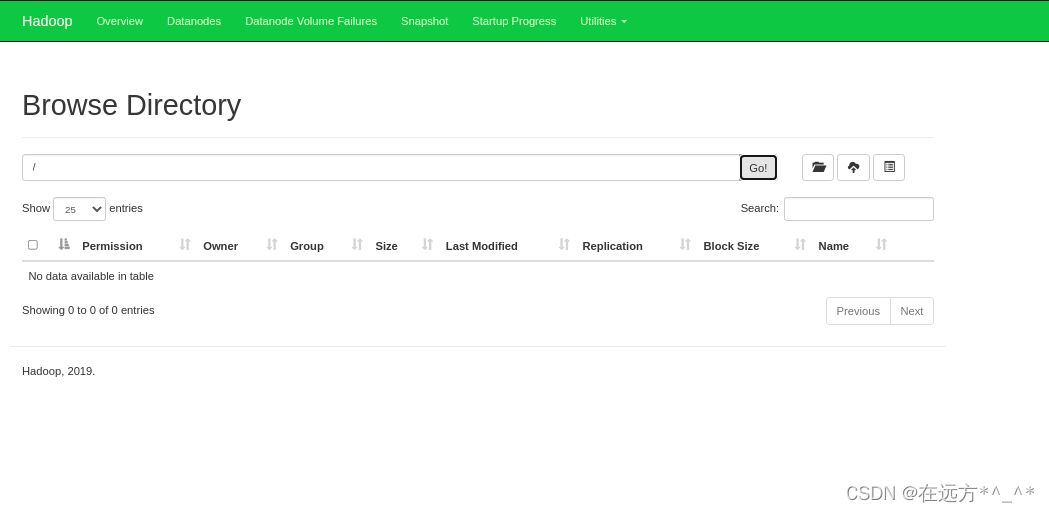

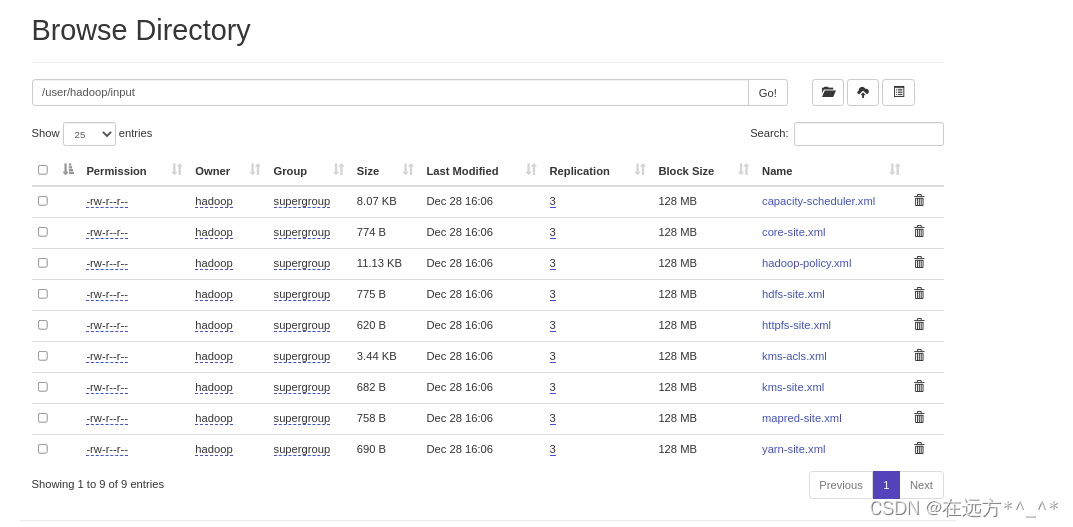

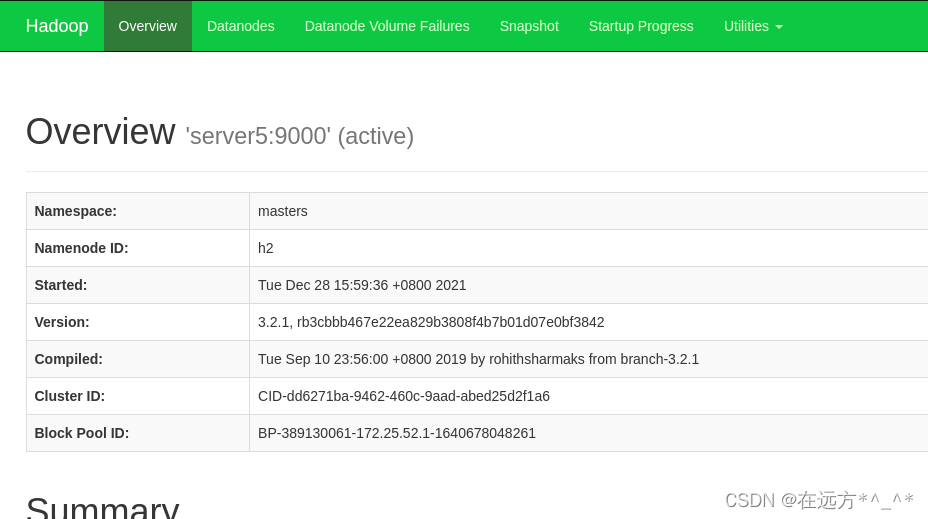

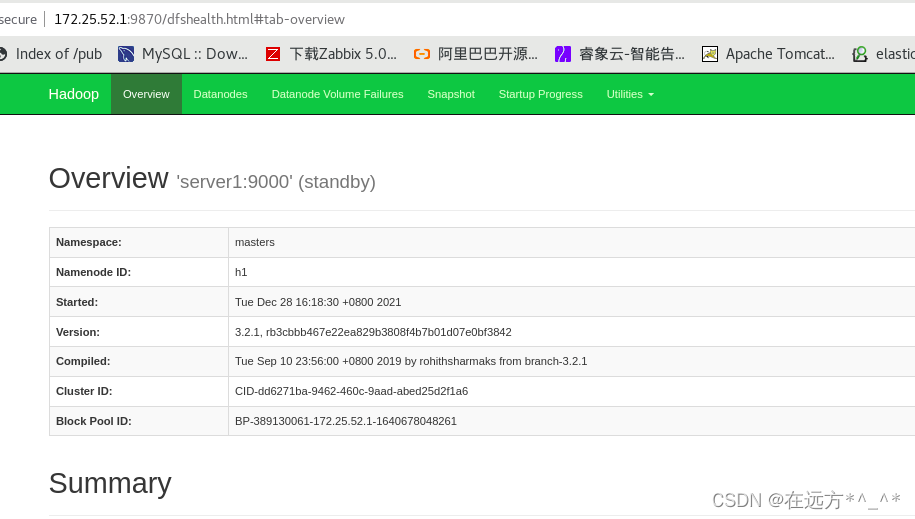
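With this layout each host should run a known set of Java daemons. server1 and server5 run NameNode and DFSZKFailoverController. server2 to server4 run DataNode, JournalNode and QuorumPeerMain (ZooKeeper). Below is a minimal Python sketch that compares jps output against that expectation. The EXPECTED table is an assumption derived from this article's layout, not something Hadoop provides.

```python
EXPECTED = {
    "namenode": {"NameNode", "DFSZKFailoverController"},      # server1, server5
    "worker": {"DataNode", "JournalNode", "QuorumPeerMain"},  # server2 to server4
}


def parse_jps(output):
    """Return the main-class names printed by `jps`, ignoring jps itself."""
    names = set()
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names - {"Jps"}


def missing_daemons(output, role):
    """Daemons expected for `role` that do not appear in the jps output."""
    return sorted(EXPECTED[role] - parse_jps(output))


# jps output captured on server2 right after starting the JournalNode
server2 = """15472 Jps
15430 JournalNode
15079 QuorumPeerMain"""
print(missing_daemons(server2, "worker"))  # ['DataNode']: HDFS was not started yet
```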

Check in the NameNode web UIs (port 9870):

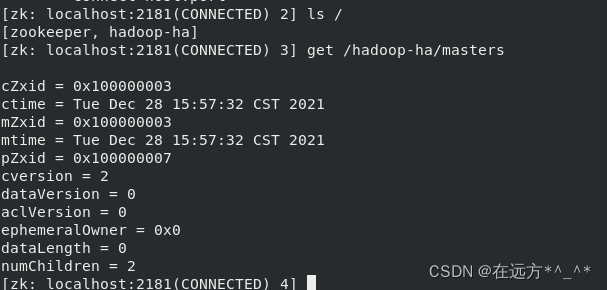

[hadoop@server3 ~]$ cd zookeeper-3.4.9/

[hadoop@server3 zookeeper-3.4.9]$ ls

bin docs README_packaging.txt zookeeper-3.4.9.jar.md5

build.xml ivysettings.xml README.txt zookeeper-3.4.9.jar.sha1

CHANGES.txt ivy.xml recipes zookeeper.out

conf lib src

contrib LICENSE.txt zookeeper-3.4.9.jar

dist-maven NOTICE.txt zookeeper-3.4.9.jar.asc

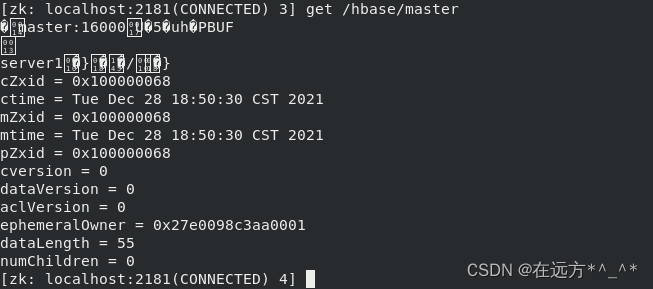

[hadoop@server3 zookeeper-3.4.9]$ bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 2] ls /

[zk: localhost:2181(CONNECTED) 3] get /hadoop-ha/masters

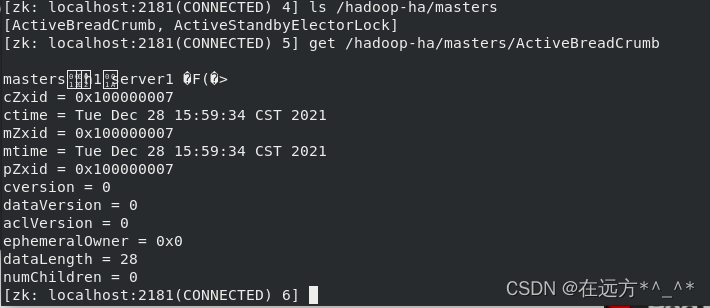

[zk: localhost:2181(CONNECTED) 4] ls /hadoop-ha/masters

[zk: localhost:2181(CONNECTED) 5] get /hadoop-ha/masters/ActiveBreadCrumb

The active NameNode of the nameservice masters is server1:

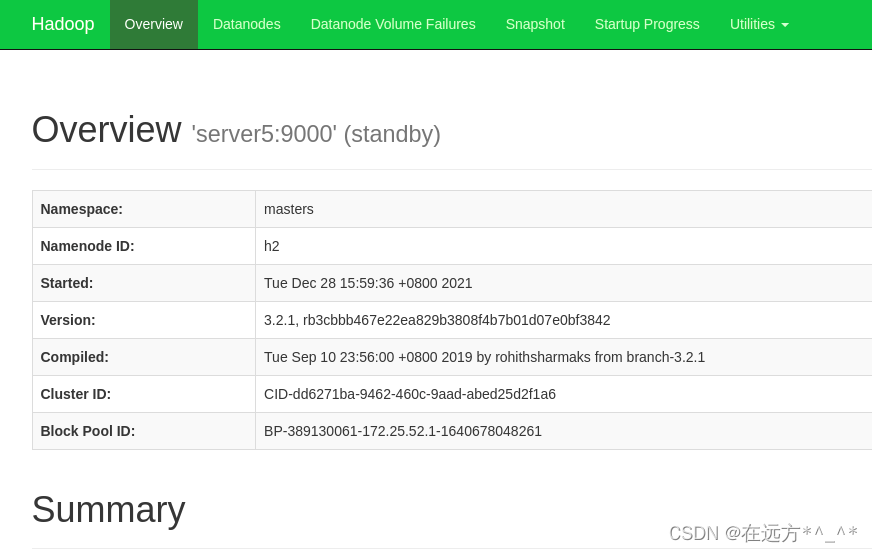

server5 is in standby:

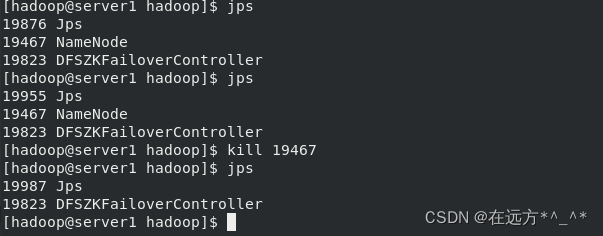

Test automatic failover

On the active NameNode host (h1, server1):

Kill the NameNode process on h1; HDFS is still accessible afterwards.

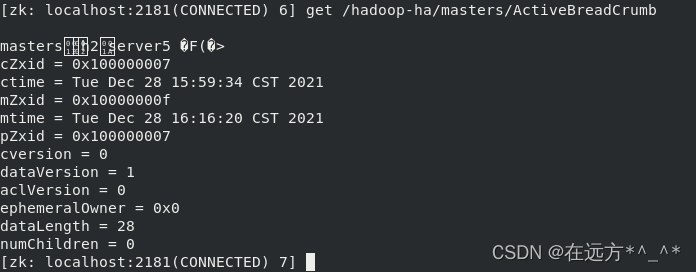

h2 (server5) now switches to the active state and takes over as NameNode; http://172.25.52.5:9870/ reports it as active.
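Clients keep working through the switch because they address the nameservice hdfs://masters rather than a single host. The provider configured in dfs.client.failover.proxy.provider.masters (ConfiguredFailoverProxyProvider) knows both NameNode addresses. When the current NameNode is unreachable or reports that it is in standby, the client retries against the other one. The Python below is a deliberately simplified model of that idea with hypothetical names. The real provider also applies retry limits and backoff.

```python
class NameNodeUnavailable(Exception):
    """Raised when a NameNode is down or answers as standby."""


def call_with_failover(namenodes, request, send):
    """Try each configured NameNode in order; return (address, reply) of the first success."""
    last_error = NameNodeUnavailable("no NameNodes configured")
    for address in namenodes:
        try:
            return address, send(address, request)
        except NameNodeUnavailable as err:
            last_error = err  # remember why this one failed, then try the next
    raise last_error


# Simulated state after the NameNode on h1 was killed: only h2 answers.
active = {"172.25.52.5:9000"}


def send(address, request):
    if address not in active:
        raise NameNodeUnavailable(f"{address} unavailable")
    return f"{request}: ok"


print(call_with_failover(["172.25.52.1:9000", "172.25.52.5:9000"], "ls /", send))
```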

[hadoop@server1 hadoop]$ bin/hdfs --daemon start namenode

[hadoop@server1 hadoop]$ jps

20550 Jps

20042 NameNode

19823 DFSZKFailoverController

The commands above start the NameNode on h1 again; it now comes up in standby state.

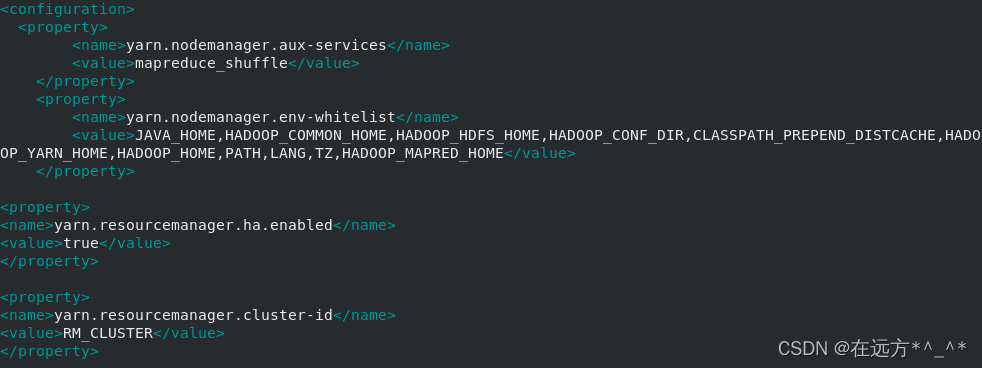

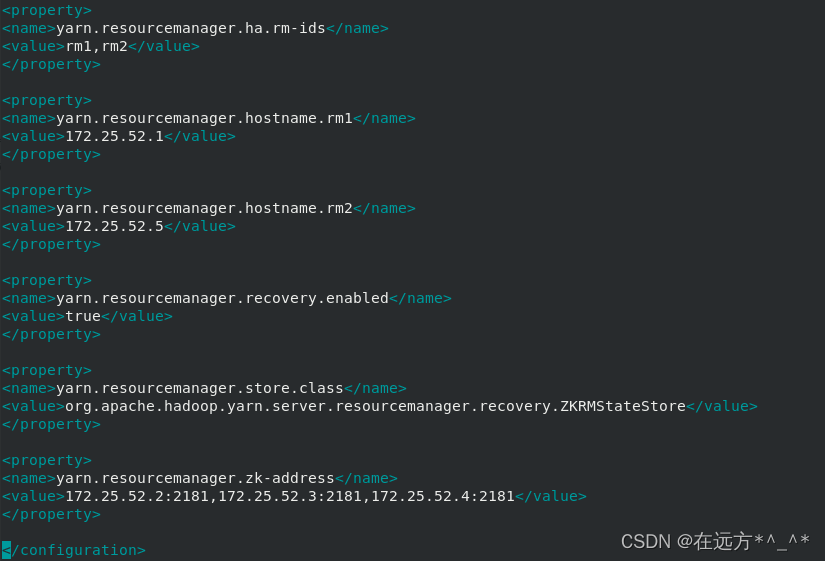

II. YARN high availability

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>RM_CLUSTER</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>172.25.52.1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>172.25.52.5</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>172.25.52.2:2181,172.25.52.3:2181,172.25.52.4:2181</value>

</property>

</configuration>

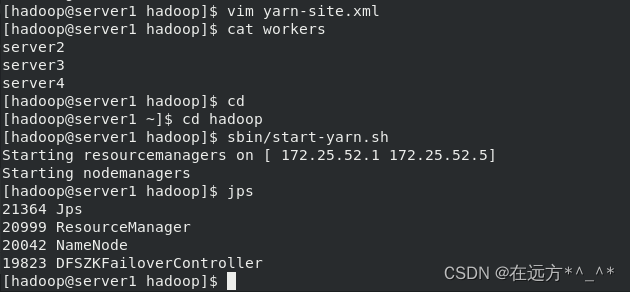
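Only yarn.resourcemanager.hostname.rm1 and .rm2 are set in the yarn-site.xml above. Each ResourceManager's web UI therefore falls back to the default port 8088 on its own host. Below is a small Python sketch that derives the two URLs from the rm-ids list. rm_web_urls is a hypothetical helper. It honours an explicit yarn.resourcemanager.webapp.address.<rm-id> if one is present.

```python
def rm_web_urls(conf, default_port=8088):
    """Hypothetical helper: map each ResourceManager ID to its web UI URL."""
    urls = {}
    for rm_id in (i.strip() for i in conf["yarn.resourcemanager.ha.rm-ids"].split(",")):
        if not rm_id:
            continue
        address = conf.get(f"yarn.resourcemanager.webapp.address.{rm_id}")
        if address is None:
            # no explicit web address: use the RM's hostname with the default port
            host = conf[f"yarn.resourcemanager.hostname.{rm_id}"]
            address = f"{host}:{default_port}"
        urls[rm_id] = f"http://{address}"
    return urls


YARN_SITE = {
    "yarn.resourcemanager.ha.rm-ids": "rm1,rm2",
    "yarn.resourcemanager.hostname.rm1": "172.25.52.1",
    "yarn.resourcemanager.hostname.rm2": "172.25.52.5",
}
print(rm_web_urls(YARN_SITE))
```

From the command line, `bin/yarn rmadmin -getServiceState rm1` reports whether rm1 is active or standby.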

Start the YARN services:

[hadoop@server1 hadoop]$ cat workers

server2

server3

server4

[hadoop@server1 hadoop]$ cd

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

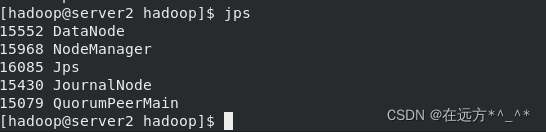

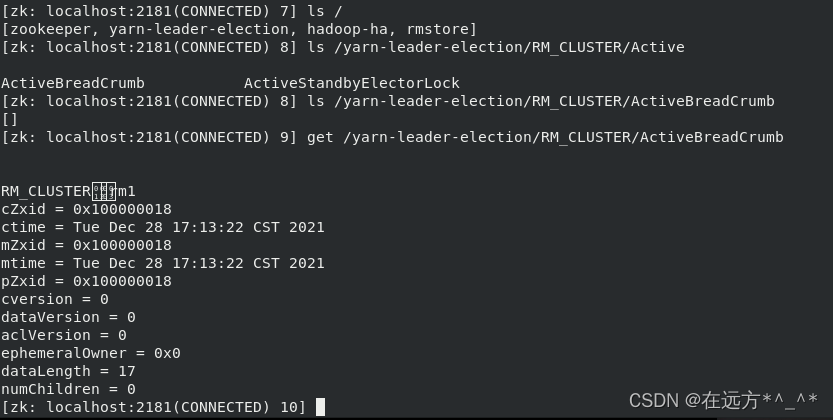

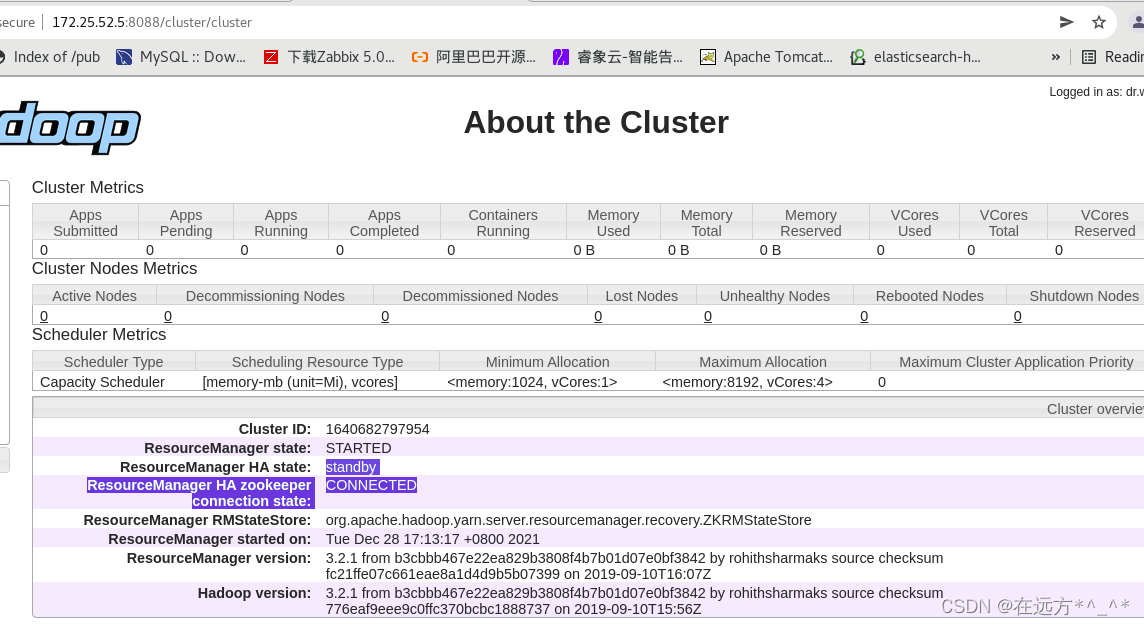

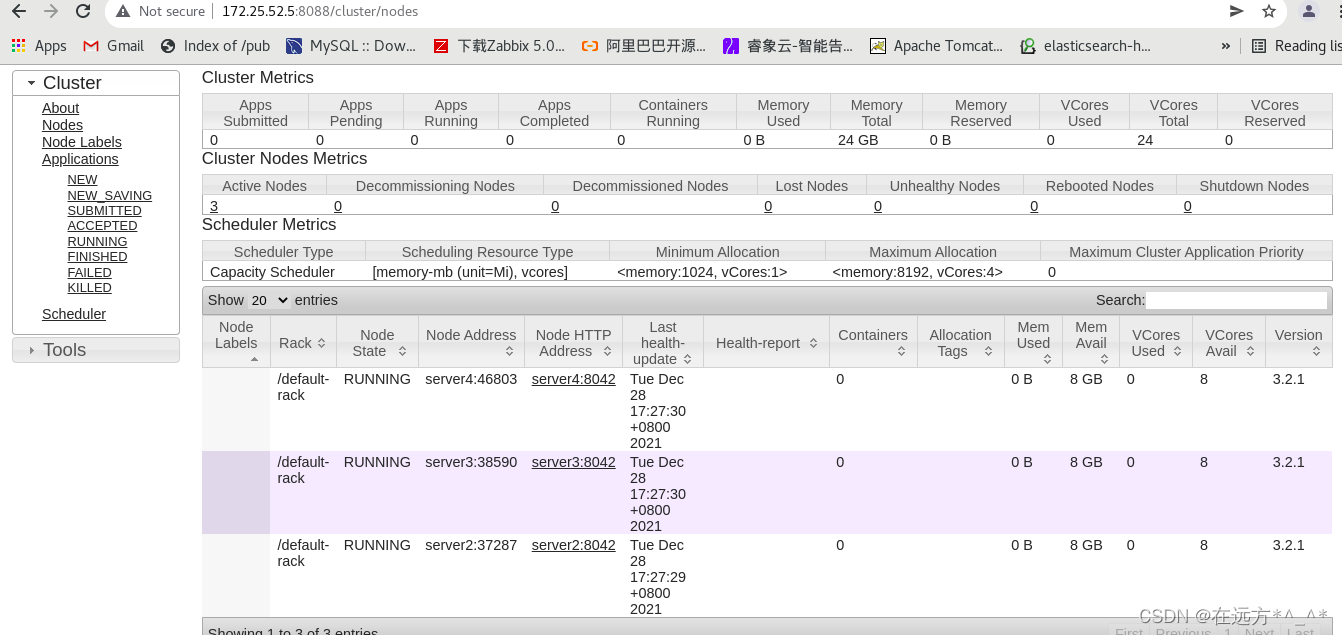

Check the status of each node:

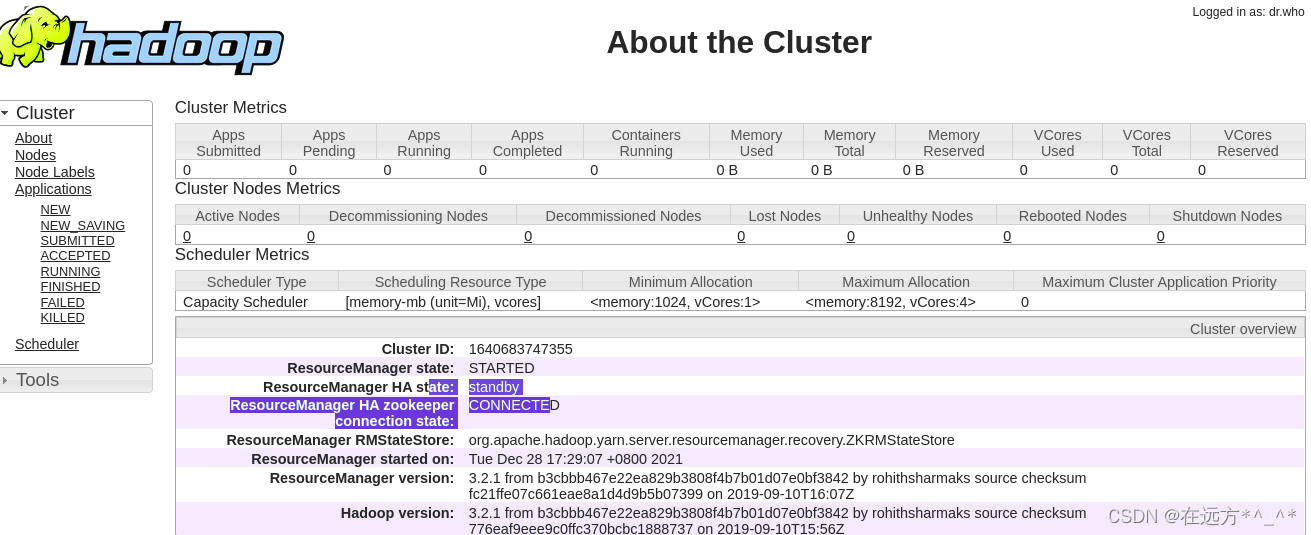

RM_CLUSTER is now served by rm1 (server1), while server5 (rm2) is in standby.

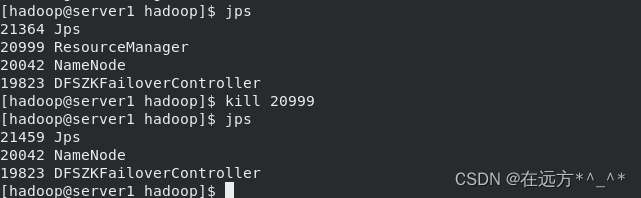

Test YARN failover

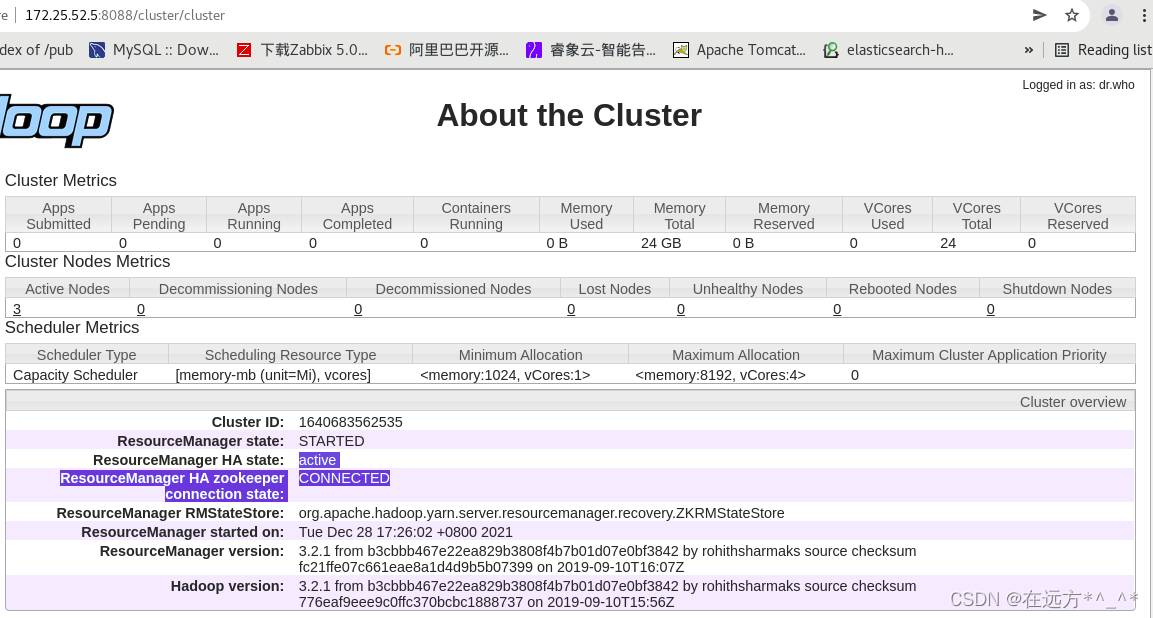


The cluster now shows ResourceManager rm2 as active.

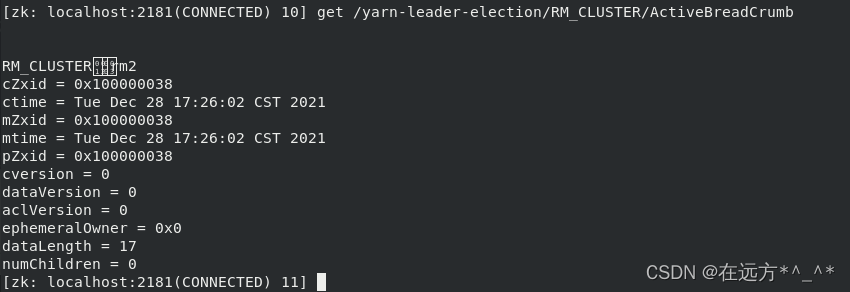

How active/standby switching works:

How does YARN switch between multiple ResourceManagers?

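1. Create the lock node: ZooKeeper holds a parent node /yarn-leader-election/appcluster-yarn (the last path component is the cluster ID; with the configuration above it is RM_CLUSTER). When they start, all ResourceManagers compete to create the lock child node ActiveStandbyElectorLock under it, which is an ephemeral node. ZooKeeper guarantees that only one ResourceManager succeeds. That ResourceManager switches to Active, and the ones that failed switch to Standby. The winner also writes a persistent ActiveBreadCrumb node recording which instance is active (the same kind of node read with get /hadoop-ha/masters/ActiveBreadCrumb for HDFS above).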

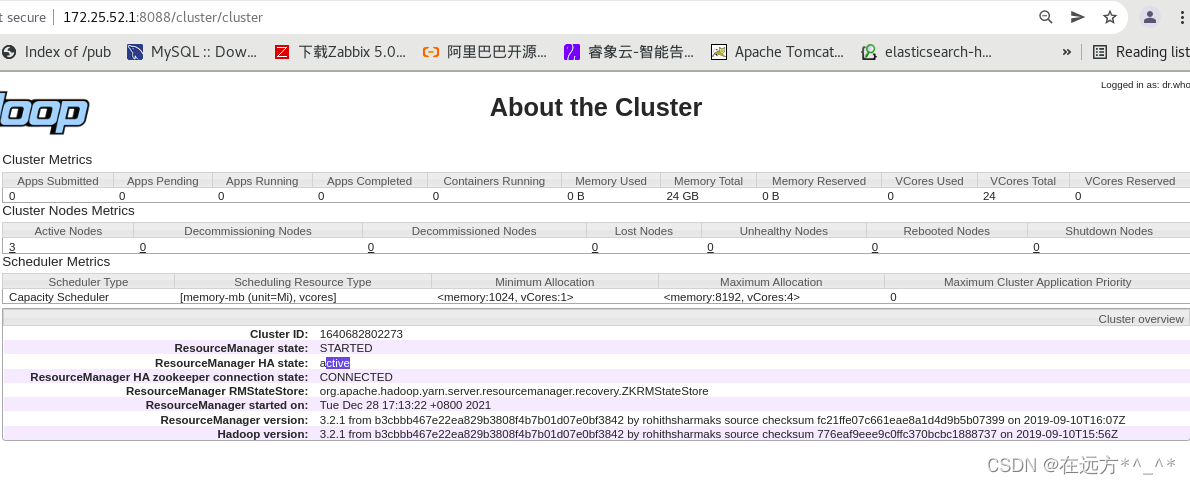

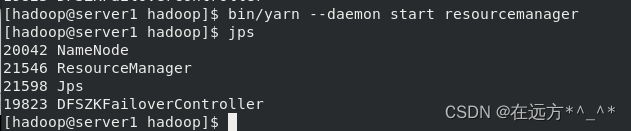

Recovery (restart the ResourceManager on server1):

[hadoop@server1 hadoop]$ bin/yarn --daemon start resourcemanager

[hadoop@server1 hadoop]$ jps

2. Register a Watcher: every ResourceManager in Standby state registers a Watcher for changes to the lock node. Because the lock node is ephemeral, the standbys quickly learn when the Active ResourceManager stops running.

3. Failover: when the Active ResourceManager crashes or restarts, its ZooKeeper client session expires and the ephemeral lock node is deleted. Every Standby ResourceManager then receives the Watcher event from the ZooKeeper server and repeats step 1. This is how ZooKeeper provides active/standby switching, and thus high availability, for the ResourceManager.
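The three election steps can be modelled in a few lines of Python. This is only a simulation. FakeZooKeeper is a hypothetical in-memory stand-in that supports just create-if-absent ephemeral nodes, one-shot watches and session expiry. The lock path assumes the cluster ID RM_CLUSTER from the yarn-site.xml above. In Hadoop itself the election is run by ActiveStandbyElector, where the ephemeral lock node is named ActiveStandbyElectorLock. That class also maintains a separate persistent ActiveBreadCrumb node and performs fencing, neither of which is modelled here.

```python
class FakeZooKeeper:
    """Hypothetical in-memory stand-in for the ZooKeeper features the election needs."""

    def __init__(self):
        self.nodes = {}    # path -> session that owns the ephemeral node
        self.watches = {}  # path -> callbacks fired once when the node is deleted

    def create_ephemeral(self, path, session):
        """Create path only if it does not exist yet; return True on success."""
        if path in self.nodes:
            return False
        self.nodes[path] = session
        return True

    def watch(self, path, callback):
        self.watches.setdefault(path, []).append(callback)

    def expire_session(self, session):
        """Session loss deletes the session's ephemeral nodes and fires their watches."""
        for path in [p for p, owner in self.nodes.items() if owner == session]:
            del self.nodes[path]
            for callback in self.watches.pop(path, []):
                callback(path)


LOCK = "/yarn-leader-election/RM_CLUSTER/ActiveStandbyElectorLock"


class ResourceManager:
    def __init__(self, rm_id, zk):
        self.rm_id, self.zk, self.state = rm_id, zk, "STOPPED"

    def join_election(self):
        # Step 1: race to create the ephemeral lock node.
        if self.zk.create_ephemeral(LOCK, self.rm_id):
            self.state = "ACTIVE"
        else:
            # Step 2: lost the race, so become standby and watch the lock.
            self.state = "STANDBY"
            self.zk.watch(LOCK, lambda _path: self.join_election())


zk = FakeZooKeeper()
rm1, rm2 = ResourceManager("rm1", zk), ResourceManager("rm2", zk)
rm1.join_election()
rm2.join_election()
print(rm1.state, rm2.state)  # ACTIVE STANDBY

# Step 3: rm1 dies, its session expires, the watch fires and rm2 retries step 1.
rm1.state = "STOPPED"
zk.expire_session("rm1")
print(rm1.state, rm2.state)  # STOPPED ACTIVE

# Restarting rm1 makes it rejoin, this time as standby.
rm1.join_election()
print(rm1.state, rm2.state)  # STANDBY ACTIVE
```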

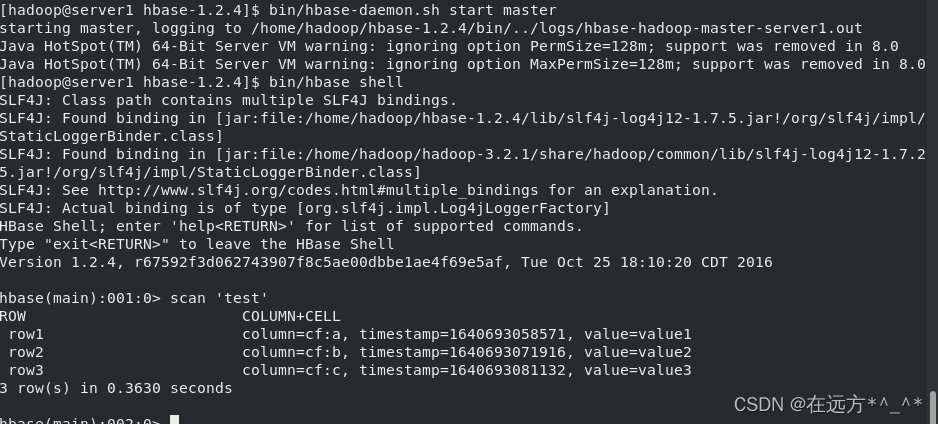

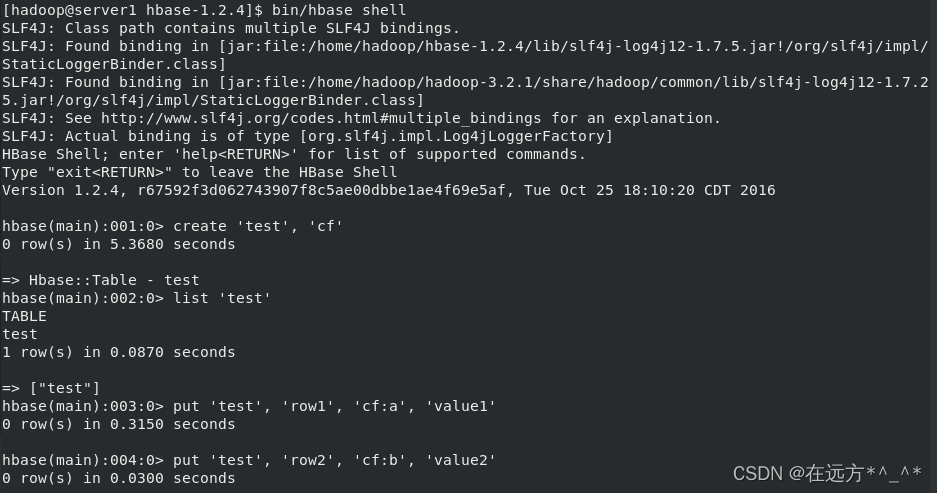

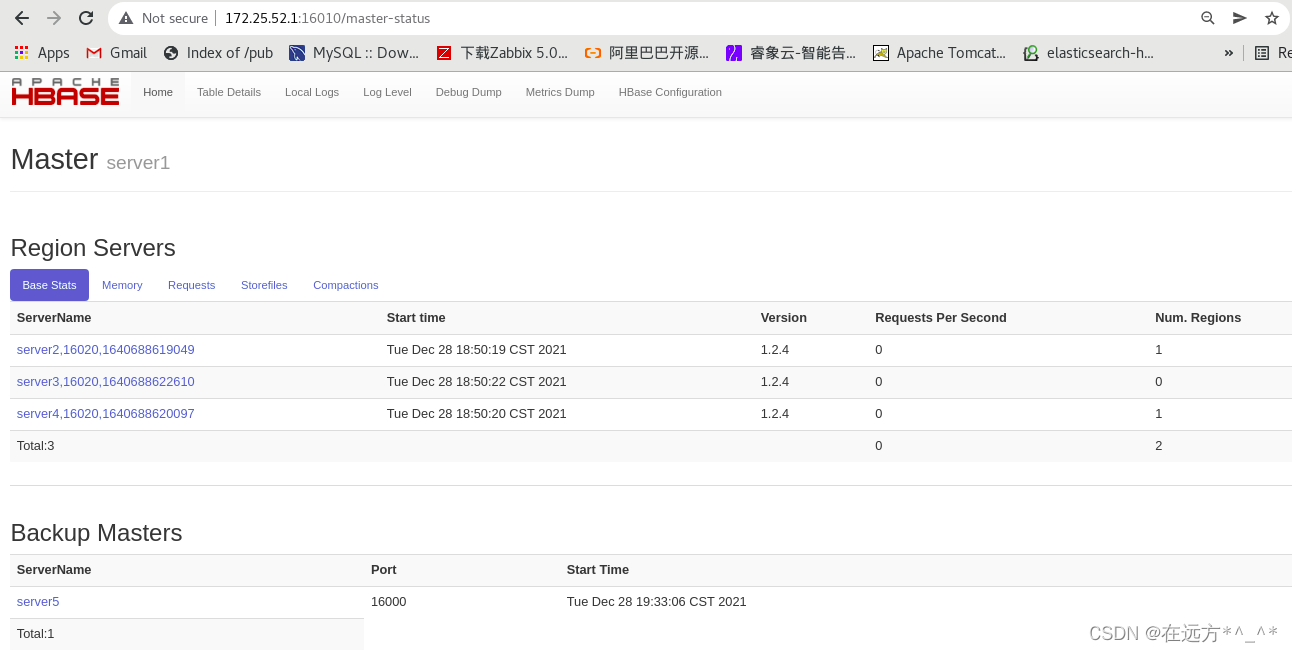

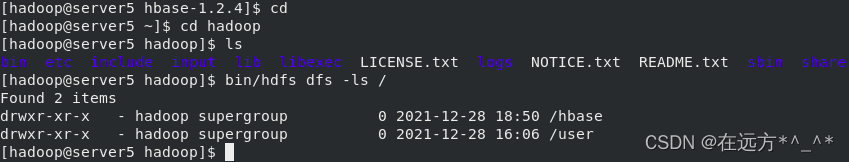

III. Distributed HBase deployment

1. HBase configuration

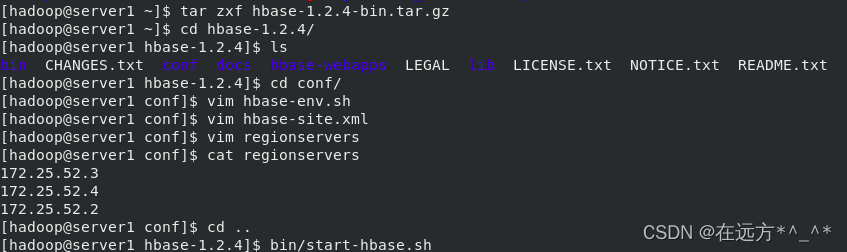

[hadoop@server1 ~]$ tar zxf hbase-1.2.4-bin.tar.gz

[hadoop@server1 ~]$ cd hbase-1.2.4/

[hadoop@server1 hbase-1.2.4]$ ls

bin CHANGES.txt conf docs hbase-webapps LEGAL lib LICENSE.txt NOTICE.txt README.txt

[hadoop@server1 hbase-1.2.4]$ cd conf/

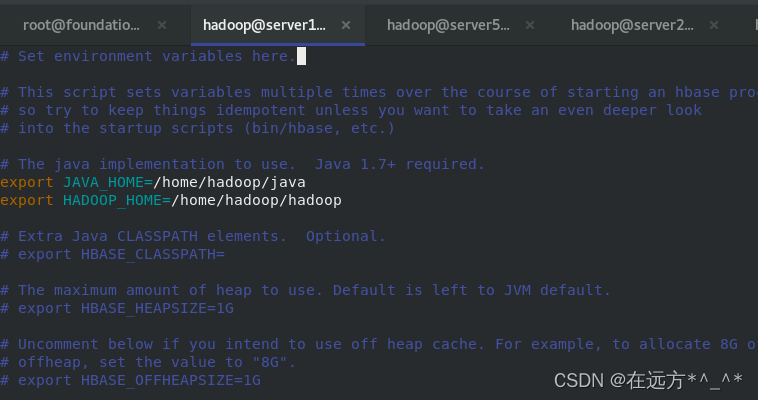

[hadoop@server1 conf]$ vim hbase-env.sh

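export JAVA_HOME=/home/hadoop/java ## JDK location

export HADOOP_HOME=/home/hadoop/hadoop ## Hadoop directory; without it HBase cannot pick up the HDFS cluster configuration

export HBASE_MANAGES_ZK=false ## the default is true, in which case HBase starts its own ZooKeeper; set it to false when you maintain a separate ZooKeeper ensemble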

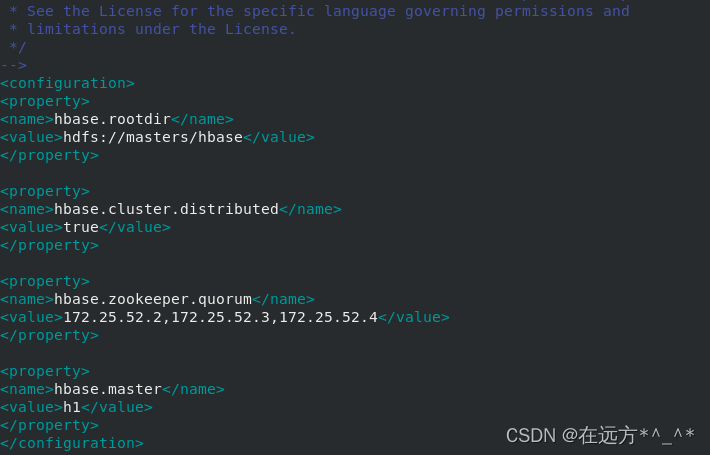

[hadoop@server1 conf]$ vim hbase-site.xml

[hadoop@server1 conf]$ vim regionservers

[hadoop@server1 conf]$ cat regionservers

172.25.52.3

172.25.52.4

172.25.52.2

[hadoop@server1 conf]$ cd ..

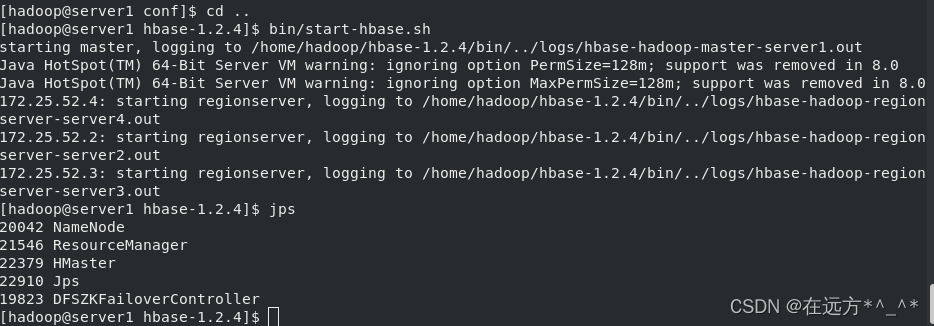

[hadoop@server1 hbase-1.2.4]$ bin/start-hbase.sh

[hadoop@server1 hbase-1.2.4]$ jps

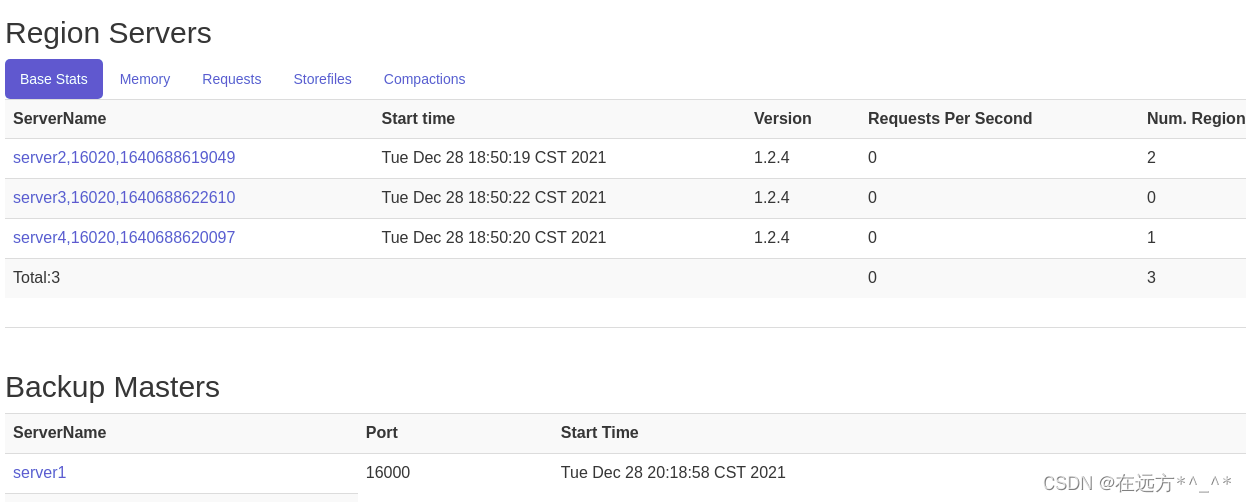

Start HBase

On the master node, run: $ bin/start-hbase.sh

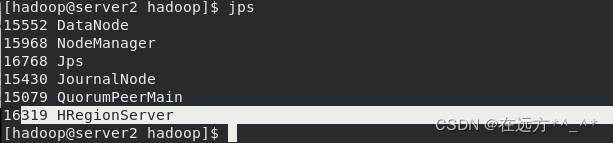

[hadoop@server2 hadoop]$ jps

On the backup node (server5), run:

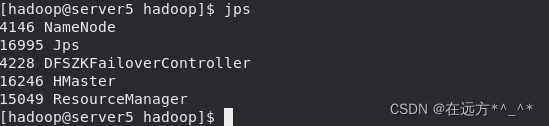


[hadoop@server5 ~]$ cd hbase-1.2.4/

[hadoop@server5 hbase-1.2.4]$ ls

bin CHANGES.txt conf docs hbase-webapps LEGAL lib LICENSE.txt logs NOTICE.txt README.txt

[hadoop@server5 hbase-1.2.4]$ bin/hbase-daemon.sh start master

starting master, logging to /home/hadoop/hbase-1.2.4/bin/../logs/hbase-hadoop-master-server5.out

[hadoop@server5 hbase-1.2.4]$ jps

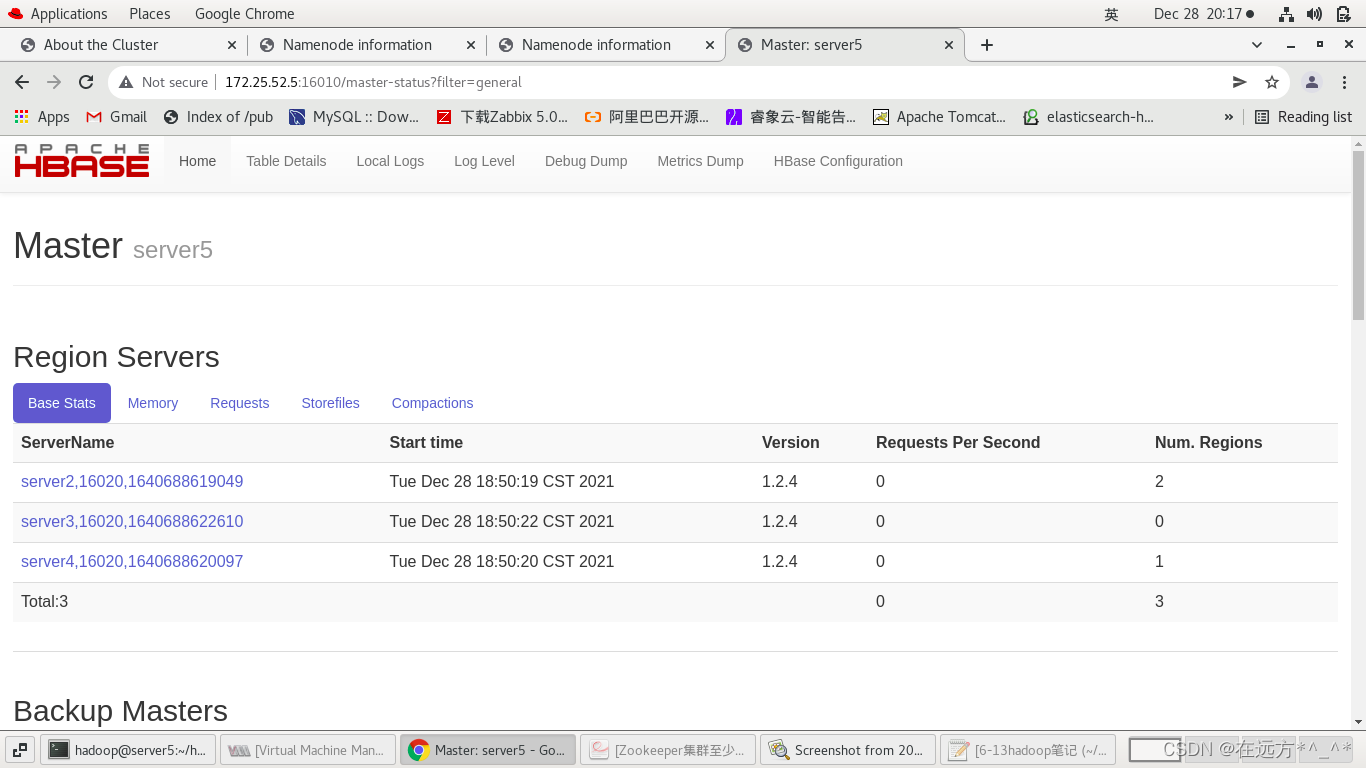

By default the HBase Master listens on port 16000 and serves its web UI on port 16010 of the Master host. HBase RegionServers bind to port 16020 by default and serve an information web UI on port 16030.
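To check from another machine that these ports are actually listening, a plain TCP connect is enough. Below is a minimal Python sketch using the standard socket module. The port table restates the defaults above, and port_open is a hypothetical helper.

```python
import socket

# HBase 1.x default ports, as listed above
HBASE_DEFAULT_PORTS = {
    "HMaster RPC": 16000,
    "HMaster web UI": 16010,
    "RegionServer RPC": 16020,
    "RegionServer web UI": 16030,
}


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False
```

For example, `port_open("172.25.52.1", HBASE_DEFAULT_PORTS["HMaster web UI"])` is expected to return True while the HMaster on server1 is running. `port_open("172.25.52.3", 16030)` checks the RegionServer UI on server3.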

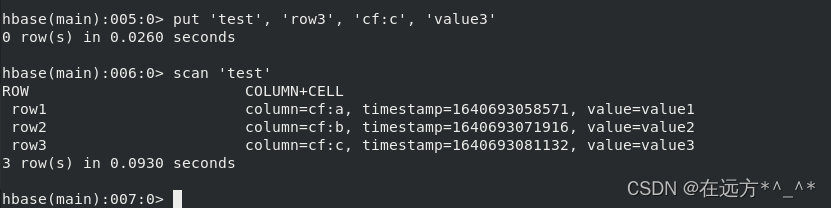

Test

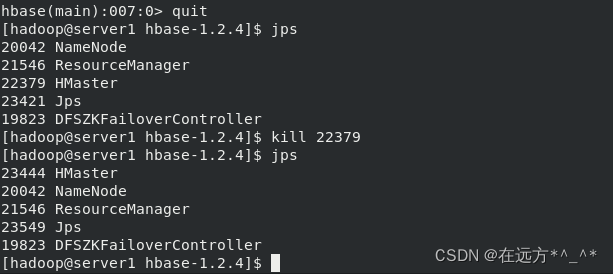

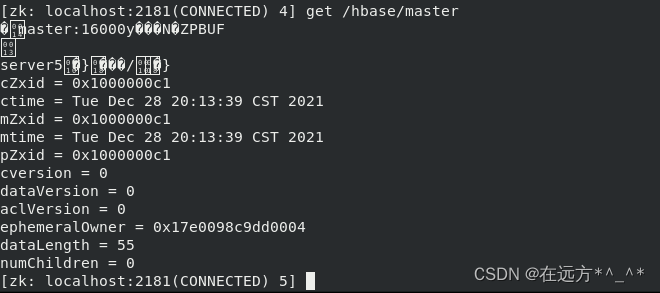

Kill the HMaster process on the master node, then check that the backup master on server5 takes over: