EFK on a Kubernetes Cluster

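Elasticsearch is a real-time, distributed, scalable search engine that supports full-text and structured search as well as log analysis. It is commonly used to index and search large volumes of log data, and it can also be used to search many other kinds of documents.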

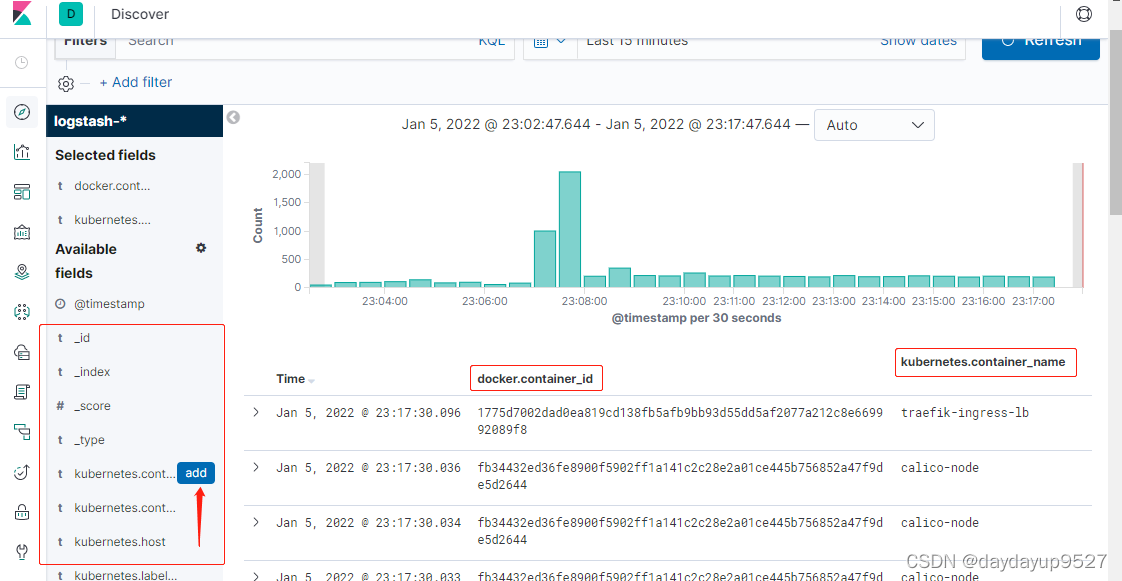

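Kibana is a powerful data-visualization dashboard for Elasticsearch. It lets you browse the log data stored in Elasticsearch through a web interface and define custom queries to quickly retrieve log entries from Elasticsearch.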

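Fluentd is a popular open-source data collector. We install Fluentd on the Kubernetes cluster nodes, where it reads the container log files, filters and transforms the log data, and forwards it to the Elasticsearch cluster, which indexes and stores it.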

[root@master ~]# cd efk

[root@master efk]# ls

busybox.tar.gz fluentd.tar.gz nfs-client-provisioner.tar.gz

elasticsearch_7_2_0.tar.gz kibana_7_2_0.tar.gz nginx.tar.gz

[root@master efk]# for i in *.tar.gz; do docker load -i "$i"; done

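Before installing the Elasticsearch cluster, we create a namespace to hold the log-collection tools Elasticsearch, Fluentd, and Kibana. We create a namespace called kube-logging and install all EFK components into it.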

1. Create the kube-logging namespace

[root@master ~]# vim kube-logging.yaml

kind: Namespace
apiVersion: v1
metadata:
  name: kube-logging

[root@master ~]# kubectl apply -f kube-logging.yaml

[root@master ~]# kubectl get namespaces |grep kube-logging

kube-logging Active 28s

Install the Elasticsearch component

Running three Elasticsearch Pods avoids the "split-brain" problem that can occur in highly available multi-node clusters.

1. Create a headless service

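In the kube-logging namespace we define a Service named elasticsearch that carries the label app=elasticsearch. Once the Elasticsearch StatefulSet is associated with this Service, the Service returns DNS A records for the Elasticsearch Pods labelled app=elasticsearch. Setting clusterIP: None makes it a headless service. Finally, we define ports 9200 and 9300, used for the REST API and for inter-node communication respectively.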

[root@master ~]# vim elasticsearch_svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: kube-logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node

[root@master ~]# kubectl apply -f elasticsearch_svc.yaml

[root@master ~]# kubectl get svc -nkube-logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 38s

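2. Create the Elasticsearch cluster with a StatefulSet

A Kubernetes StatefulSet gives each Pod a stable identity and stable, persistent storage. Elasticsearch needs stable storage so that its data survives Pod rescheduling and restarts. We run three replicas to guard against split-brain.

1) Define the manifest file elasticsearch_statefulset.yaml as follows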

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: kube-logging
spec:
  # Must match the name of the headless service created earlier
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      # Selects the Pods carrying this label; must match the labels in the
      # template below. This field is required in apps/v1.
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.2.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        # These correspond to the ports of the headless service above
        - containerPort: 9200
          name: rest
          protocol: TCP
        - containerPort: 9300
          name: inter-node
          protocol: TCP
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: discovery.seed_hosts
          value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
        # A StatefulSet creates its Pods in order; these are the Pod names it generates by default
        - name: cluster.initial_master_nodes
          value: "es-cluster-0,es-cluster-1,es-cluster-2"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"

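The env entries have the following meaning:

cluster.name: the name of the Elasticsearch cluster, here k8s-logs.

node.name: the name of the node, taken from metadata.name. It resolves to es-cluster-[0,1,2], depending on the ordinal the StatefulSet assigns to the Pod.

discovery.seed_hosts: the static list of hosts that the nodes contact to discover each other and form the cluster. In Elasticsearch 7.x this setting replaces the older discovery.zen.ping.unicast.hosts. Thanks to the headless service configured earlier, each Pod has a unique DNS name of the form es-cluster-[0,1,2].elasticsearch.kube-logging.svc.cluster.local, and we set the variable accordingly. Because everything runs in the same namespace, the names can be shortened to es-cluster-[0,1,2].elasticsearch. To learn more about Elasticsearch discovery, see the official Elasticsearch documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-discovery.html.

cluster.initial_master_nodes: the names of the master-eligible nodes that take part in the first master election when the cluster bootstraps. Elasticsearch 7.x no longer uses discovery.zen.minimum_master_nodes, which earlier versions required to be set to (N/2) + 1, where N is the number of master-eligible nodes (with 3 nodes that gives 2, rounding down to the nearest integer); the cluster now manages its voting quorum itself. For background on that parameter and on split-brain, see the official Elasticsearch documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-node.html#split-brain.

ES_JAVA_OPTS: set here to -Xms512m -Xmx512m, which tells the JVM to use a minimum and maximum heap of 512 MB. Adjust these values to the resources available in your cluster and to your workload. To learn more, see the documentation on setting the heap size: https://www.elastic.co/guide/en/elasticsearch/reference/current/heap-size.html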
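As a rough illustration of how the host lists in the manifest and the (N/2) + 1 majority rule scale with the replica count, the following shell sketch derives them from the StatefulSet name, the service name, and the number of replicas. The function names ordinal_list and quorum are hypothetical helpers introduced here for illustration; they are not part of Kubernetes or Elasticsearch.

```bash
#!/usr/bin/env bash
# Sketch only: ordinal_list and quorum are hypothetical helper names.

# Print "<sts>-0<suffix>,<sts>-1<suffix>,..." for the given replica count.
# A StatefulSet names its Pods <name>-<ordinal>, starting at 0.
ordinal_list() {
  local sts=$1 replicas=$2 suffix=${3:-} out="" i=0
  while [ "$i" -lt "$replicas" ]; do
    out="${out:+$out,}${sts}-${i}${suffix}"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}

# Majority of N master-eligible nodes: (N / 2) + 1 with integer division.
quorum() {
  printf '%s\n' "$(( $1 / 2 + 1 ))"
}

# Values for the three-replica cluster used in this walkthrough:
ordinal_list es-cluster 3 .elasticsearch   # value for discovery.seed_hosts
ordinal_list es-cluster 3                  # value for cluster.initial_master_nodes
quorum 3                                   # nodes needed for a majority
```

If the replica count changes, both lists change with it, which is why the values in the manifest must be kept in step with replicas.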

. . .

      initContainers:
      - name: fix-permissions
        image: busybox
        command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: do-block-storage
      resources:
        requests:
          storage: 10Gi

Create a StorageClass backed by NFS to provide dynamic volume provisioning

[root@master ~]# yum install nfs-utils -y

[root@master ~]# cat /etc/exports

/data/v1 192.168.1.0/24(rw,no_root_squash)

[root@master ~]# mkdir /data/v1 -p

[root@master ~]# systemctl start nfs

[root@master ~]# showmount -e

Export list for master:

/data/v1 192.168.1.0/24

Create the ServiceAccount that runs nfs-provisioner

[root@master ~]# cat serviceaccount.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner

[root@master ~]# kubectl apply -f serviceaccount.yaml

serviceaccount/nfs-provisioner created

[root@master ~]# kubectl get sa

NAME SECRETS AGE

nfs-provisioner 1 2s

Grant RBAC permissions to the ServiceAccount

[root@master ~]# vim rbac.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-provisioner
  apiGroup: rbac.authorization.k8s.io

[root@master ~]# kubectl apply -f rbac.yaml

clusterrole.rbac.authorization.k8s.io/nfs-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-provisioner created

Create a Pod through a Deployment to run nfs-provisioner

[root@master ~]# vim deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: nfs-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner:latest
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: example.com/nfs
            - name: NFS_SERVER
              value: 192.168.1.11
            - name: NFS_PATH
              value: /data/v1
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.1.11
            path: /data/v1

[root@master ~]# kubectl apply -f deployment.yaml

deployment.apps/nfs-provisioner created

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-provisioner-765944b7dc-m4qdp 1/1 Running 0 5s

Create the StorageClass

[root@master ~]# cat class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: do-block-storage
# Must match the value of the PROVISIONER_NAME env variable in the nfs-provisioner Deployment above
provisioner: example.com/nfs

[root@master ~]# kubectl apply -f class.yaml

storageclass.storage.k8s.io/do-block-storage created

[root@master ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

do-block-storage example.com/nfs Delete Immediate false 7s

Finally, we set the size of each PersistentVolume to 10Gi; adjust this to your actual needs.

Contents of the elasticsearch_statefulset.yaml manifest

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: kube-logging
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.2.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: rest
          protocol: TCP
        - containerPort: 9300
          name: inter-node
          protocol: TCP
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: discovery.seed_hosts
          value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
        - name: cluster.initial_master_nodes
          value: "es-cluster-0,es-cluster-1,es-cluster-2"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
      initContainers:
      - name: fix-permissions
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
      - name: increase-vm-max-map
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: do-block-storage
      resources:
        requests:
          storage: 10Gi

[root@master ~]# kubectl apply -f elasticsearch_statefulset.yaml

statefulset.apps/es-cluster created

[root@master ~]# kubectl get pod -n kube-logging

NAME READY STATUS RESTARTS AGE

es-cluster-0 1/1 Running 0 35s

es-cluster-1 1/1 Running 0 29s

es-cluster-2 1/1 Running 0 22s

[root@master ~]# kubectl get svc -n kube-logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 4d10h

[root@master ~]# kubectl get pvc -n kube-logging

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-es-cluster-0 Bound pvc-4f21cab4-c063-4fdf-9279-9e13bb7c790b 10Gi RWO do-block-storage 7m50s

data-es-cluster-1 Bound pvc-5424af17-6a7f-42bd-833d-fefeb4317299 10Gi RWO do-block-storage 7m44s

data-es-cluster-2 Bound pvc-76b34d19-fb94-44e8-b10c-646ccbd086fe 10Gi RWO do-block-storage 7m37s
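The claim names in the listing above follow the pattern <volumeClaimTemplate name>-<StatefulSet name>-<ordinal>. A minimal shell sketch, assuming that pattern, generates the expected names so they can be checked one by one; pvc_names is a hypothetical helper name, and the kubectl loop in the comment is only a suggested usage that needs a live cluster.

```bash
#!/usr/bin/env bash
# Sketch only: pvc_names is a hypothetical helper name.

# Print one expected PVC name per line for a StatefulSet's volumeClaimTemplate:
#   <template>-<statefulset>-<ordinal>
pvc_names() {
  local template=$1 sts=$2 replicas=$3 i=0
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%s-%s\n' "$template" "$sts" "$i"
    i=$((i + 1))
  done
}

# Names expected for this walkthrough (template "data", StatefulSet "es-cluster"):
pvc_names data es-cluster 3

# Suggested usage against a live cluster (not executed here):
# for pvc in $(pvc_names data es-cluster 3); do
#   kubectl get pvc "$pvc" -n kube-logging -o jsonpath='{.status.phase}{"\n"}'
# done
```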

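To verify through the REST API that the Elasticsearch cluster was deployed successfully, use the following command to forward local port 9200 to the corresponding port of one Elasticsearch node (for example es-cluster-0):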

[root@master ~]# kubectl port-forward es-cluster-0 9200:9200 --namespace=kube-logging

Forwarding from 127.0.0.1:9200 -> 9200

Forwarding from [::1]:9200 -> 9200

Handling connection for 9200

Then open another terminal on master and run the request below. The response lists the three Pods es-cluster-0 through es-cluster-2 together with their IP addresses:

[root@master ~]# curl http://localhost:9200/_cluster/state?pretty

{

"cluster_name" : "k8s-logs",

"cluster_uuid" : "HUIB_6HDR0OBZ_IjAbEZGA",

"version" : 17,

"state_uuid" : "63xm4eeLSbCZw9e7l1hnnQ",

"master_node" : "9jIRSH-tSCWAZ1_zZj0oMA",

"blocks" : { },

"nodes" : {

"topJCw-URjK0CbiEv_6pyQ" : {

"name" : "es-cluster-1",

"ephemeral_id" : "vUyn2opWSI6Xq1VLdRAx-g",

"transport_address" : "10.244.1.124:9300",

"attributes" : {

"ml.machine_memory" : "3953963008",

"ml.max_open_jobs" : "20",

"xpack.installed" : "true"

}

},

"L91tH0csQq6_MhQBsOB4Pg" : {

"name" : "es-cluster-2",

"ephemeral_id" : "eGUp4fTsSNe8oXCjtu1bPQ",

"transport_address" : "10.244.1.125:9300",

"attributes" : {

"ml.machine_memory" : "3953963008",

"ml.max_open_jobs" : "20",

"xpack.installed" : "true"

}

},

"9jIRSH-tSCWAZ1_zZj0oMA" : {

"name" : "es-cluster-0",

"ephemeral_id" : "0Pz9qSyWQY28krb7vfe3Og",

"transport_address" : "10.244.1.123:9300",

"attributes" : {

"ml.machine_memory" : "3953963008",

"xpack.installed" : "true",

"ml.max_open_jobs" : "20"

}

}

},
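Reading the full cluster state by eye works for three nodes, but a scripted check is easier to repeat. The sketch below counts distinct node names from the _cat/nodes API (a standard Elasticsearch endpoint; h=name limits the output to the name column) and compares the count with the expected cluster size. expect_nodes is a hypothetical helper name, and the curl line assumes the port-forward from the previous step is still running.

```bash
#!/usr/bin/env bash
# Sketch only: expect_nodes is a hypothetical helper name.

# Read node names (one per line) on stdin and succeed only if exactly
# $1 distinct, non-empty names are present.
expect_nodes() {
  local expected=$1 actual
  actual=$(sort -u | awk 'NF { n++ } END { print n + 0 }')
  [ "$actual" -eq "$expected" ]
}

# Suggested usage while the port-forward is active (not executed here):
# curl -s 'http://localhost:9200/_cat/nodes?h=name' | expect_nodes 3 \
#   && echo "all 3 nodes joined" || echo "cluster incomplete"
```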

Install the Kibana component

[root@master ~]# cat kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: kube-logging
  labels:
    app: kibana
spec:
  ports:
  - port: 5601
  type: NodePort
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: kube-logging
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.2.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        env:
        # Kibana 7.x reads ELASTICSEARCH_HOSTS; ELASTICSEARCH_URL was the 6.x name
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch:9200
        ports:
        - containerPort: 5601

[root@master ~]# kubectl apply -f kibana.yaml

[root@master ~]# kubectl get pods -n kube-logging

NAME READY STATUS RESTARTS AGE

kibana-5749b5778b-rclzg 1/1 Running 0 10s

[root@master ~]# kubectl get svc -n kube-logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 4d10h

kibana NodePort 10.105.166.246 <none> 5601:31324/TCP 22s

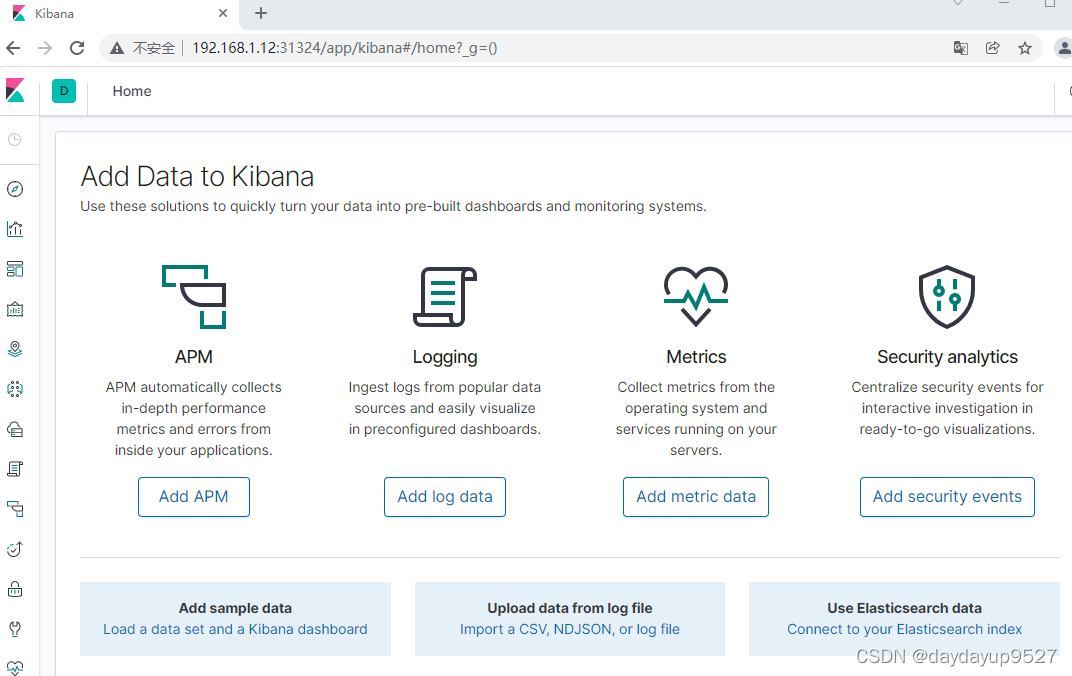

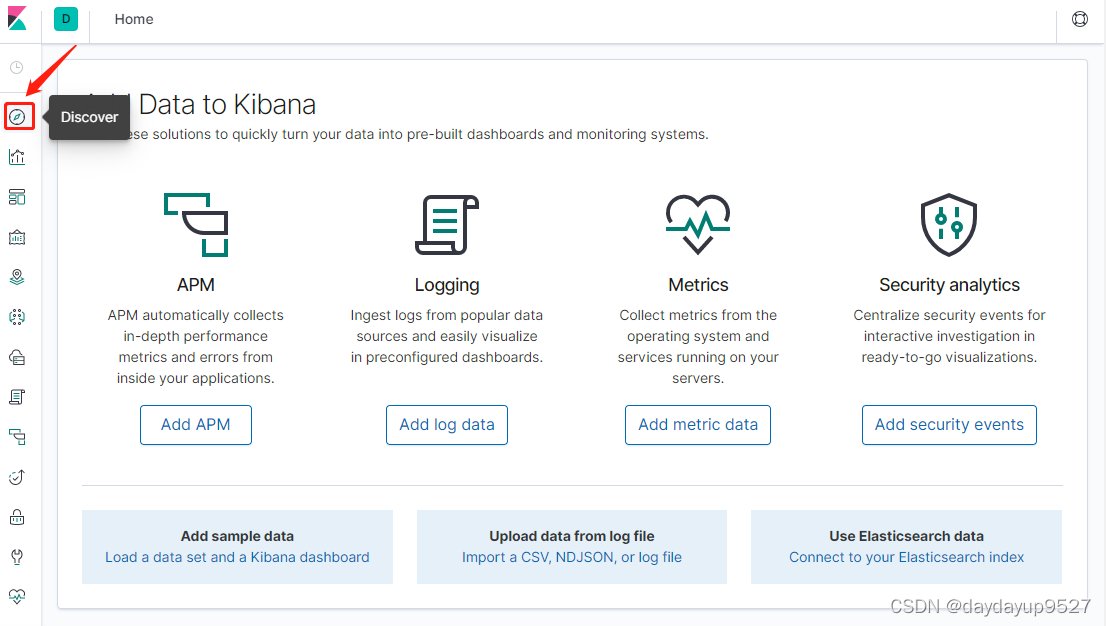

Open http://192.168.4.12:31324 in a browser (a node IP plus the NodePort shown in the service listing above).
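The NodePort is assigned by Kubernetes and will differ between clusters, so the URL is better derived than copied. The sketch below extracts the node port from a PORT(S) value such as 5601:31324/TCP and builds the URL; node_port and kibana_url are hypothetical helper names, and the kubectl line in the comment shows one way to read the port directly from a live cluster.

```bash
#!/usr/bin/env bash
# Sketch only: node_port and kibana_url are hypothetical helper names.

# Extract the node port from a PORT(S) column value like "5601:31324/TCP".
node_port() {
  local ports=$1
  ports=${ports#*:}               # drop the service port and the colon
  printf '%s\n' "${ports%%/*}"    # drop the "/TCP" protocol suffix
}

# Build the browser URL from a node IP and the PORT(S) value.
kibana_url() {
  printf 'http://%s:%s\n' "$1" "$(node_port "$2")"
}

# With the values from this walkthrough:
kibana_url 192.168.4.12 5601:31324/TCP

# Reading the port from a live cluster instead (not executed here):
# kubectl get svc kibana -n kube-logging -o jsonpath='{.spec.ports[0].nodePort}'
```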

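Install the Fluentd component

We deploy Fluentd with a DaemonSet controller, which ensures that every node in the cluster runs one copy of the Fluentd Pod, so logs can be collected from every node of the k8s cluster. In a k8s cluster, the stdout and stderr output of containerized applications is redirected to JSON files on the node. Fluentd can tail and filter these files, convert the logs into the required format, and send them to the Elasticsearch cluster. Besides container logs, Fluentd can also collect the logs of kubelet, kube-proxy, and docker.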

[root@master ~]# vim fluentd.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-logging
  labels:
    app: fluentd
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  labels:
    app: fluentd
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - namespaces
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: kube-logging
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-logging
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:v1.4.2-debian-elasticsearch-1.1
        imagePullPolicy: IfNotPresent
        env:
        - name: FLUENT_ELASTICSEARCH_HOST
          value: "elasticsearch.kube-logging.svc.cluster.local"
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        - name: FLUENT_ELASTICSEARCH_SCHEME
          value: "http"
        - name: FLUENTD_SYSTEMD_CONF
          value: disable
        resources:
          limits:
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      # The two host paths from which log data is collected
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers

[root@master ~]# kubectl apply -f fluentd.yaml

serviceaccount/fluentd created

clusterrole.rbac.authorization.k8s.io/fluentd created

clusterrolebinding.rbac.authorization.k8s.io/fluentd created

daemonset.apps/fluentd created

[root@master ~]# kubectl get pod -n kube-logging -owide

NAME READY STATUS RESTARTS AGE IP NODE

es-cluster-0 1/1 Running 0 36m 10.244.1.123 node1

es-cluster-1 1/1 Running 0 36m 10.244.1.124 node1

es-cluster-2 1/1 Running 0 36m 10.244.1.125 node1

fluentd-qn2nx 1/1 Running 0 80s 10.244.1.128 node1

fluentd-vbg4b 1/1 Running 0 80s 10.244.0.38 master

kibana-5749b5-k 1/1 Running 0 14m 10.244.1.127 node1
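Because the DaemonSet should place one Fluentd Pod on every schedulable node, including the master thanks to the toleration, a quick check is to compare how many Pods the DaemonSet wants with how many are ready. The sketch below does that comparison; ds_ready is a hypothetical helper name, while desiredNumberScheduled and numberReady are fields of the DaemonSet status that kubectl can print through jsonpath.

```bash
#!/usr/bin/env bash
# Sketch only: ds_ready is a hypothetical helper name.

# Succeed when a DaemonSet wants at least one Pod and all wanted Pods are ready.
# Arguments: <desiredNumberScheduled> <numberReady>
ds_ready() {
  local desired=$1 ready=$2
  [ "$desired" -gt 0 ] && [ "$desired" -eq "$ready" ]
}

# Suggested usage against a live cluster (not executed here):
# ds_ready $(kubectl get ds fluentd -n kube-logging \
#   -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}') \
#   && echo "fluentd is running on every node"
```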

Custom container log format

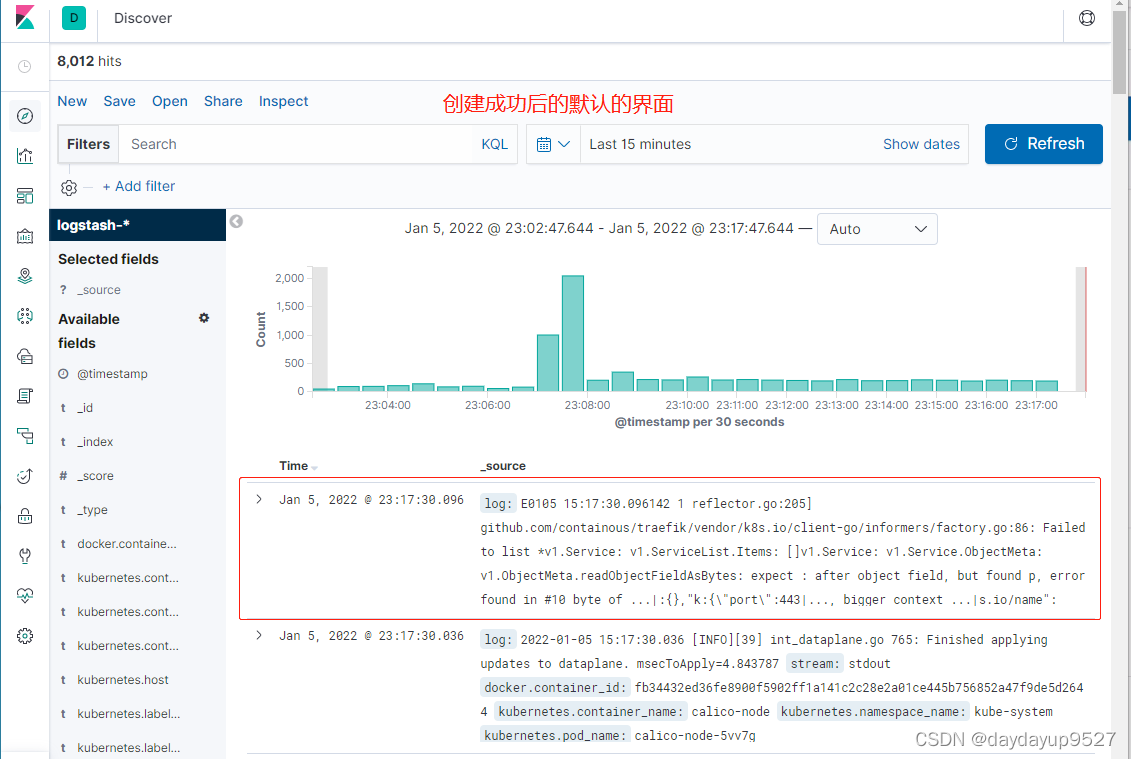

Searching the logs

Test container logs

[root@master ~]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  containers:
  - name: count
    image: busybox
    imagePullPolicy: IfNotPresent
    # Print an increasing counter and the current date once per second
    args: [/bin/sh, -c, 'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

[root@master ~]# kubectl apply -f pod.yaml

pod/counter created

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

counter 1/1 Running 0 5s

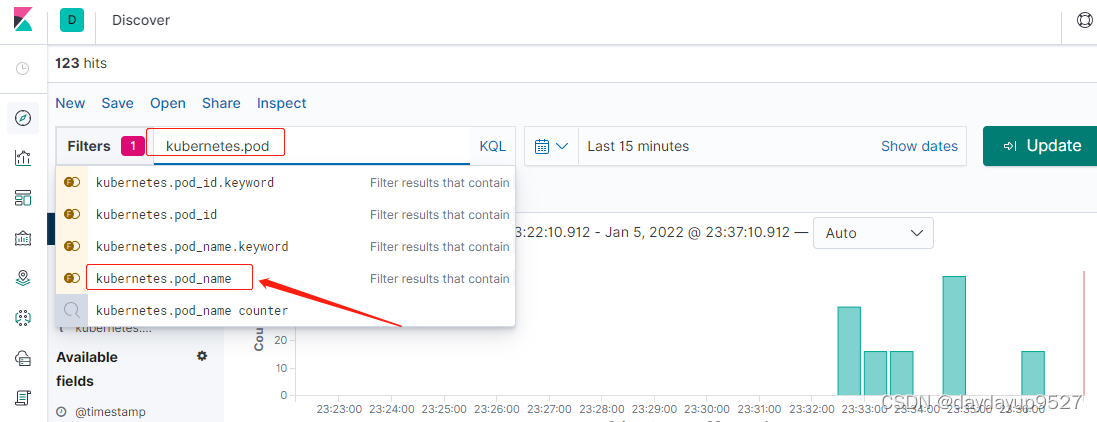

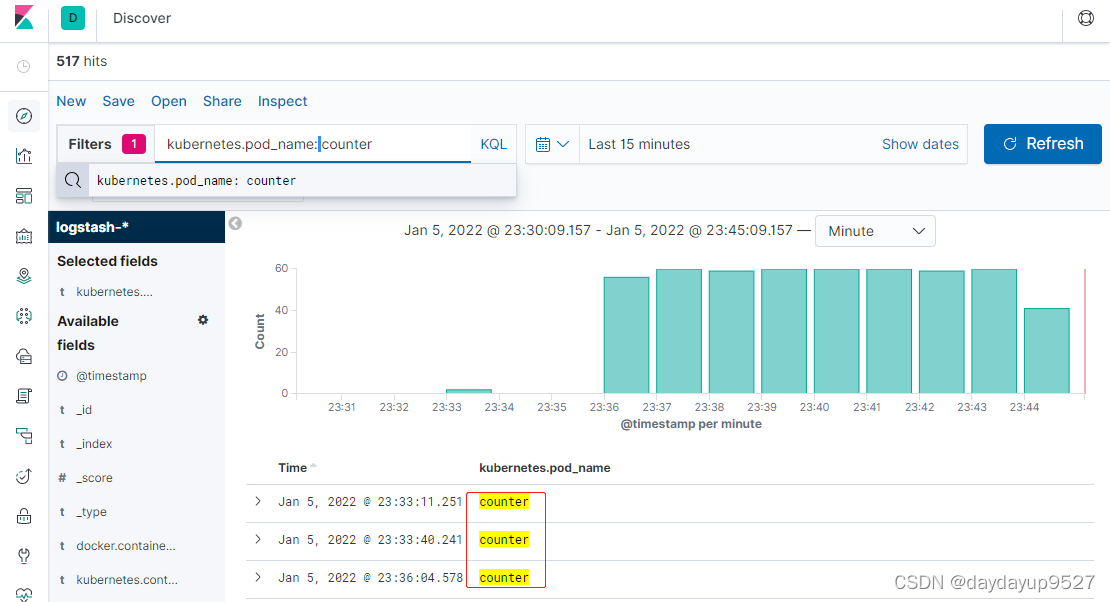

Back in Kibana, search for kubernetes.pod_name: counter
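The same search can be run against Elasticsearch directly, which helps when Kibana shows nothing and you want to know whether the documents exist at all. The sketch below builds a URI-search URL for the _search endpoint. search_url is a hypothetical helper name, and the index pattern logstash-* is an assumption about how this Fluentd image names its indices by default; adjust it if your indices are named differently.

```bash
#!/usr/bin/env bash
# Sketch only: search_url is a hypothetical helper name, and the
# "logstash-*" index pattern used below is an assumption.

# Build a URI-search URL: <base>/<index>/_search?q=<field>:<value>
# size=5 limits the number of hits; pretty formats the JSON response.
search_url() {
  local base=$1 index=$2 field=$3 value=$4
  printf '%s/%s/_search?q=%s:%s&size=5&pretty\n' "$base" "$index" "$field" "$value"
}

# URL for the counter Pod created above:
search_url http://localhost:9200 'logstash-*' kubernetes.pod_name counter

# Suggested usage with a port-forward to es-cluster-0 running (not executed here):
# curl -s "$(search_url http://localhost:9200 'logstash-*' kubernetes.pod_name counter)"
```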