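Exception description

I've only just started working with Hadoop and don't know MapReduce very well yet. Below is a record of a problem that kept me stuck for a whole day, and how I solved it.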

1. Run the MapReduce job

hadoop jar wc.jar hejie.zheng.mapreduce.wordcount2.WordCountDriver /input /output

2. The job throws an exception: Task failed task_1643869122334_0004_m_000000

[2022-02-03 15:10:59.255]Container killed on request. Exit code is 143

[2022-02-03 15:10:59.333]Container exited with a non-zero exit code 143.

2022-02-03 15:11:11,860 INFO mapreduce.Job: Task Id : attempt_1643869122334_0004_m_000000_2, Status : FAILED

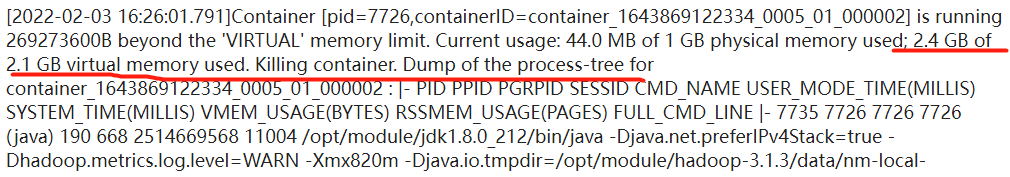

[2022-02-03 15:11:10.394]Container [pid=4334,containerID=container_1643869122334_0004_01_000004] is running 273537536B beyond the 'VIRTUAL' memory limit. Current usage: 68.0 MB of 1 GB physical memory used; 2.4 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1643869122334_0004_01_000004 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 4343 4334 4334 4334 (java) 543 250 2518933504 17149 /opt/module/jdk1.8.0_212/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/user01/appcache/application_1643869122334_0004/container_1643869122334_0004_01_000004/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.10.102 39922 attempt_1643869122334_0004_m_000000_2 4

|- 4334 4332 4334 4334 (bash) 2 6 9461760 270 /bin/bash -c /opt/module/jdk1.8.0_212/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/user01/appcache/application_1643869122334_0004/container_1643869122334_0004_01_000004/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.10.102 39922 attempt_1643869122334_0004_m_000000_2 4 1>/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004/stdout 2>/opt/module/hadoop-3.1.3/logs/userlogs/application_1643869122334_0004/container_1643869122334_0004_01_000004/stderr

[2022-02-03 15:11:10.532]Container killed on request. Exit code is 143

[2022-02-03 15:11:10.562]Container exited with a non-zero exit code 143.

2022-02-03 15:11:22,338 INFO mapreduce.Job: map 100% reduce 100%

2022-02-03 15:11:23,386 INFO mapreduce.Job: Job job_1643869122334_0004 failed with state FAILED due to: Task failed task_1643869122334_0004_m_000000

Job failed as tasks failed. failedMaps:1 failedReduces:0 killedMaps:0 killedReduces: 0

2022-02-03 15:11:23,653 INFO mapreduce.Job: Counters: 13
    Job Counters
        Failed map tasks=4
        Killed reduce tasks=1
        Launched map tasks=4
        Other local map tasks=3
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=30921
        Total time spent by all reduces in occupied slots (ms)=0
        Total time spent by all map tasks (ms)=30921
        Total vcore-milliseconds taken by all map tasks=30921
        Total megabyte-milliseconds taken by all map tasks=31663104
    Map-Reduce Framework
        CPU time spent (ms)=0
        Physical memory (bytes) snapshot=0
        Virtual memory (bytes) snapshot=0

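3. Solution

Solution first, then the cause.

The configuration below follows the one in a reference article.

First, go to the hadoop-3.1.3/etc/hadoop directory and run:

vim mapred-site.xml

Add the following properties inside the <configuration> element to set the memory used at run time (if the input files are large, adjust the values to your actual situation):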

<property>
  <name>mapreduce.map.memory.mb</name>
  <value>1024</value>
  <description>Memory requested by each map task container; default 1 GB</description>
</property>

<property>
  <name>mapreduce.map.java.opts</name>
  <value>-Xmx64m</value>
</property>

<property>
  <name>mapreduce.reduce.memory.mb</name>
  <value>1024</value>
</property>

<property>
  <name>mapreduce.reduce.java.opts</name>
  <value>-Xmx64m</value>
  <description>Usually set to about 85% of mapreduce.reduce.memory.mb</description>
</property>

<property>
  <name>mapreduce.task.io.sort.mb</name>
  <value>4</value>
</property>
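As a sanity check on the properties above, the following Python sketch parses them and applies two rules of thumb: each task's -Xmx must be smaller than its container's memory.mb, and the map-side sort buffer (mapreduce.task.io.sort.mb, default 100) must fit inside the map heap. It only reads the XML text and does not talk to a cluster; `load_properties`, `xmx_mb` and `check` are hypothetical helper names, not Hadoop APIs.

```python
import re
import xml.etree.ElementTree as ET

# The properties from the snippet above, wrapped in <configuration> so that
# ElementTree can parse them as a single document.
SNIPPET = """
<configuration>
  <property><name>mapreduce.map.memory.mb</name><value>1024</value></property>
  <property><name>mapreduce.map.java.opts</name><value>-Xmx64m</value></property>
  <property><name>mapreduce.reduce.memory.mb</name><value>1024</value></property>
  <property><name>mapreduce.reduce.java.opts</name><value>-Xmx64m</value></property>
  <property><name>mapreduce.task.io.sort.mb</name><value>4</value></property>
</configuration>
"""

_UNITS_MB = {"k": 1 / 1024, "m": 1, "g": 1024}


def load_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style config."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def xmx_mb(java_opts):
    """Extract the -Xmx value from a JVM option string, in MB (None if absent)."""
    match = re.search(r"-Xmx(\d+)([kKmMgG]?)", java_opts)
    if not match:
        return None
    size, unit = int(match.group(1)), match.group(2).lower()
    if unit == "":
        return size / (1024 * 1024)  # a bare number means bytes to the JVM
    return size * _UNITS_MB[unit]


def check(props):
    """Return a list of human-readable problems found in the memory settings."""
    problems = []
    for phase in ("map", "reduce"):
        container = int(props[f"mapreduce.{phase}.memory.mb"])
        heap = xmx_mb(props[f"mapreduce.{phase}.java.opts"])
        if heap is not None and heap >= container:
            problems.append(f"{phase}: -Xmx {heap:.0f} MB >= container {container} MB")
    sort_mb = int(props.get("mapreduce.task.io.sort.mb", "100"))
    map_heap = xmx_mb(props["mapreduce.map.java.opts"])
    if map_heap is not None and sort_mb >= map_heap:
        problems.append(f"io.sort.mb {sort_mb} MB does not fit in map heap {map_heap:.0f} MB")
    return problems


props = load_properties(SNIPPET)
print(check(props))  # [] means no problems for the values above
```

Swapping in, say, -Xmx2048m for the map task would produce one problem, because the heap would then exceed the 1024 MB container.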

4. Getting to the root of it

In fact, you can open http://hadoop103:8088/cluster to view the history of the job and find exactly where the problem occurred.
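Besides the web page, the ResourceManager exposes application status through its REST API (GET /ws/v1/cluster/apps/{appid}). Below is a minimal Python sketch, assuming the ResourceManager address hadoop103:8088 from the URL above and the application id from the logs; `app_info_url`, `summarize` and `fetch_summary` are hypothetical helpers. Note that the application-level diagnostics may contain only the job summary ("Task failed ..."); the per-container virtual-memory message lives in the task attempt's logs.

```python
import json
import urllib.request

# Assumed values: host from the URL above, application id from the logs.
RM_URL = "http://hadoop103:8088"
APP_ID = "application_1643869122334_0004"


def app_info_url(rm_url, app_id):
    """URL of the ResourceManager REST endpoint describing one application."""
    return f"{rm_url}/ws/v1/cluster/apps/{app_id}"


def summarize(app_json):
    """Pull state, final status and diagnostics out of the REST response body."""
    app = json.loads(app_json)["app"]
    return app["state"], app["finalStatus"], app.get("diagnostics", "")


def fetch_summary(rm_url=RM_URL, app_id=APP_ID):
    """Query a live ResourceManager (needs network access to it)."""
    with urllib.request.urlopen(app_info_url(rm_url, app_id), timeout=10) as resp:
        return summarize(resp.read().decode("utf-8"))
```

On a host that can reach the ResourceManager, `print(fetch_summary())` prints the state, final status and diagnostics; for the failed job above the final status should be FAILED.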

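As the log shows, the container used 2.4 GB of virtual memory, more than its 2.1 GB virtual memory limit, so the NodeManager killed it (exit code 143 means the process was terminated by SIGTERM: 128 + 15). The 2.1 GB limit is the container's 1 GB of physical memory multiplied by yarn.nodemanager.vmem-pmem-ratio, whose default value is 2.1.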
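The figures in the log can be reproduced with a few lines of Python. This sketch assumes the NodeManager computes the virtual limit as the container's physical limit times yarn.nodemanager.vmem-pmem-ratio (read as a 32-bit Java float, default 2.1) and compares it against the summed VMEM_USAGE(BYTES) of every process in the container's process tree; `as_float32` and `vmem_limit_bytes` are hypothetical helpers.

```python
import struct

GIB = 1024 ** 3


def as_float32(x):
    """Round a Python float to 32-bit precision, as a Java float would be."""
    return struct.unpack("f", struct.pack("f", x))[0]


def vmem_limit_bytes(container_mb, vmem_pmem_ratio=2.1):
    """Virtual memory limit = physical limit * yarn.nodemanager.vmem-pmem-ratio."""
    pmem_bytes = container_mb * 1024 * 1024
    return int(pmem_bytes * as_float32(vmem_pmem_ratio))


# VMEM_USAGE(BYTES) of the two processes in the container's process tree
tree_vmem = 2518933504 + 9461760  # java + bash
limit = vmem_limit_bytes(1024)    # mapreduce.map.memory.mb = 1024

print(f"used {tree_vmem / GIB:.1f} GB of {limit / GIB:.1f} GB")  # used 2.4 GB of 2.1 GB
print(f"over by {tree_vmem - limit} bytes")  # over by 273537536 bytes
```

The computed overshoot matches the "running 273537536B beyond the 'VIRTUAL' memory limit" line exactly, which supports reading 2.1 GB as 1 GB × 2.1.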

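The fix is therefore to change the memory settings in mapred-site.xml. Lowering -Xmx (the log shows the task JVM was started with -Xmx820m) shrinks the heap address space the JVM reserves up front, which brings the container's virtual memory back under the limit.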

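(1) mapreduce.map.memory.mb: default 1 GB, the memory allocated to each map task

(2) mapreduce.reduce.memory.mb: default 1 GB, the memory allocated to each reduce task

(3) yarn.app.mapreduce.am.resource.mb: default 1.5 GB, the memory allocated to the MR AppMaster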
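To see which values a given mapred-site.xml ends up with, you can overlay it on the defaults listed above. The Python sketch below is only an illustration: `effective_memory` is a hypothetical helper, the 2048 MB in the sample site file is a made-up value, and real Hadoop resolves properties through its own Configuration class, including fallbacks this sketch ignores. It also prints each container's virtual memory limit assuming the default vmem-pmem ratio of 2.1.

```python
import xml.etree.ElementTree as ET

# Defaults quoted in the list above, in MB; used when a property is absent.
DEFAULTS_MB = {
    "mapreduce.map.memory.mb": 1024,
    "mapreduce.reduce.memory.mb": 1024,
    "yarn.app.mapreduce.am.resource.mb": 1536,
}
VMEM_PMEM_RATIO = 2.1  # default yarn.nodemanager.vmem-pmem-ratio


def effective_memory(mapred_site_xml):
    """Overlay the values set in a mapred-site.xml on top of the defaults."""
    root = ET.fromstring(mapred_site_xml)
    configured = {
        p.findtext("name"): int(p.findtext("value"))
        for p in root.iter("property")
        if p.findtext("name") in DEFAULTS_MB
    }
    return {**DEFAULTS_MB, **configured}


# Hypothetical site file that only overrides the map container size.
site = """<configuration>
  <property><name>mapreduce.map.memory.mb</name><value>2048</value></property>
</configuration>"""

for name, mb in effective_memory(site).items():
    # each container's virtual memory limit is its memory size times the ratio
    print(f"{name} = {mb} MB (virtual limit about {mb * VMEM_PMEM_RATIO / 1024:.2f} GB)")
```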

JVM parameter defaults

The defaults of the following JVM parameters are easy to overlook, so I'm noting them here for quick reference.

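-Xms: by default 1/64 of physical memory, minimum 1 MB

-Xmx: by default 1/4 of physical memory (older or client JVMs cap it at 1 GB)

-Xmn: not set by default; the young generation size then follows -XX:NewRatio (with the default of 2, the young generation is 1/3 of the heap)

-XX:NewRatio: default 2

-XX:SurvivorRatio: default 8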
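A small Python sketch of how those defaults translate into actual sizes, assuming the simplified rules listed above (real HotSpot ergonomics also depend on the JVM version, the selected garbage collector and any container limits). `default_heap_mb` is a hypothetical helper and the 8 GB machine is an example value.

```python
def default_heap_mb(physical_mb, cap_at_1g=False):
    """Rough ergonomics: -Xms = 1/64 of RAM (at least 1 MB), -Xmx = 1/4 of RAM."""
    initial = max(physical_mb / 64, 1)
    maximum = physical_mb / 4
    if cap_at_1g:
        maximum = min(maximum, 1024)  # behaviour of older/client JVMs
    return initial, maximum


xms, xmx = default_heap_mb(8192)  # a machine with 8 GB of RAM
young = xmx / (2 + 1)             # default NewRatio=2: young gen is 1/3 of the heap
eden_share = 8 / (8 + 2)          # default SurvivorRatio=8: Eden is 8/10 of the young gen
print(xms, xmx)  # 128.0 2048.0
print(round(young), round(young * eden_share))
```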

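- -Xms: initial heap size, e.g. 4096M

- -Xmx: maximum heap size, e.g. 4096M. If Xms < Xmx, the JVM by default grows the heap toward Xmx when free heap drops below 40%; this threshold can be changed with -XX:MinHeapFreeRatio

- -XX:NewSize=n: sets the (initial) young generation size, e.g. -XX:NewSize=1024m

- -Xmn1024M: sets the young generation size to 1024 MB

- -XX:NewRatio=n: ratio of the old generation to the young generation. Suggested values are 3 to 5; e.g. 4 means old:young = 4:1, so the young generation takes 1/5 of the combined young and old generations

- -XX:SurvivorRatio=n: ratio of Eden to one Survivor space in the young generation. Suggested values are 3 to 5. Note that there are two Survivor spaces: e.g. 8 means 2*Survivor:Eden = 2:8, so each Survivor space takes 1/10 of the young generation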
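The ratios in the list can be checked with a short Python sketch using the list's own example values (-Xmx4096M, NewRatio=4, SurvivorRatio=8). `heap_layout` and `next_committed_heap` are hypothetical helpers; the second one is a deliberately simplified model of the MinHeapFreeRatio rule, while the real collector also weighs MaxHeapFreeRatio, generation boundaries and other ergonomics.

```python
def heap_layout(heap_mb, new_ratio, survivor_ratio):
    """Split a heap into generations using NewRatio and SurvivorRatio."""
    young = heap_mb / (new_ratio + 1)        # NewRatio = old : young
    old = heap_mb - young
    survivor = young / (survivor_ratio + 2)  # SurvivorRatio = Eden : ONE survivor
    eden = young - 2 * survivor              # there are two survivor spaces
    return {"young": young, "old": old, "eden": eden, "survivor": survivor}


def next_committed_heap(used_mb, committed_mb, xmx_mb, min_free_ratio=0.40):
    """Simplified MinHeapFreeRatio model: grow the committed heap when free space
    drops below min_free_ratio, but never beyond -Xmx."""
    free_ratio = (committed_mb - used_mb) / committed_mb
    if free_ratio >= min_free_ratio:
        return committed_mb
    # smallest committed size at which min_free_ratio of it would be free
    return min(used_mb / (1 - min_free_ratio), xmx_mb)


# The example values from the list above: -Xmx4096M, NewRatio=4, SurvivorRatio=8
for region, mb in heap_layout(4096, 4, 8).items():
    print(f"{region:>8}: {mb:8.2f} MB")
```

This gives a young generation of 819.20 MB (1/5 of the heap), an old generation of 3276.80 MB, and two Survivor spaces of 81.92 MB each (1/10 of the young generation), leaving 655.36 MB for Eden.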

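-Xmx64M: maximum heap size of 64 MB

For a deeper look at memory settings, see the article "Understanding MapReduce memory parameters under YARN & XML parameter configuration" (Yarn下Mapreduce的内存参数理解&xml参数配置).

Adjust the parameters above to your actual needs to get the best results.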