I. Elasticsearch and Kibana

1. Pull the images

docker pull elasticsearch:7.4.2

docker pull kibana:7.4.2

2. Create the directories and grant permissions

mkdir -p /mydata/elasticsearch/data

mkdir -p /mydata/elasticsearch/config

chmod 777 /mydata/elasticsearch/config/

chmod 777 /mydata/elasticsearch/data/

3. Start Elasticsearch (-d runs the container in the background, -p maps ports, -e sets environment variables, -v mounts a host directory)

docker run -d --name elasticsearch -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xms128m -Xmx512m" -v /mydata/elasticsearch/data:/usr/share/elasticsearch/data -v /mydata/elasticsearch/plugins:/usr/share/elasticsearch/plugins elasticsearch:7.4.2
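Elasticsearch usually needs some time after docker run returns before it answers on port 9200. A minimal sketch of a readiness check follows, assuming curl is installed on the host; wait_for_http is a hypothetical helper name, and the attempt count and delay are illustrative defaults.

```shell
#!/bin/sh
# Poll an HTTP endpoint until it answers or the attempts run out.
# wait_for_http is a hypothetical helper, not part of Docker or Elasticsearch.
wait_for_http() {
    url=$1
    attempts=${2:-30}   # assumed default: 30 tries
    delay=${3:-2}       # assumed default: 2 seconds between tries
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -s: silent, -f: fail on HTTP errors, -o /dev/null: discard the body
        if curl -sf -o /dev/null "$url"; then
            echo "up after $i attempt(s): $url"
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "gave up after $attempts attempt(s): $url" >&2
    return 1
}
```

For example, wait_for_http http://localhost:9200 30 2 blocks until the node responds or about a minute has passed.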

4. Troubleshooting

4.1 Container name already in use

Error response from daemon: Conflict. The container name "/kibana" is already in use by container "e7670a321f758a36d13963cd092aad30132be59d3a7f083d8fbbfc0c27d792ef". You have to remove (or rename) that container to be able to reuse that name.

Run docker ps -a to find the existing container and remove it with docker rm.

Then start the container again.
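The cleanup for a name conflict can be sketched as a small function. This is an illustration and not the only way to do it; remove_container_if_exists is a hypothetical helper name, while docker container inspect and docker rm -f are standard Docker CLI commands.

```shell
#!/bin/sh
# Remove a container by name if it exists, so the name can be reused.
# remove_container_if_exists is a hypothetical helper name.
remove_container_if_exists() {
    name=$1
    # docker container inspect exits non-zero when no such container exists.
    if docker container inspect "$name" >/dev/null 2>&1; then
        # -f also stops the container if it is still running.
        docker rm -f "$name"
    else
        echo "no container named $name"
    fi
}
```

Calling remove_container_if_exists kibana before docker run --name kibana avoids the conflict error. Note that this discards the old container, which is fine here because Kibana keeps its state in Elasticsearch.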

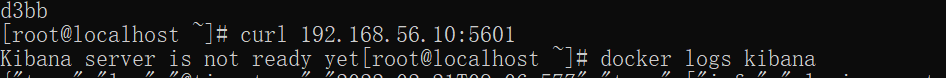

4.2 Kibana fails to start

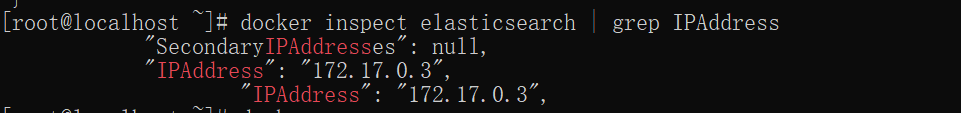

Fix: look up the internal IP address that Docker assigned to the Elasticsearch container:

docker inspect elasticsearch | grep IPAddress

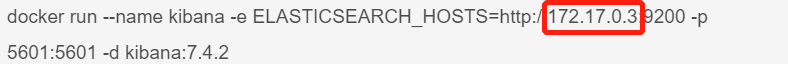

Start Kibana with that internal address (172.17.0.3 here; substitute the address reported on your machine):

docker run --name kibana -e ELASTICSEARCH_HOSTS=http://172.17.0.3:9200 -p 5601:5601 -d kibana:7.4.2
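Reading the address by eye and pasting it into the command can be avoided. The sketch below assumes the Elasticsearch container is attached to a single network (the default bridge); with several networks the template would print the addresses run together. container_ip and kibana_hosts_url are hypothetical helper names.

```shell
#!/bin/sh
# Print the IP address Docker assigned to a container.
# container_ip and kibana_hosts_url are hypothetical helper names.
container_ip() {
    # The Go template walks the container's networks and prints each IP.
    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Build the value for ELASTICSEARCH_HOSTS from the container's IP.
kibana_hosts_url() {
    ip=$(container_ip "$1") || return 1
    [ -n "$ip" ] || return 1
    echo "http://${ip}:9200"
}
```

With these defined, Kibana could be started with docker run --name kibana -e ELASTICSEARCH_HOSTS="$(kibana_hosts_url elasticsearch)" -p 5601:5601 -d kibana:7.4.2. The address may change when the Elasticsearch container is recreated, so the lookup has to be repeated in that case.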

II. IK analyzer

1. Download the IK analyzer archive into /mydata/elasticsearch/plugins

cd /mydata/elasticsearch/plugins

yum install wget

wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.2/elasticsearch-analysis-ik-7.4.2.zip

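2. Unzip it into an ik directory

yum install unzip

unzip elasticsearch-analysis-ik-7.4.2.zip -d ik

Delete the zip:

rm -f elasticsearch-analysis-ik-7.4.2.zip

3. Make sure the extracted files are inside the ik directory, then restart Elasticsearch

docker restart elasticsearch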
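After the restart it can help to confirm that the node actually loaded the plugin. A minimal sketch using the _cat/plugins endpoint follows; has_plugin is a hypothetical helper name, and the assumption that the plugin reports itself under a name containing analysis-ik should be checked against the real output.

```shell
#!/bin/sh
# Succeed if the node lists a plugin whose line matches the given name.
# has_plugin is a hypothetical helper name.
has_plugin() {
    plugin=$1
    base_url=${2:-http://localhost:9200}   # assumed default address
    # _cat/plugins prints one line per node and plugin.
    curl -s "$base_url/_cat/plugins" | grep -q "$plugin"
}
```

For example, has_plugin analysis-ik && echo "IK plugin loaded". If nothing matches, running curl http://localhost:9200/_cat/plugins by hand shows what the node reports.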

Before installing the analyzer (no analyzer is specified, so the default standard analyzer is used):

    POST _analyze
    {
      "text": "北京冬奥会,加油!"
    }

Result:

    {
      "tokens" : [
        {
          "token" : "北",
          "start_offset" : 0,
          "end_offset" : 1,
          "type" : "<IDEOGRAPHIC>",
          "position" : 0
        },
        {
          "token" : "京",
          "start_offset" : 1,
          "end_offset" : 2,
          "type" : "<IDEOGRAPHIC>",
          "position" : 1
        },
        {
          "token" : "冬",
          "start_offset" : 2,
          "end_offset" : 3,
          "type" : "<IDEOGRAPHIC>",
          "position" : 2
        },
        {
          "token" : "奥",
          "start_offset" : 3,
          "end_offset" : 4,
          "type" : "<IDEOGRAPHIC>",
          "position" : 3
        },
        {
          "token" : "会",
          "start_offset" : 4,
          "end_offset" : 5,
          "type" : "<IDEOGRAPHIC>",
          "position" : 4
        },
        {
          "token" : "加",
          "start_offset" : 6,
          "end_offset" : 7,
          "type" : "<IDEOGRAPHIC>",
          "position" : 5
        },
        {
          "token" : "油",
          "start_offset" : 7,
          "end_offset" : 8,
          "type" : "<IDEOGRAPHIC>",
          "position" : 6
        }
      ]
    }

After installing the analyzer:

    POST _analyze
    {
      "analyzer": "ik_smart",
      "text": "北京冬奥会,加油!"
    }

Result:

    {
      "tokens" : [
        {
          "token" : "北京",
          "start_offset" : 0,
          "end_offset" : 2,
          "type" : "CN_WORD",
          "position" : 0
        },
        {
          "token" : "冬奥会",
          "start_offset" : 2,
          "end_offset" : 5,
          "type" : "CN_WORD",
          "position" : 1
        },
        {
          "token" : "加油",
          "start_offset" : 6,
          "end_offset" : 8,
          "type" : "CN_WORD",
          "position" : 2
        }
      ]
    }
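The same _analyze requests can also be sent from the shell instead of the Kibana console. The sketch below assumes curl is available and that Elasticsearch is reachable at http://localhost:9200. analyze_body, analyze and the ES_URL variable are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# Build the JSON body for an _analyze request.
# analyze_body and analyze are hypothetical helper names.
analyze_body() {
    analyzer=$1
    text=$2
    # Assumes $text has no double quotes or backslashes; real input
    # would need proper JSON escaping.
    printf '{"analyzer":"%s","text":"%s"}' "$analyzer" "$text"
}

# Send the request; ES_URL is a hypothetical override for the base address.
analyze() {
    curl -s -X POST "${ES_URL:-http://localhost:9200}/_analyze" \
        -H 'Content-Type: application/json' \
        -d "$(analyze_body "$1" "$2")"
}
```

For example, analyze standard "北京冬奥会,加油!" and analyze ik_smart "北京冬奥会,加油!" correspond to the two console requests above. The IK plugin also provides ik_max_word, a finer-grained analyzer that can be tried the same way.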