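Configuring Sqoop for Dameng (DM)

My Hadoop cluster ran on three virtual machines (hadoop01, hadoop02, hadoop03). The hadoop03 VM was lost, so I cloned a new node, hadoop05, and set it up as a single-node environment for testing.

Preface

Because hadoop05 is a cloned node, back up its existing configuration before changing anything.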

#Path of the configuration files on hadoop05

/home/hadoop01/hadoop/apps/hadoop-2.7.6/etc

#Copy the hadoop directory inside it as a backup

[hadoop01@hadoop05 etc]$ ls

hadoop hadoop_20220201
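The backup step above amounts to a recursive copy with a date suffix. A minimal sketch follows; `backup_conf` is a hypothetical helper name, and the `YYYYMMDD` suffix format is assumed from the directory name in the listing.

```shell
# Hypothetical helper: copy a config directory to <dir>_<YYYYMMDD> next to it.
backup_conf() {
    src="${1%/}"                         # strip a trailing slash, if any
    dest="${src}_${2:-$(date +%Y%m%d)}"  # optional second argument overrides the date suffix
    if [ -e "$dest" ]; then
        echo "backup already exists: $dest" >&2
        return 1
    fi
    cp -rp "$src" "$dest" && echo "$dest"
}

# Example (path taken from this post):
# backup_conf /home/hadoop01/hadoop/apps/hadoop-2.7.6/etc/hadoop 20220201
```

The helper refuses to overwrite an existing backup, so an earlier snapshot is never silently replaced.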

Environment

| Item | Value |
|---|---|
| Virtual machine | hadoop05 |
| IP | 192.168.142.241 |
| OS user | hadoop01 |
| Hadoop version | hadoop-2.7.6 |
| Sqoop version | sqoop-1.4.6 |
| Dameng version | dm8 |

Hadoop pseudo-distributed installation

1. Check the JDK version

[hadoop01@hadoop05 hadoop]$ java -version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

Edit hadoop-env.sh and set the JDK installation directory:

export JAVA_HOME=/usr/local/jdk1.8.0_221
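If you prefer not to open an editor, the same change can be scripted. The sketch below is only one way to do it: `set_java_home` is a hypothetical helper (not part of Hadoop), and it assumes the JDK path contains no `|` or `&` characters, since those are special to the `sed` expression.

```shell
# Hypothetical helper: set JAVA_HOME in a hadoop-env.sh style file.
# Replaces an existing "export JAVA_HOME=..." line, or appends one if none exists.
set_java_home() {
    env_file="$1"
    jdk_dir="$2"
    if grep -q '^export JAVA_HOME=' "$env_file"; then
        scratch="$(mktemp)"
        # Write to a scratch file first, then copy back so file permissions are kept
        sed "s|^export JAVA_HOME=.*|export JAVA_HOME=${jdk_dir}|" "$env_file" > "$scratch" \
            && cat "$scratch" > "$env_file"
        rm -f "$scratch"
    else
        echo "export JAVA_HOME=${jdk_dir}" >> "$env_file"
    fi
}

# Example (paths taken from this post):
# set_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /usr/local/jdk1.8.0_221
```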

2. Edit core-site.xml

<configuration>

<!-- Base directory where Hadoop stores its data -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop01/data/hadoopdata/</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop05:9000</value>

</property>

</configuration>

3. Edit the slaves file

hadoop05

4. Hadoop environment variables

[hadoop01@hadoop05 hadoop]$ echo $HADOOP_HOME

/home/hadoop01/hadoop/apps/hadoop-2.7.6

[hadoop01@hadoop05 hadoop]$ pwd

/home/hadoop01/hadoop/apps/hadoop-2.7.6/etc/hadoop

[hadoop01@hadoop05 hadoop]$ echo $PATH

/usr/lib64/qt-3.3/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/home/hadoop01/hadoop/apps/hbase-1.2.6/bin:/usr/local/jdk1.8.0_221/bin:/home/hadoop01/hadoop/apps/hadoop-2.7.6/bin:/home/hadoop01/hadoop/apps/hadoop-2.7.6/sbin:/home/hadoop01/hadoop/apps/zookeeper-3.4.10/bin:/home/hadoop01/hadoop/apps/apache-hive-2.3.2-bin/bin:/home/hadoop01/hadoop/apps/flink-1.7.2/bin:/home/hadoop01/bin:/home/hadoop01/hadoop/apps/scala-2.11.8/bin:/home/hadoop01/hadoop/apps/spark-2.3.1-bin-hadoop2.7/bin:/home/hadoop01/hadoop/apps/spark-2.3.1-bin-hadoop2.7/sbin:/home/hadoop01/hadoop/apps/kafka/bin

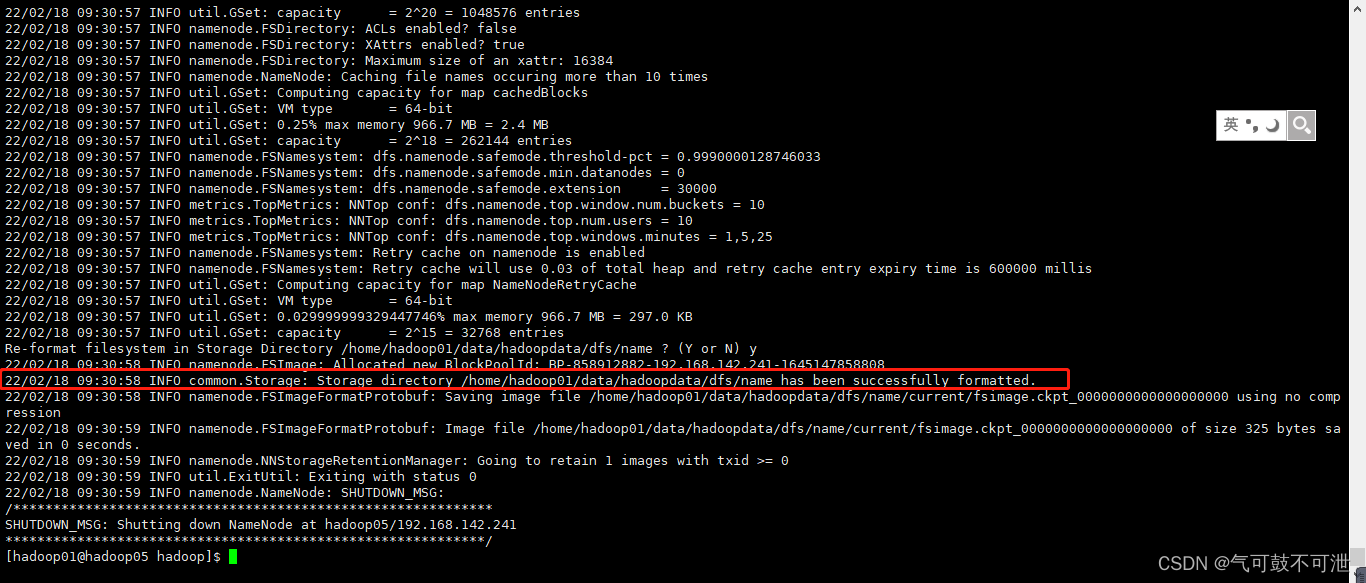

5. Format the NameNode

hdfs namenode -format

#A successful run looks like the screenshot below

6. Start HDFS

[hadoop01@hadoop05 hadoop-2.7.6]$ start-dfs.sh

Starting namenodes on [hadoop05]

hadoop05: starting namenode, logging to /home/hadoop01/hadoop/apps/hadoop-2.7.6/logs/hadoop-hadoop01-namenode-hadoop05.out

hadoop05: starting datanode, logging to /home/hadoop01/hadoop/apps/hadoop-2.7.6/logs/hadoop-hadoop01-datanode-hadoop05.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop01/hadoop/apps/hadoop-2.7.6/logs/hadoop-hadoop01-secondarynamenode-hadoop05.out

[hadoop01@hadoop05 hadoop-2.7.6]$ jps

5424 NameNode

5558 DataNode

5838 Jps

5726 SecondaryNameNode

7. Start YARN

[hadoop01@hadoop05 hadoop-2.7.6]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/hadoop01/hadoop/apps/hadoop-2.7.6/logs/yarn-hadoop01-resourcemanager-hadoop05.out

hadoop05: starting nodemanager, logging to /home/hadoop01/hadoop/apps/hadoop-2.7.6/logs/yarn-hadoop01-nodemanager-hadoop05.out

[hadoop01@hadoop05 hadoop-2.7.6]$ jps

5424 NameNode

6000 NodeManager

6212 Jps

5558 DataNode

5895 ResourceManager

5726 SecondaryNameNode

8. Verification

1) Check the running processes

[hadoop01@hadoop05 hadoop-2.7.6]$ jps

5424 NameNode

6000 NodeManager

6212 Jps

5558 DataNode

5895 ResourceManager

5726 SecondaryNameNode

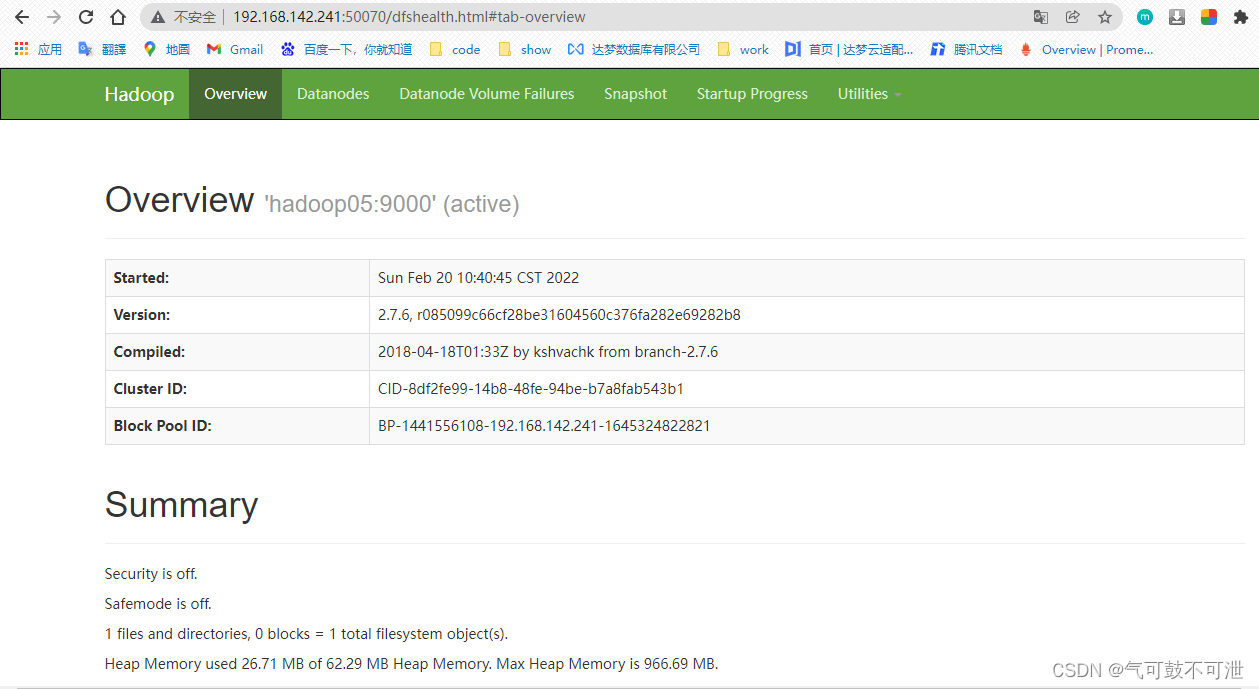
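Reading the `jps` listing by eye works for one node, but the check is easy to script. The sketch below assumes the five daemons shown above are the ones you expect; `check_daemons` is a hypothetical helper that reads `jps` output on standard input.

```shell
# Hypothetical helper: read `jps` output on stdin and report any missing daemon.
# The expected list matches the five processes shown in this post.
check_daemons() {
    jps_out="$(cat)"
    missing=""
    for name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # jps prints "<pid> <MainClass>"; compare the second field exactly,
        # so that SecondaryNameNode does not count as NameNode
        if ! printf '%s\n' "$jps_out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    echo "all daemons running"
}

# Usage on the node itself:
# jps | check_daemons
```

The function returns a non-zero status when something is missing, so it can also gate a later step in a script.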

2) Open the web UI

#HDFS NameNode web UI

http://192.168.142.241:50070/

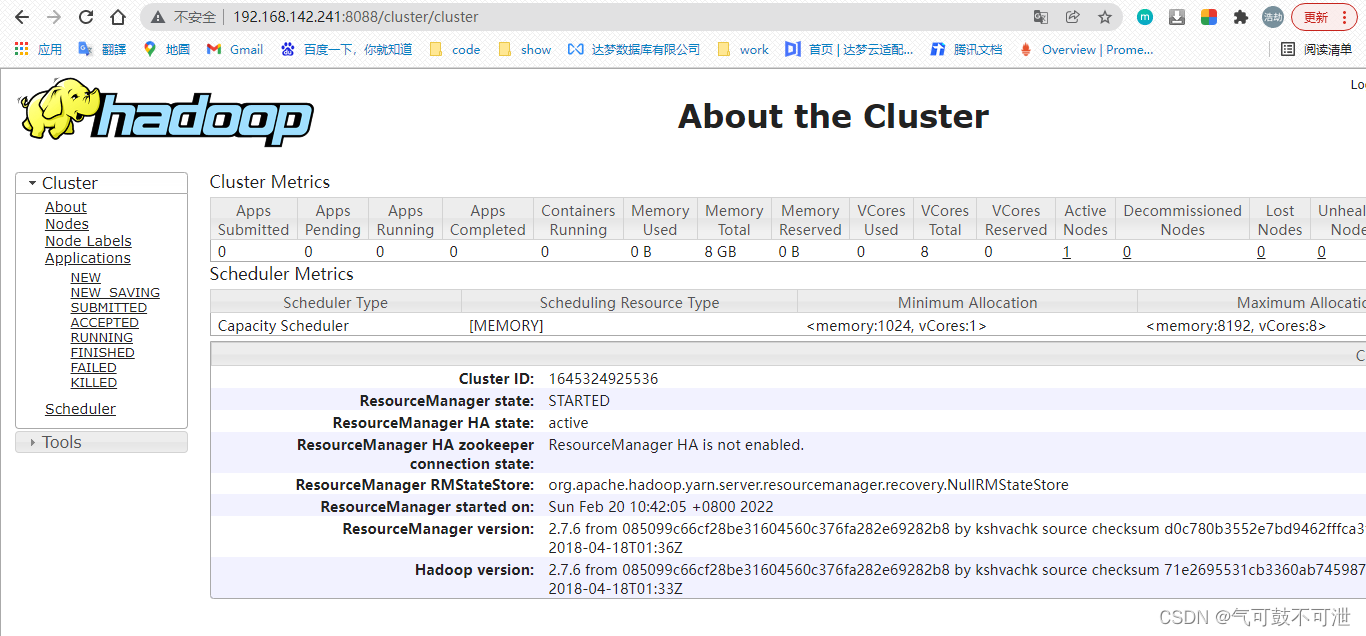

3) YARN ResourceManager web UI (MapReduce jobs)

http://192.168.142.241:8088/cluster/cluster

Configuring the Sqoop environment

1. Extract the installation package

cd

tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha hadoop/apps/sqoop-1.4.6

2. Configure environment variables

1) Add the Sqoop environment variables to ~/.bash_profile

export SQOOP_HOME=/home/hadoop01/hadoop/apps/sqoop-1.4.6

export PATH=$PATH:$SQOOP_HOME/bin

2) Verify

[hadoop01@hadoop05 conf]$ source ~/.bash_profile

[hadoop01@hadoop05 conf]$ sqoop version

Warning: /home/hadoop01/hadoop/apps/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop01/hadoop/apps/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

22/02/20 10:57:08 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

Sqoop 1.4.6

git commit id c0c5a81723759fa575844a0a1eae8f510fa32c25

Compiled by root on Mon Apr 27 14:38:36 CST 2015

3. Configure the environment variables Sqoop needs at runtime

cd $SQOOP_HOME/conf

cp sqoop-env-template.sh sqoop-env.sh

vim sqoop-env.sh

#Edit the following environment variables

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/home/hadoop01/hadoop/apps/hadoop-2.7.6

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop01/hadoop/apps/hadoop-2.7.6

4. Copy the Dameng JDBC driver into the $SQOOP_HOME/lib directory
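The post does not show the copy command for this step, so here is a hedged sketch. It assumes the driver jar is `DmJdbcDriver18.jar` (the JDK 1.8 build shipped with DM8) and that it sits under `drivers/jdbc` of the DM installation; both the file name and the `/opt/dmdbms` path are assumptions, so adjust them to your setup. `install_driver` is a hypothetical helper.

```shell
# Hypothetical helper: copy a JDBC driver jar into Sqoop's lib directory.
# The second argument is optional and defaults to $SQOOP_HOME/lib.
install_driver() {
    jar="$1"
    libdir="${2:-${SQOOP_HOME:?SQOOP_HOME is not set}/lib}"
    [ -f "$jar" ] || { echo "driver jar not found: $jar" >&2; return 1; }
    [ -d "$libdir" ] || { echo "lib directory not found: $libdir" >&2; return 1; }
    # Print the installed path on success so the result is easy to confirm
    cp "$jar" "$libdir/" && ls "$libdir/$(basename "$jar")"
}

# Example; the source path is an assumption:
# install_driver /opt/dmdbms/drivers/jdbc/DmJdbcDriver18.jar
```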

Importing and exporting data with Sqoop

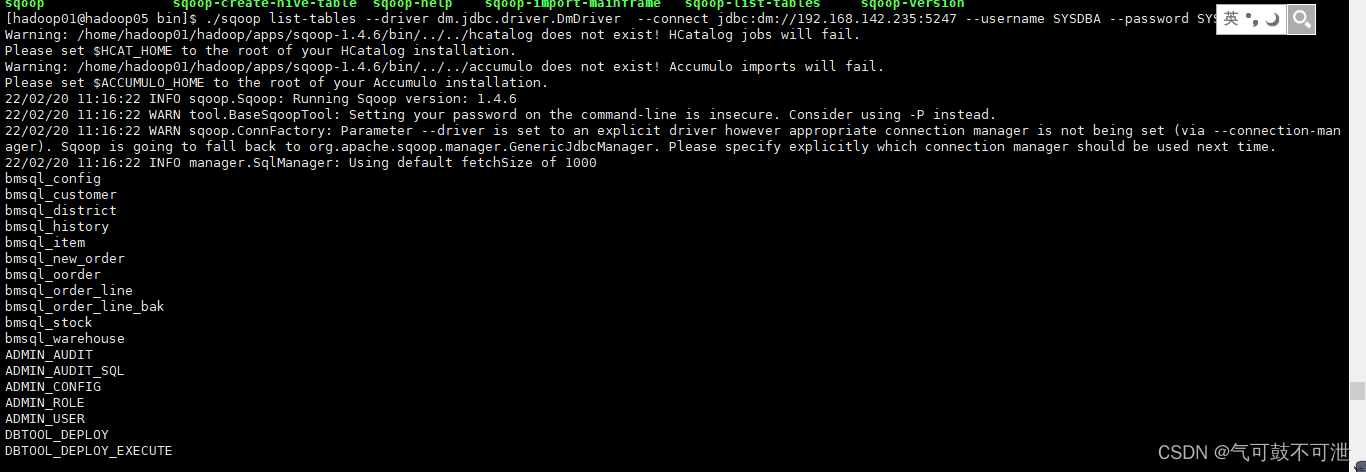

List all tables owned by the SYSDBA user

./sqoop list-tables --driver dm.jdbc.driver.DmDriver --connect jdbc:dm://192.168.142.235:5247 --username SYSDBA --password SYSDBA

Sqoop import (from DM to HDFS)

1) Run the commands

#select * from "SYSDBA"."BAK_DMINI_210714";

./sqoop import --driver dm.jdbc.driver.DmDriver --connect jdbc:dm://192.168.142.235:5247 --username SYSDBA --password SYSDBA --table BAK_DMINI_210714 -m 1

./sqoop import --driver dm.jdbc.driver.DmDriver --connect jdbc:dm://192.168.142.235:5247 --username SYSDBA --password SYSDBA --table BAK_DMINI_210715 -m 1

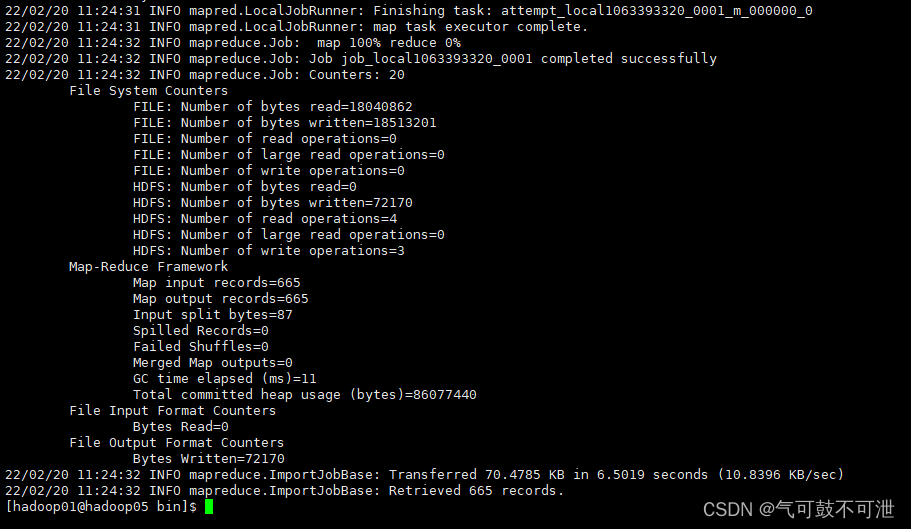
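The commands above pass the password on the command line and let Sqoop pick the output directory. As a variation, the sketch below uses Sqoop's `--password-file` and `--target-dir` options. `dm_import` is a hypothetical wrapper, and the default password file location `/user/hadoop01/.dm.password` is an assumption (Sqoop reads that path from the Hadoop file system unless it is given a `file://` URI).

```shell
# Hypothetical wrapper around `sqoop import` for the DM connection used in this post.
# SQOOP_BIN can be overridden (for example with `echo`) to preview the command line.
dm_import() {
    table="$1"
    target_dir="$2"
    "${SQOOP_BIN:-sqoop}" import \
        --driver dm.jdbc.driver.DmDriver \
        --connect jdbc:dm://192.168.142.235:5247 \
        --username SYSDBA \
        --password-file "${DM_PASSWORD_FILE:-/user/hadoop01/.dm.password}" \
        --table "$table" \
        --target-dir "$target_dir" \
        -m 1
}

# Preview without running anything:
# SQOOP_BIN=echo dm_import BAK_DMINI_210714 /user/hadoop01/BAK_DMINI_210714
```

Keeping the password in a file readable only by the job owner avoids leaving it in shell history and process listings.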

2) Result

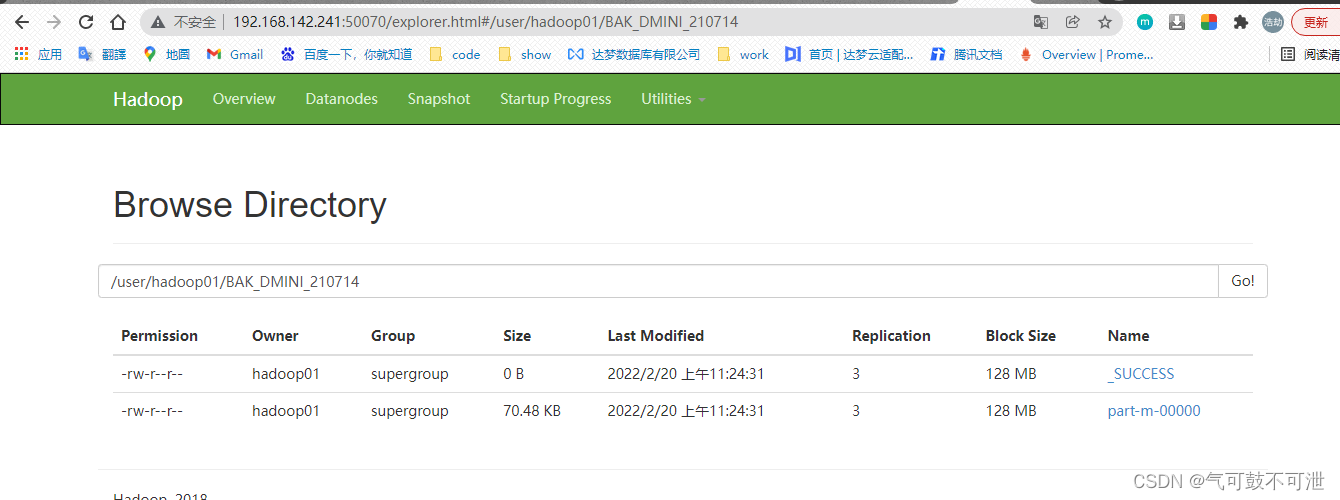

3) List the output directory in HDFS

hadoop fs -ls /user/hadoop01/BAK_DMINI_210714

4) View the file contents

hadoop fs -cat /user/hadoop01/BAK_DMINI_210714/part-m-00000

![image-20220220113003291](https://img-blog.csdnimg.cn/903e735c4c8c48d4ba27eeeff106c6d8.png?x-oss-process=image/watermark,type_d3F5LXplbmhlaQ,shadow_50,text_Q1NETiBA5rCU5Y-v6byT5LiN5Y-v5rOE,size_20,color_FFFFFF,t_70,g_se,x_16)
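For larger tables, printing a whole part file is impractical, so a quick look at the first few rows is usually enough. The sketch below assumes a map-only import whose output files are named `part-m-*`, as in the listing above; `peek_import` is a hypothetical helper.

```shell
# Hypothetical helper: show the first N lines of a Sqoop import directory on HDFS.
# HADOOP_BIN can be overridden (for example with `echo`) to preview the command.
peek_import() {
    import_dir="${1%/}"   # strip a trailing slash, if any
    max_lines="${2:-10}"  # default to the first 10 lines
    # The glob is quoted so that Hadoop, not the local shell, expands it
    "${HADOOP_BIN:-hadoop}" fs -cat "$import_dir/part-m-*" | head -n "$max_lines"
}

# Example:
# peek_import /user/hadoop01/BAK_DMINI_210714 5
```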

Stopping YARN and HDFS

[hadoop01@hadoop05 lib]$ stop-yarn.sh

stopping yarn daemons

stopping resourcemanager

hadoop05: stopping nodemanager

no proxyserver to stop

[hadoop01@hadoop05 lib]$ jps

5424 NameNode

5558 DataNode

11371 Jps

5726 SecondaryNameNode

[hadoop01@hadoop05 lib]$ stop-dfs.sh

Stopping namenodes on [hadoop05]

hadoop05: stopping namenode

hadoop05: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

[hadoop01@hadoop05 lib]$ jps

11722 Jps