Part 7: Deploying Spark on YARN

Configuration

tar xf spark-2.3.2-bin-hadoop2.7.tgz

cd /opt/modules/spark-2.3.2-bin-hadoop2.7/conf

Edit the configuration files

cp spark-env.sh.template spark-env.sh

cp slaves.template slaves

vi slaves

Add localhost

vi spark-env.sh

export JAVA_HOME=/opt/modules/jdk1.8.0_261

export SPARK_HOME=/opt/modules/spark-2.3.2-bin-hadoop2.7

# Hostname or IP of the Spark master node

export SPARK_MASTER_IP=hadoop

# Port of the Spark master node

export SPARK_MASTER_PORT=7077
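
The two editor steps above can also be scripted. The following is a minimal sketch, not part of the original procedure: `write_spark_conf` is a hypothetical helper name, and it overwrites `slaves` and `spark-env.sh` rather than editing the copies of the templates (the templates contain only comments, so the effective settings should be the same).

```shell
# Hypothetical helper: write conf/slaves and conf/spark-env.sh non-interactively.
# The default path is the install directory used in this guide.
write_spark_conf() {
  conf_dir="${1:-/opt/modules/spark-2.3.2-bin-hadoop2.7/conf}"

  # One worker host per line; this single-node setup lists only localhost.
  echo "localhost" > "$conf_dir/slaves"

  # The quoted 'EOF' keeps the heredoc literal (no variable expansion).
  cat > "$conf_dir/spark-env.sh" <<'EOF'
export JAVA_HOME=/opt/modules/jdk1.8.0_261
export SPARK_HOME=/opt/modules/spark-2.3.2-bin-hadoop2.7
# Hostname or IP of the Spark master node
export SPARK_MASTER_IP=hadoop
# Port of the Spark master node
export SPARK_MASTER_PORT=7077
EOF
}
```

Calling `write_spark_conf` with no argument targets the conf directory shown above; passing another directory as the first argument is useful for a dry run.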

Start:

cd /opt/modules/spark-2.3.2-bin-hadoop2.7/sbin

./start-all.sh
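
`start-all.sh` logs in to every host listed in `slaves` over SSH, so without key-based login it prompts for a password (the session log in this part shows that prompt, and a `Permission denied` failure on the first attempt). A minimal sketch of enabling passwordless SSH to localhost for the current user follows. `setup_local_ssh` is a hypothetical helper name, and the RSA key type and 4096-bit size are assumptions, not something this guide prescribes.

```shell
# Hypothetical helper: create a key pair if none exists and authorize it locally.
setup_local_ssh() {
  ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"

  # Generate a key with an empty passphrase only when no key is present yet.
  [ -f "$ssh_dir/id_rsa" ] || ssh-keygen -q -t rsa -b 4096 -N "" -f "$ssh_dir/id_rsa"

  # Append the public key to authorized_keys once; repeated runs do not duplicate it.
  pub=$(cat "$ssh_dir/id_rsa.pub")
  touch "$ssh_dir/authorized_keys"
  grep -qxF "$pub" "$ssh_dir/authorized_keys" || echo "$pub" >> "$ssh_dir/authorized_keys"

  # sshd ignores authorized_keys files with loose permissions.
  chmod 600 "$ssh_dir/authorized_keys"
}
```

After running `setup_local_ssh`, `ssh localhost true` should return without asking for a password, and `./start-all.sh` should then start the Worker unattended.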

#vim /etc/profile

# Add the Spark environment variables: append them to PATH and export them

#source /etc/profile

export SPARK_HOME="/opt/modules/spark-2.3.2-bin-hadoop2.7"

export SPARK_MASTER_IP=master

export SPARK_EXECUTOR_MEMORY=1G

export PATH=$SPARK_HOME/bin:$PATH

The session log follows.

[hadoop@bigdata-senior01 ~]$ cd /opt/modules/

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ pwd

/opt/modules

[hadoop@bigdata-senior01 modules]$ tar xf scala-2.12.7.tgz

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$

[hadoop@bigdata-senior01 modules]$ cd scala-2.12.7/

[hadoop@bigdata-senior01 scala-2.12.7]$ ll

total 0

drwxrwxr-x 2 hadoop hadoop 162 Sep 27 2018 bin

drwxrwxr-x 4 hadoop hadoop 86 Sep 27 2018 doc

drwxrwxr-x 2 hadoop hadoop 244 Sep 27 2018 lib

drwxrwxr-x 3 hadoop hadoop 18 Sep 27 2018 man

[hadoop@bigdata-senior01 scala-2.12.7]$ pwd

/opt/modules/scala-2.12.7

[hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$ vim /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ sudo vim /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ source /etc/profile

[hadoop@bigdata-senior01 scala-2.12.7]$ echo $SCALA_HOME

/opt/modules/scala-2.12.7

[hadoop@bigdata-senior01 scala-2.12.7]$ scala

Welcome to Scala 2.12.7 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_261).

Type in expressions for evaluation. Or try :help.

scala>

scala> [hadoop@bigdata-senior01 scala-2.12.7]$

[hadoop@bigdata-senior01 scala-2.12.7]$ cd ../

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ tar xf spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ ll

total 1478576

drwxrwxr-x 10 hadoop hadoop 184 Jan 4 22:41 apache-hive-2.3.3

-rw-r--r-- 1 hadoop hadoop 232229830 Jan 4 21:41 apache-hive-2.3.3-bin.tar.gz

drwxr-xr-x 10 hadoop hadoop 182 Jan 4 22:14 hadoop-2.8.5

-rw-r--r-- 1 hadoop hadoop 246543928 Jan 4 21:41 hadoop-2.8.5.tar.gz

drwxr-xr-x 8 hadoop hadoop 273 Jun 17 2020 jdk1.8.0_261

-rw-r--r-- 1 hadoop hadoop 143111803 Jan 4 21:41 jdk-8u261-linux-x64.tar.gz

drwxr-xr-x 2 root root 6 Jan 4 22:29 mysql-5.7.25-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 644862820 Jan 4 22:27 mysql-5.7.25-linux-glibc2.12-x86_64.tar.gz

-rw-r--r-- 1 hadoop hadoop 1006904 Jan 4 21:41 mysql-connector-java-5.1.49.jar

drwxrwxr-x 6 hadoop hadoop 50 Sep 27 2018 scala-2.12.7

-rw-r--r-- 1 hadoop hadoop 20415505 Jan 4 21:41 scala-2.12.7.tgz

drwxrwxr-x 13 hadoop hadoop 211 Sep 16 2018 spark-2.3.2-bin-hadoop2.7

-rw-r--r-- 1 hadoop hadoop 225875602 Jan 4 21:41 spark-2.3.2-bin-hadoop2.7.tgz

[hadoop@bigdata-senior01 modules]$ cd spark-2.3.2-bin-hadoop2.7/

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ pwd

/opt/modules/spark-2.3.2-bin-hadoop2.7

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ cd /opt/modules/spark-2.3.2-bin-hadoop2.7/conf

[hadoop@bigdata-senior01 conf]$ cp spark-env.sh.template spark-env.sh

[hadoop@bigdata-senior01 conf]$ cp slaves.template slaves

[hadoop@bigdata-senior01 conf]$ vim slaves

[hadoop@bigdata-senior01 conf]$ vi spark-env.sh

[hadoop@bigdata-senior01 conf]$ cd ../

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ ll

total 84

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 bin

drwxrwxr-x 2 hadoop hadoop 264 Jan 4 23:33 conf

drwxrwxr-x 5 hadoop hadoop 50 Sep 16 2018 data

drwxrwxr-x 4 hadoop hadoop 29 Sep 16 2018 examples

drwxrwxr-x 2 hadoop hadoop 12288 Sep 16 2018 jars

drwxrwxr-x 3 hadoop hadoop 25 Sep 16 2018 kubernetes

-rw-rw-r-- 1 hadoop hadoop 18045 Sep 16 2018 LICENSE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 licenses

-rw-rw-r-- 1 hadoop hadoop 26366 Sep 16 2018 NOTICE

drwxrwxr-x 8 hadoop hadoop 240 Sep 16 2018 python

drwxrwxr-x 3 hadoop hadoop 17 Sep 16 2018 R

-rw-rw-r-- 1 hadoop hadoop 3809 Sep 16 2018 README.md

-rw-rw-r-- 1 hadoop hadoop 164 Sep 16 2018 RELEASE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 sbin

drwxrwxr-x 2 hadoop hadoop 42 Sep 16 2018 yarn

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ cd conf

[hadoop@bigdata-senior01 conf]$ echo ${JAVA_HOME}

/opt/modules/jdk1.8.0_261

[hadoop@bigdata-senior01 conf]$

[hadoop@bigdata-senior01 conf]$ pwd

/opt/modules/spark-2.3.2-bin-hadoop2.7/conf

[hadoop@bigdata-senior01 conf]$

[hadoop@bigdata-senior01 conf]$ vim spark-env.sh

[hadoop@bigdata-senior01 conf]$ cd ../

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ ll

total 84

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 bin

drwxrwxr-x 2 hadoop hadoop 264 Jan 4 23:35 conf

drwxrwxr-x 5 hadoop hadoop 50 Sep 16 2018 data

drwxrwxr-x 4 hadoop hadoop 29 Sep 16 2018 examples

drwxrwxr-x 2 hadoop hadoop 12288 Sep 16 2018 jars

drwxrwxr-x 3 hadoop hadoop 25 Sep 16 2018 kubernetes

-rw-rw-r-- 1 hadoop hadoop 18045 Sep 16 2018 LICENSE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 licenses

-rw-rw-r-- 1 hadoop hadoop 26366 Sep 16 2018 NOTICE

drwxrwxr-x 8 hadoop hadoop 240 Sep 16 2018 python

drwxrwxr-x 3 hadoop hadoop 17 Sep 16 2018 R

-rw-rw-r-- 1 hadoop hadoop 3809 Sep 16 2018 README.md

-rw-rw-r-- 1 hadoop hadoop 164 Sep 16 2018 RELEASE

drwxrwxr-x 2 hadoop hadoop 4096 Sep 16 2018 sbin

drwxrwxr-x 2 hadoop hadoop 42 Sep 16 2018 yarn

[hadoop@bigdata-senior01 spark-2.3.2-bin-hadoop2.7]$ cd sbin

[hadoop@bigdata-senior01 sbin]$ ./start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/modules/spark-2.3.2-bin-hadoop2.7/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-bigdata-senior01.chybinmy.com.out

localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

hadoop@localhost's password:

hadoop@localhost's password: localhost: Permission denied, please try again.

hadoop@localhost's password: localhost: Permission denied, please try again.

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$ jps

18898 NodeManager

96757 Jps

17144 DataNode

18600 ResourceManager

16939 NameNode

21277 JobHistoryServer

17342 SecondaryNameNode

95983 Master

[hadoop@bigdata-senior01 sbin]$ vim /etc/profile

[hadoop@bigdata-senior01 sbin]$ sudo vim /etc/profile

[hadoop@bigdata-senior01 sbin]$ source /etc/profile

[hadoop@bigdata-senior01 sbin]$ ./start-all.sh

org.apache.spark.deploy.master.Master running as process 95983. Stop it first.

hadoop@localhost's password:

localhost: starting org.apache.spark.deploy.worker.Worker, logging to /opt/modules/spark-2.3.2-bin-hadoop2.7/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-bigdata-senior01.chybinmy.com.out

[hadoop@bigdata-senior01 sbin]$ cat /opt/modules/spark-2.3.2-bin-hadoop2.7/logs/spark-hadoop-org.apache.spark.deploy.worker.Worker-1-bigdata-senior01.chybinmy.com.out

Spark Command: /opt/modules/jdk1.8.0_261/bin/java -cp /opt/modules/spark-2.3.2-bin-hadoop2.7/conf/:/opt/modules/spark-2.3.2-bin-hadoop2.7/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://bigdata-senior01.chybinmy.com:7077

========================================

2022-01-04 23:42:32 INFO Worker:2612 - Started daemon with process name: 98596@bigdata-senior01.chybinmy.com

2022-01-04 23:42:32 INFO SignalUtils:54 - Registered signal handler for TERM

2022-01-04 23:42:32 INFO SignalUtils:54 - Registered signal handler for HUP

2022-01-04 23:42:32 INFO SignalUtils:54 - Registered signal handler for INT

2022-01-04 23:42:33 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing view acls to: hadoop

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing modify acls to: hadoop

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing view acls groups to:

2022-01-04 23:42:33 INFO SecurityManager:54 - Changing modify acls groups to:

2022-01-04 23:42:33 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

2022-01-04 23:42:34 INFO Utils:54 - Successfully started service 'sparkWorker' on port 46351.

2022-01-04 23:42:34 INFO Worker:54 - Starting Spark worker 192.168.100.20:46351 with 2 cores, 2.7 GB RAM

2022-01-04 23:42:34 INFO Worker:54 - Running Spark version 2.3.2

2022-01-04 23:42:34 INFO Worker:54 - Spark home: /opt/modules/spark-2.3.2-bin-hadoop2.7

2022-01-04 23:42:35 INFO log:192 - Logging initialized @4458ms

2022-01-04 23:42:35 INFO Server:351 - jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

2022-01-04 23:42:35 INFO Server:419 - Started @4642ms

2022-01-04 23:42:35 INFO AbstractConnector:278 - Started ServerConnector@6de6ec17{HTTP/1.1,[http/1.1]}{0.0.0.0:8081}

2022-01-04 23:42:35 INFO Utils:54 - Successfully started service 'WorkerUI' on port 8081.

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6cd8d218{/logPage,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@265b9144{/logPage/json,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@460a8f36{/,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@732d2fc5{/json,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@9864155{/static,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@a5fd0ba{/log,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO WorkerWebUI:54 - Bound WorkerWebUI to 0.0.0.0, and started at http://bigdata-senior01.chybinmy.com:8081

2022-01-04 23:42:35 INFO Worker:54 - Connecting to master bigdata-senior01.chybinmy.com:7077...

2022-01-04 23:42:35 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1c2b0b0e{/metrics/json,null,AVAILABLE,@Spark}

2022-01-04 23:42:35 INFO TransportClientFactory:267 - Successfully created connection to bigdata-senior01.chybinmy.com/192.168.100.20:7077 after 156 ms (0 ms spent in bootstraps)

2022-01-04 23:42:36 INFO Worker:54 - Successfully registered with master spark://bigdata-senior01.chybinmy.com:7077

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$

[hadoop@bigdata-senior01 sbin]$
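
In the log above, the first `jps` listing shows `Master` but no `Worker`, because the SSH login to localhost had failed. One way to catch this without reading the listing by eye is sketched below. `missing_daemons` is a hypothetical helper name, not a Spark or JDK tool; it only parses the `<pid> <name>` lines that `jps` prints.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
  listing=$(cat)
  for name in "$@"; do
    # Compare the second field exactly, so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$listing" \
      | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
      || echo "$name"
  done
}

# Usage: jps | missing_daemons Master Worker
```

An empty result means every named daemon is running; for the first `jps` listing in the log it would print `Worker`.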

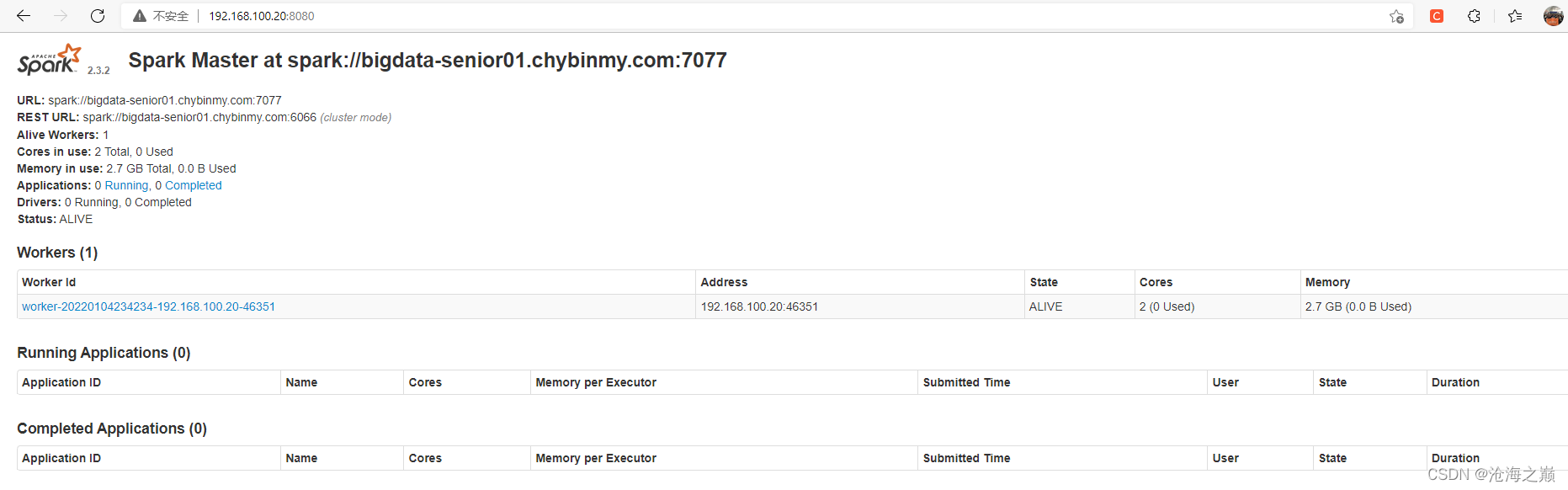

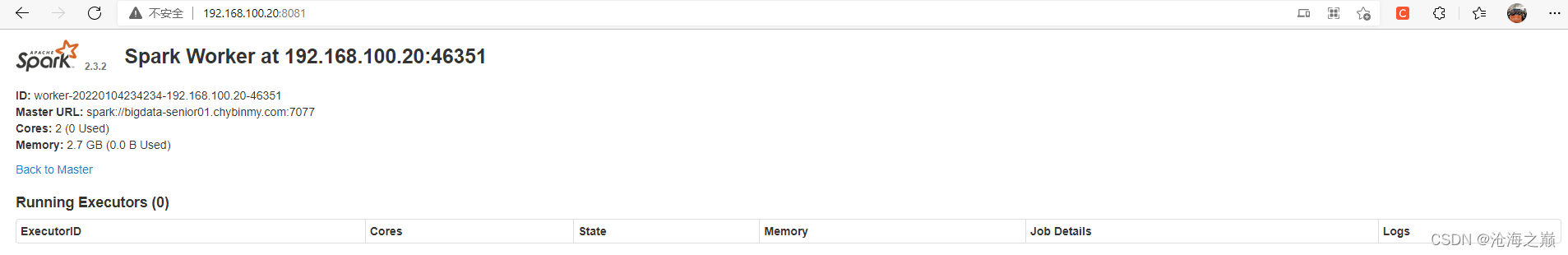

Web UI addresses:

http://192.168.100.20:8080/

http://192.168.100.20:8081/
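
Port 8080 is the standalone Master's default web UI port, and 8081 is the Worker UI port reported in the worker log. If no browser is at hand, the two pages can be probed from the shell. This is a minimal sketch that assumes `curl` is installed; `check_ui` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the URL answers with an HTTP success status
# within 5 seconds. Assumes curl is installed.
check_ui() {
  curl --fail --silent --show-error --output /dev/null --max-time 5 "$1"
}

# Usage (addresses are the ones from this guide):
#   for url in http://192.168.100.20:8080/ http://192.168.100.20:8081/; do
#     if check_ui "$url"; then echo "up: $url"; else echo "down: $url"; fi
#   done
```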

Spark (all versions from 2.0 onward are supported): the machine where it is installed must be able to run the command spark-sql -e "show databases".

[hadoop@bigdata-senior01 sbin]$ spark-sql -e "show databases"

2022-01-04 23:49:13 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2022-01-04 23:49:14 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2022-01-04 23:49:14 INFO ObjectStore:289 - ObjectStore, initialize called

2022-01-04 23:49:15 INFO Persistence:77 - Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

2022-01-04 23:49:15 INFO Persistence:77 - Property datanucleus.cache.level2 unknown - will be ignored

2022-01-04 23:49:18 INFO ObjectStore:370 - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2022-01-04 23:49:20 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:20 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:21 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:21 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:21 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is DERBY

2022-01-04 23:49:21 INFO ObjectStore:272 - Initialized ObjectStore

2022-01-04 23:49:21 WARN ObjectStore:6666 - Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

2022-01-04 23:49:21 WARN ObjectStore:568 - Failed to get database default, returning NoSuchObjectException

2022-01-04 23:49:22 INFO HiveMetaStore:663 - Added admin role in metastore

2022-01-04 23:49:22 INFO HiveMetaStore:672 - Added public role in metastore

2022-01-04 23:49:22 INFO HiveMetaStore:712 - No user is added in admin role, since config is empty

2022-01-04 23:49:22 INFO HiveMetaStore:746 - 0: get_all_databases

2022-01-04 23:49:22 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases

2022-01-04 23:49:22 INFO HiveMetaStore:746 - 0: get_functions: db=default pat=*

2022-01-04 23:49:22 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=*

2022-01-04 23:49:22 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

2022-01-04 23:49:23 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop

2022-01-04 23:49:23 INFO SessionState:641 - Created local directory: /tmp/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f_resources

2022-01-04 23:49:23 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f

2022-01-04 23:49:23 INFO SessionState:641 - Created local directory: /tmp/hadoop/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f

2022-01-04 23:49:23 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop/17c2283a-5ee4-41a0-88b7-54b3ec12cd9f/_tmp_space.db

2022-01-04 23:49:23 INFO SparkContext:54 - Running Spark version 2.3.2

2022-01-04 23:49:23 INFO SparkContext:54 - Submitted application: SparkSQL::192.168.100.20

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing view acls to: hadoop

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing modify acls to: hadoop

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing view acls groups to:

2022-01-04 23:49:23 INFO SecurityManager:54 - Changing modify acls groups to:

2022-01-04 23:49:23 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

2022-01-04 23:49:24 INFO Utils:54 - Successfully started service 'sparkDriver' on port 37135.

2022-01-04 23:49:24 INFO SparkEnv:54 - Registering MapOutputTracker

2022-01-04 23:49:24 INFO SparkEnv:54 - Registering BlockManagerMaster

2022-01-04 23:49:24 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2022-01-04 23:49:24 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2022-01-04 23:49:24 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-e68cfdc9-adda-400b-beac-f4441481481c

2022-01-04 23:49:24 INFO MemoryStore:54 - MemoryStore started with capacity 366.3 MB

2022-01-04 23:49:24 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2022-01-04 23:49:24 INFO log:192 - Logging initialized @14831ms

2022-01-04 23:49:25 INFO Server:351 - jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

2022-01-04 23:49:25 INFO Server:419 - Started @15163ms

2022-01-04 23:49:25 INFO AbstractConnector:278 - Started ServerConnector@7d0cd23c{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2022-01-04 23:49:25 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@130cfc47{/jobs,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4584304{/jobs/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@51888019{/jobs/job,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@19b5214b{/jobs/job/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5fb3111a{/stages,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4aaecabd{/stages/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@23bd0c81{/stages/stage,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6889f56f{/stages/stage/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@231b35fb{/stages/pool,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@26da1ba2{/stages/pool/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3820cfe{/storage,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2407a36c{/storage/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5ec9eefa{/storage/rdd,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@28b8f98a{/storage/rdd/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3b4ef59f{/environment,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22cb3d59{/environment/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@33e4b9c4{/executors,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5cff729b{/executors/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@10d18696{/executors/threadDump,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6b8b5020{/executors/threadDump/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7d37ee0c{/static,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@67f946c3{/,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@21b51e59{/api,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4f664bee{/jobs/job/kill,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@76563ae7{/stages/stage/kill,null,AVAILABLE,@Spark}

2022-01-04 23:49:25 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://bigdata-senior01.chybinmy.com:4040

2022-01-04 23:49:25 INFO Executor:54 - Starting executor ID driver on host localhost

2022-01-04 23:49:25 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 43887.

2022-01-04 23:49:25 INFO NettyBlockTransferService:54 - Server created on bigdata-senior01.chybinmy.com:43887

2022-01-04 23:49:25 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2022-01-04 23:49:25 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:25 INFO BlockManagerMasterEndpoint:54 - Registering block manager bigdata-senior01.chybinmy.com:43887 with 366.3 MB RAM, BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:25 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:25 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, bigdata-senior01.chybinmy.com, 43887, None)

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@35ac9ebd{/metrics/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO SharedState:54 - Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse').

2022-01-04 23:49:26 INFO SharedState:54 - Warehouse path is 'file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse'.

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@120350eb{/SQL,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2ccc9681{/SQL/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@aa752bb{/SQL/execution,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@77fc19cf{/SQL/execution/json,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2e45a357{/static/sql,null,AVAILABLE,@Spark}

2022-01-04 23:49:26 INFO HiveUtils:54 - Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

2022-01-04 23:49:26 INFO HiveClientImpl:54 - Warehouse location for Hive client (version 1.2.2) is file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse

2022-01-04 23:49:26 INFO metastore:291 - Mestastore configuration hive.metastore.warehouse.dir changed from /user/hive/warehouse to file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse

2022-01-04 23:49:26 INFO HiveMetaStore:746 - 0: Shutting down the object store...

2022-01-04 23:49:26 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=Shutting down the object store...

2022-01-04 23:49:26 INFO HiveMetaStore:746 - 0: Metastore shutdown complete.

2022-01-04 23:49:26 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=Metastore shutdown complete.

2022-01-04 23:49:26 INFO HiveMetaStore:746 - 0: get_database: default

2022-01-04 23:49:26 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_database: default

2022-01-04 23:49:26 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2022-01-04 23:49:26 INFO ObjectStore:289 - ObjectStore, initialize called

2022-01-04 23:49:26 INFO Query:77 - Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing

2022-01-04 23:49:26 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is DERBY

2022-01-04 23:49:26 INFO ObjectStore:272 - Initialized ObjectStore

2022-01-04 23:49:28 INFO StateStoreCoordinatorRef:54 - Registered StateStoreCoordinator endpoint

2022-01-04 23:49:28 INFO HiveMetaStore:746 - 0: get_database: global_temp

2022-01-04 23:49:28 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_database: global_temp

2022-01-04 23:49:28 WARN ObjectStore:568 - Failed to get database global_temp, returning NoSuchObjectException

2022-01-04 23:49:32 INFO HiveMetaStore:746 - 0: get_databases: *

2022-01-04 23:49:32 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_databases: *

2022-01-04 23:49:33 INFO CodeGenerator:54 - Code generated in 524.895015 ms

default

Time taken: 5.645 seconds, Fetched 1 row(s)

2022-01-04 23:49:33 INFO SparkSQLCLIDriver:951 - Time taken: 5.645 seconds, Fetched 1 row(s)

2022-01-04 23:49:33 INFO AbstractConnector:318 - Stopped Spark@7d0cd23c{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2022-01-04 23:49:33 INFO SparkUI:54 - Stopped Spark web UI at http://bigdata-senior01.chybinmy.com:4040

2022-01-04 23:49:33 INFO MapOutputTrackerMasterEndpoint:54 - MapOutputTrackerMasterEndpoint stopped!

2022-01-04 23:49:33 INFO MemoryStore:54 - MemoryStore cleared

2022-01-04 23:49:33 INFO BlockManager:54 - BlockManager stopped

2022-01-04 23:49:33 INFO BlockManagerMaster:54 - BlockManagerMaster stopped

2022-01-04 23:49:33 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:54 - OutputCommitCoordinator stopped!

2022-01-04 23:49:33 INFO SparkContext:54 - Successfully stopped SparkContext

2022-01-04 23:49:33 INFO ShutdownHookManager:54 - Shutdown hook called

2022-01-04 23:49:33 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-437b36ba-6b7b-4a31-be54-d724731cca35

2022-01-04 23:49:33 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-ef9ab382-9d00-4c59-a73f-f565e761bb45

[hadoop@bigdata-senior01 sbin]$
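
The requirement that the host can run `spark-sql -e "show databases"` can be turned into a scripted check. The sketch below is one way to do it under two assumptions: Spark's console logging goes to stderr (the default log4j setting), and a working installation lists the `default` database, as in the run above. `check_spark_sql` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the command exits cleanly and its
# result on stdout contains a line that is exactly "default".
check_spark_sql() {
  cmd="${1:-spark-sql}"
  # Assumption: log lines go to stderr, so stdout holds only the query result.
  out=$("$cmd" -e "show databases" 2>/dev/null) || return 1
  printf '%s\n' "$out" | grep -qx "default"
}

# Usage: check_spark_sql && echo "spark-sql OK" || echo "spark-sql check failed"
```

The optional argument lets the check point at a specific binary, for example `check_spark_sql /opt/modules/spark-2.3.2-bin-hadoop2.7/bin/spark-sql`.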

Running spark-sql -e "show databases" on a second host (dss):

[hadoop@dss sbin]$ spark-sql -e "show databases"

2022-03-03 06:29:44 WARN Utils:66 - Your hostname, dss resolves to a loopback address: 127.0.0.1; using 192.168.122.67 instead (on interface eth0)

2022-03-03 06:29:44 WARN Utils:66 - Set SPARK_LOCAL_IP if you need to bind to another address

2022-03-03 06:29:45 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2022-03-03 06:29:46 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2022-03-03 06:29:46 INFO ObjectStore:289 - ObjectStore, initialize called

2022-03-03 06:29:46 INFO Persistence:77 - Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

2022-03-03 06:29:46 INFO Persistence:77 - Property datanucleus.cache.level2 unknown - will be ignored

2022-03-03 06:29:55 INFO ObjectStore:370 - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2022-03-03 06:29:57 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2022-03-03 06:29:57 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2022-03-03 06:30:03 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

2022-03-03 06:30:03 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

2022-03-03 06:30:05 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is DERBY

2022-03-03 06:30:05 INFO ObjectStore:272 - Initialized ObjectStore

2022-03-03 06:30:06 WARN ObjectStore:6666 - Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

2022-03-03 06:30:06 WARN ObjectStore:568 - Failed to get database default, returning NoSuchObjectException

2022-03-03 06:30:07 INFO HiveMetaStore:663 - Added admin role in metastore

2022-03-03 06:30:07 INFO HiveMetaStore:672 - Added public role in metastore

2022-03-03 06:30:07 INFO HiveMetaStore:712 - No user is added in admin role, since config is empty

2022-03-03 06:30:07 INFO HiveMetaStore:746 - 0: get_all_databases

2022-03-03 06:30:07 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases

2022-03-03 06:30:07 INFO HiveMetaStore:746 - 0: get_functions: db=default pat=*

2022-03-03 06:30:07 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=*

2022-03-03 06:30:07 INFO Datastore:77 - The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

2022-03-03 06:30:08 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop

2022-03-03 06:30:08 INFO SessionState:641 - Created local directory: /tmp/d26dff0e-0e55-4623-af6a-a189a8d13689_resources

2022-03-03 06:30:08 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop/d26dff0e-0e55-4623-af6a-a189a8d13689

2022-03-03 06:30:08 INFO SessionState:641 - Created local directory: /tmp/hadoop/d26dff0e-0e55-4623-af6a-a189a8d13689

2022-03-03 06:30:08 INFO SessionState:641 - Created HDFS directory: /tmp/hive/hadoop/d26dff0e-0e55-4623-af6a-a189a8d13689/_tmp_space.db

2022-03-03 06:30:08 INFO SparkContext:54 - Running Spark version 2.3.2

2022-03-03 06:30:08 INFO SparkContext:54 - Submitted application: SparkSQL::192.168.122.67

2022-03-03 06:30:09 INFO SecurityManager:54 - Changing view acls to: hadoop

2022-03-03 06:30:09 INFO SecurityManager:54 - Changing modify acls to: hadoop

2022-03-03 06:30:09 INFO SecurityManager:54 - Changing view acls groups to:

2022-03-03 06:30:09 INFO SecurityManager:54 - Changing modify acls groups to:

2022-03-03 06:30:09 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

2022-03-03 06:30:09 INFO Utils:54 - Successfully started service 'sparkDriver' on port 37582.

2022-03-03 06:30:09 INFO SparkEnv:54 - Registering MapOutputTracker

2022-03-03 06:30:09 INFO SparkEnv:54 - Registering BlockManagerMaster

2022-03-03 06:30:09 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2022-03-03 06:30:09 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2022-03-03 06:30:09 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-56268e6d-f9da-4ba0-813d-8a9f0396a3ed

2022-03-03 06:30:09 INFO MemoryStore:54 - MemoryStore started with capacity 366.3 MB

2022-03-03 06:30:09 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2022-03-03 06:30:09 INFO log:192 - Logging initialized @26333ms

2022-03-03 06:30:09 INFO Server:351 - jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

2022-03-03 06:30:09 INFO Server:419 - Started @26464ms

2022-03-03 06:30:09 INFO AbstractConnector:278 - Started ServerConnector@231cdda8{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2022-03-03 06:30:09 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@544e3679{/jobs,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3e14d390{/jobs/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5eb87338{/jobs/job,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@31ab1e67{/jobs/job/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@29bbc391{/stages,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3487442d{/stages/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@530ee28b{/stages/stage,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@43c7fe8a{/stages/stage/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@67f946c3{/stages/pool,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@21b51e59{/stages/pool/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1785d194{/storage,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6b4a4e40{/storage/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@46a8c2b4{/storage/rdd,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4f664bee{/storage/rdd/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@76563ae7{/environment,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4fd74223{/environment/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4fea840f{/executors,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@32ae8f27{/executors/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@75e80a97{/executors/threadDump,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5b8853{/executors/threadDump/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1b8aaeab{/static,null,AVAILABLE,@Spark}

2022-03-03 06:30:09 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5bfc79cb{/,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@27ec8754{/api,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@62f9c790{/jobs/job/kill,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@21e5f0b6{/stages/stage/kill,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://192.168.122.67:4040

2022-03-03 06:30:10 INFO Executor:54 - Starting executor ID driver on host localhost

2022-03-03 06:30:10 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 49364.

2022-03-03 06:30:10 INFO NettyBlockTransferService:54 - Server created on 192.168.122.67:49364

2022-03-03 06:30:10 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2022-03-03 06:30:10 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, 192.168.122.67, 49364, None)

2022-03-03 06:30:10 INFO BlockManagerMasterEndpoint:54 - Registering block manager 192.168.122.67:49364 with 366.3 MB RAM, BlockManagerId(driver, 192.168.122.67, 49364, None)

2022-03-03 06:30:10 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, 192.168.122.67, 49364, None)

2022-03-03 06:30:10 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, 192.168.122.67, 49364, None)

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@537b3b2e{/metrics/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO SharedState:54 - Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse').

2022-03-03 06:30:10 INFO SharedState:54 - Warehouse path is 'file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse'.

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@27bc1d44{/SQL,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1af677f8{/SQL/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@34cd65ac{/SQL/execution,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@61911947{/SQL/execution/json,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5940b14e{/static/sql,null,AVAILABLE,@Spark}

2022-03-03 06:30:10 INFO HiveUtils:54 - Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

2022-03-03 06:30:10 INFO HiveClientImpl:54 - Warehouse location for Hive client (version 1.2.2) is file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse

2022-03-03 06:30:10 INFO metastore:291 - Mestastore configuration hive.metastore.warehouse.dir changed from /user/hive/warehouse to file:/opt/modules/spark-2.3.2-bin-hadoop2.7/sbin/spark-warehouse

2022-03-03 06:30:10 INFO HiveMetaStore:746 - 0: Shutting down the object store...

2022-03-03 06:30:10 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=Shutting down the object store...

2022-03-03 06:30:10 INFO HiveMetaStore:746 - 0: Metastore shutdown complete.

2022-03-03 06:30:10 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=Metastore shutdown complete.

2022-03-03 06:30:10 INFO HiveMetaStore:746 - 0: get_database: default

2022-03-03 06:30:10 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_database: default

2022-03-03 06:30:10 INFO HiveMetaStore:589 - 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

2022-03-03 06:30:10 INFO ObjectStore:289 - ObjectStore, initialize called

2022-03-03 06:30:10 INFO Query:77 - Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing

2022-03-03 06:30:10 INFO MetaStoreDirectSql:139 - Using direct SQL, underlying DB is DERBY

2022-03-03 06:30:10 INFO ObjectStore:272 - Initialized ObjectStore

2022-03-03 06:30:11 INFO StateStoreCoordinatorRef:54 - Registered StateStoreCoordinator endpoint

2022-03-03 06:30:11 INFO HiveMetaStore:746 - 0: get_database: global_temp

2022-03-03 06:30:11 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_database: global_temp

2022-03-03 06:30:11 WARN ObjectStore:568 - Failed to get database global_temp, returning NoSuchObjectException

2022-03-03 06:30:14 INFO HiveMetaStore:746 - 0: get_databases: *

2022-03-03 06:30:14 INFO audit:371 - ugi=hadoop ip=unknown-ip-addr cmd=get_databases: *

2022-03-03 06:30:14 INFO CodeGenerator:54 - Code generated in 271.767143 ms

default

Time taken: 2.991 seconds, Fetched 1 row(s)

2022-03-03 06:30:14 INFO SparkSQLCLIDriver:951 - Time taken: 2.991 seconds, Fetched 1 row(s)

2022-03-03 06:30:14 INFO AbstractConnector:318 - Stopped Spark@231cdda8{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2022-03-03 06:30:14 INFO SparkUI:54 - Stopped Spark web UI at http://192.168.122.67:4040

2022-03-03 06:30:14 INFO MapOutputTrackerMasterEndpoint:54 - MapOutputTrackerMasterEndpoint stopped!

2022-03-03 06:30:14 INFO MemoryStore:54 - MemoryStore cleared

2022-03-03 06:30:14 INFO BlockManager:54 - BlockManager stopped

2022-03-03 06:30:14 INFO BlockManagerMaster:54 - BlockManagerMaster stopped

2022-03-03 06:30:14 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:54 - OutputCommitCoordinator stopped!

2022-03-03 06:30:14 INFO SparkContext:54 - Successfully stopped SparkContext

2022-03-03 06:30:14 INFO ShutdownHookManager:54 - Shutdown hook called

2022-03-03 06:30:14 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-f46e0008-5a10-405d-8a2a-c5406427ef4d

2022-03-03 06:30:14 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-5d80c67d-8631-4340-ad2d-e112802b93a2

[hadoop@dss sbin]$
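
Note: in the transcript above, the actual query result (the single row "default" and the "Time taken" line) is buried among INFO/WARN log lines. As a rough sketch only, the helper below drops every line that starts with a log4j-style timestamp and level, as well as blank lines, leaving just the query output. The function name filter_spark_log is hypothetical and is not part of Spark. The sample input lines are copied from the log above.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Spark): remove lines shaped like
# "2022-03-03 06:30:14 INFO ..." and empty lines, keep everything else.
filter_spark_log() {
  grep -Ev '^([0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2} (INFO|WARN|ERROR|DEBUG) |$)'
}

# Demo on lines copied from the transcript above.
printf '%s\n' \
  '2022-03-03 06:30:14 INFO CodeGenerator:54 - Code generated in 271.767143 ms' \
  '' \
  'default' \
  '' \
  'Time taken: 2.991 seconds, Fetched 1 row(s)' | filter_spark_log
```

In practice the same filter could be applied to a saved copy of the console output. If the installation still uses Spark's default log4j console settings, the log lines are normally written to stderr, so redirecting stderr when launching spark-sql may be enough on its own; that depends on the local log4j configuration and should be checked rather than assumed.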