A provider nl.techop.kafka.TopicMetricNameResource registered in SERVER runtime does not implement any provider interfaces applicable in the SERVER runtime. Due to constraint configuration problems the provider nl.techop.kafka.TopicMetricNameResource will be ignored.

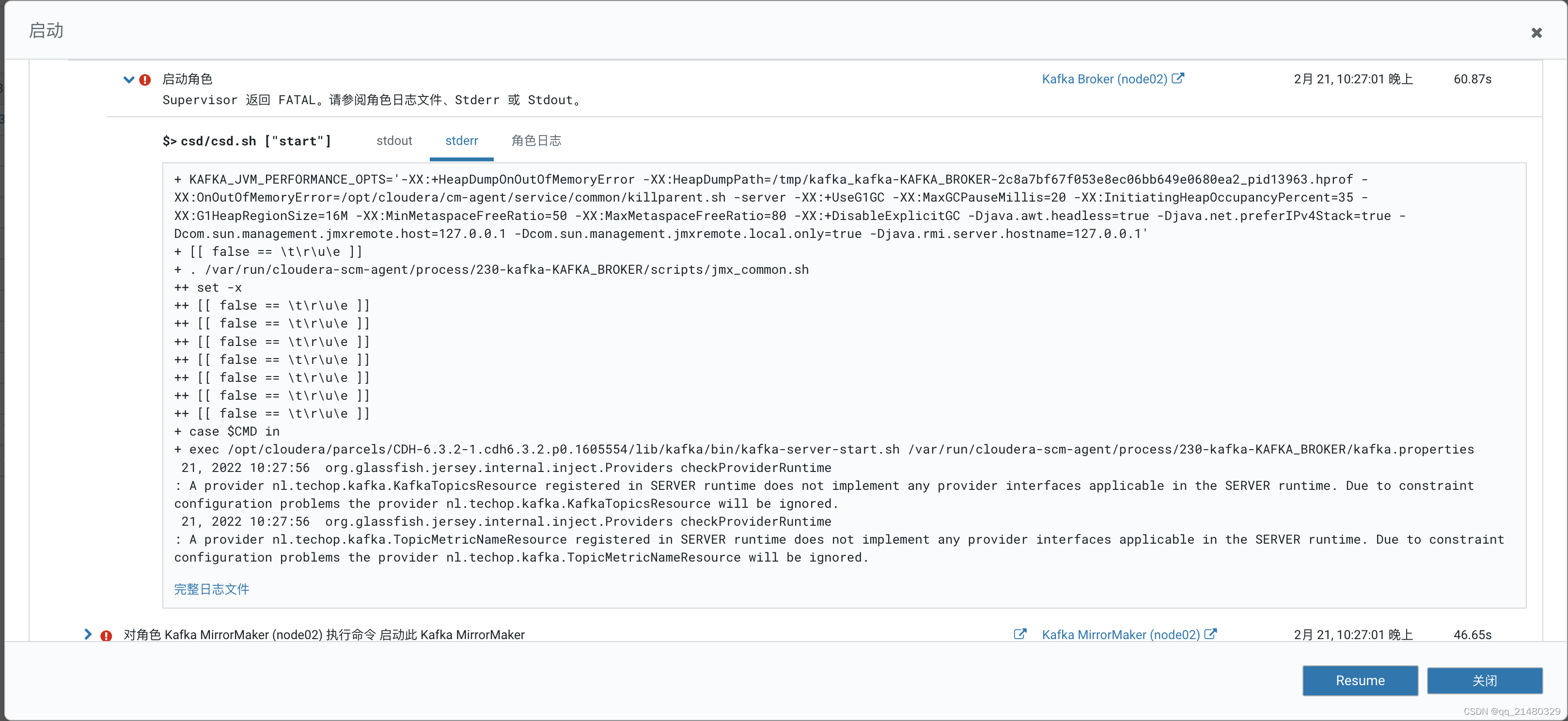

CDH 6.3.2: an exception occurs when deploying Kafka. Exception details:

```text
+ KAFKA_JVM_PERFORMANCE_OPTS='-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp/kafka_kafka-KAFKA_BROKER-2c8a7bf67f053e8ec06bb649e0680ea2_pid13963.hprof -XX:OnOutOfMemoryError=/opt/cloudera/cm-agent/service/common/killparent.sh -server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:G1HeapRegionSize=16M -XX:MinMetaspaceFreeRatio=50 -XX:MaxMetaspaceFreeRatio=80 -XX:+DisableExplicitGC -Djava.awt.headless=true -Djava.net.preferIPv4Stack=true -Dcom.sun.management.jmxremote.host=127.0.0.1 -Dcom.sun.management.jmxremote.local.only=true -Djava.rmi.server.hostname=127.0.0.1'
+ [[ false == \t\r\u\e ]]
+ . /var/run/cloudera-scm-agent/process/230-kafka-KAFKA_BROKER/scripts/jmx_common.sh
++ set -x
++ [[ false == \t\r\u\e ]]
++ [[ false == \t\r\u\e ]]
++ [[ false == \t\r\u\e ]]
++ [[ false == \t\r\u\e ]]
++ [[ false == \t\r\u\e ]]
++ [[ false == \t\r\u\e ]]
++ [[ false == \t\r\u\e ]]
+ case $CMD in
+ exec /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/kafka-server-start.sh /var/run/cloudera-scm-agent/process/230-kafka-KAFKA_BROKER/kafka.properties
21, 2022 10:27:56 org.glassfish.jersey.internal.inject.Providers checkProviderRuntime
: A provider nl.techop.kafka.KafkaTopicsResource registered in SERVER runtime does not implement any provider interfaces applicable in the SERVER runtime. Due to constraint configuration problems the provider nl.techop.kafka.KafkaTopicsResource will be ignored.
21, 2022 10:27:56 org.glassfish.jersey.internal.inject.Providers checkProviderRuntime
: A provider nl.techop.kafka.TopicMetricNameResource registered in SERVER runtime does not implement any provider interfaces applicable in the SERVER runtime. Due to constraint configuration problems the provider nl.techop.kafka.TopicMetricNameResource will be ignored.
```

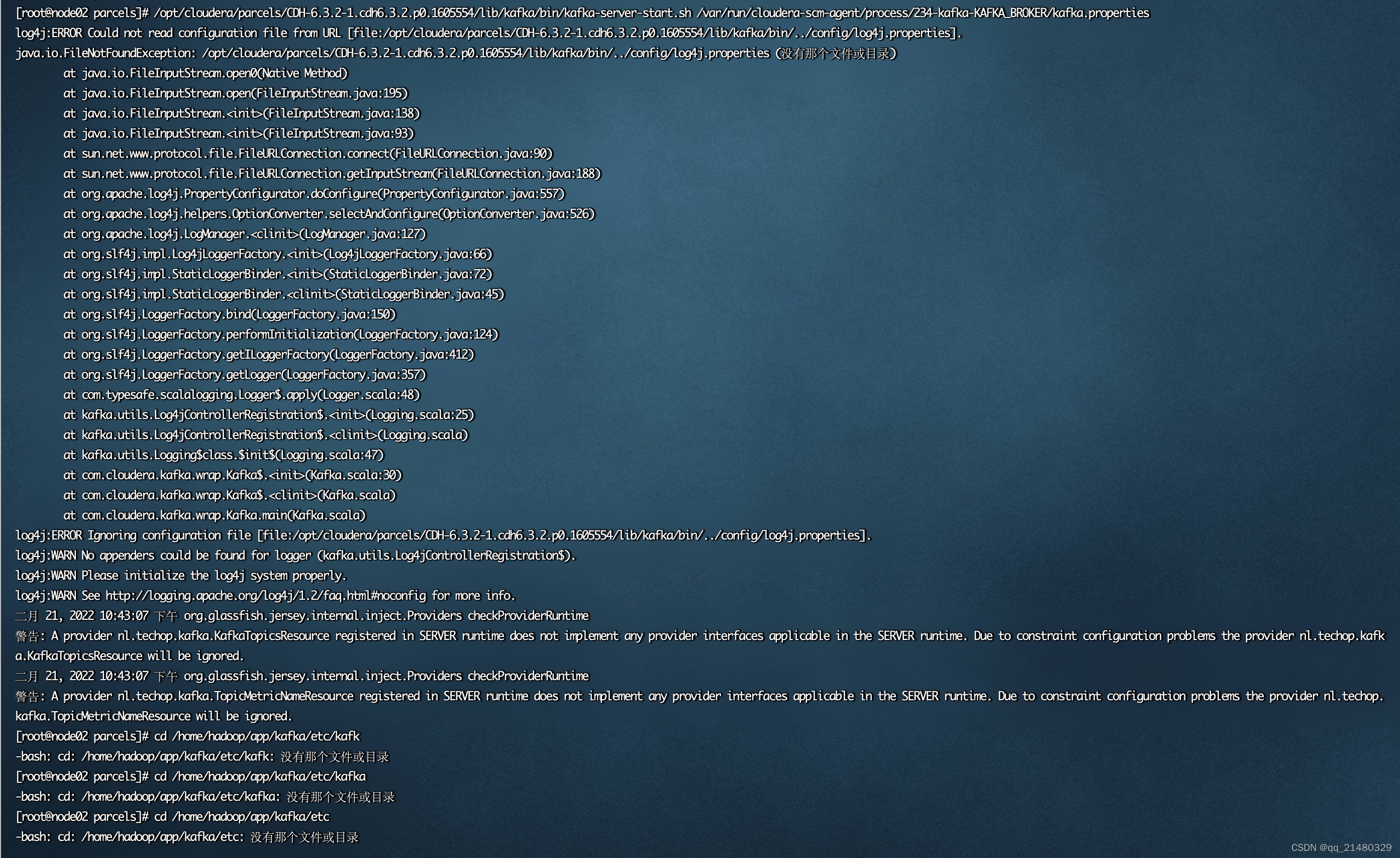

Running the command shown by CDH above, /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/kafka-server-start.sh, by hand fails with the following error:

```text
[root@node02 parcels]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/kafka-server-start.sh /var/run/cloudera-scm-agent/process/234-kafka-KAFKA_BROKER/kafka.properties
log4j:ERROR Could not read configuration file from URL [file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/../config/log4j.properties].
java.io.FileNotFoundException: /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/../config/log4j.properties (No such file or directory)
	at java.io.FileInputStream.open0(Native Method)
	at java.io.FileInputStream.open(FileInputStream.java:195)
	at java.io.FileInputStream.<init>(FileInputStream.java:138)
	at java.io.FileInputStream.<init>(FileInputStream.java:93)
	at sun.net.www.protocol.file.FileURLConnection.connect(FileURLConnection.java:90)
	at sun.net.www.protocol.file.FileURLConnection.getInputStream(FileURLConnection.java:188)
	at org.apache.log4j.PropertyConfigurator.doConfigure(PropertyConfigurator.java:557)
	at org.apache.log4j.helpers.OptionConverter.selectAndConfigure(OptionConverter.java:526)
	at org.apache.log4j.LogManager.<clinit>(LogManager.java:127)
	at org.slf4j.impl.Log4jLoggerFactory.<init>(Log4jLoggerFactory.java:66)
	at org.slf4j.impl.StaticLoggerBinder.<init>(StaticLoggerBinder.java:72)
	at org.slf4j.impl.StaticLoggerBinder.<clinit>(StaticLoggerBinder.java:45)
	at org.slf4j.LoggerFactory.bind(LoggerFactory.java:150)
	at org.slf4j.LoggerFactory.performInitialization(LoggerFactory.java:124)
	at org.slf4j.LoggerFactory.getILoggerFactory(LoggerFactory.java:412)
	at org.slf4j.LoggerFactory.getLogger(LoggerFactory.java:357)
	at com.typesafe.scalalogging.Logger$.apply(Logger.scala:48)
	at kafka.utils.Log4jControllerRegistration$.<init>(Logging.scala:25)
	at kafka.utils.Log4jControllerRegistration$.<clinit>(Logging.scala)
	at kafka.utils.Logging$class.$init$(Logging.scala:47)
	at com.cloudera.kafka.wrap.Kafka$.<init>(Kafka.scala:30)
	at com.cloudera.kafka.wrap.Kafka$.<clinit>(Kafka.scala)
	at com.cloudera.kafka.wrap.Kafka.main(Kafka.scala)
log4j:ERROR Ignoring configuration file [file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/../config/log4j.properties].
log4j:WARN No appenders could be found for logger (kafka.utils.Log4jControllerRegistration$).
log4j:WARN Please initialize the log4j system properly.
log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
Feb 21, 2022 10:43:07 PM org.glassfish.jersey.internal.inject.Providers checkProviderRuntime
WARNING: A provider nl.techop.kafka.KafkaTopicsResource registered in SERVER runtime does not implement any provider interfaces applicable in the SERVER runtime. Due to constraint configuration problems the provider nl.techop.kafka.KafkaTopicsResource will be ignored.
Feb 21, 2022 10:43:07 PM org.glassfish.jersey.internal.inject.Providers checkProviderRuntime
WARNING: A provider nl.techop.kafka.TopicMetricNameResource registered in SERVER runtime does not implement any provider interfaces applicable in the SERVER runtime. Due to constraint configuration problems the provider nl.techop.kafka.TopicMetricNameResource will be ignored.
[root@node02 parcels]# cd /home/hadoop/app/kafka/etc/kafk
-bash: cd: /home/hadoop/app/kafka/etc/kafk: No such file or directory
[root@node02 parcels]# cd /home/hadoop/app/kafka/etc/kafka
-bash: cd: /home/hadoop/app/kafka/etc/kafka: No such file or directory
[root@node02 parcels]# cd /home/hadoop/app/kafka/etc
-bash: cd: /home/hadoop/app/kafka/etc: No such file or directory
```


Solution:

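1. After running /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/bin/kafka-server-start.sh, the log states the cause plainly: log4j.properties does not exist. The start script resolves it as bin/../config/log4j.properties, so the fix is to create log4j.properties in /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/config and add the configuration below to it.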

2. Contents of log4j.properties:

```properties
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Unspecified loggers and loggers with additivity=true output to server.log and stdout
# Note that INFO only applies to unspecified loggers, the log level of the child logger is used otherwise
log4j.rootLogger=INFO, stdout, kafkaAppender

log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.kafkaAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.kafkaAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.kafkaAppender.File=${kafka.logs.dir}/server.log
log4j.appender.kafkaAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.kafkaAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.stateChangeAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.stateChangeAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.stateChangeAppender.File=${kafka.logs.dir}/state-change.log
log4j.appender.stateChangeAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.stateChangeAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.requestAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.requestAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.requestAppender.File=${kafka.logs.dir}/kafka-request.log
log4j.appender.requestAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.requestAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.cleanerAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.cleanerAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.cleanerAppender.File=${kafka.logs.dir}/log-cleaner.log
log4j.appender.cleanerAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.cleanerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.controllerAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.controllerAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.controllerAppender.File=${kafka.logs.dir}/controller.log
log4j.appender.controllerAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.controllerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.authorizerAppender=org.apache.log4j.DailyRollingFileAppender
log4j.appender.authorizerAppender.DatePattern='.'yyyy-MM-dd-HH
log4j.appender.authorizerAppender.File=${kafka.logs.dir}/kafka-authorizer.log
log4j.appender.authorizerAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.authorizerAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

# Change the two lines below to adjust ZK client logging
log4j.logger.org.I0Itec.zkclient.ZkClient=INFO
log4j.logger.org.apache.zookeeper=INFO

# Change the two lines below to adjust the general broker logging level (output to server.log and stdout)
log4j.logger.kafka=INFO
log4j.logger.org.apache.kafka=INFO

# Change to DEBUG or TRACE to enable request logging
log4j.logger.kafka.request.logger=WARN, requestAppender
log4j.additivity.kafka.request.logger=false

# Uncomment the lines below and change log4j.logger.kafka.network.RequestChannel$ to TRACE for additional output
# related to the handling of requests
#log4j.logger.kafka.network.Processor=TRACE, requestAppender
#log4j.logger.kafka.server.KafkaApis=TRACE, requestAppender
#log4j.additivity.kafka.server.KafkaApis=false
log4j.logger.kafka.network.RequestChannel$=WARN, requestAppender
log4j.additivity.kafka.network.RequestChannel$=false

log4j.logger.kafka.controller=TRACE, controllerAppender
log4j.additivity.kafka.controller=false

log4j.logger.kafka.log.LogCleaner=INFO, cleanerAppender
log4j.additivity.kafka.log.LogCleaner=false

log4j.logger.state.change.logger=TRACE, stateChangeAppender
log4j.additivity.state.change.logger=false

# Access denials are logged at INFO level, change to DEBUG to also log allowed accesses
log4j.logger.kafka.authorizer.logger=INFO, authorizerAppender
log4j.additivity.kafka.authorizer.logger=false
```
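Steps 1 and 2 can be scripted. Below is a minimal bash sketch, assuming you have already saved the configuration from step 2 to a file of your choosing. The helper names `install_log4j_config` and `check_log4j_appenders` are hypothetical; they are not part of CDH or Kafka. The first copies that file to the `config` directory that `kafka-server-start.sh` resolves as `bin/../config/log4j.properties`, and leaves an existing file untouched. The second is a rough lint that reports any appender named on a `log4j.rootLogger` or `log4j.logger.*` line that is never defined with `log4j.appender.<name>=`.

```shell
#!/usr/bin/env bash
# Minimal sketch. install_log4j_config and check_log4j_appenders are
# hypothetical helpers, not part of CDH or Apache Kafka.

# Copy a prepared log4j.properties to <kafka_home>/config/log4j.properties,
# the path kafka-server-start.sh looks for as bin/../config/log4j.properties.
# An existing file is never overwritten.
install_log4j_config() {
  local kafka_home="$1"   # e.g. the lib/kafka directory inside the CDH parcel
  local template="$2"     # the log4j.properties prepared from step 2
  local target="$kafka_home/config/log4j.properties"

  if [[ -f "$target" ]]; then
    echo "exists: $target"
    return 0
  fi
  mkdir -p "$kafka_home/config" || return 1
  cp "$template" "$target" || return 1
  echo "created: $target"
}

# Print every appender referenced by a non-commented rootLogger/logger line
# that has no log4j.appender.<name>= definition; return 1 if any is missing.
check_log4j_appenders() {
  local file="$1" name status=0
  for name in $(awk -F= '/^log4j\.(rootLogger|logger\.)/ {
                  n = split($2, parts, ",")
                  # parts[1] is the level, the remaining fields are appender names
                  for (i = 2; i <= n; i++) {
                    gsub(/[ \t\r]/, "", parts[i])
                    if (parts[i] != "") print parts[i]
                  }
                }' "$file" | sort -u); do
    if ! grep -q "^log4j\.appender\.${name}=" "$file"; then
      echo "undefined appender: $name"
      status=1
    fi
  done
  return "$status"
}
```

For example, with the step 2 file saved as `/root/log4j.properties` (a placeholder path), run `install_log4j_config /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka /root/log4j.properties`, then `check_log4j_appenders /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/kafka/config/log4j.properties`. For the configuration shown above, the check should print nothing, because all seven referenced appenders are defined.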

Related big-data learning demos:

https://github.com/carteryh/big-data