环境准备

- Hadoop 完全分布式(一主两从即可)

- MySQL环境、Hive环境

一、环境准备

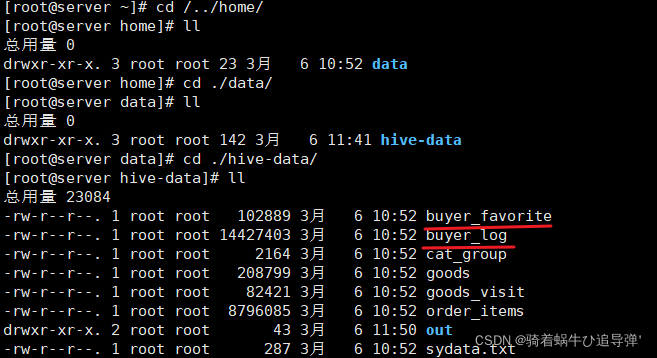

将 buyer_log 、buyer_favorite 导入到 /data/hive-data 下:

创建卖家行为日志表,名为 buyer_log,包含ID(id)、用户ID(buyer_id)、时间(dt)、地点(ip)、操作类型(opt_type) 5 个字段,字符类型为 string,按照 “\t” 分割符:

hive> create table buyer_log

> (id string,buyer_id string,dt string,ip string,opt_type string)

> row format delimited fields terminated by '\t'

> stored as textfile;

OK

Time taken: 1.752 seconds

创建买家收藏表,名为 buyer_favorite,包含 用户ID(buyer_id)、商品ID(goods_id)、时间(dt) 3 个字段,字符类型为 string,按照 “\t” 分割符:

hive> create table buyer_favorite

> (buyer_id string,goods_id string,dt string)

> row format delimited fields terminated by '\t'

> stored as textfile;

OK

Time taken: 0.141 seconds

将本地的 /data/hive-data 下的上述两个文件中的数据导入到刚刚创建的两张表中:

hive> load data local inpath '/../home/data/hive-data/buyer_log' into table buyer_log;

Loading data to table db.buyer_log

OK

Time taken: 3.36 seconds

hive> load data local inpath '/../home/data/hive-data/buyer_favorite' into table buyer_favorite;

Loading data to table db.buyer_favorite

OK

Time taken: 0.413 seconds

二、Hive 查询

1、普通查询

查询 buyer_log 表中的全部字段,数据量大的时候,应当避免查询全部的数据。这里我们使用 limit 关键字进行限制查询前10条数据:

hive> select * from buyer_log limit 10;

OK

461 10181 2010-03-26 19:45:07 123.127.164.252 1

462 10262 2010-03-26 19:55:10 123.127.164.252 1

463 20001 2010-03-29 14:28:02 221.208.129.117 2

464 20001 2010-03-29 14:28:02 221.208.129.117 1

465 20002 2010-03-30 10:56:35 222.44.94.235 2

466 20002 2010-03-30 10:56:35 222.44.94.235 1

481 10181 2010-03-31 16:48:43 123.127.164.252 1

482 10181 2010-04-01 17:35:05 123.127.164.252 1

483 10181 2010-04-02 10:34:20 123.127.164.252 1

484 20001 2010-04-04 16:38:22 221.208.129.38 1

Time taken: 1.467 seconds, Fetched: 10 row(s)

2、别名查询

查询表 buyer_log 中的 id 字段 和 ip 字段,当多表连接字段较多时,常常使用别名:

hive> select b.id,b.ip from buyer_log b limit 10;

OK

461 123.127.164.252

462 123.127.164.252

463 221.208.129.117

464 221.208.129.117

465 222.44.94.235

466 222.44.94.235

481 123.127.164.252

482 123.127.164.252

483 123.127.164.252

484 221.208.129.38

Time taken: 0.108 seconds, Fetched: 10 row(s)

3、限定查询

查询表 buyer_log 中的 opt_type=1 的用户 ID(buyer_id):

hive> select buyer_id from buyer_log where opt_type=1 limit 10;

OK

10181

10262

20001

20002

10181

10181

10181

20001

10181

20021

Time taken: 0.361 seconds, Fetched: 10 row(s)

4、多表联合查询

两表或多表进行查询的时候,如通过 用户ID(buyer_id)连接表 buyer_log 、buyer_favorite,查询表 buyer_log 的 dt 字段和表 buyer_favorite 的 goods_id 字段,多表联合查询可以按需求查询多个表中不同字段:

hive> select l.dt,f.goods_id from buyer_log l,buyer_favorite f

> where l.buyer_id = f.buyer_id

> limit 10;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = root_20220312204110_aa886926-12e1-4fc7-a0b7-2d21e4323941

Total jobs = 1

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

2022-03-12 20:41:29 Starting to launch local task to process map join; maximum memory = 477626368

2022-03-12 20:41:31 Dump the side-table for tag: 1 with group count: 682 into file: file:/usr/local/src/hive/tmp/ade490ef-9595-4235-9a9c-f58620ae753f/hive_2022-03-12_20-41-10_247_5739433956695466542-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile01--.hashtable

2022-03-12 20:41:31 Uploaded 1 File to: file:/usr/local/src/hive/tmp/ade490ef-9595-4235-9a9c-f58620ae753f/hive_2022-03-12_20-41-10_247_5739433956695466542-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile01--.hashtable (51658 bytes)

2022-03-12 20:41:31 End of local task; Time Taken: 1.91 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1647086333827_0001, Tracking URL = http://server:8088/proxy/application_1647086333827_0001/

Kill Command = /usr/local/src/hadoop/bin/hadoop job -kill job_1647086333827_0001

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 0

2022-03-12 20:42:57,007 Stage-3 map = 0%, reduce = 0%

2022-03-12 20:43:26,906 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 3.96 sec

MapReduce Total cumulative CPU time: 3 seconds 960 msec

Ended Job = job_1647086333827_0001

MapReduce Jobs Launched:

Stage-Stage-3: Map: 1 Cumulative CPU: 3.96 sec HDFS Read: 137752 HDFS Write: 487 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 960 msec

OK

2010-03-26 19:45:07 1000481

2010-03-26 19:45:07 1003185

2010-03-26 19:45:07 1002643

2010-03-26 19:45:07 1002994

2010-03-26 19:55:10 1003326

2010-03-29 14:28:02 1001597

2010-03-29 14:28:02 1001560

2010-03-29 14:28:02 1001650

2010-03-29 14:28:02 1002410

2010-03-29 14:28:02 1002989

Time taken: 138.793 seconds, Fetched: 10 row(s)

5、多表插入

多表插入指的是在同一条语句中,把读取的同一份数据插入到不同的表中,只需要扫描一遍数据即可完成所有表的插入操作,效率很高。我们使用买家行为日志 buyer_log 表作为插入表,创建 buyer_log1 和 buyer_log2 两表作为被插入表:

hive> create table buyer_log1 like buyer_log;

OK

Time taken: 1.199 seconds

hive> create table buyer_log2 like buyer_log;

OK

Time taken: 0.095 seconds

将 buyer_log 中的数据插入到 buyer_log1 、buyer_log2 中:

hive> from buyer_log

> insert overwrite table buyer_log1 select *

> insert overwrite table buyer_log2 select *;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = root_20220312205124_ae99b1d8-9ada-4358-9b64-9c3d61c6de76

Total jobs = 5

Launching Job 1 out of 5

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1647086333827_0002, Tracking URL = http://server:8088/proxy/application_1647086333827_0002/

Kill Command = /usr/local/src/hadoop/bin/hadoop job -kill job_1647086333827_0002

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 0

2022-03-12 20:51:55,535 Stage-2 map = 0%, reduce = 0%

2022-03-12 20:52:14,808 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 2.05 sec

MapReduce Total cumulative CPU time: 2 seconds 50 msec

Ended Job = job_1647086333827_0002

Stage-5 is selected by condition resolver.

Stage-4 is filtered out by condition resolver.

Stage-6 is filtered out by condition resolver.

Stage-11 is selected by condition resolver.

Stage-10 is filtered out by condition resolver.

Stage-12 is filtered out by condition resolver.

Moving data to directory hdfs://192.168.64.183:9000/user/hive/warehouse/db.db/buyer_log1/.hive-staging_hive_2022-03-12_20-51-24_797_2412140504440982474-1/-ext-10000

Moving data to directory hdfs://192.168.64.183:9000/user/hive/warehouse/db.db/buyer_log2/.hive-staging_hive_2022-03-12_20-51-24_797_2412140504440982474-1/-ext-10002

Loading data to table db.buyer_log1

Loading data to table db.buyer_log2

MapReduce Jobs Launched:

Stage-Stage-2: Map: 1 Cumulative CPU: 2.05 sec HDFS Read: 14432909 HDFS Write: 28293834 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 50 msec

OK

Time taken: 51.7 seconds

6、多目录输出文件

将统一文件输出到本地不同文件中,提高效率,可以避免重复操作 from,将买家行为日志 buyer_log 表导入到本地 /data/hive-data/out1 、/data/hive-data/out2 中:

[root@server hive-data]# mkdir ./out1 //首先创建两个文件夹

[root@server hive-data]# mkdir ./out2

[root@server hive-data]# ll

总用量 23084

-rw-r--r--. 1 root root 102889 3月 6 10:52 buyer_favorite

-rw-r--r--. 1 root root 14427403 3月 6 10:52 buyer_log

-rw-r--r--. 1 root root 2164 3月 6 10:52 cat_group

-rw-r--r--. 1 root root 208799 3月 6 10:52 goods

-rw-r--r--. 1 root root 82421 3月 6 10:52 goods_visit

-rw-r--r--. 1 root root 8796085 3月 6 10:52 order_items

drwxr-xr-x. 2 root root 43 3月 6 11:50 out

drwxr-xr-x. 2 root root 6 3月 12 20:57 out1

drwxr-xr-x. 2 root root 6 3月 12 20:57 out2

-rw-r--r--. 1 root root 287 3月 6 10:52 sydata.txt

hive> from buyer_log

> insert overwrite local directory '/home/data/hive-data/out1' select *

> insert overwrite local directory '/home/data/hive-data/out2' select *;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = root_20220312210028_a1b22b5b-255a-44b0-9b87-8c43ed291451

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1647086333827_0003, Tracking URL = http://server:8088/proxy/application_1647086333827_0003/

Kill Command = /usr/local/src/hadoop/bin/hadoop job -kill job_1647086333827_0003

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 0

2022-03-12 21:00:48,788 Stage-2 map = 0%, reduce = 0%

2022-03-12 21:00:55,289 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 2.25 sec

MapReduce Total cumulative CPU time: 2 seconds 250 msec

Ended Job = job_1647086333827_0003

Moving data to local directory /home/data/hive-data/out1

Moving data to local directory /home/data/hive-data/out2

MapReduce Jobs Launched:

Stage-Stage-2: Map: 1 Cumulative CPU: 2.25 sec HDFS Read: 14432070 HDFS Write: 28293676 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 250 msec

OK

Time taken: 29.427 seconds

查看本地存储的文件:

[root@server hive-data]# ll ./out1

总用量 13816

-rw-r--r--. 1 root root 14146838 3月 12 21:00 000000_0

[root@server hive-data]# ll ./out2

总用量 13816

-rw-r--r--. 1 root root 14146838 3月 12 21:00 000000_0