FlinkX

FlinkX official website (the project has since been renamed ChunJun):

https://dtstack.github.io/chunjun-web/docs/chunjunDocs/intro

Example

mysql2hive.json

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [

{

"name": "id",

"type": "int"

},

{

"name": "username",

"type": "string"

},

{

"name": "password",

"type": "string"

}

],

"customSql": "",

"splitPk": "id",

"increColumn": "id",

"startLocation": "" , ## 初始设为“”

"polling": true,

"pollingInterval": 3000,

"queryTimeOut": 1000,

"username": "root",

"password": "123456",

"connection": [

{

"jdbcUrl": [

"jdbc:mysql://hadoop01:3306/web?useSSL=false"

],

"table": [

"job_user"

]

}

]

}

},

"writer": {

"name" : "hivewriter",

"parameter" : {

"jdbcUrl" : "jdbc:hive2://hadoop01:10000/default", ### hiverserver2 端口10000

"fileType" : "text",

"writeMode" : "overwrite", ### append | overwriter

"compress" : "",

"charsetName" : "UTF-8",

"tablesColumn" : "{\"flinkx_test\":[{\"key\":\"id\",\"type\":\"int\"},{\"key\":\"username\",\"type\":\"string\"},{\"key\":\"password\",\"type\":\"string\"}]}", ### 表若不存在则新建..

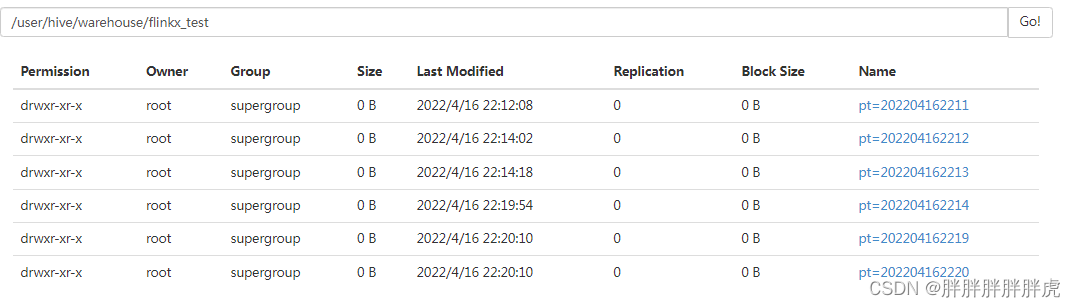

"partition" : "pt", ### 分区

"partitionType" : "MINUTE", ### 创建分区 MINUTE | DAY

"defaultFS" : "hdfs://hadoop01:9000",

"hadoopConfig": {

"hadoop.user.name": "root",

"dfs.ha.namenodes.ns": "hadoop01",

"fs.defaultFS": "hdfs://hadoop01:9000",

"dfs.nameservices": "hadoop01",

"fs.hdfs.impl.disable.cache": "true",

"fs.hdfs.impl": "org.apache.hadoop.hdfs.DistributedFileSystem"

}

}

}

}

],

"setting": {

"restore": {

"restoreColumnName": "id",

"maxRowNumForCheckpoint" : 0;

"isRestore" : false,

"restoreColumnName": "",

"restoreColumnIndex": 0

},

"speed": {

"channel": 1,

"bytes": 0

}

}

}

}
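`tablesColumn` is a JSON document stored inside a JSON string, so every inner quote has to be escaped by hand. If you generate job files from a script, the following minimal sketch builds the mapping as a dict and lets `json.dumps` handle the escaping. The helper name `build_tables_column` is hypothetical and not part of FlinkX.

```python
import json


def build_tables_column(table, columns):
    """Return the string value for the hivewriter `tablesColumn` field.

    Hypothetical helper: `columns` is a list of (column_name, hive_type) pairs.
    """
    mapping = {table: [{"key": name, "type": typ} for name, typ in columns]}
    # Compact separators reproduce the single-line form used in the job file.
    return json.dumps(mapping, separators=(",", ":"))


value = build_tables_column(
    "flinkx_test",
    [("id", "int"), ("username", "string"), ("password", "string")],
)
print(value)

# Serializing the writer parameter again adds the \" escapes seen in the job file.
print(json.dumps({"tablesColumn": value}))
```

This approach keeps the column list and the escaping in one place, so adding a column cannot produce an unbalanced quote.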

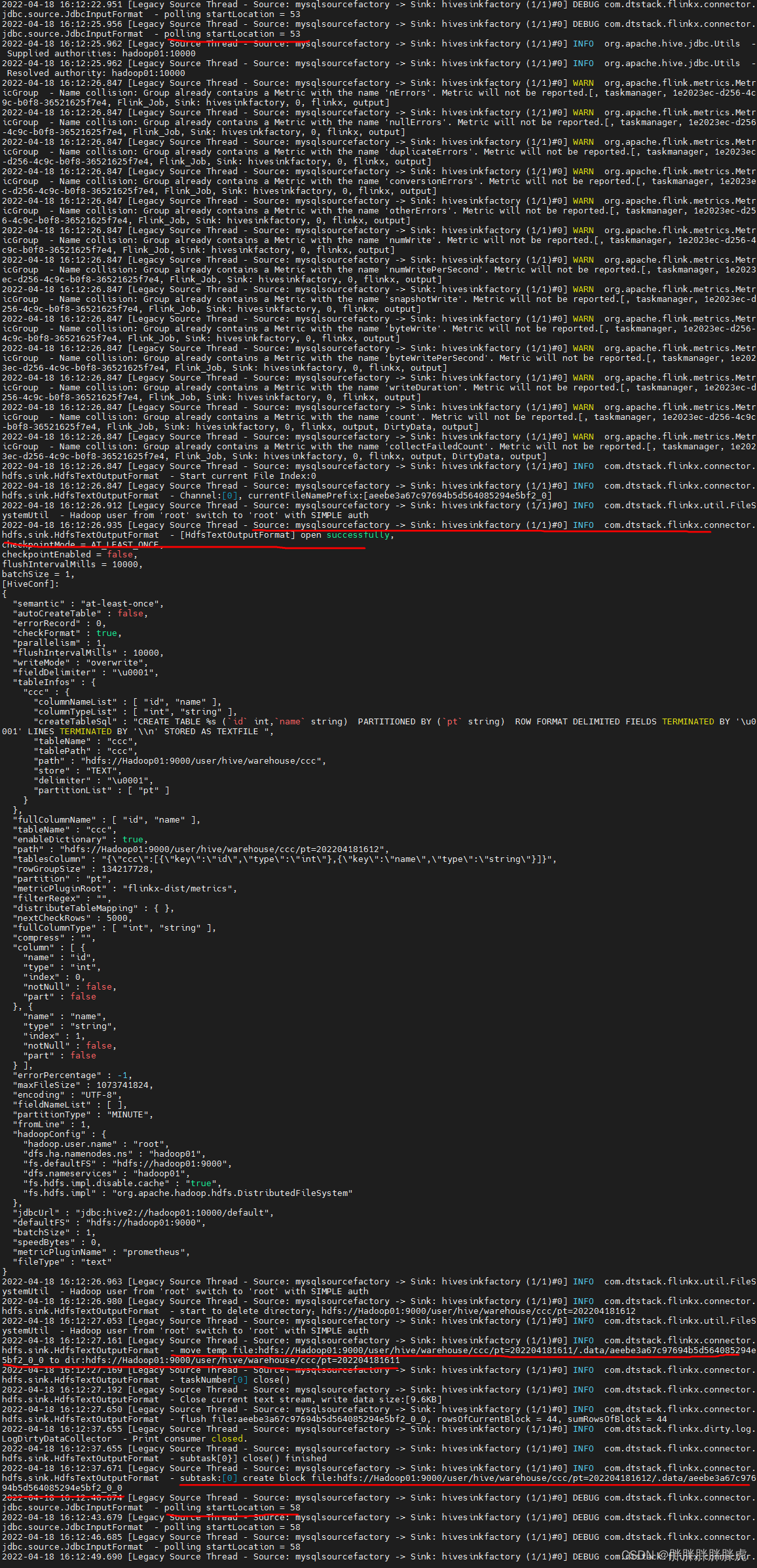
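The reader settings `polling: true`, `increColumn: "id"` and `pollingInterval: 3000` make `mysqlreader` re-query `job_user` periodically and pick up only rows whose `id` is beyond the last value already read. The sketch below illustrates that idea only. It is not FlinkX's code. An in-memory SQLite table stands in for the MySQL `job_user` table, and the function `poll_once` is hypothetical.

```python
import sqlite3


def poll_once(conn, last_id):
    """One polling round: fetch rows whose id exceeds the last id already read.

    Illustration only (hypothetical helper); FlinkX builds its own query internally.
    Returns (rows, new_last_id).
    """
    rows = conn.execute(
        "SELECT id, username, password FROM job_user WHERE id > ? ORDER BY id",
        (last_id,),
    ).fetchall()
    new_last = rows[-1][0] if rows else last_id
    return rows, new_last


# Stand-in for the MySQL `web` database used in the job file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE job_user (id INTEGER PRIMARY KEY, username TEXT, password TEXT)")
conn.executemany("INSERT INTO job_user VALUES (?, ?, ?)", [(1, "a", "x"), (2, "b", "y")])

rows, last = poll_once(conn, 0)        # first round reads ids 1 and 2
conn.execute("INSERT INTO job_user VALUES (3, 'c', 'z')")
rows2, last2 = poll_once(conn, last)   # next round reads only id 3
print(rows, last)
print(rows2, last2)
# A real poller would sleep pollingInterval / 1000 seconds between rounds.
```

Ordering by the increment column is what makes "last row read" a safe new high-water mark. This is also why the increment column should be monotonically increasing, such as an auto-increment `id`.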

```bash
bin/flinkx \
  -mode local \
  -job /opt/module/flinkx/job/mysql2hive.json \
  -jobType sync \
  -flinkxDistDir flinkx-dist \
  -flinkConfDir /opt/module/flinkx/flinkconf \
  -flinkLibDir /opt/module/flinkx/lib \
  -confProp "{\"flink.checkpoint.interval\":30000}"
```
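The file passed with `-job` must be valid JSON. Shell-style `#` comments, a `;` where a `,` belongs, or the same key written twice in one object (for example `restoreColumnName`) will either fail to parse or leave it unclear which value is used. The following minimal pre-flight check uses Python's standard `json` module and rejects both syntax errors and duplicate keys. The function names are hypothetical.

```python
import json


def reject_duplicates(pairs):
    """object_pairs_hook that raises on a repeated key inside one JSON object."""
    seen = {}
    for key, value in pairs:
        if key in seen:
            raise ValueError(f"duplicate key: {key!r}")
        seen[key] = value
    return seen


def load_job_strict(text):
    """Parse a job definition, failing on syntax errors and duplicate keys."""
    return json.loads(text, object_pairs_hook=reject_duplicates)


ok = load_job_strict('{"setting": {"restore": {"isRestore": false, "restoreColumnName": "id"}}}')
print(ok["setting"]["restore"]["restoreColumnName"])

for bad in ('{"a": 0;}', '{"restoreColumnName": "id", "restoreColumnName": ""}'):
    try:
        load_job_strict(bad)
    except ValueError as exc:  # json.JSONDecodeError is a subclass of ValueError
        print("rejected:", exc)
```

To check the job file from this example, call `load_job_strict(open("/opt/module/flinkx/job/mysql2hive.json", encoding="utf-8").read())` before running `bin/flinkx`.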

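Start the HiveServer2 service from Hive's `bin` directory. The hivewriter connects through `jdbc:hive2://hadoop01:10000`, so HiveServer2 must be running before the job is submitted:

```bash
./hive --service hiveserver2
```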
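HiveServer2 can take a while to start listening, so it helps to confirm the port is reachable before submitting the job. The following minimal sketch performs only a TCP connect. A success shows that something is listening on the port, not that HiveServer2 is fully ready. The helper name `port_open` is hypothetical. The host and port come from the writer's `jdbcUrl`.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and unresolvable hosts
        return False
```

For this setup, `port_open("hadoop01", 10000)` should return `True` once HiveServer2 is up.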