
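1. Prepare the environment

Virtual machine: VMware Workstation 16

Linux version: CentOS 7

Distributed environment: Hadoop 3.1.1

(1) Create three virtual machines, set up Linux and Hadoop on them, and make sure the Hadoop cluster runs successfully.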

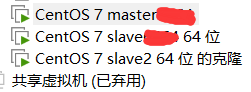

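After Hadoop starts successfully, the DataNode page of the Hadoop web UI looks like this.

The master host is named master (it does not appear on this page), and the two workers are named slave1 and slave2. These are the hostnames given to the three machines when Hadoop was configured.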

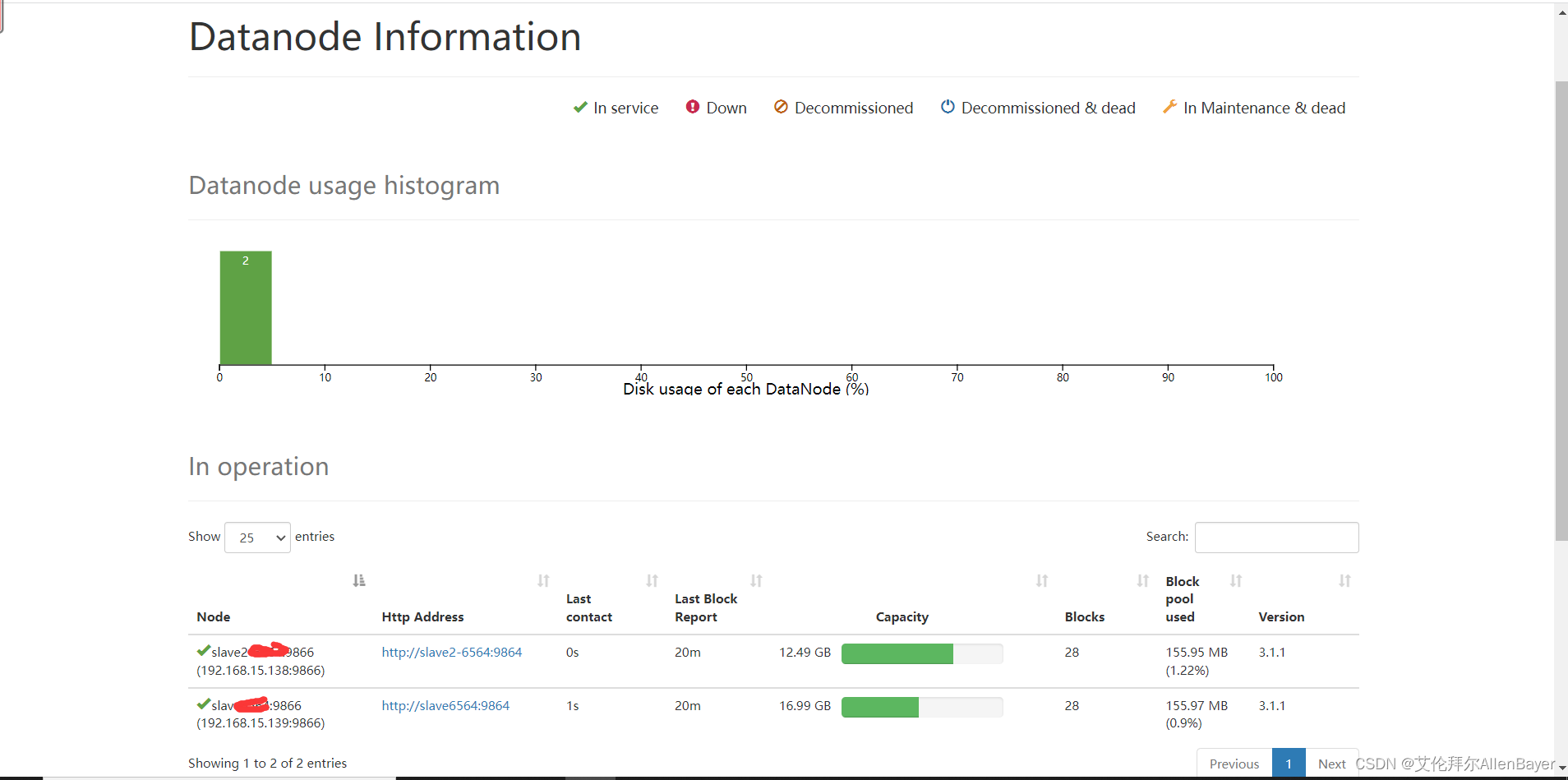

(2) Prepare the installation files

The following packages are needed:

Spark 2.4.0 (spark-2.4.0-bin-without-hadoop.tgz)

Scala 2.11.8

Download the Scala archive from the official Scala website: https://www.scala-lang.org/download/

Editor: I use IntelliJ IDEA.

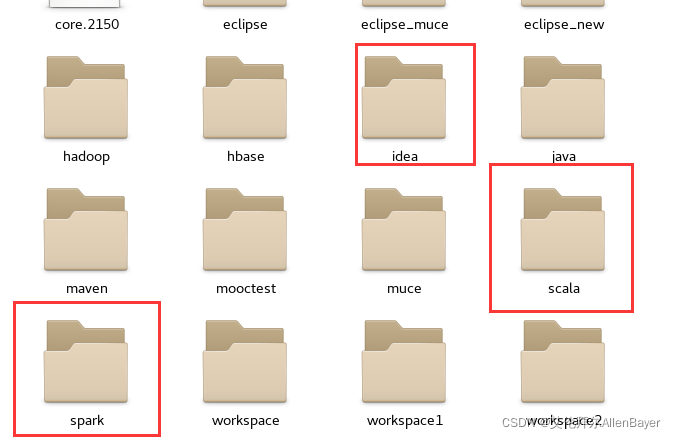

On master, upload these packages with Xftp.

2. Install Spark and configure the environment

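(1) Install and configure Spark

Install Spark on master. Open a terminal in the directory that holds the uploaded Spark package, copy the package to /usr/local, extract it there, and rename the extracted directory to spark:

```
# cp spark-2.4.0-bin-without-hadoop.tgz /usr/local
# cd /usr/local
# tar -zxf spark-2.4.0-bin-without-hadoop.tgz
# mv ./spark-2.4.0-bin-without-hadoop ./spark
# chown -R root:root ./spark
```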

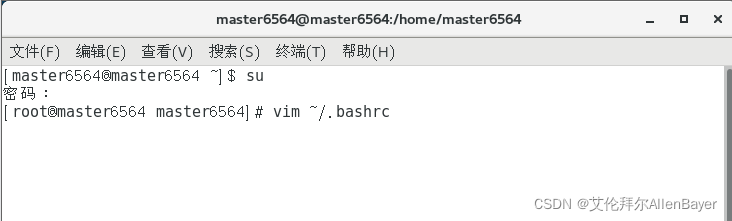

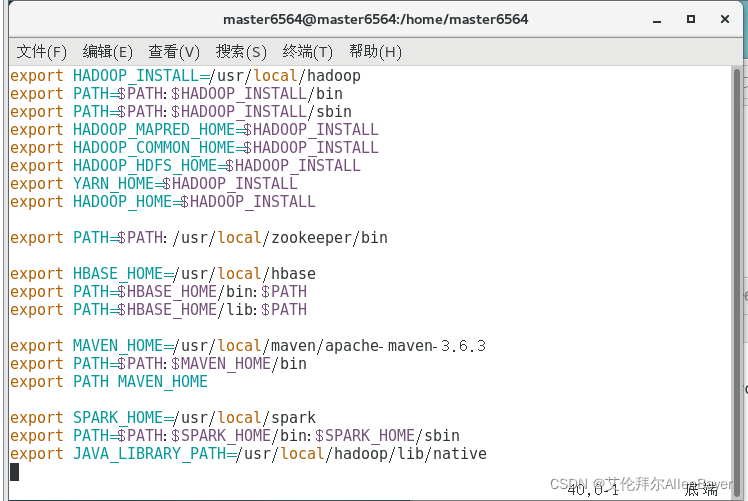

(2) Configure environment variables

```
# vim ~/.bashrc
```

Add the following lines, then save and quit:

```
export SPARK_HOME=/usr/local/spark
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
export JAVA_LIBRARY_PATH=/usr/local/hadoop/lib/native
```

Reload the file:

```
# source ~/.bashrc
```

Set the same variables system-wide as well:

```
# vim /etc/profile
```

Add the following:

```
export SPARK_HOME=/usr/local/spark
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
```

Don't forget to reload it:

```
# source /etc/profile
```

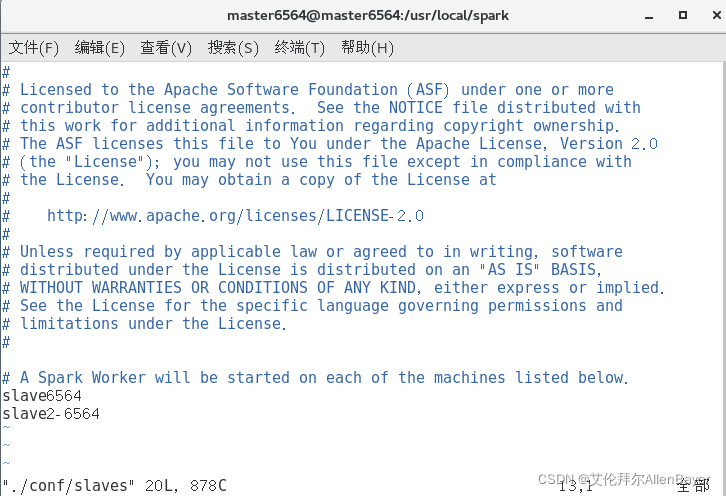

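(3) Configure the slaves file

Open a new terminal:

```
[root@master ~]# cd /usr/local/spark
[root@master spark]# cp ./conf/slaves.template ./conf/slaves
[root@master spark]# vim ./conf/slaves
```

Enter the hostnames of the two workers, one per line (slave1 and slave2 here). Replace the localhost entry that the template ends with; otherwise a worker is also started on master itself.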
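Spark's sbin/slaves.sh (called by start-slaves.sh) reads this file by stripping everything after a #, dropping empty lines, and splitting the rest on whitespace, so the template's comment lines are harmless. The Scala sketch below only illustrates those rules; Spark itself does this in shell, and the object name `SlavesFile` is hypothetical.

```scala
object SlavesFile {
  /** Worker hostnames from conf/slaves text, following the rules of Spark's sbin/slaves.sh. */
  def parseHosts(content: String): List[String] =
    content
      .split("\n")
      .iterator
      .map(_.takeWhile(_ != '#'))    // like sed "s/#.*$//": drop comments
      .flatMap(_.trim.split("\\s+")) // like the shell for-loop: split on whitespace
      .filter(_.nonEmpty)            // blank lines leave "" behind; skip it
      .toList

  def main(args: Array[String]): Unit = {
    // Hypothetical file content after editing: the template's comment plus two workers
    val example =
      """# A Spark Worker will be started on each of the machines listed below.
        |slave1
        |slave2
        |""".stripMargin
    parseHosts(example).foreach(println)
  }
}
```

With the example content, the two hostnames slave1 and slave2 are printed; a leftover localhost line would show up here as a third host.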

Still in the installed Spark directory (/usr/local/spark), run the following commands to create and edit spark-env.sh:

```
[root@master spark]# cp ./conf/spark-env.sh.template ./conf/spark-env.sh
[root@master spark]# vim ./conf/spark-env.sh
```

Add the following:

```
export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)
export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop
export SPARK_MASTER_IP=192.168.50.194
export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64
export HADOOP_HOME=/usr/local/hadoop
export SPARK_WORKER_MEMORY=1024m
export SPARK_WORKER_CORES=1
```

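Note: on the third line, export SPARK_MASTER_IP=192.168.50.194, use the IP address of your own master. In Spark 2.x, SPARK_MASTER_IP is deprecated and the start scripts read SPARK_MASTER_HOST instead, so you may want to set SPARK_MASTER_HOST to the same address as well.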
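Because this tutorial uses the without-hadoop package, Spark only finds the Hadoop classes through SPARK_DIST_CLASSPATH, and a missing line in spark-env.sh typically surfaces later as a class-not-found error at startup. A minimal Scala sketch for listing which variables the file assigns is shown below. The object name, the regex, and the `Required` list are assumptions chosen for this tutorial's setup, and shell expressions such as $(...) are kept as raw text rather than evaluated.

```scala
object SparkEnvCheck {
  // Matches "export KEY=VALUE" or "KEY=VALUE"; commented-out lines do not match
  private val Assignment = """(?:export\s+)?([A-Za-z_][A-Za-z0-9_]*)=(.*)""".r

  /** Collects KEY -> raw VALUE pairs from spark-env.sh text (values are not shell-evaluated). */
  def parse(content: String): Map[String, String] =
    content
      .split("\n")
      .iterator
      .map(_.trim)
      .collect { case Assignment(key, value) => key -> value.trim }
      .toMap

  /** Variables this tutorial's setup relies on (an assumption, not an official Spark list). */
  val Required: Seq[String] =
    Seq("JAVA_HOME", "HADOOP_CONF_DIR", "SPARK_DIST_CLASSPATH", "SPARK_MASTER_IP")

  def missing(env: Map[String, String]): Seq[String] = Required.filterNot(env.contains)

  def main(args: Array[String]): Unit = {
    val path = if (args.nonEmpty) args(0) else "/usr/local/spark/conf/spark-env.sh"
    val source = scala.io.Source.fromFile(path)
    val env = try parse(source.mkString) finally source.close()
    val absent = missing(env)
    if (absent.isEmpty) println("All required variables are set")
    else println("Missing: " + absent.mkString(", "))
  }
}
```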

(4) Configure the worker nodes

To copy the Spark installation from master to the workers, first run the following on master:

```
[root@master spark]# cd /usr/local/
[root@master local]# tar -zcf ~/spark.master.tar.gz ./spark
[root@master local]# cd ~
[root@master ~]# scp ./spark.master.tar.gz slave1:/home/spark.master.tar.gz
[root@master ~]# scp ./spark.master.tar.gz slave2:/home/spark.master.tar.gz
```

In the scp commands, replace slave1 and slave2 with your own worker hostnames.

Then switch to the slave1 VM, open a terminal, and run:

```
[root@slave1 ~]# rm -rf /usr/local/spark
[root@slave1 ~]# tar -zxf /home/spark.master.tar.gz -C /usr/local
[root@slave1 ~]# chown -R root /usr/local/spark
```

This puts the Spark installation configured on master onto slave1.

Run the same commands on slave2:

```
[root@slave2 ~]# rm -rf /usr/local/spark
[root@slave2 ~]# tar -zxf /home/spark.master.tar.gz -C /usr/local
[root@slave2 ~]# chown -R root /usr/local/spark
```

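(5) Start the Spark cluster

Start Hadoop first. Spark's sbin directory also contains a start-all.sh, so the full path makes sure the Hadoop script is the one that runs:

```
[root@master ~]# /usr/local/hadoop/sbin/start-all.sh
[root@master ~]# jps
```

Output:

```
25136 SecondaryNameNode
25525 ResourceManager
25687 Jps
17434 -- process information unavailable
24844 NameNode
```

Hadoop has started successfully.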
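Comparing jps output by eye gets tedious across three machines. Judging from the outputs in this tutorial, master should end up running NameNode, SecondaryNameNode, ResourceManager and (after the next step) Master, while each worker runs DataNode, NodeManager and Worker. The Scala sketch below encodes that expectation; the object name and the expected-process sets are assumptions taken from this tutorial's outputs, not lists defined by Hadoop or Spark.

```scala
object JpsCheck {
  // Expected daemons per role, taken from the jps outputs in this tutorial
  val MasterDaemons: Set[String] = Set("NameNode", "SecondaryNameNode", "ResourceManager", "Master")
  val WorkerDaemons: Set[String] = Set("DataNode", "NodeManager", "Worker")

  /** Process names from jps output lines such as "24844 NameNode". */
  def processNames(jpsOutput: String): Set[String] =
    jpsOutput
      .split("\n")
      .iterator
      .map(_.trim.split("\\s+", 2))
      .collect { case Array(pid, name) if pid.nonEmpty && pid.forall(_.isDigit) => name }
      .toSet

  /** Expected daemons that do not appear in the output. */
  def missing(jpsOutput: String, expected: Set[String]): Set[String] =
    expected -- processNames(jpsOutput)

  def main(args: Array[String]): Unit = {
    // The Hadoop-only output above: Master stays missing until start-master.sh has run
    val sample =
      """25136 SecondaryNameNode
        |25525 ResourceManager
        |25687 Jps
        |24844 NameNode""".stripMargin
    println("Missing on master: " + missing(sample, MasterDaemons).mkString(", "))
  }
}
```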

Next, start the Spark cluster.

Start the master node:

```
[root@master ~]# cd /usr/local/spark
[root@master spark]# sbin/start-master.sh
[root@master spark]# jps
```

Output:

```
25136 SecondaryNameNode
26161 Master
26225 Jps
25525 ResourceManager
17434 -- process information unavailable
24844 NameNode
```

Start all worker nodes from master, then run jps on slave1:

```
[root@master spark]# sbin/start-slaves.sh
[root@slave1 spark]# jps
23664 Worker
23777 Jps
23234 NodeManager
23094 DataNode
```

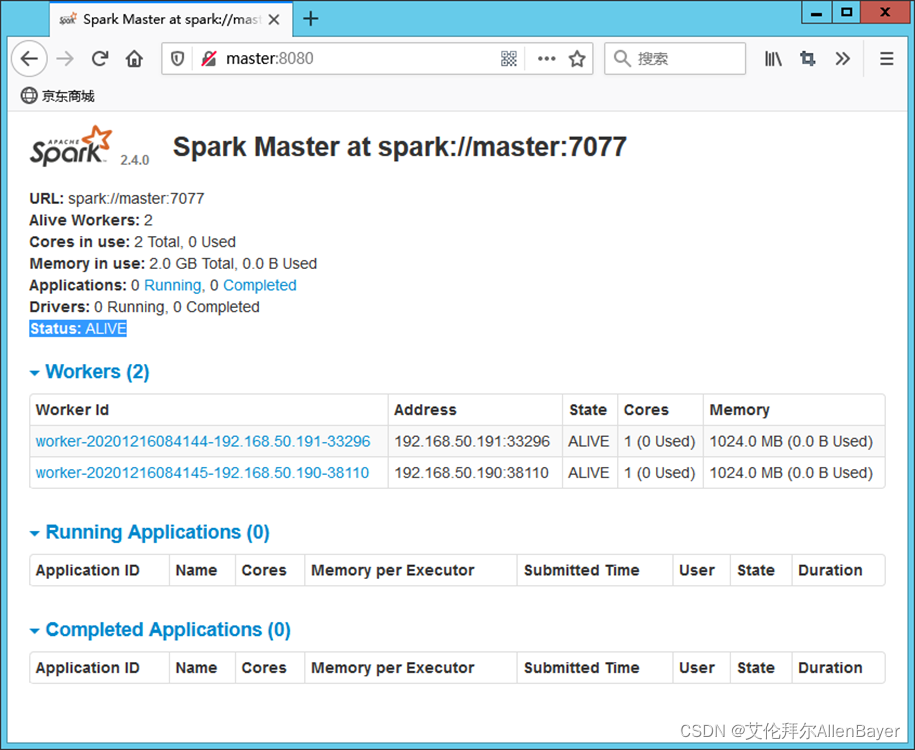

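Check the cluster status

Open the browser built into the Linux desktop and go to http://master:8080. If the Spark Master page appears, the cluster has started successfully.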

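Start Spark at boot

Edit /etc/rc.local:

```
[root@master spark]# vim /etc/rc.local
```

and add:

```
su - root -c /usr/local/hadoop/sbin/start-all.sh
su - root -c /usr/local/spark/sbin/start-master.sh
su - root -c /usr/local/spark/sbin/start-slaves.sh
```

On CentOS 7, /etc/rc.local is a link to /etc/rc.d/rc.local, which only runs at boot if it is executable, so also run chmod +x /etc/rc.d/rc.local.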

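Stop the Spark cluster (and then Hadoop)

```
[root@master spark]# cd /usr/local/spark
[root@master spark]# sbin/stop-master.sh
[root@master spark]# sbin/stop-slaves.sh
[root@master spark]# /usr/local/hadoop/sbin/stop-all.sh
```

The last command stops Hadoop. Spark's sbin directory also contains a stop-all.sh, so use the full path to run the Hadoop script.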

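3. Install Scala and configure the environment

Do this on the master and on every worker.

As with Spark, copy the archive to /usr/local and extract it there:

```
# cp scala-2.11.8.tgz /usr/local
# cd /usr/local
# tar -zxf scala-2.11.8.tgz
# mv ./scala-2.11.8 ./scala
# chown -R root:root ./scala
```

Configure the environment variables:

```
# vim ~/.bash_profile
```

Add:

```
export SCALA_HOME=/usr/local/scala
export PATH=${SCALA_HOME}/bin:$PATH
```

Because the extracted directory was renamed to scala, SCALA_HOME points to /usr/local/scala rather than /usr/local/scala/scala-2.11.8.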

刷新环境变量

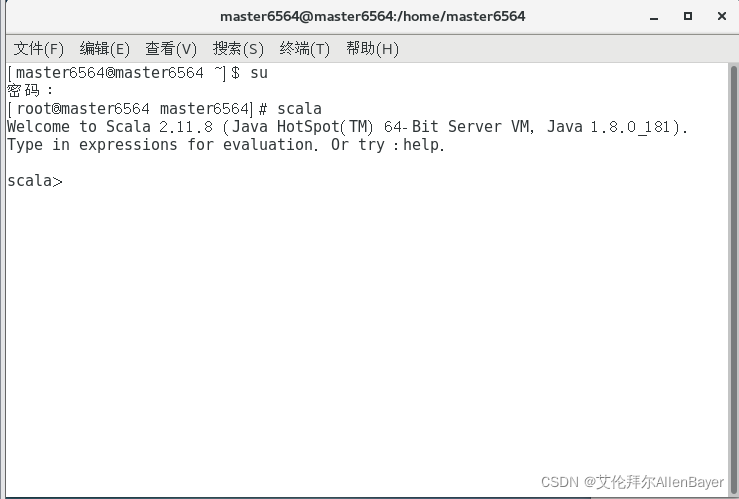

source ~/.bash_profile 验证安装

[root@master6564 master6564]# scala

安装成功

从机步骤完全相同
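Starting the REPL shows that some Scala is on the PATH, but the prebuilt Spark 2.4.0 package is compiled against Scala 2.11, so it is worth confirming the version line too. A minimal sketch, assuming the 2.11 target used in this tutorial: `scala.util.Properties.versionNumberString` is part of the Scala standard library (typing it into the REPL prints the version directly), while the object and method names here are hypothetical.

```scala
object ScalaVersionCheck {
  /** True when a full version such as "2.11.8" belongs to a binary line such as "2.11". */
  def onLine(fullVersion: String, line: String): Boolean =
    fullVersion == line || fullVersion.startsWith(line + ".")

  def main(args: Array[String]): Unit = {
    // Version of the Scala library this program runs on, e.g. "2.11.8"
    val running = scala.util.Properties.versionNumberString
    val expected = "2.11" // assumption: the Scala line of the prebuilt Spark 2.4.0 package
    println(s"Running Scala $running; on the $expected line: ${onLine(running, expected)}")
  }
}
```

Comparing against "2.11." with the trailing dot avoids accidentally accepting a version such as 2.110.0.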

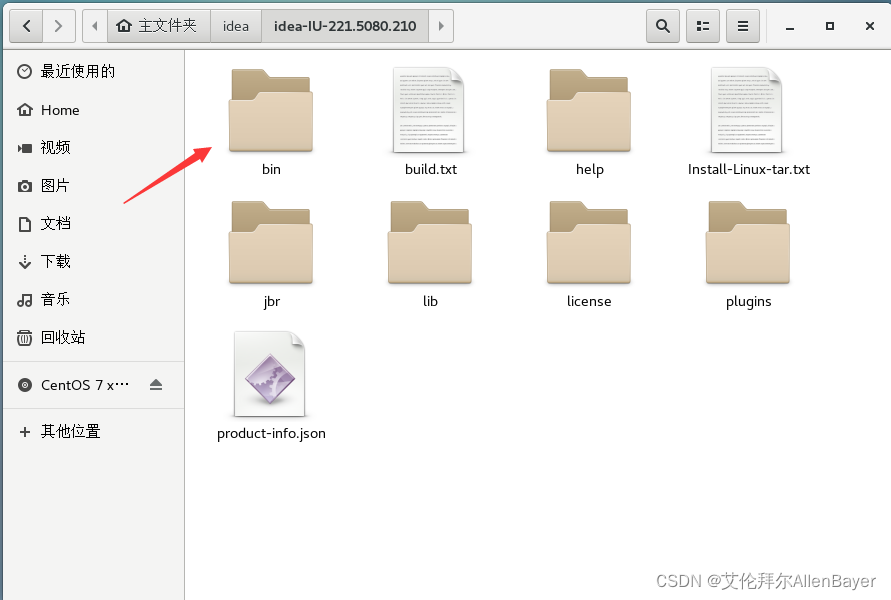

4.安装编辑器idea

在jetbrains官网上下载idea Ultimate linux版

在master新建一个idea命名为idea,用xftp传输到文件夹中,解压

tar -zxvf??ideaIU-2021.2.tar.gz进入bin目录

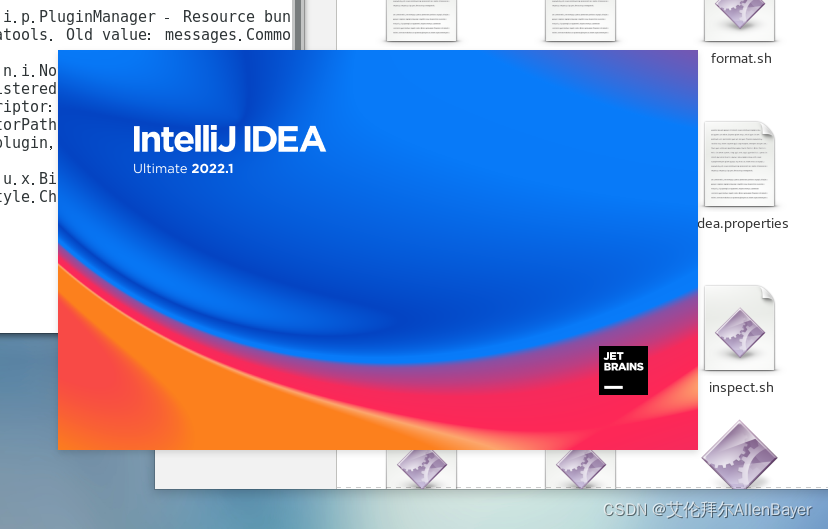

在bin目录中打开终端,输入以下指令来启动idea

./idea.sh?

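IDEA starts successfully.

Creating a desktop shortcut will be covered later.

Buying a genuine license is recommended; if you would rather not buy one, you will have to sort out licensing yourself.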

5. Write a Spark Scala application that counts words

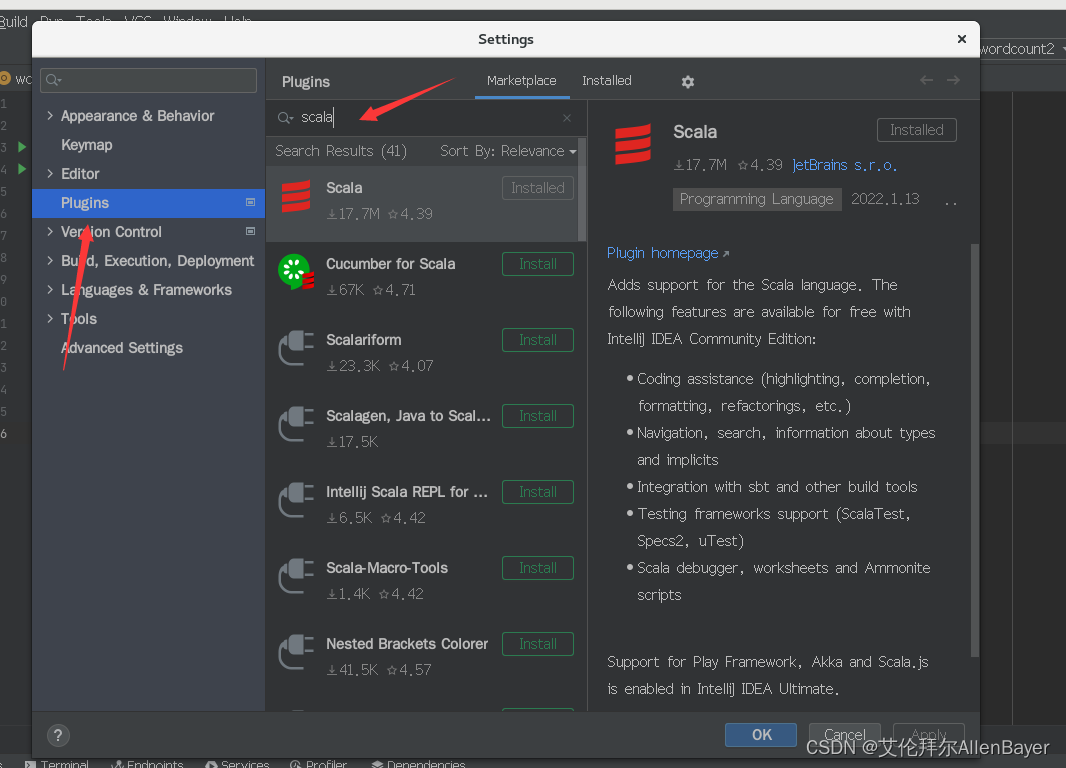

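(1) Configure the Scala environment in IDEA

In IDEA's settings, install the Scala plugin. If you want a Chinese interface, also install the Chinese language pack plugin.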

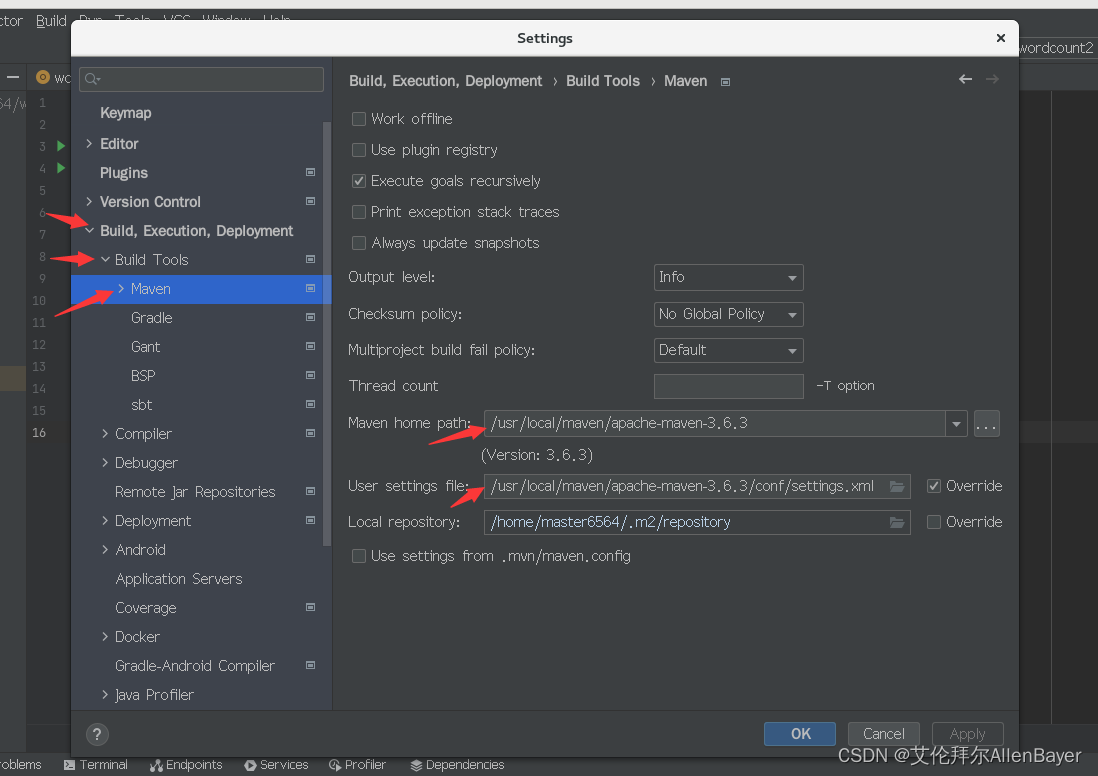

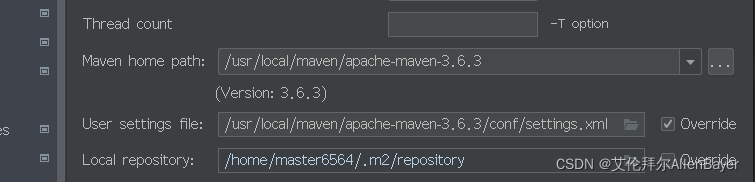

Next, configure Maven in IDEA.

Select your own Maven installation and settings file here; I kept the default local repository.

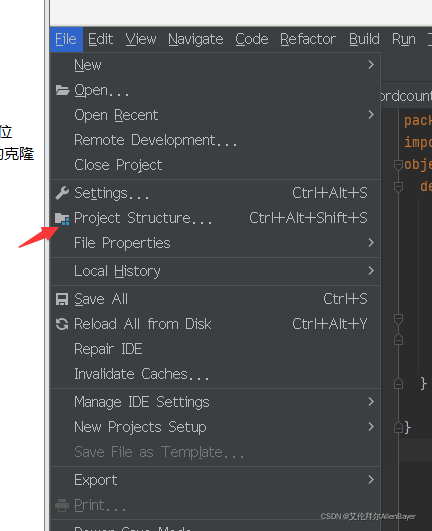

Then open the project settings (Project Structure).

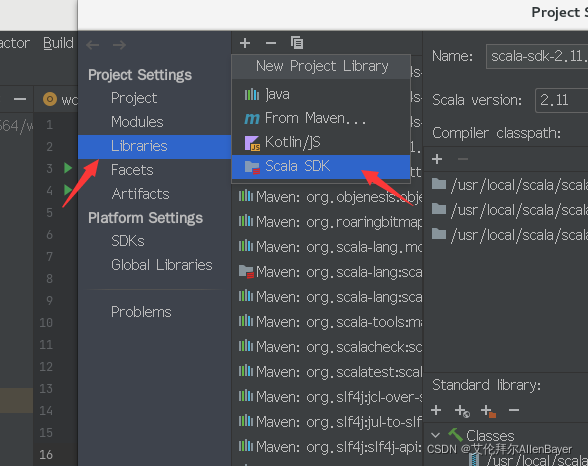

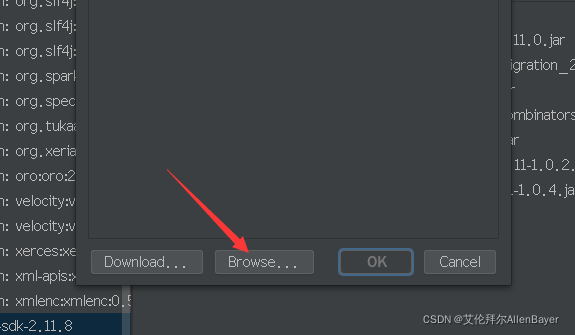

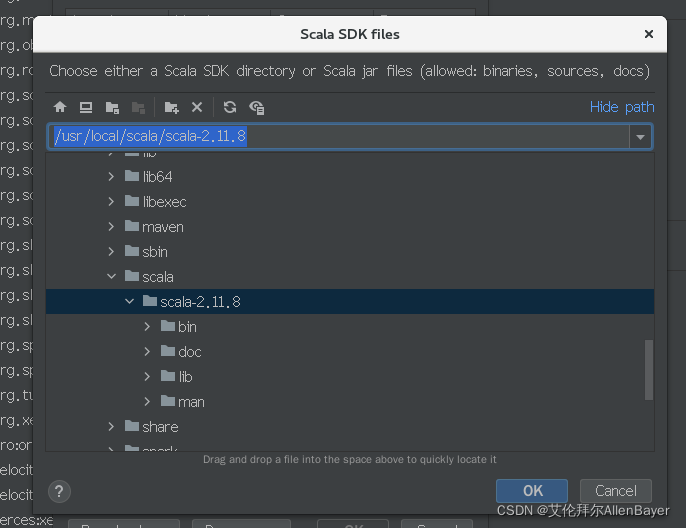

Under Libraries, add a Scala SDK and choose the local Scala installation (/usr/local/scala).

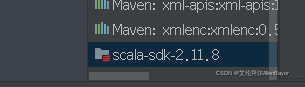

Once the Scala SDK is listed under Libraries, it has been added successfully.

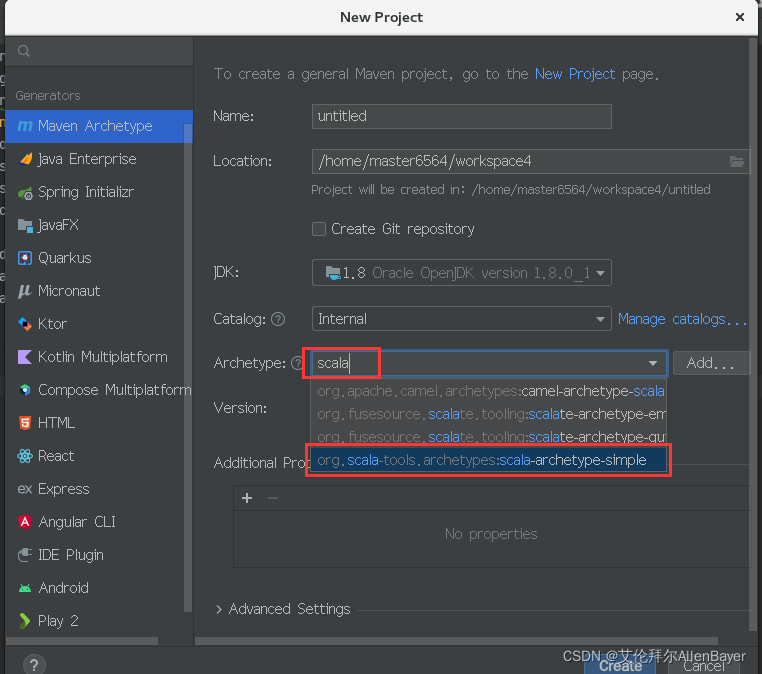

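Create a new Maven project and choose the scala-archetype-simple archetype.

After the project is created, edit pom.xml. The configuration below matches my Scala and Spark versions. Note that Spark is extremely sensitive to the Scala version: the _2.11 suffix in spark-core_2.11 must match the Scala binary version of scala.version (2.11.8, i.e. 2.11).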

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>org.example</groupId>
  <artifactId>wordcount</artifactId>
  <version>1.0-SNAPSHOT</version>
  <inceptionYear>2008</inceptionYear>

  <properties>
    <scala.version>2.11.8</scala.version>
  </properties>

  <!-- scala-tools.org has been offline for years; if Maven reports errors for these
       repositories, remove them (spark-core and scala-library are on Maven Central) -->
  <repositories>
    <repository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </repository>
  </repositories>

  <pluginRepositories>
    <pluginRepository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </pluginRepository>
  </pluginRepositories>

  <dependencies>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <!-- the _2.11 suffix must match the Scala binary version of scala.version -->
      <artifactId>spark-core_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <!-- spark -->
    <!-- <dependency>-->
    <!--   <groupId>org.apache.spark</groupId>-->
    <!--   <artifactId>spark-sql_2.11</artifactId>-->
    <!--   <version>2.1.1</version>-->
    <!--   <scope>provided</scope>-->
    <!-- </dependency>-->
    <!-- <dependency>-->
    <!--   <groupId>org.apache.spark</groupId>-->
    <!--   <artifactId>spark-streaming_2.11</artifactId>-->
    <!--   <version>2.1.1</version>-->
    <!-- </dependency>-->
    <!-- <dependency>-->
    <!--   <groupId>org.apache.spark</groupId>-->
    <!--   <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>-->
    <!--   <version>2.1.1</version>-->
    <!-- </dependency>-->
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.4</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.specs</groupId>
      <artifactId>specs</artifactId>
      <version>1.2.5</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.scala-tools</groupId>
      <artifactId>maven-scala-plugin</artifactId>
      <version>2.12</version>
    </dependency>
    <dependency>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-eclipse-plugin</artifactId>
      <version>2.5.1</version>
    </dependency>
  </dependencies>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
          <args>
            <arg>-target:jvm-1.5</arg>
          </args>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-eclipse-plugin</artifactId>
        <configuration>
          <downloadSources>true</downloadSources>
          <buildcommands>
            <buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>
          </buildcommands>
          <additionalProjectnatures>
            <projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>
          </additionalProjectnatures>
          <classpathContainers>
            <classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>
            <classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>
          </classpathContainers>
        </configuration>
      </plugin>
    </plugins>
  </build>

  <reporting>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
        </configuration>
      </plugin>
    </plugins>
  </reporting>
</project>
```

After saving, reload the Maven project.
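The version rule from the pom can be stated precisely: the suffix after the last underscore in an artifactId such as spark-core_2.11 names the Scala binary version it was compiled for, and it has to equal the first two components of scala.version (2.11.8 → 2.11). Mixing them typically shows up only at run time, as NoSuchMethodError or ClassNotFoundException. A small sketch of that check, with hypothetical names and assuming artifact IDs follow the usual name_binaryVersion convention:

```scala
object ArtifactSuffixCheck {
  /** "2.11.8" -> "2.11": the part of a Scala version that binary compatibility depends on. */
  def binaryVersion(scalaVersion: String): String =
    scalaVersion.split('.').take(2).mkString(".")

  /** "spark-core_2.11" -> Some("2.11"); None when the artifactId has no "_" suffix. */
  def suffixOf(artifactId: String): Option[String] = {
    val i = artifactId.lastIndexOf('_')
    if (i < 0) None else Some(artifactId.substring(i + 1))
  }

  /** True when the artifact's Scala suffix matches the project's Scala version. */
  def consistent(artifactId: String, scalaVersion: String): Boolean =
    suffixOf(artifactId).contains(binaryVersion(scalaVersion))

  def main(args: Array[String]): Unit = {
    val scalaVersion = "2.11.8" // value of <scala.version> in the pom
    for (artifact <- Seq("spark-core_2.11", "spark-core_2.12")) {
      val verdict = if (consistent(artifact, scalaVersion)) "OK" else "mismatch"
      println(s"$artifact with Scala $scalaVersion: $verdict")
    }
  }
}
```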

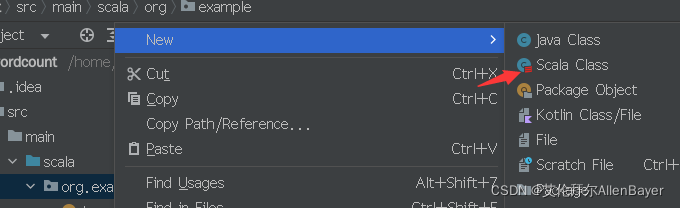

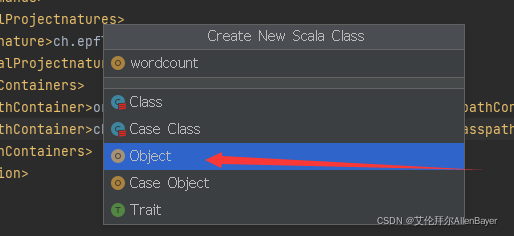

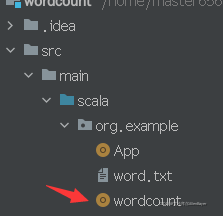

Create a new Scala class under src.

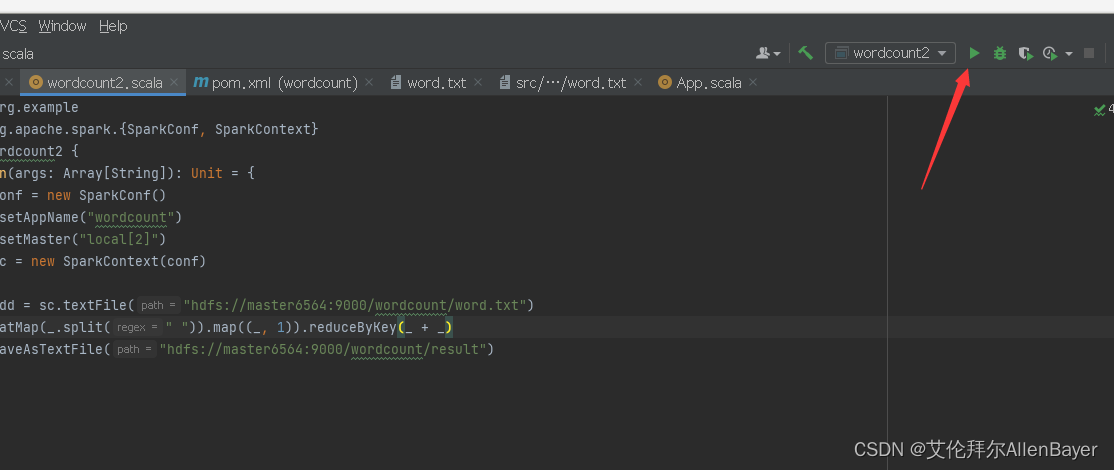

Write the code:

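```scala
package org.example

import org.apache.spark.{SparkConf, SparkContext}

object wordcount {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
      .setAppName("wordcount")
      // local[2]: run inside IDEA with 2 local threads instead of submitting to the cluster
      .setMaster("local[2]")
    val sc = new SparkContext(conf)

    // Read the input from HDFS, split each line on spaces, and count each word
    val rdd = sc.textFile("hdfs://master6564:9000/wordcount/word.txt")
      .flatMap(_.split(" "))
      .map((_, 1))
      .reduceByKey(_ + _)

    // The output directory must not exist yet, otherwise saveAsTextFile fails
    rdd.saveAsTextFile("hdfs://master6564:9000/wordcount/result")

    sc.stop()
  }
}
```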

Replace master6564:9000 with your own master's hostname and HDFS port (the address configured as fs.defaultFS in Hadoop's core-site.xml).
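The chain flatMap, map, reduceByKey is the whole word-count algorithm. To see what each step produces without a cluster, the same logic can be written over an ordinary Scala collection; here groupBy plus a sum stands in for reduceByKey, which in Spark combines the values of each key across partitions. This is an illustrative sketch with a hypothetical object name, not part of the Spark job.

```scala
object LocalWordCount {
  /** Same steps as the Spark job, applied to in-memory lines instead of an HDFS file. */
  def count(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))  // like flatMap(_.split(" ")): one element per word
      .map(word => (word, 1)) // like map((_, 1)): pair each word with a count of 1
      .groupBy(_._1)          // gather the pairs that share a word...
      .map { case (word, pairs) => word -> pairs.map(_._2).sum } // ...and add up their counts

  def main(args: Array[String]): Unit = {
    val lines = Seq("hello spark", "hello hadoop")
    // saveAsTextFile writes each pair with Tuple2.toString, so lines look like (hello,2)
    count(lines).toSeq.sortBy(_._1).foreach(println)
  }
}
```

One detail the sketch makes visible: split(" ") turns two consecutive spaces into an empty-string "word", and the Spark job behaves the same way, so input with irregular spacing may be better split on "\\s+" with empty strings filtered out.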

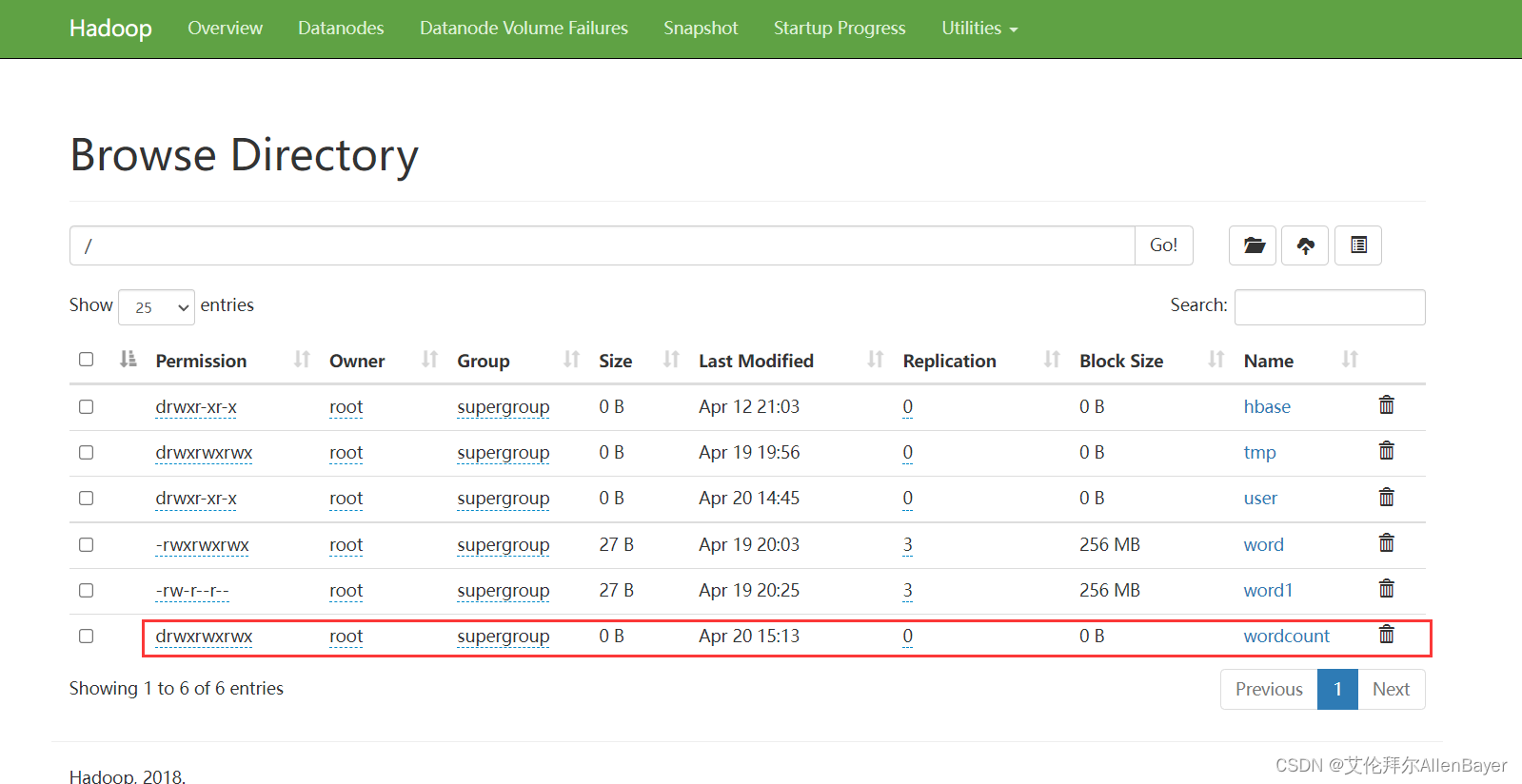

(2) Prepare the HDFS input file

Create a local text file named word.txt, type in some words, and save it.

Start Hadoop and Spark.

Start Hadoop:

```
# cd /usr/local/hadoop
# sbin/start-all.sh
```

Start Spark:

```
# cd /usr/local/spark
# sbin/start-master.sh
# sbin/start-slaves.sh
```

Once both are running, create a wordcount directory in HDFS:

```
[root@master6564 ~]# hadoop fs -mkdir /wordcount
[root@master6564 ~]# hadoop fs -chmod -R 777 /wordcount
```

Upload the input file word.txt:

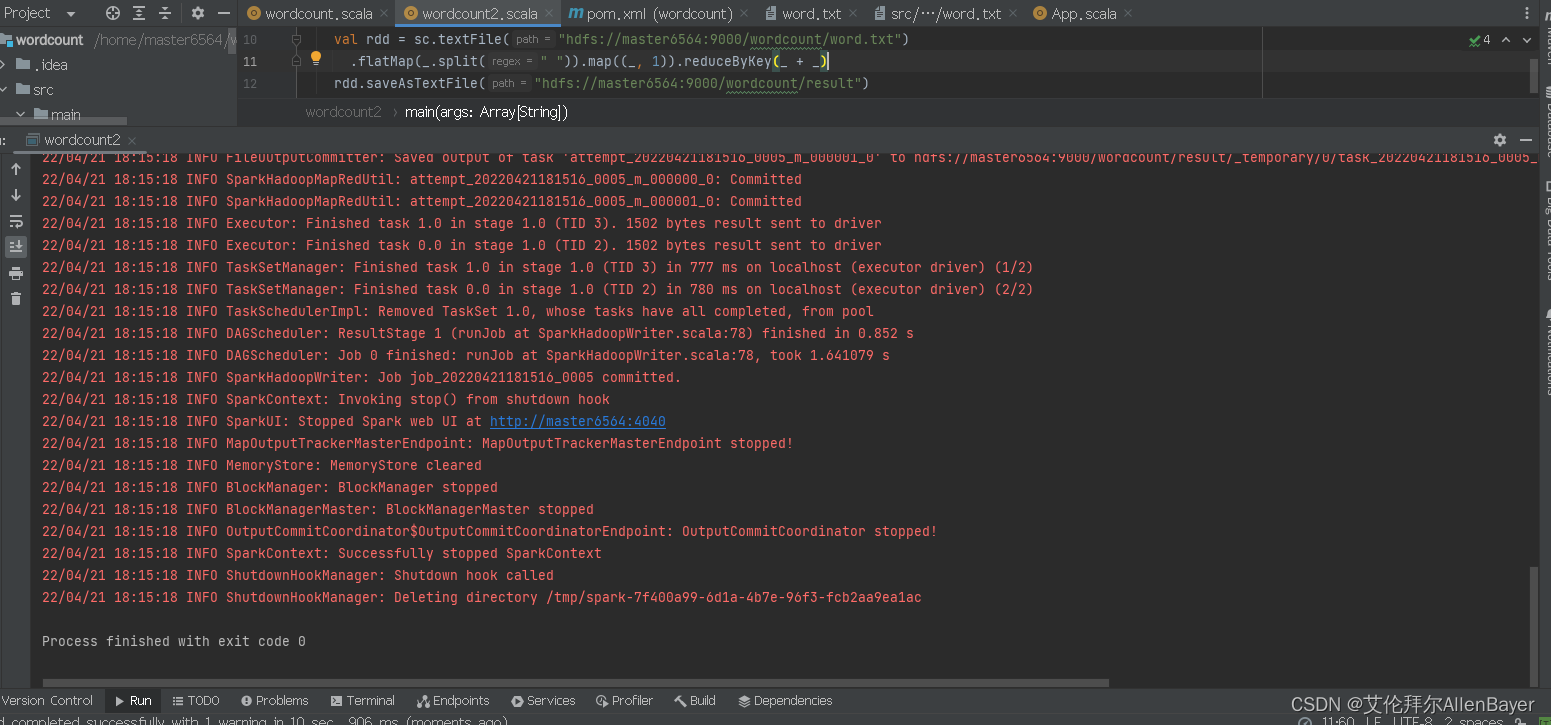

```
# hadoop fs -put word.txt /wordcount
```

Then go back to IDEA and run the code.

It runs successfully.

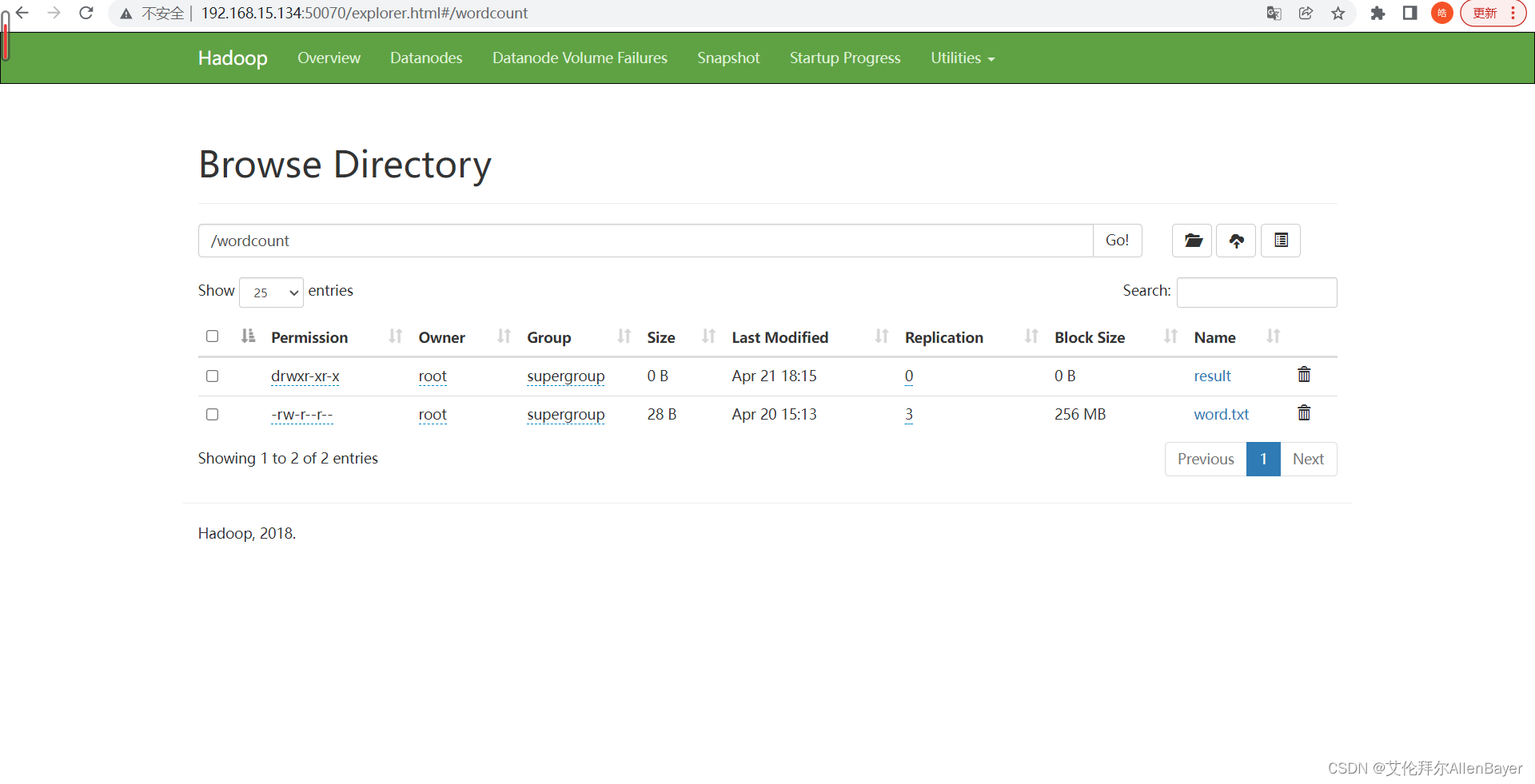

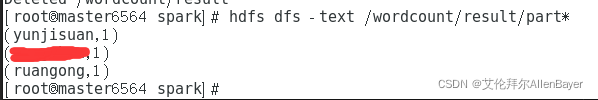

Check the result

A result directory now exists in HDFS. View its contents from the terminal:

```
hdfs dfs -text /wordcount/result/part*
```
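Each line in the part-* files is the toString of a (word, count) tuple, for example (hello,2). If you want to load the result back into a program, for instance to check totals, a minimal parser could look like the sketch below; the object name is hypothetical, and it assumes counts are non-negative integers.

```scala
object WordCountResult {
  // "(word,count)": the greedy (.*) backtracks to the last comma, so words containing commas still parse
  private val Line = """\((.*),(\d+)\)""".r

  /** Parses lines such as "(hello,2)"; lines that do not match are skipped. */
  def parse(lines: Seq[String]): Map[String, Int] =
    lines.map(_.trim).collect { case Line(word, n) => word -> n.toInt }.toMap

  def main(args: Array[String]): Unit = {
    val sample = Seq("(hello,2)", "(spark,1)", "(hadoop,1)")
    val counts = parse(sample)
    println(s"${counts.size} distinct words, ${counts.values.sum} words in total")
  }
}
```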

Done.

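6. Configure Spark on YARN

Modify the configuration files on the master and the workers.

First, add the following to Hadoop's yarn-site.xml:

```xml
<property>
  <name>yarn.nodemanager.pmem-check-enabled</name>
  <value>false</value>
</property>
<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>
```

These two settings stop the NodeManagers from killing containers that exceed their physical or virtual memory allocation.

Then edit spark-env.sh in the Spark conf directory and add the following:

```
[root@master conf]$ vi spark-env.sh
```

```
YARN_CONF_DIR=/usr/local/hadoop/etc/hadoop
HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop
```

Make these changes on the master and on every worker (you can copy the files over with scp as before), then restart Hadoop so that the NodeManagers pick up the new yarn-site.xml settings.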

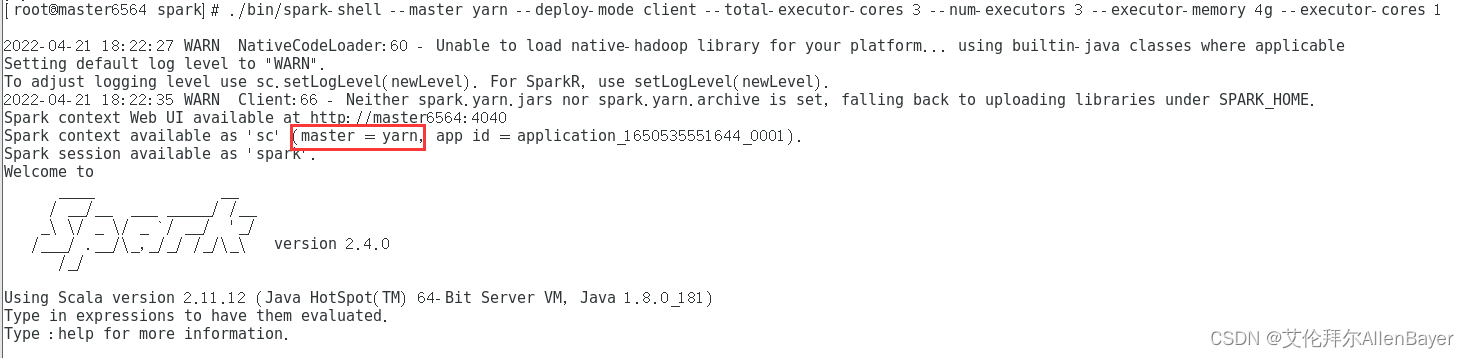

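Run spark-shell:

```
[root@master6564 spark]# ./bin/spark-shell --master yarn --deploy-mode client --total-executor-cores 3 --num-executors 3 --executor-memory 4g --executor-cores 1
```

Note that --total-executor-cores only applies to standalone and Mesos clusters; on YARN the number of executors is set by --num-executors.

The configuration works.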
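With --executor-memory 4g, YARN is actually asked for more than 4 GB per executor, because Spark adds a memory overhead on top of the heap. In Spark 2.4 the default overhead is 10% of the executor memory with a minimum of 384 MB, and the Capacity Scheduler then rounds each request up to a multiple of yarn.scheduler.minimum-allocation-mb (1024 MB by default). The sketch below does that arithmetic; the rounding step assumes the default scheduler settings, so treat the result as an estimate for sizing the VMs. The object name is hypothetical.

```scala
object YarnExecutorMemory {
  // Spark 2.4 defaults when spark.executor.memoryOverhead is not set
  val OverheadFactor = 0.10
  val OverheadMinMiB = 384

  /** Memory Spark requests from YARN for one executor: heap plus overhead, in MiB. */
  def requestMiB(executorMemoryMiB: Int): Int =
    executorMemoryMiB + math.max((OverheadFactor * executorMemoryMiB).toInt, OverheadMinMiB)

  /** Rounds a request up to a multiple of the scheduler's minimum allocation (assumed normalization). */
  def roundUp(requestMiB: Int, minAllocationMiB: Int = 1024): Int =
    ((requestMiB + minAllocationMiB - 1) / minAllocationMiB) * minAllocationMiB

  def main(args: Array[String]): Unit = {
    val executors = 3                            // --num-executors 3
    val perExecutor = roundUp(requestMiB(4096))  // --executor-memory 4g
    println(s"Per executor container: $perExecutor MiB; $executors executors: ${executors * perExecutor} MiB")
  }
}
```

Comparing the per-executor figure with yarn.nodemanager.resource.memory-mb on each worker shows how many executors a NodeManager can host; requests that do not fit stay pending.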

References: