Problem query

select app_name, count(1) as cnt
from (
    select app_name, seq_id
    from tmp.data_20220418
    group by app_name, seq_id
) a
group by app_name
limit 10;

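When I run this query in Spark, the result is the same with or without the LIMIT clause.

When I run it through beeline, however, the version with LIMIT returns only a single, incorrect row; only the version without LIMIT returns the correct result.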

Troubleshooting

First, dump all limit-related parameters. Note that what this prints are the default values:

# hcat avoids the permission check

hcat -e "set -v" | grep limit

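This yields the following defaults, which serve as the baseline to compare against the settings in effect in the beeline session: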

hive.limit.optimize.enable=false

hive.limit.optimize.fetch.max=50000

hive.limit.optimize.limit.file=10

hive.limit.pushdown.memory.usage=0.1

hive.limit.query.max.table.partition=-1

hive.limit.row.max.size=100000

hive.metastore.limit.partition.request=-1

mapreduce.job.running.map.limit=0

mapreduce.job.running.reduce.limit=0

mapreduce.reduce.shuffle.memory.limit.percent=0.25

mapreduce.task.userlog.limit.kb=0

yarn.app.attempt.diagnostics.limit.kc=64

yarn.app.mapreduce.am.container.log.limit.kb=0

yarn.app.mapreduce.shuffle.log.limit.kb=0

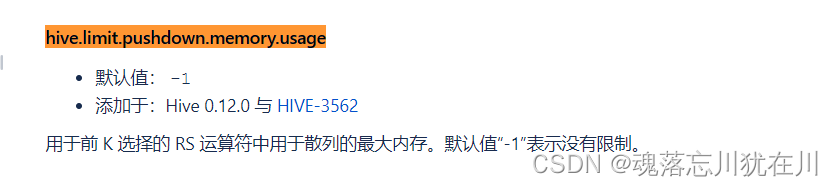
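The comparison step can be scripted. Below is a minimal sketch, assuming both parameter dumps have been saved as plain key=value text files: for example, one from the hcat command above and one from running set -v in the beeline session where the query misbehaves (beeline's --outputformat=tsv2 option avoids table borders around each row). The function name diff_conf and the file names are hypothetical.

```shell
# diff_conf FILE_A FILE_B  (hypothetical helper)
# Prints every key whose value differs between two key=value dumps,
# plus keys that appear in only one of them, sorted by key.
# Note: the FNR == NR trick misbehaves if FILE_A is empty.
diff_conf() {
  awk '
    # Skip lines that are not key=value pairs (headers, blank lines).
    index($0, "=") == 0 { next }
    {
      # Split on the first "=" only; values may themselves contain "=".
      eq = index($0, "=")
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
    }
    # First file: remember each key and its value.
    FNR == NR { a[k] = v; next }
    # Second file: report changed values and keys missing from the first file.
    {
      seen[k] = 1
      if (!(k in a))      print k ": <missing> -> " v
      else if (a[k] != v) print k ": " a[k] " -> " v
    }
    # Keys that appear only in the first file.
    END { for (k in a) if (!(k in seen)) print k ": " a[k] " -> <missing>" }
  ' "$1" "$2" | sort
}
```

Usage: diff_conf hcat_defaults.txt beeline_session.txt. Only the differing keys are printed, so a non-default value such as a changed hive.limit.* setting stands out immediately instead of having to be spotted by eye in a long list.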

Look up the description of each parameter on the official Hive wiki: https://cwiki.apache.org/confluence/display/Hive/Configuration+Properties

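The culprit turned out to be hive.limit.pushdown.memory.usage, which was set to 0.1. This parameter is the fraction of available memory the ReduceSink operator may use to buffer rows for the limit pushdown optimization. Setting it to -1 turns that optimization off, after which the beeline query with LIMIT returned correct results again:

set hive.limit.pushdown.memory.usage=-1;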
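To check whether limit pushdown is actually applied to a query, one option is its EXPLAIN plan. In the Hive versions we are aware of, a Reduce Output Operator that received the pushed-down limit prints a "TopN Hash Memory Usage" line; treat that exact wording as an assumption and verify it against EXPLAIN output from your own Hive version. The helper has_topn_pushdown below is a hypothetical sketch that reads EXPLAIN text on stdin and greps for that line.

```shell
# has_topn_pushdown  (hypothetical helper)
# Reads Hive EXPLAIN output on stdin and reports whether any ReduceSink
# operator carries the TopN annotation added by limit pushdown.
# Assumption: the annotation is printed as "TopN Hash Memory Usage".
has_topn_pushdown() {
  if grep -q 'TopN Hash Memory Usage'; then
    echo "limit pushdown: ON"
  else
    echo "limit pushdown: OFF"
  fi
}
```

Usage, with JDBC_URL as a placeholder for your HiveServer2 connection string: beeline -u "$JDBC_URL" -e "explain select ..." | has_topn_pushdown. Running it before and after the set statement above should show whether the setting changed the plan.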